In this blog article, we are going to show you how you can build your own stock sentiment classifier completely from scratch. This article accompanies a two part video series on YouTube.

Getting the data - stock news from FinViz

Without data, there can be no machine learning model. There are some pre-built datasets for stock news out there, but sometimes these datasets are relatively small and yield only decent machine learning models at best. Often it’s hard to determine where the data is coming from and when it was collected. That’s why we are going to create our own dataset today.

The data we are using is coming from a website called finviz.com. We really like this site because it is incredibly dense with information about the stock market, companies and finances. You can search for individual companies and get current news about the stock and the company in general. What’s great about this is that the news is from multiple sources, such as Bloomberg, Reuters and Yahoo Finance. We get information from multiple sources in one place, great!

Please keep in mind that finviz.com is only one of many options. We are not affiliated with them in any way, and there are probably many other cool sites where you can gather useful information!

To scrape the data, we have prepared a simple Python script. You can find this script as well as all other files needed for this project, in our GitHub repository! Make sure to check out our YouTube video if you would like a more detailed explanation of the Python code used.

With the Python script provided, we can save the data directly to a CSV file. You can also find a finished dataset by us on Kaggle!

Setting up a project in Kern Refinery

Next up, we need to upload the data to the Kern Refinery. Simply create a new project and upload the newly created CSV file. After the project is initialized, we need to wait until the tokenization is completed before we can continue. Depending on your file size, this might take a couple of minutes.

Preparing the data and setting the labels

After the tokenization is completed, we need to preprocess the data and set the labels we want to give it. We need to preprocess the data because it is still in a raw text format, which isn’t usable by machine learning algorithms. During the preprocessing, the text is getting converted into a numeric representation of the text, called embeddings. To do that, we can go to the settings page and click on “+ generate embeddings”. For that, we need a pre-trained model, preferably one that was trained on texts about stocks or finances. To do that we are using the zhayunduo/roberta-base-stocktwits-finetuned model from huggingface.co. But feel free to use other models if you think they might be useful!

Next, we are going to create a labelling task, which can be set right under the Embeddings. In our case, we later want the classifier to distinguish between positive, neutral and negative stock news. So we need to create a multi-class classification labelling task with exactly these three labels.

Doing some manual labelling

Kern Refinery is immensely powerful because it takes away most of the manual labelling. That being said, we still need to do some manual labelling in some cases. Kern Refinery is using a technique called active learning. In a nutshell, active learning means that the tool learns from the manual labels we made and then goes out to find similar, unlabeled data to then automatically apply labels to them. The more you label yourself, the more accurate the automatic labels get. For this project, about ~ 2 % of the data was labelled manually. Of course, this number can be higher or lower depending on the data that you are using.

Using heuristics and weak supervision

Besides active learning, we can also leverage custom heuristics with Python to label parts of the data (or all of it if you are really good)! For example, we are going to write a heuristic that uses regular expressions to capture if it is mentioned that a stock is up or down by any %. With that heuristic, we managed to automatically label quite a lot of data and therefore save a lot of time. Great!

After having labelled some data for the active learner and setting up some heuristics with Python, we can apply the weak supervision, after which all of our data is completely labelled.

Cloning our GitHub repository

Next up, we can move on to clone the GitHub repository we prepared for this use case, as well as the repository we need to build the sentiment classifier itself.

Repository of the sample use case for the UI elements (optional).

Repository for automl-docker to build a machine learning/ sentiment classifier.

As an example, you could clone the automl-docker repository using SSH with the following command:

git clone git@github.com:code-kern-ai/automl-docker.git

Creating a classifier with automl-docker

After you’re done with the cloning, it's time for building! And using automl-docker is really easy! First, make sure you pip install the requirements.txt to install all the dependencies for automl-docker. Next, run automl-docker by typing:

python3 ml/create_model.py

Then, paste the path to your data and select the right columns for the training and automl-docker will do the rest for you. Once the training is done, it will automatically save a machine learning model in the ml folder for you.

You can now use this model and embed it wherever you want. In the next step, we are going to containerize it with docker and then use it inside a neat little user interface, but feel free to explore other options, too!

To containerize the model, type:

bash start_container (on Linux/ MacOS)

start_container_windows.bat (on Windows)

After that, you’re almost set to use the interface!

Activating a simple user interface

To use the interface, you don’t need to install any other external dependencies if you installed requirements.txt from automl-docker. The interface is built with Streamlit, which is an absolutely fantastic library to build simple web apps using only Python.

Switch to the refinery sample project in the command line and type:

app/streamlit run app.py

This will start up the user interface, which you can access by going to localhost:8501 with the browser of your choice.

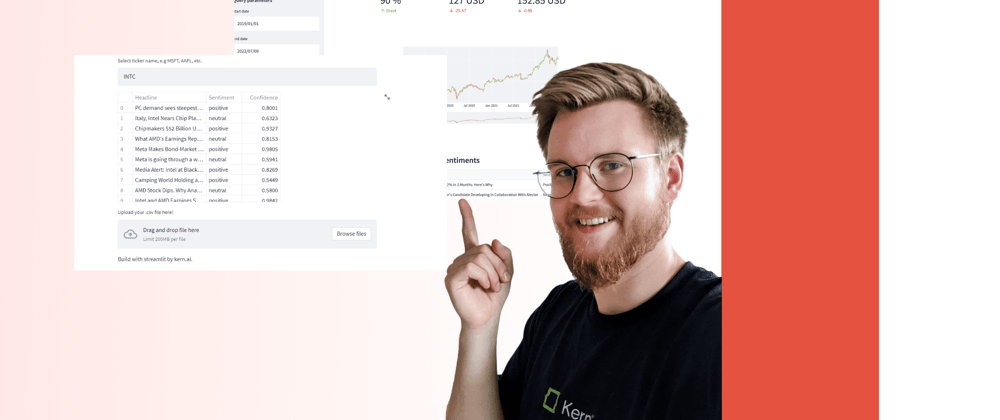

The grand finale - using the stock sentiment classifier

Now we are ready to test out our model. We can either search for a stock in the upper box, which will print out the latest stock news and their corresponding sentiments. Or we could use the lower box and upload them via CSV if we want to.

Have fun with the tool! If you have any questions or comments, please don’t hesitate to reach out to us. If you loved the corresponding video to this article, make sure to like and subscribe.

Disclaimer

This blog article is no investment advice and should only be used for learning purposes. Information about stock, finances and the stock market might be outdated at times. Please always do your own research before investing.

Top comments (0)