We've built our open-source IDE for data-centric NLP with the belief that data scientists and engineers know best what kind of framework they want to use for their model building. Today, we'll show you three new adapters for the SDK.

Let's jump right in.

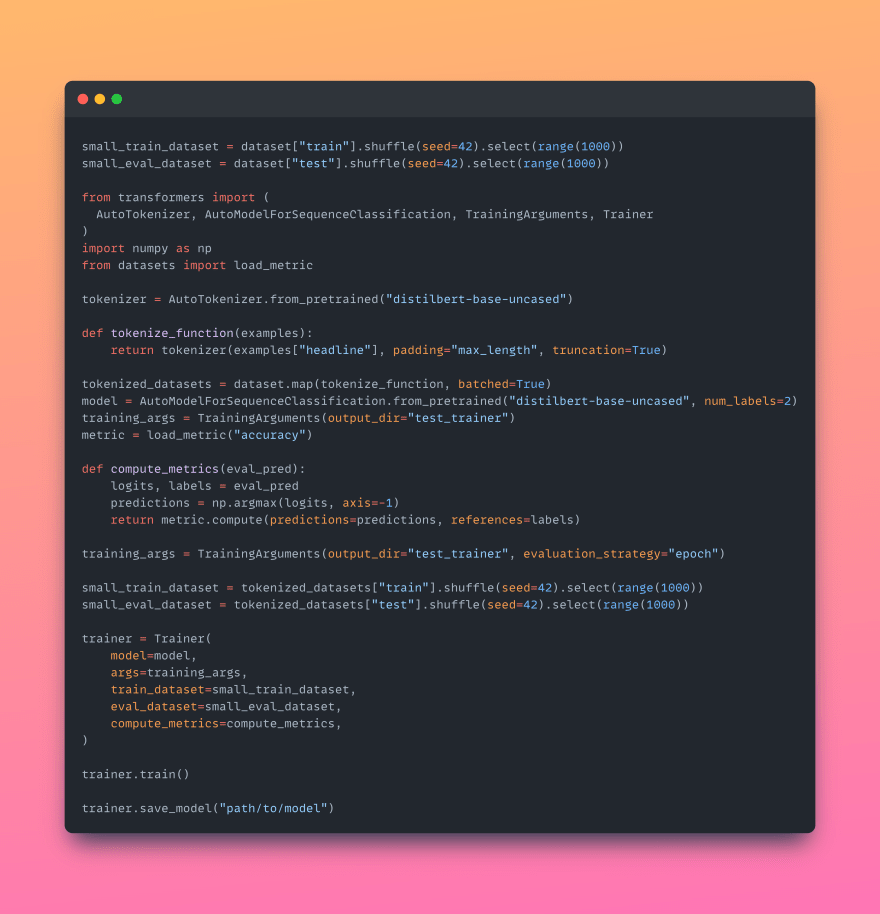

Hugging Face

Transformer models are arguably one of the most interesting breakthroughs in natural language processing. With Hugging Face, you have access to an abundance of pre-trained models. Ultimately, however, you want to finetune them to your task at hand. This is where Hugging Face datasets come into play, and you can generate them with ease using our adapter.

The dataset is a Hugging Face-native object, which you can use to finetune a model on your data.
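The adapter call itself is not reproduced here. As a rough, library-free sketch of the kind of data such a dataset holds, the snippet below turns row-wise records into text and label columns plus a label mapping. The records, the field names `headline` and `label`, and the helper `to_columns` are all made up for illustration and are not part of the SDK.

```python
# Hypothetical stand-in for records exported from a labeling project.
# The field names "headline" and "label" are assumptions for illustration.
records = [
    {"headline": "You won't believe what happened next", "label": "clickbait"},
    {"headline": "Central bank raises interest rates", "label": "regular"},
    {"headline": "10 tricks doctors hate", "label": "clickbait"},
    {"headline": "Parliament passes budget bill", "label": "regular"},
]


def to_columns(records, text_key, label_key):
    """Turn row-wise records into columns plus a label-to-id mapping."""
    # Sort label names so the mapping is deterministic across runs.
    label_names = sorted({r[label_key] for r in records})
    label2id = {name: idx for idx, name in enumerate(label_names)}
    columns = {
        "text": [r[text_key] for r in records],
        "label": [label2id[r[label_key]] for r in records],
    }
    return columns, label2id


columns, label2id = to_columns(records, "headline", "label")
print(label2id)
print(columns["label"])
```

A dictionary of equal-length lists like `columns` is the shape that `datasets.Dataset.from_dict` accepts, so it is a reasonable mental model of what you hand to a finetuning loop, even though the adapter's actual output may be structured differently.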

The Hugging Face documentation has further examples of how to finetune your models.

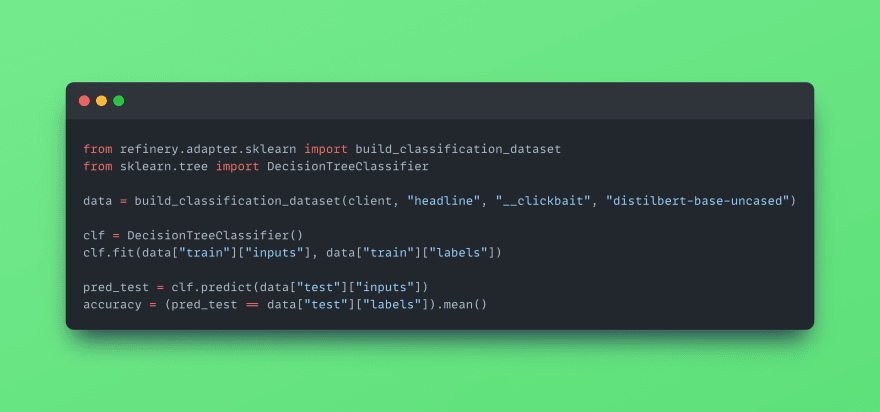

Sklearn

The Swiss Army knife of machine learning. You have most likely already worked with it, and if so, you surely love the wealth of algorithms to choose from. We do too, so we decided to add an integration for Sklearn. You can pull the data and train a model in just a few lines.
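The adapter call itself is not reproduced here. To give a feel for a data object with ready-made splits, here is a minimal sketch in plain Python. It assumes one plausible scheme, training on weakly supervised labels and testing on manually labeled ones; the records, the `source` field, and the helper `build_splits` are hypothetical and not taken from the SDK.

```python
# Hypothetical records: "source" marks whether a label came from weak
# supervision or from manual labeling. All field names are assumptions.
records = [
    {"text": "Win a free phone now", "label": "spam", "source": "weak"},
    {"text": "Meeting moved to 3pm", "label": "ham", "source": "weak"},
    {"text": "Claim your prize today", "label": "spam", "source": "manual"},
    {"text": "Lunch tomorrow?", "label": "ham", "source": "manual"},
    {"text": "Cheap loans, apply now", "label": "spam", "source": "weak"},
]


def build_splits(records):
    """Assumed scheme: train on weak labels, evaluate on manual labels."""
    train = [r for r in records if r["source"] == "weak"]
    test = [r for r in records if r["source"] == "manual"]
    return {
        "train": {
            "texts": [r["text"] for r in train],
            "labels": [r["label"] for r in train],
        },
        "test": {
            "texts": [r["text"] for r in test],
            "labels": [r["label"] for r in test],
        },
    }


data = build_splits(records)
print(len(data["train"]["texts"]), "train /", len(data["test"]["texts"]), "test")
```

Evaluating on the manually labeled subset keeps the reported metrics tied to labels a human has checked. Before fitting a Sklearn estimator, the texts would still need to be turned into features, for example with `TfidfVectorizer` or precomputed embeddings.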

The data object already contains train and test splits derived from the weakly supervised and manually labeled data, so you can fully focus on hyperparameter tuning and model selection. We also highly recommend checking out Truss, an open-source library for quickly serving these models. We'll cover this in a separate article.

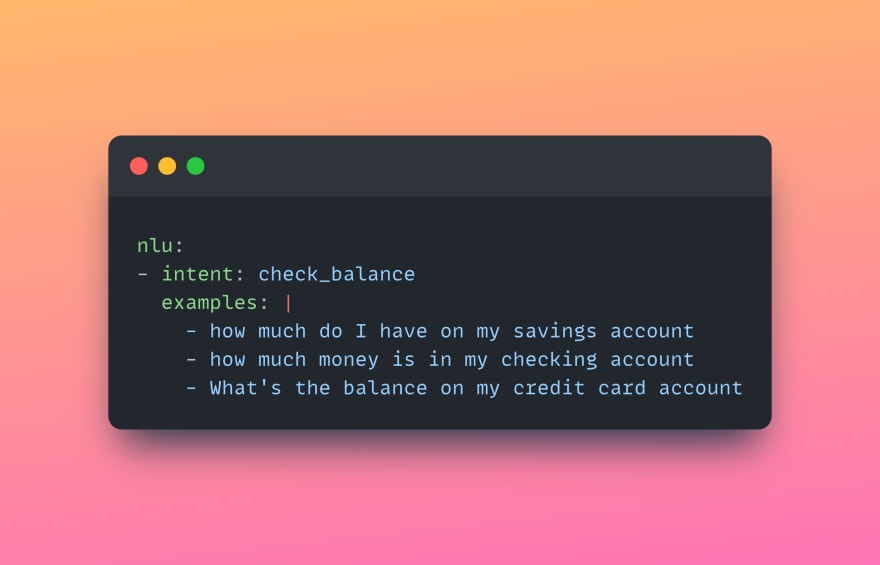

Rasa

If you want to build a chatbot or conversational AI, Rasa is arguably one of the first choices, with its strong framework and community. We love building chatbots with Rasa, and have already published a YouTube series on how to do so. Here, we'll introduce you to the Rasa adapter for our SDK, with which you can build the training data for your chatbot with ease.

The adapter pulls your training data directly into a YAML format that is ready for your chatbots to learn from. This way, you can manage and maintain all your chat data within refinery and have it at hand for your chatbot training.
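To illustrate the target format rather than the adapter itself, the following sketch groups labeled utterances by intent and writes them in the layout Rasa uses for NLU training data. The records, the field names, and the helper `build_nlu_yaml` are hypothetical; the real adapter may produce additional keys.

```python
from collections import defaultdict

# Hypothetical labeled utterances; field names are assumptions.
records = [
    {"text": "hello there", "intent": "greet"},
    {"text": "good morning", "intent": "greet"},
    {"text": "bye for now", "intent": "goodbye"},
]


def build_nlu_yaml(records, version="3.1"):
    """Render utterances grouped by intent as Rasa-style NLU YAML."""
    by_intent = defaultdict(list)
    for record in records:
        by_intent[record["intent"]].append(record["text"])

    lines = [f'version: "{version}"', "nlu:"]
    for intent in sorted(by_intent):
        lines.append(f"- intent: {intent}")
        # Examples are written as a YAML block scalar holding a dash list.
        lines.append("  examples: |")
        for example in by_intent[intent]:
            lines.append(f"    - {example}")
    return "\n".join(lines) + "\n"


nlu_yaml = build_nlu_yaml(records)
print(nlu_yaml)
```

This sketch builds the text by hand to keep it dependency-free, and it assumes each utterance is a single line. For anything beyond a quick experiment, a YAML library is the safer way to emit the file.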

As you can see, we already cover some integrations with open-source NLP frameworks. If you're missing one, please let us know. We're happy to keep adding them, to bridge the gap between building training data and building models.

If you haven't tried out refinery yet, make sure to check out our GitHub. Also, feel free to join our Discord community - we're happy to meet you there!

Top comments (4)

@jhoetter tried Kern AI for my new project and really loved it! Just wanted to ask, what is the preferred way to deal with imbalanced classes in Kern AI for weakly supervised labels? I'm working on binary classification, and the distribution of labels is 30/70.

Hey @mariazentsova

Sorry for the late reply, hope it still helps now. Generally, you should aim to achieve a similar distribution in your weakly supervised labels as in your manual subset. This is not always exactly achievable, but it is something you should roughly aim for. What helps here a ton is adding active learning models, as they natively learn to follow the distribution of your manually labeled records.

Another option is to trim the weakly supervised records so that they fit your desired balance.
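One way to do the trimming is to downsample per label. The snippet below is only a sketch of that idea: the record layout and the helper `trim_to_balance` are hypothetical, and it assumes every target share is greater than zero.

```python
import random
from collections import Counter, defaultdict


def trim_to_balance(records, target_share, seed=42):
    """Downsample records so label shares approximate target_share.

    target_share maps each label to its desired fraction (summing to 1).
    """
    by_label = defaultdict(list)
    for record in records:
        by_label[record["label"]].append(record)

    # Largest total size for which every label still has enough records.
    total = min(
        len(by_label[label]) / share for label, share in target_share.items()
    )

    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    trimmed = []
    for label, share in target_share.items():
        keep = min(round(total * share), len(by_label[label]))
        trimmed.extend(rng.sample(by_label[label], keep))
    return trimmed


# Hypothetical weakly supervised records with a skewed distribution.
weak_records = [{"text": f"neg {i}", "label": "neg"} for i in range(80)]
weak_records += [{"text": f"pos {i}", "label": "pos"} for i in range(30)]

balanced = trim_to_balance(weak_records, {"neg": 0.7, "pos": 0.3})
print(Counter(r["label"] for r in balanced))
```

The target shares would typically come from the label distribution of your manually labeled subset.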

This way you can ensure that the label distribution works nicely :)

Let me know if I can be of any further help. Also feel 100% free to join us on Discord, we respond there within minutes :)

Thanks a lot @jhoetter, this is super helpful! Love the tool :)

Thank you so much for the kind words. It means the world to the team and me :)