Introduction

Active learning is a simple but effective idea: you train a model while you label the data for it. Records that the model predicts with high confidence can be labeled automatically, and records with low prediction confidence are picked for manual labeling (this works particularly well for imbalanced datasets).
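The routing idea can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function name `route_by_confidence`, the threshold of 0.9 and the example probabilities are made up for this post and are not taken from any particular library.

```python
from typing import Dict, List, Tuple


def route_by_confidence(
    probabilities: Dict[str, Dict[str, float]],
    threshold: float = 0.9,  # assumed cut-off, tune it for your data
) -> Tuple[Dict[str, str], List[str]]:
    """Split records into auto-labeled ones and a manual labeling queue."""
    auto_labels: Dict[str, str] = {}
    scored_queue: List[Tuple[float, str]] = []
    for record_id, class_probs in probabilities.items():
        # The most likely class and its probability act as label and confidence.
        label, confidence = max(class_probs.items(), key=lambda item: item[1])
        if confidence >= threshold:
            auto_labels[record_id] = label
        else:
            scored_queue.append((confidence, record_id))
    # Least confident records come first in the manual queue.
    manual_queue = [record_id for _, record_id in sorted(scored_queue)]
    return auto_labels, manual_queue


# Hypothetical model output: class probabilities per record.
predictions = {
    "r1": {"spam": 0.97, "ham": 0.03},
    "r2": {"spam": 0.55, "ham": 0.45},
    "r3": {"spam": 0.08, "ham": 0.92},
    "r4": {"spam": 0.70, "ham": 0.30},
}
auto_labels, manual_queue = route_by_confidence(predictions, threshold=0.9)
print(auto_labels)   # records the model is confident about
print(manual_queue)  # records a human should look at, least confident first
```

Sorting the manual queue by ascending confidence is one common choice (least-confidence sampling); other selection strategies fit into the same structure.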

In this post, we look at how you can get much more out of active learning by combining it with large-scale, pre-trained language models in natural language processing. By the end of this post, you will know:

How embeddings can help you to boost the performance of simple machine learning models

How you can integrate active transfer learning into your weak supervision process

Compute embeddings once, train often

Transformer models are, without a doubt, among the most significant breakthroughs in machine learning of recent years. Because they capture the context of sentences, they can be used for many tasks, such as similarity scoring, classification, and other natural language understanding problems.

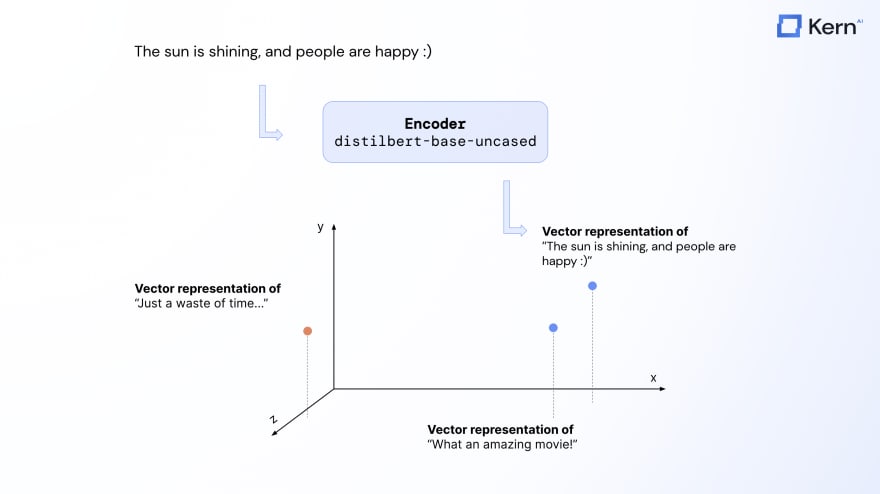

For active transfer learning, they become relevant as encoders. By cutting off the classification head of these networks, transformers can be used to compute vector representations of texts that contain precise semantic information. In other words, encoders can extract structured (but latent, i.e., unnamed) features from unstructured texts.
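To make "vector representation of a text" more concrete, here is a minimal sketch of one common way to turn the per-token vectors of an encoder into a single text vector: masked mean pooling. The token vectors below are made-up numbers; in practice they would come from the last hidden layer of a transformer. Whether a given library pools exactly this way is an assumption, not something this post specifies.

```python
from typing import List


def mean_pool(
    token_vectors: List[List[float]],
    attention_mask: List[int],
) -> List[float]:
    """Average the vectors of all real tokens, ignoring padding positions."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("at least one token must be unmasked")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Made-up 2-dimensional token vectors; the third position is padding.
token_vectors = [[1.0, 0.0], [3.0, 2.0], [9.0, 9.0]]
attention_mask = [1, 1, 0]
text_embedding = mean_pool(token_vectors, attention_mask)
print(text_embedding)
```

The resulting list is the "latent feature" vector that a simple downstream classifier can consume.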

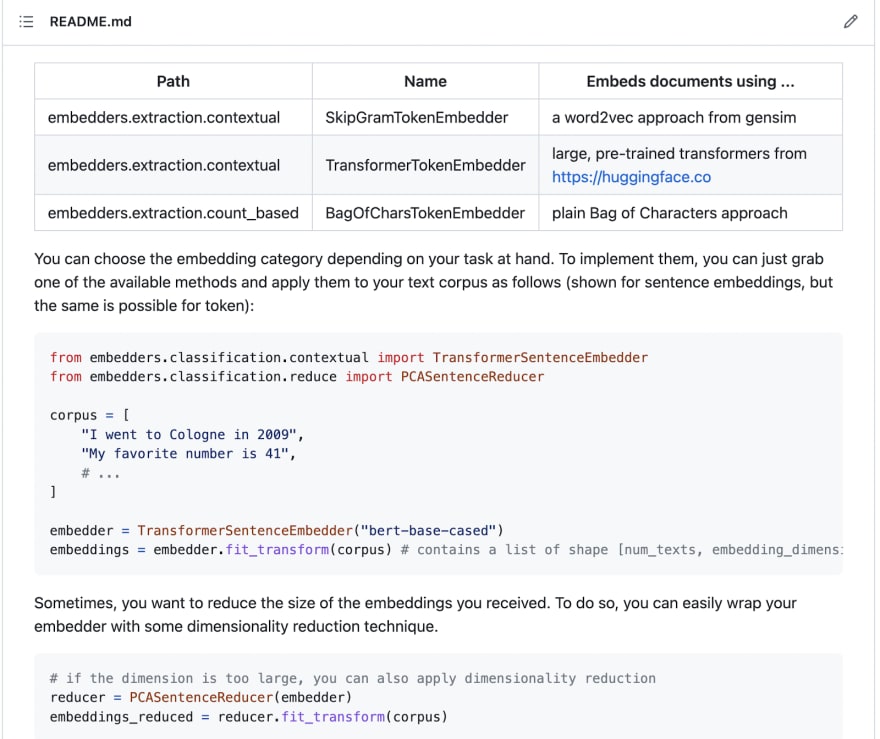

Check out our embedders library if you want to build such embeddings using a high-level, scikit-learn-like API.

In another blog post on neural search, we will also show how these embeddings can significantly improve the selection of records during manual labeling.

Why is this so beneficial for active learning? There are two reasons:

You only need to compute embeddings once. Creating the embeddings can take some time, but afterwards they add almost nothing to the training time of your active learning models.

They considerably boost the performance of your active learning models. You can expect your models to learn the first significant patterns from 50 records per class, which is very little data for natural language processing.
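The "compute once, train often" pattern can be sketched as follows. Everything here is a stand-in chosen for illustration: `toy_embed` is a hypothetical keyword counter that replaces a real transformer encoder, `EmbeddingCache` is a made-up helper, and the nearest-centroid classifier is just one example of a simple model that is cheap to retrain.

```python
from typing import Callable, Dict, List


class EmbeddingCache:
    """Calls the (expensive) encoder at most once per distinct text."""

    def __init__(self, embed_fn: Callable[[str], List[float]]) -> None:
        self._embed_fn = embed_fn
        self._vectors: Dict[str, List[float]] = {}
        self.encoder_calls = 0

    def get(self, text: str) -> List[float]:
        if text not in self._vectors:
            self._vectors[text] = self._embed_fn(text)
            self.encoder_calls += 1
        return self._vectors[text]


def toy_embed(text: str) -> List[float]:
    # Hypothetical stand-in for a transformer encoder: two keyword counts.
    lowered = text.lower()
    return [float(lowered.count("great")), float(lowered.count("bad"))]


def fit_centroids(
    vectors: List[List[float]], labels: List[str]
) -> Dict[str, List[float]]:
    """Train a nearest-centroid classifier: one mean vector per class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for vec, label in zip(vectors, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: [value / counts[label] for value in acc]
        for label, acc in sums.items()
    }


def predict(centroids: Dict[str, List[float]], vec: List[float]) -> str:
    def sq_dist(a: List[float], b: List[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: sq_dist(centroids[label], vec))


texts = ["great product", "bad service", "really great", "bad, just bad"]
labels = ["positive", "negative", "positive", "negative"]
cache = EmbeddingCache(toy_embed)

# Simulate three labeling rounds: the model is retrained each time,
# but every text is only embedded on its first use.
for n_labeled in (2, 3, 4):
    vectors = [cache.get(text) for text in texts[:n_labeled]]
    centroids = fit_centroids(vectors, labels[:n_labeled])

calls_after_training = cache.encoder_calls
prediction = predict(centroids, cache.get("great value"))
print(calls_after_training, prediction)
```

Three training rounds touch the labeled texts nine times in total, yet the encoder runs only once per distinct text. With a real transformer as the encoder, that is where the time savings come from.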

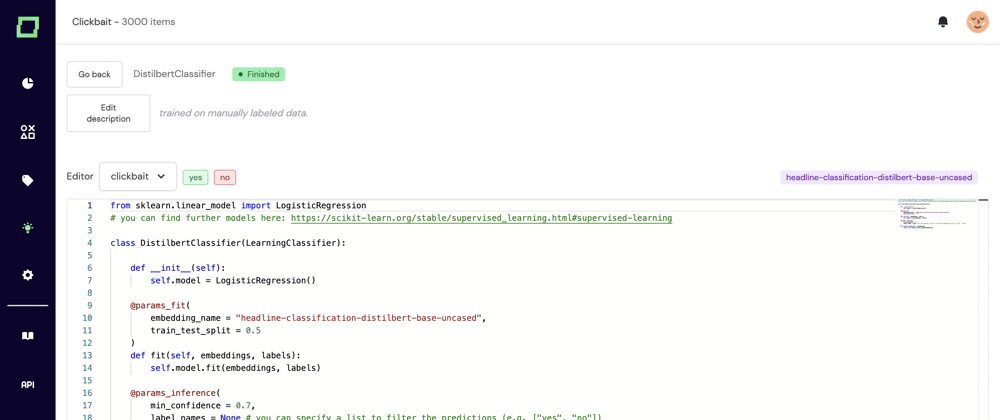

In our open-source software Kern, we split the process into two steps, as shown in the screenshots below. First, you can choose one of many embedding techniques for your data. We download the pre-trained models directly from the Hugging Face Hub. As we use spaCy for tokenization, you can also use those transformers out-of-the-box to compute token-level embeddings (relevant for extraction tasks such as Named Entity Recognition).

Afterward, we provide a scikit-learn-like interface for your models. You can fit your models and predict with them, and you can intercept and customize the training process to suit your labeling requirements.

Active learning as continuous heuristics

As you might already know, active learning does not have to be standalone. It especially shines when combined with weak supervision, a technique that integrates several heuristics (like active transfer learning modules) to compute denoised, automated labels.

Active learning generally provides continuous labels, because every prediction comes with a confidence value. Combined with regular labeling functions and modules such as zero-shot classification (both covered in separate posts), this lets you create well-defined confidence scores to estimate your label quality. Because of that, an active learning module is almost always worth including in weak supervision.
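As a rough illustration of how several heuristics could be merged into one label with a confidence score, here is a simplified confidence-weighted vote. It is only a sketch under assumptions: real weak supervision systems typically use more elaborate denoising, and the function `combine_votes` and the example votes are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# A heuristic either votes (label, confidence) or abstains with None.
Vote = Optional[Tuple[str, float]]


def combine_votes(votes: List[Vote]) -> Optional[Tuple[str, float]]:
    """Merge heuristic votes into one label plus a normalized confidence."""
    totals: Dict[str, float] = defaultdict(float)
    for vote in votes:
        if vote is None:  # abstaining heuristics do not count
            continue
        label, confidence = vote
        totals[label] += confidence
    if not totals:
        return None  # nobody voted, the record stays unlabeled
    winner = max(totals, key=lambda label: totals[label])
    return winner, totals[winner] / sum(totals.values())


votes: List[Vote] = [
    ("spam", 1.0),  # labeling function: hard vote
    ("spam", 0.5),  # active learner: continuous confidence
    ("ham", 0.5),   # zero-shot module disagrees
    None,           # another heuristic abstains
]
result = combine_votes(votes)
print(result)
```

The active learner contributes like any other heuristic here, except that its vote carries a graded confidence instead of a fixed weight.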

We’re going open-source, try it out yourself

Ultimately, it is best to just play around with some data yourself, right? We will soon launch the system we have been building for more than a year, so feel free to install it locally and play with it. It comes with a rich set of features, such as integrated transformer models, neural search, and flexible labeling tasks.

Subscribe to our newsletter here to stay up to date with the release, so you don’t miss out on the chance to win a GeForce RTX 3090 Ti for our launch! :-)

Check out the YouTube tutorial here.
