Let's say you want a shuffle mode on your music service or Netflix service. You'll have to combine randomness with weight, e.g. popularity, relevance, etc. From here on, I will use the term -weighted- to represent a combination of inputs like popular, relevancy, new, etc

Approaches

There are multiple approaches to this that will yield mildly different results. We will only touch on a couple ideas now, but may follow-up with more in the future.

📙 Pool of Popularity

One approach to retrieving randomly weighted data is to limit the available data first and then choosing a random item from the list.

Example: Take the top 500 chart topping songs in a decade and cycle through them.

This approach is good if you want to always exclude less popular songs, but a pitfall is that you're essentially limiting yourself to only 500 songs out of the box; if you've ever used Pandora, you'll know how repetitive this can get.

📒 A Weighted Array

This approach is similar to our final approach, but less efficient. I wanted to discuss it first because it's likely a technique people would think of and implement poorly.

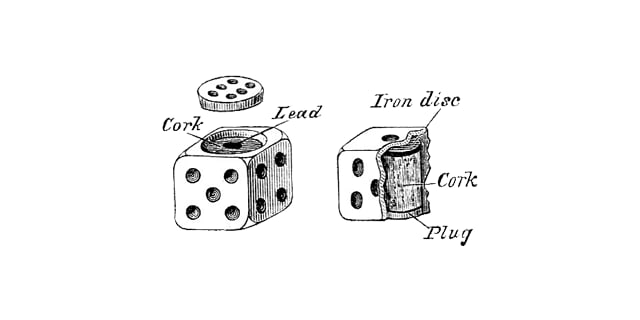

Let's say you have numbers 1-6 and you want 2 and 4 to show up more often than the rest. In a normally distributed set, you'd have an array like:

[1, 2, 3, 4, 5, 6]

And you'd get as random an entry as your random number generator can make for you. However, a simple way of adding weight here is to increase the amount of times a number appears, like:

[1, 2, 2, 3, 4, 4, 5, 6]

If you pick a random number from this set, it's more likely to be a 2 or a 4, but it could still be all the rest. Unlike the Pool of Popularity approach, this will still allow unpopular items to be chosen at a lesser likelihood.

In order to determine fluctuating weights, you could add more numbers:

[1, 2, 2, 2, 2, 2, 2, 2, 3, 4, 4, 4, 5, 6]

Just at a glance, which item do you think is most likely to show up here?

This is an extremely simple way of adding weights, but it's not efficient at all. It's good for dice rolls, but not much more.

📗 Subtracting Popularity

This is my preferred approach over the one above. What we're going to do here is subtract numbers from each other in order to get a likely popular item. There are variations to this approach you can explore, so don't think this implementation is the end-all-be-all.

Let's first start by describing a simple set of data; we'll use movies from 2019. I'll assign them an arbitrary weight (0-1) that we pretend is made up of user reviews, relevance to user, etc.

0. [0.91] Parasite

1. [0.89] Avengers: Endgame

2. [0.85] Joker

3. [0.76] Once Upon a Time... In Hollywood

4. [0.74] Marriage Story

5. [0.71] The Irishman

6. [0.61] Midsommar

7. [0.57] Ad Astra

8. [0.49] Yesterday

9. [0.25] Cats

Example: https://jsfiddle.net/hfnqk0t1/

As you can see, we have a selection of mostly good movies (0-5), then a selection of lesser movies. You'll also notice that our weights can be any number, such as 0.91481 which makes it complicated to use the dice approach above where we add more items to an array.

This example just shows 10 movies, but we could be dealing with hundreds of thousands over the years.

The purpose of this approach is to find a movie that is likely good, but not to completely exclude others that may be less popular. Ever heard of a cult classic? Fight Club, The Thing, and Blade Runner all failed at the box office but went on to become classics.

First, we'll want to sum all of our weights into a number.

// Realistically, you'd iterate or use a SQL SUM(...) function

const sum: number = 0.91 + 0.89 + 0.85 + 0.76 + 0.74 + 0.71 + 0.61 + 0.57 + 0.49 + 0.25;

// 6.78

Second, we'll want a random number between 0 - the sum (6.78).

const sum: number = 6.78; // from above

const target: number = Math.random() * sum;

// 4.76821

Lastly, we iterate through our random dataset subtracting numbers from that target variable. When we go below zero, that's the item we take that is more likely to be popular.

Before we implement this, let's talk about it.

// Implemented below the explanation

Why does this technique work?

When we sum up the numbers to reach 6.78, we're creating an upper bound for our random number. It can't possibly be 6.80 because we just don't have that many movies. If we were to use a lower number like 6.00, that means we'd be leaving some movies out of consideration. By summing everything up, it takes all of our possibilities into consideration.

We take a random number within those bounds as an arbitrary target. This will determine how many iterations we need to go through to find our movie.

Then we iterate through our movies and subtract the weight from our target until we reach zero. This works because a higher weight is more likely to get you toward zero, but a lesser weight still could push you over the line.

For instance, if your target is at 0.75, a popular movie has a really good chance at pushing you across the line: 0.75 - 0.91 = -0.16. But a lesser movie, or multiple lesser movies, still wouldn't work:

0.75 - 0.25 = 0.50 // still above 0.0

0.50 - 0.19 = 0.31 // still above 0.0

0.31 - 0.29 = 0.02 // still above 0.0

0.02 - 0.15 = -0.13 // finally

You can see here how it took 4 less popular movies to inch over that zero line, but 🎊 it was a 0.15 that ultimately did the job proving that less popular movies CAN be chosen, albeit less often.

for (let movie of movies) {

if ((target -= movie.weight) < 0) {

return movie;

}

}

Here's another example that uses a more evenly distributed set of weights so you can see how the results come in more clearly.

But as you can see, every movie has an opportunity to be selected. The more popular ones are chosen more often, but even Cats can be shown from time to time.

If you run that example over and over, you'll see the numbers change each execution but they'll be approximately similar.

Complete Example

const movies = [

{ "selected": 0, "title": "Parasite", "weight": 1.0 },

{ "selected": 0, "title": "Avengers: Endgame", "weight": 0.9 },

{ "selected": 0, "title": "Joker ", "weight": 0.8 },

{ "selected": 0, "title": "Once Upon a Time... In Hollywood", "weight": 0.7 },

{ "selected": 0, "title": "Marriage Story", "weight": 0.6 },

{ "selected": 0, "title": "The Irishman", "weight": 0.5 },

{ "selected": 0, "title": "Midsommar", "weight": 0.4 },

{ "selected": 0, "title": "Ad Astra", "weight": 0.3 },

{ "selected": 0, "title": "Yesterday", "weight": 0.2 },

{ "selected": 0, "title": "Cats", "weight": 0.1 },

];

/**

* Get random movie from our list

*

* @param Movie[] movies

* @return Movie

*/

function getRandomMovie(movies) {

const sum = movies.reduce((accumulator, movie) =>

(isNaN(accumulator) ? movie.weight : accumulator) + movie.weight);

let target = Math.random() * sum;

for (let movie of movies) {

if ((target -= movie.weight) < 0) {

return movie;

}

}

// Unreachable

return movies[0];

}

// Test iterations

for (let i = 0, l = 500; i < l; i++) {

const movie = getRandomMovie(movies);

// Increment how many times this movie was selected for demonstrations

movie.selected ++;

}

// Log our movie array to see how many times each was picked

console.log(movies);

😎 How could it better / scalable?

We completely sum up all of the weights to determine an upper bound to our randomization factor, but if you have 10 million rows, that might be an unnecessary cost. It's possible you could choose an arbitrary clamped weight and then apply this method to an offset of rows.

For instance, if we had 1000 movies we could sum up the weights of 100 of them. Maybe you randomly choose a number between 0 - (1000 - 100), so you end up with 762. Query for 100 rows at that point:

SELECT *

FROM `movies`

LIMIT 100

OFFSET 762

I should note that this technique will put you more at the mercy of your data. If rows 762-862 are all bad movies, then you will be picking from a bad crop.

One might think a way around this is to randomize the dataset first; and you'd be right, but that is not efficient for large datasets.

A better approach would be to take random numbers and checking if your primary key is IN the dataset. People familiar with Laravel may recognize this style from their Eager Loading implementation.

const howManyRows = 10000000;

const sizeOfSet = 10;

let numbers = [];

// Generate random numbers from max set

// NOTE: This isn't dealing with potential duplicates

// but that may be superfluous for such scale.

for (let i = 0, l = sizeOfSet; i < l; i++) {

numbers.push(Math.floor(Math.random() * howManyRows));

}

// Log

console.log(numbers);

// 0: 8316350

// 1: 9670724

// 2: 6592105

// 3: 2823263

// 4: 4172139

// 5: 6591340

// 6: 5969071

// 7: 8285343

// 8: 3639895

// 9: 5067900

Which could then become a SQL query like:

SELECT *

FROM `movies`

WHERE `id` IN (8316350, 9670724, 6592105, ...)

Now you have an efficiently-fetched randomized segment of an extremely large dataset that you can apply our weighted randomization technique to.

Final Note: The above technique assumes sequential numerical IDs and likely wouldn't work on something like Mongo's ObjectId. There are probably additional solutions to this, but I'll write about them in another article.

Feedback

- What did you think?

- What's your favorite technique?

- Did you spot any errors in my code?

- How could these be better?

- Did I miss something in my write-up?

Til then, enjoy your weighted randomization.

Oldest comments (0)