As compared to the Intel-based 13" MacBook Pro.

A few weeks ago, Apple released its first custom-designed silicon chip for the Mac, the M1. Several benchmarks have already shown impressive performance relative to its Intel-based predecessors, but we were interested in putting it through its paces on a machine learning (and, specifically, a computer vision) workload.

While Apple has announced support for TensorFlow training on the M1, the toolchain isn't quite ready yet. With some effort, we were able to get Jupyter notebooks running on Apple Silicon, for example, but the pre-release version of TensorFlow for Mac wasn't ready for prime time. Notably, SciPy, which TensorFlow's Object Detection API requires, is not yet compatible with the M1.
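If you try this yourself, an early stumbling block is simply knowing whether your Python interpreter runs natively on the M1 (arm64) or as an x86_64 binary under Rosetta 2, since pip picks package builds based on the interpreter's platform. The sketch below is a hypothetical helper, not part of any Apple or TensorFlow tooling: `describe_python_arch` and `running_under_rosetta` are illustrative names. It relies only on Python's standard `platform.machine()` and macOS's `sysctl.proc_translated` key, which reports `1` for a Rosetta-translated process.

```python
import platform
import subprocess


def describe_python_arch(machine: str, translated: bool) -> str:
    """Classify an interpreter from platform.machine() and a Rosetta flag (hypothetical helper)."""
    if machine == "arm64":
        return "native Apple Silicon (arm64)"
    if machine == "x86_64" and translated:
        return "x86_64 under Rosetta 2 translation"
    return f"{machine} (not Apple Silicon)"


def running_under_rosetta() -> bool:
    """Return True if macOS reports this process as Rosetta-translated."""
    if platform.system() != "Darwin":
        return False  # Rosetta 2 only exists on macOS
    try:
        out = subprocess.run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True, text=True, check=True,
        ).stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        # The key may be missing on Intel Macs or older macOS releases.
        return False
    return out == "1"


if __name__ == "__main__":
    print(describe_python_arch(platform.machine(), running_under_rosetta()))
```

An x86_64 result on an M1 machine means you are running an Intel build of Python through Rosetta, so any packages you install will be Intel builds too.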

Instead, we used Apple's Create ML to run our benchmarks. This should give a fair picture of the relative performance you can expect when training machine learning models once the compatibility quirks are sorted out in the coming months. (Presumably, Apple has already done the work of optimizing Create ML for both its Intel and M1 devices.)

Unfortunately, since Create ML does not run on NVIDIA GPUs, it still doesn't give us a head-to-head comparison between the M1 and, for example, an RTX 3090 or 2080 Ti. (Apple claims 11 trillion operations per second on the M1's Neural Engine; NVIDIA claims 14.2 TOPS for the 2080 Ti.)

To help you decide whether to buy the new M1 laptop (which just got a "surprise price cut"), we set out to estimate how well it performs against Apple's previous-generation machines with Intel CPUs and AMD Radeon GPUs. Is it worth it?

TL;DR: If you're looking to tackle machine learning and computer vision problems on your Mac, the Apple M1 may be worth the upgrade once the software you need supports it, but it's not yet ready to replace a discrete GPU.

The Test Machines

We used three recent MacBook Pro machines to do our comparison:

- MacBook Pro 13-inch (November 2020) M1 integrated system on a chip with 8GB memory. $1,499

- MacBook Pro 13-inch (May 2020) 1.4 GHz Quad-Core Intel Core i5 with 16GB memory and Intel Iris Plus Graphics 645 (1536MB graphics memory). $1,699

- MacBook Pro 16-inch (2019) 2.4 GHz 8-Core Intel Core i9 with 64GB memory and AMD Radeon Pro 5500M (8GB graphics memory). $3,899

Test Methodology

To compare performance, we used Create ML to tackle a no-code object detection problem. (Want to recreate this? We've written out the steps here.)

- We used the 121,444-image Microsoft COCO object detection dataset.

- We used Roboflow to export data in Create ML format.

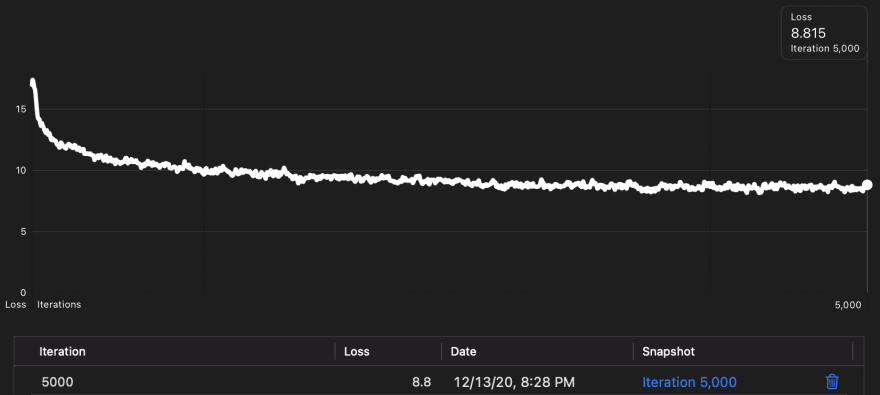

- We used Create ML to train a YOLOv2 object detection model for 5,000 iterations with a batch size of 32.

- We monitored training on all three computers for the full 5,000 iterations.
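The Roboflow export step converts COCO's annotations into the JSON layout Create ML reads. The following is not Roboflow's implementation. It is a minimal sketch of the same conversion, assuming COCO's standard `[x_min, y_min, width, height]` pixel boxes and Create ML's object detection format, in which each image entry lists `annotations` with a `label` and `coordinates` whose `x` and `y` are the box center. The function names are hypothetical.

```python
from __future__ import annotations

import json
from typing import Any


def coco_bbox_to_createml(bbox: list[float]) -> dict[str, float]:
    """Convert a COCO [x_min, y_min, width, height] box to Create ML coordinates.

    Create ML expects the box center (x, y) plus width and height, in pixels.
    """
    x_min, y_min, width, height = bbox
    return {
        "x": x_min + width / 2,
        "y": y_min + height / 2,
        "width": width,
        "height": height,
    }


def coco_to_createml(coco: dict[str, Any]) -> list[dict[str, Any]]:
    """Group COCO annotations by image and emit Create ML's list-of-images JSON."""
    categories = {c["id"]: c["name"] for c in coco["categories"]}
    # Images without boxes keep an empty annotation list.
    by_image: dict[int, list[dict[str, Any]]] = {img["id"]: [] for img in coco["images"]}
    for ann in coco["annotations"]:
        by_image[ann["image_id"]].append({
            "label": categories[ann["category_id"]],
            "coordinates": coco_bbox_to_createml(ann["bbox"]),
        })
    return [
        {"image": img["file_name"], "annotations": by_image[img["id"]]}
        for img in coco["images"]
    ]


# Tiny hand-made sample in COCO's structure (not real dataset values).
sample = {
    "images": [{"id": 1, "file_name": "000000000001.jpg"}],
    "categories": [{"id": 18, "name": "dog"}],
    "annotations": [{"image_id": 1, "category_id": 18, "bbox": [10.0, 20.0, 100.0, 50.0]}],
}
print(json.dumps(coco_to_createml(sample), indent=2))
```

The only real transformation is moving the box origin from the top-left corner to the center. Everything else is regrouping annotations per image.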

Based on its performance, the model probably isn't ready to deploy into the wild yet. (If you want to see how to deploy your model, watch this video.) However, 5,000 iterations is enough for us to get a good sense of how the three systems perform against each other.
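To put the run length in perspective, assume each of the 5,000 training steps is one iteration over a single batch of 32 images, which is how Create ML reports object detector progress. The run then sees 160,000 images, only about 1.3 passes over the 121,444-image dataset. That helps explain why the model is not deployment-ready. `epochs_covered` is a hypothetical helper for this arithmetic.

```python
def epochs_covered(iterations: int, batch_size: int, dataset_size: int) -> float:
    """Full passes over the dataset implied by a run (assumes one batch per iteration)."""
    return iterations * batch_size / dataset_size


images_seen = 5_000 * 32  # 160,000 images under the one-batch-per-iteration assumption
print(f"{images_seen:,} images, {epochs_covered(5_000, 32, 121_444):.2f} epochs")
```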

On this object detection task in Create ML, the 13" M1 MacBook Pro performed significantly better than the 13" Intel Core i5 but underperformed the 16" Core i9 with its discrete Radeon Pro 5500M GPU.

- The Intel Core i5 took 542 minutes to run through 5,000 iterations (CPU training).

- The Apple M1 took 149 minutes to do the same (8% GPU utilization).

- The Intel Core i9 with Radeon Pro took 70 minutes (100% GPU utilization).
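The wall-clock times above can be turned into throughput and relative speed. This sketch uses only the reported minutes, the 5,000 iterations, and the batch size of 32. It assumes every iteration processes exactly one batch of 32 images, so the images-per-second figures are estimates rather than measurements.

```python
BATCH_SIZE = 32
ITERATIONS = 5_000

# Wall-clock training times reported above, in minutes.
minutes = {
    "Intel Core i5 (CPU)": 542,
    "Apple M1": 149,
    "Intel Core i9 + Radeon Pro 5500M": 70,
}

images = BATCH_SIZE * ITERATIONS  # total images processed, assuming one batch per iteration
for machine, m in minutes.items():
    throughput = images / (m * 60)  # images per second
    print(f"{machine}: {throughput:.1f} images/s")

speedup_m1_vs_i5 = minutes["Intel Core i5 (CPU)"] / minutes["Apple M1"]
slowdown_m1_vs_i9 = minutes["Apple M1"] / minutes["Intel Core i9 + Radeon Pro 5500M"]
print(f"M1 vs i5: {speedup_m1_vs_i5:.2f}x faster")
print(f"M1 vs i9: {slowdown_m1_vs_i9:.2f}x slower")
```

Under that assumption, the three machines process roughly 4.9, 17.9 and 38.1 images per second. The M1 is about 3.64 times as fast as the i5 and takes about 2.13 times as long as the i9.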

Notably, Create ML was unable to use the integrated Intel Iris graphics to accelerate training, but it was able to partially utilize the M1's integrated GPU. This is quite impressive for a system on a chip.

Unfortunately, while the AMD Radeon reached 100% utilization on the 16" MacBook Pro, the M1's GPU never rose above 10% utilization. Despite being faster on paper, the M1 still needs software improvements to capitalize on its hardware. Apple is actively working on this with its TensorFlow port and its ML Compute framework.

The Verdict: Based on this benchmark, the Apple M1 is 3.64 times as fast as the Intel Core i5, but it is not fully utilizing its GPU and therefore underperforms the i9 with discrete graphics.

It looks like Apple still has significant software optimizations to make in Create ML to take full advantage of the M1's raw power. Create ML should eventually be able to fully utilize the M1's GPU during training, just as it did the Radeon in the i9 machine, at which point the M1 would likely outperform it handily.

There are plenty of things to consider when buying a new laptop; fitting an object detection model with Create ML is only one. But when pitting these laptops against each other on this task, the Apple M1 appears to be a promising architecture that should pull ahead as the software is updated to take advantage of it. For now, though, I plan to hold off while things are ironed out.

Go through the whole process of training and deploying a Create ML model to your iPhone on our YouTube channel.
