Sometimes it’s easier to learn from ‘what not to do’ than from ‘what to do’. Anti-patterns represent common bad practices, code smells, and pitfalls, in this case when creating automated tests. You should learn them so that you can spot them.

Creating patterns to describe something creates nomenclature. Once you have a name for something, it’s easier to recognize when you see it again.

The Productive Programmer, Neal Ford

📝 The list applies regardless of the language/runtime.

No clear structure within the test

Not following a predictable structure in each test makes tests hard to maintain. Tests are living code documentation, so everyone should be able to quickly read and change them.

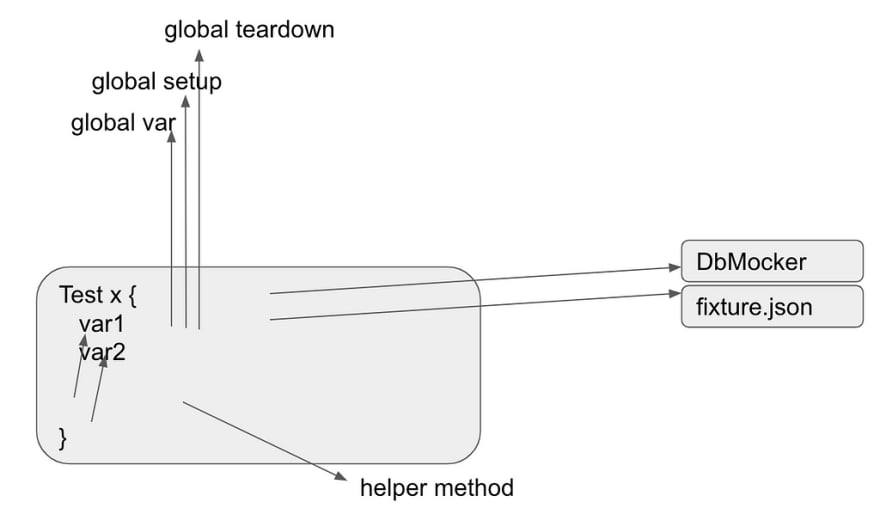

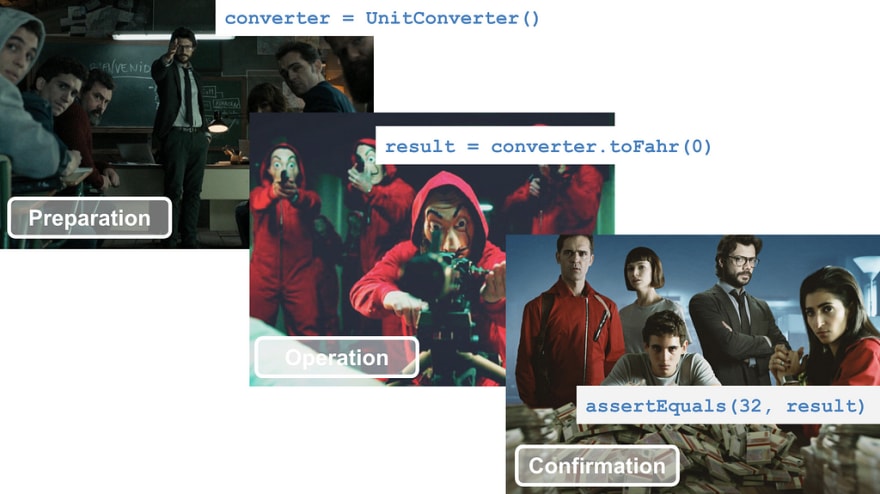

Arrange, Act, Assert is a pattern for structuring code in test methods. Basically, you organize your code in phases for the sake of clarity. It can be mapped to Setup, Exercise, Verify, Teardown in the four-phase test pattern and to Given, When, Then in BDD:

- Arrange/Given/Setup: initialization of the SUT (system under test, whatever you’re testing) and other dependencies like mocks and fakes;

- Act/When/Exercise: the call to the method being tested, ideally a one-liner;

- Assert/Then/Verify: assertions on the output or verifications of calls made;

- (Optional) Teardown: if there are resources to be freed.

As a consequence, I usually have three phases visually separated by empty lines. If you reply: “But I have lots of code in my test! I need more than two empty lines!”, I’d say that’s likely a design smell: you might be testing a god object that you need to split, you might need to create a “local DSL” (high-level helper methods), or you could extract the common setup and teardown elsewhere.
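Here is a minimal sketch of this structure in Python’s built-in `unittest`. It assumes a hypothetical `ShoppingCart` class as the SUT, which is not from the original text. The phase comments are optional, because the empty lines alone already separate Arrange, Act, and Assert.

```python
import unittest


class ShoppingCart:
    """Hypothetical system under test (SUT), kept tiny for illustration."""

    def __init__(self) -> None:
        self._items: list[tuple[str, float]] = []

    def add(self, name: str, price: float) -> None:
        self._items.append((name, price))

    def total(self) -> float:
        return sum(price for _, price in self._items)


class ShoppingCartTest(unittest.TestCase):
    def test_total_sums_the_prices_of_all_items(self):
        # Arrange: create the SUT and bring it into the desired state
        cart = ShoppingCart()
        cart.add("book", 12.5)
        cart.add("pen", 2.5)

        # Act: a single call to the behavior being tested
        total = cart.total()

        # Assert: verify the outcome
        self.assertEqual(total, 15.0)
```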

TL;DR

👎 Having no consistent test structure, harming readability;

👍 Applying Arrange/Act/Assert consistently, visually separated by newlines.

Refactoring when adding a feature

Adding a new feature bears some risk and stress, even when you do TDD. So why make things worse by fixing this or improving that at the same time? Doing so can lead to an explosion of untested cases and hard-to-track-down bugs, but the worst part is the anxiety it brings.

So the bottom line is: relax and focus on the feature at hand. It’s true that you should leave the code in a better state than you found it (opportunistic refactoring), but please do that before the feature (if it helps the feature) or after it. If it’s after, consider writing the refactoring ideas down on a sheet of paper, as TODOs in the code, or as tasks/stories in your tracker, to reduce the “fear of forgetting”.

TL;DR

👎 Improving things you stumble upon while you create a feature;

👍 Doing the refactor before the feature or noting it down for later.

Changing implementation to make tests possible

I recently read Test-Driven Development by Example by Kent Beck, and I was a bit disappointed that he taught why and how to test private methods (using Java reflection). Later, I think he changed his mind:

I never faced a situation where I needed to test private methods; it always feels like a big red flag.

In “unit test”, the word unit represents a piece of a public interface. Private methods are an implementation detail (helpers of public methods) and should be tested indirectly through them! There’s probably no exception to this rule. If you find it hard to test all the possibilities of a private method, then maybe you have a god object that you should split into multiple components.
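Here is a minimal sketch in Python, where leading underscores mark private helpers by convention. The `PasswordPolicy` class and its rules are hypothetical. The point is that each private branch is covered only through the public `is_valid()` method.

```python
import unittest


class PasswordPolicy:
    """Hypothetical component: one public method backed by private helpers."""

    def is_valid(self, password: str) -> bool:
        return self._long_enough(password) and self._has_digit(password)

    # Private helpers: implementation details, not part of the public interface
    def _long_enough(self, password: str) -> bool:
        return len(password) >= 8

    def _has_digit(self, password: str) -> bool:
        return any(ch.isdigit() for ch in password)


class PasswordPolicyTest(unittest.TestCase):
    # Each private branch is exercised only via the public is_valid()
    def test_rejects_short_password(self):
        policy = PasswordPolicy()

        result = policy.is_valid("abc1")

        self.assertFalse(result)

    def test_rejects_password_without_digit(self):
        policy = PasswordPolicy()

        result = policy.is_valid("abcdefgh")

        self.assertFalse(result)

    def test_accepts_long_password_with_digit(self):
        policy = PasswordPolicy()

        result = policy.is_valid("abcdefg1")

        self.assertTrue(result)
```

If the helpers are later renamed, merged, or inlined, these tests keep passing, because they only depend on the public behavior.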

“Ah... but I can make the method public!” That’s even worse: you should not change the implementation just to make testing possible. Testing private methods is just one example, but there are many more. Such changes usually mean you’re doing something wrong.

TL;DR

👎 Changing the implementation solely for the sake of making testing possible;

👍 Taking hard-to-write tests as a hint to redesign the implementation code.

The test is hard to write

A common reason for a hard-to-write test is that you’re new to the problem and need to get more familiar with it. In that case, try explaining the problem to someone (or even to a rubber duck), or take a break to get your ideas organized.

If you’re using TDD, you can try the starter test (a really basic one) or the explanatory test (the one you’d use to explain to someone).

However, if the test ends up too complicated, too long (e.g., lots of setup code), or it feels like a hack, there’s probably a better way. A good sign that your code is too complicated is needing comments to explain it: code should speak for itself.

Generally speaking, tests that are hard to write or understand are a symptom of bad code design. Tests are a client of the implementation code: when a component’s tests are hard to write, you can extrapolate how hard it is to use that component.

If it’s hard to write a test, it’s a signal that you have a design problem, not a testing problem. Loosely coupled, highly cohesive code is easy to test.

Extreme Programming Explained, Kent Beck

In that case, consider whether the implementation code needs a refactor to make it easier to test and maintain. The solution may be divide and conquer, as your SUT may be a god object. It may also be wise to apply more suitable software design patterns.
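One illustration, a hypothetical example that is not taken from the original text: a function that reads the system clock internally is awkward to test at a fixed hour. Making the clock an explicit dependency with a sensible default is a small redesign that also decouples the function from global state. It is a design improvement, not a test-only hack. The names `greeting_hardwired` and `greeting` are assumptions for this sketch.

```python
import unittest
from datetime import datetime
from typing import Callable


# Hard to test: the current time is hidden inside the function,
# so a test would need to patch datetime or run at a specific hour.
def greeting_hardwired() -> str:
    return "Good morning" if datetime.now().hour < 12 else "Good afternoon"


# Easier to test: the clock is an explicit dependency with a sensible default.
def greeting(now: Callable[[], datetime] = datetime.now) -> str:
    return "Good morning" if now().hour < 12 else "Good afternoon"


class GreetingTest(unittest.TestCase):
    def test_says_good_morning_before_noon(self):
        nine_am = datetime(2024, 1, 1, 9, 0)

        result = greeting(lambda: nine_am)

        self.assertEqual(result, "Good morning")

    def test_says_good_afternoon_from_noon_on(self):
        noon = datetime(2024, 1, 1, 12, 0)

        result = greeting(lambda: noon)

        self.assertEqual(result, "Good afternoon")
```

Production callers still write `greeting()`, so the default keeps the everyday API unchanged.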

TL;DR

👎 Making hacks that need an explanation or having complicated setups, so that a scenario can be tested;

👍 Considering a code redesign when a test is hard to write.

Optimizing DRY

This is the most common mistake I encounter. People see a repeated value and they will immediately create a variable for it. That’s not an early optimization: it’s just making documentation (tests) harder to read.

In tests, some repetition is bearable for the sake of clarity. This is one of the few differences between test code and implementation code: test code ≠ implementation code. Tests should be more DAMP (Descriptive And Meaningful Phrases) than DRY (Don’t Repeat Yourself).

On a related note, I hate having to scroll up and down or open multiple files to understand a single test. A typical case is a folder full of sample data that is used everywhere. Sometimes it’s fine to repeat the same setup and teardown in every test. Tests should be self-evident. Tests are documentation. Therefore, I prefer to read tests top-down, like a user manual (imagine having to flip back and forth between pages when reading your washing machine manual…). This is also a call to avoid globals in tests (i.e., class-level or file-level globals).
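Here is a minimal sketch of DAMP tests in Python, assuming a hypothetical `format_price` function. Each test states its own literal inputs and expected output. A DRY version would instead pull them from shared module-level constants or sample-data files.

```python
import unittest


def format_price(cents: int, currency: str) -> str:
    """Hypothetical function under test; assumes non-negative cents."""
    return f"{cents // 100}.{cents % 100:02d} {currency}"


class FormatPriceTest(unittest.TestCase):
    # DAMP: each test spells out its own literal inputs and expected output,
    # so it can be read top-down without scrolling to shared constants.
    def test_formats_whole_amount(self):
        result = format_price(500, "EUR")

        self.assertEqual(result, "5.00 EUR")

    def test_pads_cents_with_a_leading_zero(self):
        result = format_price(505, "EUR")

        self.assertEqual(result, "5.05 EUR")
```

The repeated `"EUR"` is deliberate. Hoisting it into a `CURRENCY` global would save a few characters but force the reader to look elsewhere to understand each test.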

TL;DR

👎 Pursuing DRYness by creating variables and functions in tests;

👍 Starting with everything local and evident; break the rule only if it brings more value.

Very similar test cases

Copy-pasting more than one line of code should generally raise an alarm in your head. Well… it’s OK to do it just to make something work, but you should fix it later. It’s worse if you copy an entire test where only the initial values vary. This calls out loud for table-driven testing, also known as data-driven testing. Table-driven testing runs the same test multiple times, once per set of inputs and expected output (or other consequences).

| Input | Output |
|-------|--------|
| 1 | 1 |
| 2 | 2 |
| 3 | Fizz |
| 5 | Buzz |
| 15 | Fizz Buzz |

📝 It’s not my goal to teach it here, so I’ll leave a few links:

- JUnit parameterized tests (Java, Kotlin);

- NUnit parameterized tests (C#);

- Jest each method (JavaScript, TypeScript);

- RSpec shared_examples (Ruby);

- Table-driven testing in Go, Python, and PHP.
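For instance, the FizzBuzz table above could be sketched in Python with `unittest`’s `subTest`, which reports each failing row separately. The `fizzbuzz` implementation is an assumption for illustration, and so is the choice to reject non-positive input with `ValueError`. The error cases sit in their own test, apart from the happy path.

```python
import unittest


def fizzbuzz(n: int) -> str:
    """Hypothetical SUT matching the table; positive integers only (assumption)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    words: list[str] = []
    if n % 3 == 0:
        words.append("Fizz")
    if n % 5 == 0:
        words.append("Buzz")
    # No words means the number is neither a multiple of 3 nor of 5
    return " ".join(words) or str(n)


class FizzBuzzTest(unittest.TestCase):
    def test_happy_path(self):
        # One row per case: (input, expected output)
        cases = [
            (1, "1"),
            (2, "2"),
            (3, "Fizz"),
            (5, "Buzz"),
            (15, "Fizz Buzz"),
        ]
        for n, expected in cases:
            # subTest reports each failing row instead of stopping at the first
            with self.subTest(n=n):
                self.assertEqual(fizzbuzz(n), expected)

    def test_rejects_non_positive_input(self):
        # Error scenarios live in their own table, apart from the happy path
        for n in (0, -3):
            with self.subTest(n=n):
                with self.assertRaises(ValueError):
                    fizzbuzz(n)
```

Adding a case is a one-line change to `cases`, and all positive, negative, and edge cases are visible at a glance.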

Bottom line: use table-driven testing if you keep duplicating the same test with different data. As advantages, you’ll have:

- Less code because you don’t repeat very similar tests;

- Easy comparison of test cases;

- Quick ability to add or remove test cases;

- Better documentation, because positive, negative, and edge cases are explicit.

⚠️ In table-driven testing, do not mix the happy path with error scenarios. Keep them apart for clarity.

Finally, consider the trade-off you’re making, given that the tests become less DAMP when applying this technique.

TL;DR

👎 Copy-pasting many similar chunks of test code to add new test cases;

👍 Learning how to do table-driven testing with your language/library.

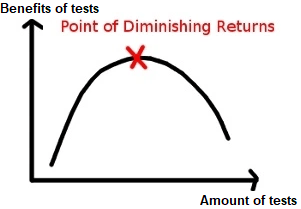

Testing everything

According to the law of diminishing returns, there’s a point where the benefit of adding more tests no longer compensates for their cost.

Applied to automated testing, not every possibility needs to be tested. Some situations can be left untested:

- some are too rare or exotic;

- others will quickly arise when using the software (e.g., a missing environment variable);

- many won’t have a big impact if they fail (e.g. CSS styling, animations).

How do you determine whether a certain test is worth it? Roughly estimate its benefit-cost ratio: is benefit/cost ≥ 1? For the benefit, consider the permanent protection against the problem (testing as a safety net). For the cost, take into account the effort to simulate the test scenario and the resources (lines of code to maintain, time to run) it will require from then on.

TL;DR

👎 Religiously trying to test everything;

👍 Analyzing the benefit-cost ratio test by test, to decide if it’s worth it.

Other smells and anti-patterns

- Using the word “and” when describing a test: this hints that you should split the test in two, each asserting/verifying different things (check the code example above);

- Testing lots of combinations (combinatorial explosion): this is a smell that you have multiple components in one; the solution is to recognize them and slowly split them apart;

- Treating test code as a second-class citizen: test code is your safety net, so take good care of it; most good coding practices apply to any source code (e.g., avoid globals);

- Not discarding a spike’s code, especially when doing TDD: a spike is a learning tool; its code should not be kept and evolved;

- Testing business rules through the UI: business rules should be tested regardless of the UI being used. Of course, end-to-end testing is an exception;

- Quantity without diversity: test cases should differ in some meaningful way, so do not add cases for the sake of quantity; the same applies to test scenarios: they should vary;

- Mocking what you don’t own: avoid leakage of libraries into your software (runtime or external); there are alternatives like wrappers and mock servers;

- Conditionals in tests: having “ifs” in tests is wrong; it’s proof that you need another test scenario;

- Overvaluing test coverage: coverage shouldn’t be pursued for its own sake, because you may be near 100% and still have crappy tests. Anyway, with TDD, coverage is almost an irrelevant concept, since every line of production code is written to make a failing test pass;

- Early optimization: in TDD, always shorten the “distance” between a red state (now) and a green state (future). Do the “quick and dirty” implementation, even if it means returning a constant or writing something ugly or slow. Make it pass, then make it beautiful. The reason is not only psychological (seeing a green state releases dopamine) but also about learning sooner: if you make it pass first, you learn more about the problem and the solution (e.g., code design) from the start.
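This minimal Python sketch illustrates the wrapper alternative to mocking what you don’t own. `RateProvider`, `convert`, and `FakeRateProvider` are hypothetical names. The business rule depends only on an interface we own, and a production adapter (not shown) would wrap the third-party client. Tests then use a simple fake of our own interface instead of mocking the library.

```python
import unittest
from typing import Protocol


class RateProvider(Protocol):
    """Interface we own; a production adapter (not shown) would wrap a third-party client."""

    def rate(self, from_currency: str, to_currency: str) -> float: ...


def convert(amount: float, provider: RateProvider, from_currency: str, to_currency: str) -> float:
    """Business rule under test: depends only on our own interface."""
    return round(amount * provider.rate(from_currency, to_currency), 2)


class FakeRateProvider:
    """Test double for the interface we own, not for the third-party library."""

    def __init__(self, rates: dict[tuple[str, str], float]) -> None:
        self._rates = rates

    def rate(self, from_currency: str, to_currency: str) -> float:
        return self._rates[(from_currency, to_currency)]


class ConvertTest(unittest.TestCase):
    def test_converts_using_the_provider_rate(self):
        provider = FakeRateProvider({("EUR", "USD"): 1.5})

        result = convert(10.0, provider, "EUR", "USD")

        self.assertEqual(result, 15.0)
```

If the library’s API changes, only the adapter needs updating. The business rule and its tests stay untouched.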

Final remarks

One may notice that many anti-patterns in tests can be attributed to a single anti-pattern in the implementation, the god object: huge test files, long tests, and tests that are hard to write, among others, are warning signs. That said, my advice is:

- Write software top-down, so that the “components below” derive from the “ones above” and are easier to understand (and you only implement what’s needed);

- Recognize when you have multiple reasons to change a component so you can split it (single-responsibility principle).

Bottom line: if it’s complicated, you’re doing it wrong. The ideal solution is usually simple; you just need to persevere and iterate until you reach it.

The list above is not meant to be exhaustive, since there are good resources out there (e.g., StackOverflow’s thread, Yegor Bugayenko’s list). Software Testing Anti-patterns by Kostis Kapelonis is a must-read. You can find good patterns in Part III of Test-Driven Development by Example by Kent Beck. Finally, watch TDD, Where Did It All Go Wrong by Ian Cooper.
