I always like to understand what the lower bound looks like. What is the absolute fastest performance we can hope for? I find it insightful as it sets a baseline for everything else.

A necessary warning here is the risk of extrapolating too much from such a trivial sample. We need to take the data for what it is: a baseline. It's not representative of real-world business logic. Simply adding some I/O operations would greatly increase the processing time, since I/O is typically 1,000x to 1,000,000x slower than code running in memory.

Minimal Lambda Function

The Minimal project defines a Lambda function that takes a stream and returns an empty response. It has no business logic and only includes required libraries. There is also no deserialization of a payload. This is the Lambda function with the least amount of overhead.

```csharp
using System.IO;
using System.Threading.Tasks;

namespace Benchmark.Minimal {

    public sealed class Function {

        //--- Methods ---

        // Ignores the request stream and returns an empty response stream.
        public async Task<Stream> ProcessAsync(Stream request)
            => Stream.Null;
    }
}
```

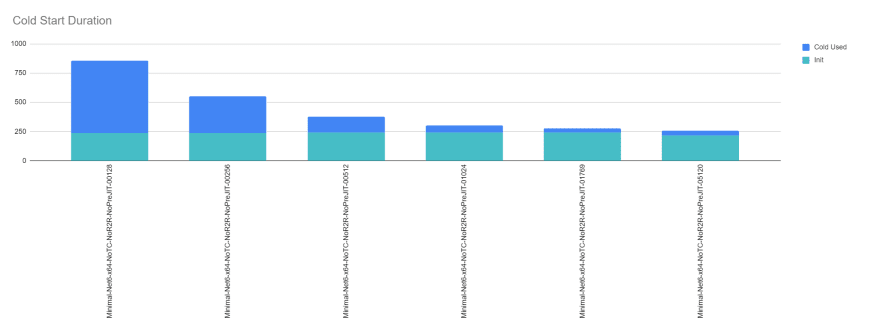

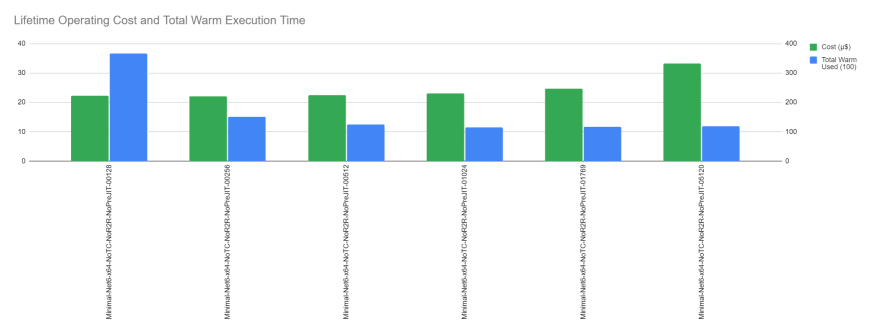

Benchmark Data for .NET 6 on x86-64

The data neatly shows that the INIT phase is approximately the same for all memory configurations under the 3,008 MB threshold. As mentioned in the Anatomy of the AWS Lambda Lifecycle post, the INIT phase always runs at full speed.

The cold INVOKE phase is about 10x slower for 128 MB than it is for 1,024 MB. However, the sum of all warm INVOKE phases is only ~3x slower. Yet the improved performance at 1,024 MB costs less than 5% more.

It's surprising that even for such a trivial example, we can already appreciate the delicate balance between performance and cost.

| Memory Size | Init (ms) | Cold Used (ms) | Total Cold Start (ms) | Total Warm Used (100) (ms) | Cost (µ$) |
|---|---|---|---|---|---|
| 128MB | 235.615 | 620.921 | 856.536 | 365.519 | 22.25509 |
| 256MB | 238.296 | 315.731 | 554.027 | 150.124 | 22.14107 |
| 512MB | 241.193 | 136.89 | 378.083 | 124.686 | 22.37980 |
| 1024MB | 239.972 | 60.804 | 300.776 | 115.53 | 23.13891 |
| 1769MB | 241.005 | 37.623 | 278.628 | 116.322 | 24.63246 |
| 5120MB | 218.112 | 37.009 | 255.121 | 119.559 | 33.24730 |
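The ratios quoted above can be checked directly against the 128 MB and 1,024 MB rows of the table. This is just the arithmetic written out, using the published values as-is:

```latex
\begin{align*}
\frac{\text{Cold Used}_{128}}{\text{Cold Used}_{1024}} &= \frac{620.921}{60.804} \approx 10.2 \\[4pt]
\frac{\text{Total Warm Used}_{128}}{\text{Total Warm Used}_{1024}} &= \frac{365.519}{115.53} \approx 3.2 \\[4pt]
\frac{\text{Cost}_{1024}}{\text{Cost}_{128}} &= \frac{23.13891}{22.25509} \approx 1.04
\end{align*}
```

In other words, an 8x increase in memory buys a roughly 10x faster cold INVOKE phase for about 4% more cost.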

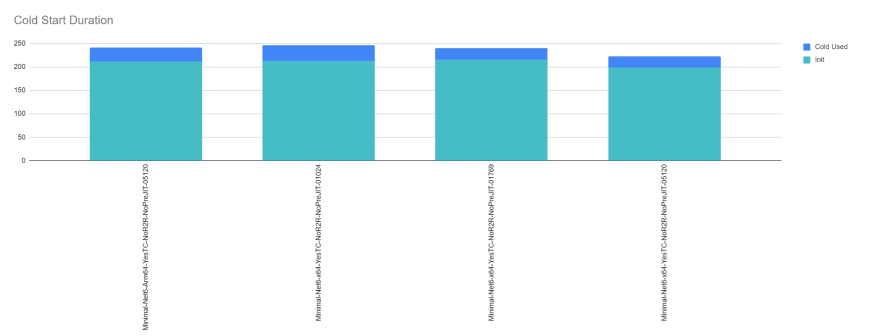

Minimum Cold Start Duration for .NET 6

Not surprisingly, the lowest cold start duration was achieved using the highest memory configuration. Tiered Compilation also helped lower the number. However, ReadyToRun did not make much of an impact, which is expected since our minimal project has almost no code.

More notable is that the ARM64 architecture was slower for comparable memory configurations than the x86-64 architecture.

| Architecture | Memory Size | Tiered | ReadyToRun | PreJIT | Init (ms) | Cold Used (ms) | Total Cold Start (ms) |
|---|---|---|---|---|---|---|---|
| arm64 | 5120MB | yes | no | no | 211.006 | 30.165 | 241.171 |
| x86_64 | 1024MB | yes | no | no | 213.085 | 33.173 | 246.258 |
| x86_64 | 1769MB | yes | no | no | 215.754 | 24.164 | 239.918 |
| x86_64 | 5120MB | yes | no | no | 198.771 | 24.094 | 222.865 |

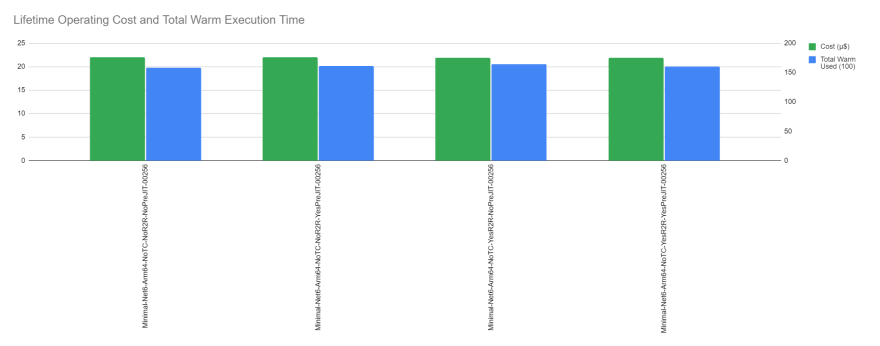

Minimum Execution Cost for .NET 6

Another unsurprising result is that the ARM64 architecture yields the lowest execution cost since its unit price is 20% lower. Similarly, the memory configuration is towards the bottom end at only 256 MB.

More interesting is that Tiered Compilation is always more expensive to operate. This makes intuitive sense since it requires additional processing time to re-JIT code. After that, it's a bit of a toss-up between the ReadyToRun and PreJIT settings.

| Architecture | Memory Size | Tiered | ReadyToRun | PreJIT | Init (ms) | Cold Used (ms) | Total Warm Used (100) (ms) | Cost (µ$) |
|---|---|---|---|---|---|---|---|---|
| arm64 | 256MB | no | no | no | 266.026 | 378.676 | 158.064 | 21.98914228 |
| arm64 | 256MB | no | no | yes | 288.025 | 371.274 | 161.529 | 21.97601788 |
| arm64 | 256MB | no | yes | no | 264.304 | 361.657 | 164.619 | 21.95426344 |
| arm64 | 256MB | no | yes | yes | 287.762 | 361.285 | 160.248 | 21.93844936 |
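As a rough sanity check on the Cost column, here is a minimal sketch of a cost model that seems to reproduce the published figures. It rests on several assumptions that are not stated in this post: the public on-demand prices of $0.20 per million requests, $0.0000166667 per GB-second on x86-64 and $0.0000133334 per GB-second on ARM64; a total of 101 invocations (1 cold plus 100 warm); and the INIT phase not being billed. The helper name `EstimateCost` is hypothetical.

```csharp
using System;

// Assumed AWS Lambda on-demand prices, expressed in micro-dollars (µ$).
const double RequestPrice = 0.20;              // µ$ per invocation ($0.20 per 1M requests)
const double X86PricePerGbSecond = 16.6667;    // µ$ per GB-second on x86_64
const double Arm64PricePerGbSecond = 13.3334;  // µ$ per GB-second on arm64 (20% lower)

// Hypothetical helper: estimated cost in µ$ for 1 cold + 100 warm invocations.
// Assumes only the INVOKE durations are billed (the INIT phase is excluded).
double EstimateCost(string architecture, int memoryMb, double coldUsedMs, double totalWarmUsedMs, int invocations = 101) {
    var pricePerGbSecond = (architecture == "arm64") ? Arm64PricePerGbSecond : X86PricePerGbSecond;
    var gbSeconds = (memoryMb / 1024.0) * ((coldUsedMs + totalWarmUsedMs) / 1000.0);
    return (invocations * RequestPrice) + (gbSeconds * pricePerGbSecond);
}

// Inputs taken from rows of the tables in this post.
var x86Cost = EstimateCost("x86_64", 128, 620.921, 365.519);   // table lists 22.25509
var arm64Cost = EstimateCost("arm64", 256, 378.676, 158.064);  // table lists 21.98914228

Console.WriteLine($"x86_64 128MB: {x86Cost:F5} µ$");
Console.WriteLine($"arm64  256MB: {arm64Cost:F5} µ$");
```

Under these assumptions, the request charge alone accounts for 20.2µ$ of every result, which would explain why the cost differences between configurations are so small for this baseline.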

What about .NET Core 3.1?

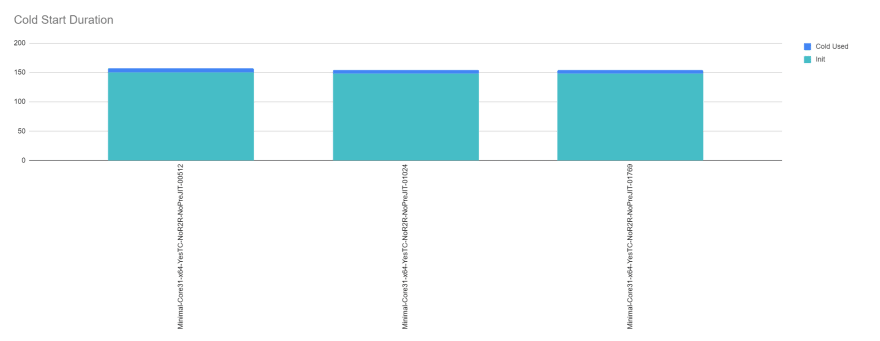

I debated whether to mention this since .NET Core 3.1 is reaching end-of-life in December 2022, but the performance delta for the baseline case is just staggering.

A Lambda function using .NET Core 3.1 with 512 MB cold-starts in 157 ms, while the best .NET 6 result with 5,120 MB takes 223 ms. That's roughly 40% longer for .NET 6, despite having ten times the memory!

I'm just flabbergasted by this outcome. All I can do is remind myself that this baseline test is not representative of real-world code.

| Architecture | Memory Size | Tiered | ReadyToRun | PreJIT | Init (ms) | Cold Used (ms) | Total Cold Start (ms) |
|---|---|---|---|---|---|---|---|
| x86_64 | 512MB | yes | no | no | 150.129 | 6.903 | 157.032 |
| x86_64 | 1024MB | yes | no | no | 148.376 | 6.081 | 154.457 |
| x86_64 | 1769MB | yes | no | no | 148.338 | 5.972 | 154.31 |

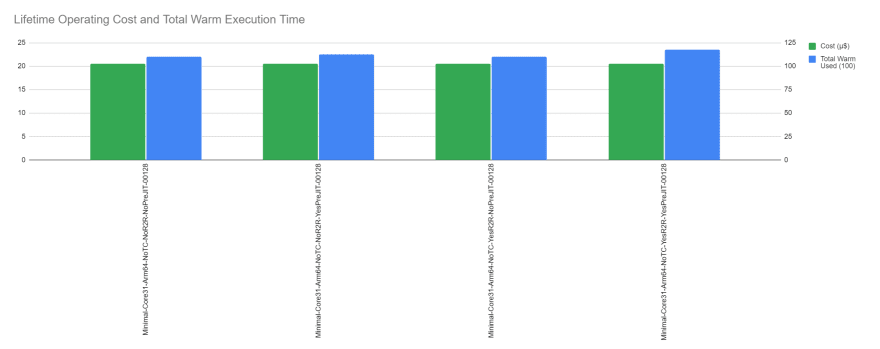

Similarly, execution cost is lower with .NET Core 3.1, but not as dramatically. Still, for .NET 6 there were just 4 configurations that achieved a cost under 22µ$. For .NET Core 3.1, there were 39 configurations under 21µ$!

Interestingly, the 4 lowest-cost configurations follow a similar pattern: ARM64, 128 MB, no Tiered Compilation, and a toss-up between ReadyToRun and PreJIT.

| Architecture | Memory Size | Tiered | ReadyToRun | PreJIT | Init (ms) | Cold Used (ms) | Total Warm Used (100) (ms) | Cost (µ$) |
|---|---|---|---|---|---|---|---|---|
| arm64 | 128MB | no | no | no | 162.366 | 102.693 | 110.096 | 20.55465044 |
| arm64 | 128MB | no | no | yes | 186.627 | 98.641 | 112.327 | 20.55161642 |
| arm64 | 128MB | no | yes | no | 161.989 | 88.677 | 110.391 | 20.53178133 |
| arm64 | 128MB | no | yes | yes | 185.923 | 85.289 | 117.811 | 20.53850086 |

Conclusion

Based on the benchmarks, we can establish these lower bounds.

For .NET 6:

- Cold start duration: 223ms

- Execution cost: 21.94µ$

For .NET Core 3.1:

- Cold start duration: 154ms

- Execution cost: 20.53µ$

Unless anything fundamental changes, we should not expect to do better than these baseline values.

What's Next

In the next post, I'm going to benchmark JSON serializers. Specifically, the popular Newtonsoft JSON.NET library, the built-in System.Text.Json namespace, and the new .NET 6 JSON source generators.
