Young people these days don't do Ruby.

So I am writing this note about YOLOv7 in Ruby. Since there is ONNX Runtime, almost anything works if you just want to infer.

ankane

/

onnxruntime-ruby

ankane

/

onnxruntime-ruby

Run ONNX models in Ruby

ONNX Runtime Ruby

Check out an example

Installation

Add this line to your application’s Gemfile:

gem "onnxruntime"

Getting Started

Load a model and make predictions

model = OnnxRuntime::Model.new("model.onnx")

model.predict({x: [1, 2, 3]})

Download pre-trained models from the ONNX Model Zoo

Get inputs

model.inputs

Get outputs

model.outputs

Get metadata

model.metadata

Load a model from a string or other IO object

io = StringIO.new("...")

model = OnnxRuntime::Model.new(io)

Get specific outputs

model.predict({x: [1, 2, 3]}, output_names: ["label"])

Session Options

OnnxRuntime::Model.new(…I wrote ↓ three years ago, so I will reuse code.

YOLO object detection using ONNXRuntime with Ruby

kojix2 ・ Sep 2 '19 ・ 2 min read

The YOLOv7 ONNX model exists. ↓

ibaiGorordo

/

ONNX-YOLOv7-Object-Detection

ibaiGorordo

/

ONNX-YOLOv7-Object-Detection

Python scripts performing object detection using the YOLOv7 model in ONNX.

ONNX YOLOv7 Object Detection

Python scripts performing object detection using the YOLOv7 model in ONNX.

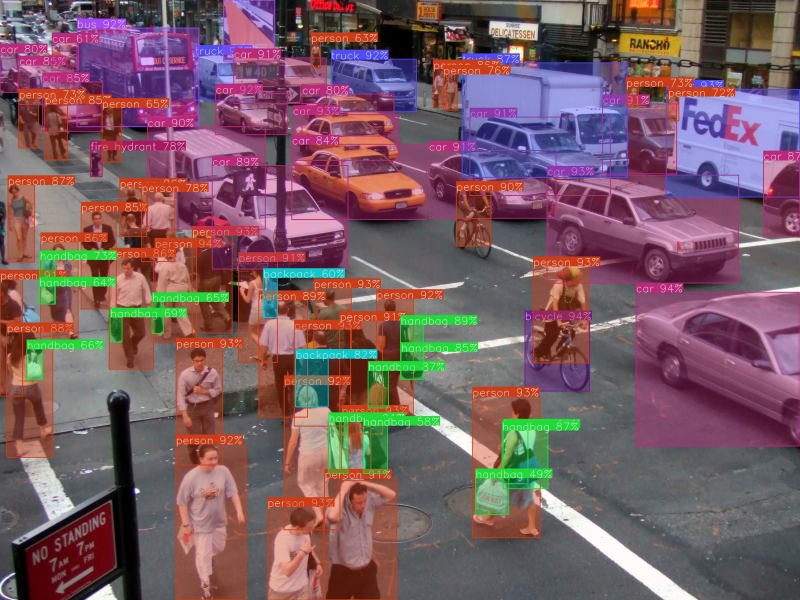

Original image: https://www.flickr.com/photos/nicolelee/19041780

Original image: https://www.flickr.com/photos/nicolelee/19041780

Important

- The input images are directly resized to match the input size of the model. I skipped adding the pad to the input image, it might affect the accuracy of the model if the input image has a different aspect ratio compared to the input size of the model. Always try to get an input size with a ratio close to the input images you will use.

Requirements

- Check the requirements.txt file.

- For ONNX, if you have a NVIDIA GPU, then install the onnxruntime-gpu, otherwise use the onnxruntime library.

Installation

git clone https://github.com/ibaiGorordo/ONNX-YOLOv7-Object-Detection.git

cd ONNX-YOLOv7-Object-Detection

pip install -r requirements.txt

ONNX Runtime

For Nvidia GPU computers

pip install onnxruntime-gpu

Otherwise

pip install onnxruntime

ONNX model

The original models were converted to different formats (including .onnx) by PINTO0309. Download the models from…

Download the ONNX model from here ↓ : 307_YOLOv7

PINTO0309

/

PINTO_model_zoo

PINTO0309

/

PINTO_model_zoo

A repository for storing models that have been inter-converted between various frameworks. Supported frameworks are TensorFlow, PyTorch, ONNX, OpenVINO, TFJS, TFTRT, TensorFlowLite (Float32/16/INT8), EdgeTPU, CoreML.

PINTO_model_zoo

Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts are released under the MIT license, but the license of the source model itself is subject to the license of the provider repository.

Contributors

Made with contrib.rocks.

A repository for storing models that have been inter-converted between various frameworks. Supported frameworks are TensorFlow, PyTorch, ONNX, OpenVINO, TFJS, TFTRT, TensorFlowLite (Float32/16/INT8), EdgeTPU, CoreML.

TensorFlow Lite, OpenVINO, CoreML, TensorFlow.js, TF-TRT, MediaPipe, ONNX [.tflite, .h5, .pb, saved_model, tfjs, tftrt, mlmodel, .xml/.bin, .onnx]

I have been working on quantization of various models as a hobby, but I have skipped the work of making sample code to check the operation because it takes a lot of time. I welcome a pull request from volunteers to provide sample code.

[Note Jan 05, 2020] Currently, the MobileNetV3 backbone model and the Full Integer…

The key point here is to use post-process_merged to download a model with post-process implemented, otherwise you will have to implement post-process yourself, which is troublesome.

git clone https://github.com/PINTO0309/PINTO_model_zoo

cd PINTO_model_zoo/307_YOLOv7

chmod +x download_single_batch_post-process_merged.sh

./download_single_batch_post-process_merged.sh

Now the models are downloaded. Use yolov7_post_640x640.onnx, which seems to be the main one.

Install the Ruby version of onnxruntime.

gem install onnxruntime

Those who want to use GPUs need to set up their own.

Prepare Netron to check the ONNX model.

lutzroeder

/

netron

lutzroeder

/

netron

Visualizer for neural network, deep learning, and machine learning models

Netron is a viewer for neural network, deep learning and machine learning models.

Netron supports ONNX, TensorFlow Lite, Caffe, Keras, Darknet, PaddlePaddle, ncnn, MNN, Core ML, RKNN, MXNet, MindSpore Lite, TNN, Barracuda, Tengine, CNTK, TensorFlow.js, Caffe2 and UFF.

Netron has experimental support for PyTorch, TensorFlow, TorchScript, OpenVINO, Torch, Vitis AI, kmodel, Arm NN, BigDL, Chainer, Deeplearning4j, MediaPipe, MegEngine, ML.NET and scikit-learn.

Install

macOS: Download the .dmg file or run brew install --cask netron

Linux: Download the .AppImage file or run snap install netron

Windows: Download the .exe installer or run winget install -s winget netron

Browser: Start the browser version.

Python Server: Run pip install netron and netron [FILE] or netron.start('[FILE]').

Models

Sample model files to download or open using the browser version:

It is a good idea to open the model in Netron to see what the inputs and outputs look like.

Very complicated. All I know is the shape of the INPUT and OUTPUT matrices. Let's Write throwaway code!

require 'mini_magick'

require 'numo/narray'

require 'onnxruntime'

SFloat = Numo::SFloat

input_path = ARGV[0]

output_path = ARGV[1]

model = OnnxRuntime::Model.new('yolov7_post_640x640.onnx')

labels = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train',

'truck', 'boat', 'traffic light', 'fire hydrant', 'stop sign', 'parking meter',

'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow', 'elephant',

'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie',

'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball', 'kite',

'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket',

'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana',

'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',

'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'dining table',

'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock',

'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush']

# preprocessing

img = MiniMagick::Image.open(input_path)

img.combine_options do |b|

b.resize '640x640!'

b.gravity 'center'

b.background 'transparent'

# b.extent '640x640'

end

img_data = SFloat.cast(img.get_pixels)

img_data /= 255.0

image_data = img_data.transpose(2, 0, 1)

.expand_dims(0)

.to_a # NArray -> Array

# inference

output = model.predict({ images: image_data })

# postprocessing

scores, indices = output.values

# visualization

img = MiniMagick::Image.open(input_path)

img.colorspace 'gray'

scores.zip(indices).each do |score, i|

cl = i[1] # cl is class

hue = cl * 100 / 80.0

label = labels[cl]

score = score[0]

p "draw box"

y1 = i[2] * img.height / 640

x1 = i[3] * img.width / 640

y2 = i[4] * img.height / 640

x2 = i[5] * img.width / 640

img.combine_options do |c|

c.draw "rectangle #{x1}, #{y1}, #{x2}, #{y2}"

c.fill "hsla(#{hue}%, 20%, 80%, 0.25)"

c.stroke "hsla(#{hue}%, 70%, 60%, 1.0)"

c.strokewidth (score * 3).to_s

end

# draw text

img.combine_options do |c|

c.draw "text #{x1}, #{y1 - 5} \"#{label}\""

c.fill 'white'

c.pointsize 18

end

end

img.write output_path

Then run ruby yolo.rb A.png B.png

↓

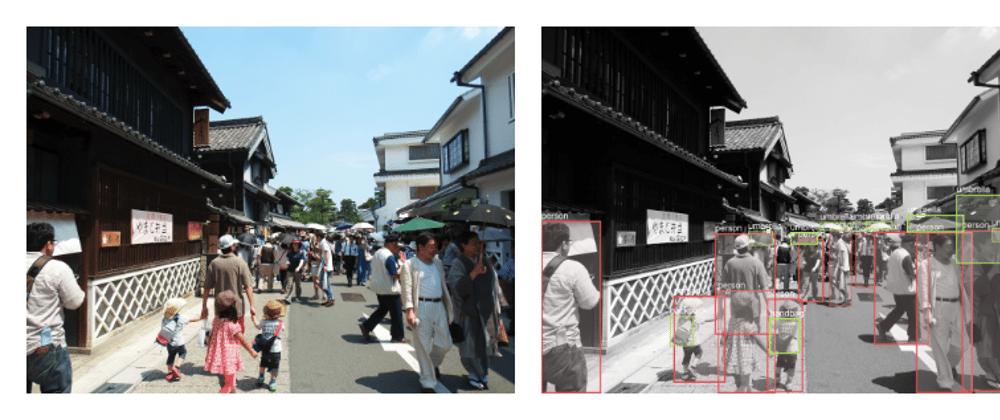

I can see that the accuracy is even better than it was in YOLOv3.

mini_magick is much slower than YOLO.

I hear that rmagick is well maintained these days, so you may want to use that.

If ONNX bindings work, any minor language should be able to do the same. I think this would mean that if you create a machine learning model in Python, you can convert it to ONNX and then deploy it in any language.

Thank you for your reading.

Have a nice day!

Top comments (0)