"Attention Is All You Need" introduced Transformer models, which have been wildly effective at solving a wide range of machine learning problems. However, the 10-page paper is incredibly dense. It packs in so many details that I found it difficult to gain high-level insight into how Transformers work and why they are effective.

After several months of reading other blog posts about them, I understood them well enough to build a Transformer in a spreadsheet and make a video walking through it.

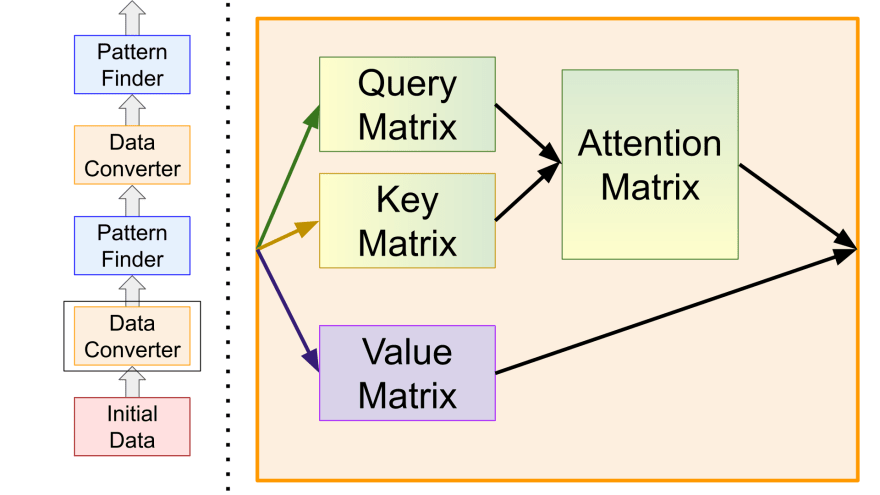

At a high level, Transformers are effective because they convert the data into a form in which patterns are easier to find. They build on ideas from Convolutional Neural Networks and Recurrent Neural Networks (Focus and Memory), combining them in a mechanism called self-attention.
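As a rough sketch of the self-attention idea, here is a minimal pure-Python version of the scaled dot-product attention described in the paper. The function names and the toy input are illustrative assumptions, not something taken from the spreadsheet, and the sketch leaves out the learned query/key/value projections, multiple heads, and residual connections.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Score this position against every position, then normalize.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Blend the value vectors using those weights.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Hypothetical toy input: three tokens, two dimensions each.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Assumption: the raw tokens stand in for queries, keys, and values.
# A real Transformer derives each of them through a learned projection.
attended = self_attention(tokens, tokens, tokens)
```

Each output row is a weighted average of all the value vectors, so every position ends up carrying some information from every other position. The weights come from how strongly each query matches each key.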

The video covers these ideas in more detail, and the implemented Transformer is available in the linked spreadsheet. Skip to the "Appendix" sheet if you want to see a layer with all the bells and whistles, including multi-headed attention and residual connections.

Implementing the Transformer really helped me understand all the components. I'm especially proud of my metaphor of "scoring points" for explaining self-attention.

Other resources I found useful when researching Transformers:

- https://nlp.seas.harvard.edu/2018/04/03/attention.html

- https://jalammar.github.io/illustrated-transformer/

- https://towardsdatascience.com/illustrated-guide-to-transformers-step-by-step-explanation-f74876522bc0

- The Narrated Transformer Language Model

- Attention Model Intuition

- Attention Mechanisms

- LSTM is dead. Long Live Transformers!

- What exactly are keys, queries, and values?

- https://blog.acolyer.org/2016/04/21/the-amazing-power-of-word-vectors/

- https://medium.com/deeper-learning/glossary-of-deep-learning-word-embedding-f90c3cec34ca

More of my work:

- Building a traditional neural network in a spreadsheet to learn AND and XOR (Part 1, Part 2)

- Building a convolutional neural network in a spreadsheet to recognize letters (Part 1, Part 2)

- Building a recurrent neural network in a spreadsheet to emulate autocomplete
