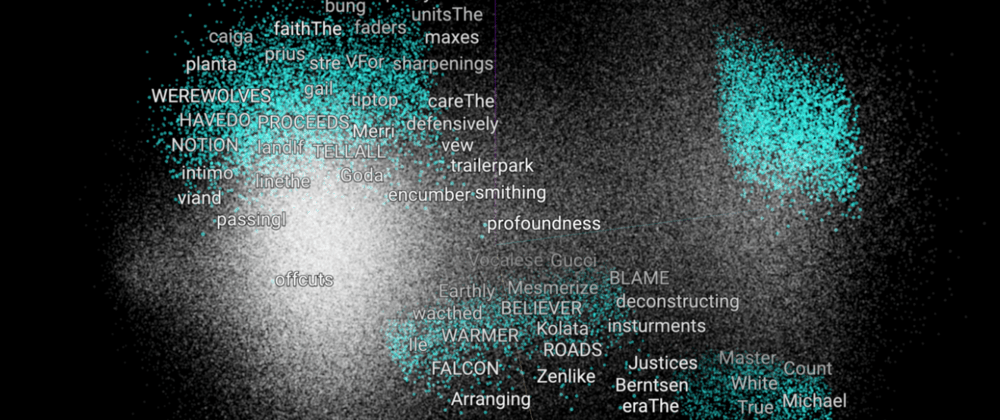

A word embedding maps a word to an n-dimensional vector. Related words such as ‘desk’ and ‘table’ map to similar vectors, while unrelated words such as ‘car’ and ‘pen’ map to dissimilar ones. Because a word’s meaning is reflected in its embedding, a machine learning model can learn how the words in a given document relate to each other. The benefit is that a model trained on the word ‘desk’ can respond sensibly to the word ‘table’ even if it never saw ‘table’ in training, as long as the embedding of ‘table’ is close to that of ‘desk’. To tackle the problem of high dimensionality, word embeddings use a vector space of fixed, pre-defined size, such as 200 dimensions, to represent every word. This dimension stays the same regardless of the size of the corpus. Typical embedding sizes range from 8 dimensions (for small datasets) up to 1024 dimensions (for large datasets).
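To make "similar vectors" concrete, here is a minimal sketch that compares word vectors with cosine similarity, one common way to measure closeness. The 3-dimensional vectors are made up by hand for illustration; they are not taken from any trained model, and real embeddings would have many more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-written toy vectors (assumed values, NOT learned embeddings).
toy_embeddings = {
    "desk":  [0.90, 0.80, 0.10],
    "table": [0.85, 0.75, 0.20],
    "car":   [0.10, 0.20, 0.90],
    "pen":   [0.70, -0.60, 0.10],
}

related = cosine_similarity(toy_embeddings["desk"], toy_embeddings["table"])
unrelated = cosine_similarity(toy_embeddings["car"], toy_embeddings["pen"])

print(f"desk vs table: {related:.3f}")
print(f"car vs pen:    {unrelated:.3f}")
```

With these toy values, the ‘desk’/‘table’ score comes out much closer to 1 than the ‘car’/‘pen’ score, which is the behaviour a trained embedding is expected to show for related and unrelated words.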

Higher-dimensional embeddings can capture finer-grained relationships between words but take more time to learn. Each word is then represented in this space as a vector with one value per dimension. Two techniques can be used to learn these values, and the choice between them depends on factors such as the time and resources available and the accuracy required: the Continuous Bag of Words (CBOW) approach and the Skip-gram approach.
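The idea of a fixed dimension can be sketched as a simple lookup table from words to vectors. This is only an illustration: the helper name `build_embedding_table` is hypothetical, and the small random starting values are an assumed placeholder for what CBOW or Skip-gram training would later adjust.

```python
import random

def build_embedding_table(vocab, dim, seed=0):
    """Give every word a vector of length `dim` (random, untrained values)."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-0.5, 0.5) for _ in range(dim)] for word in vocab}

dim = 8  # fixed embedding size, chosen up front
small_vocab = ["desk", "table"]
large_vocab = ["desk", "table", "car", "pen", "chair", "road"]

small_table = build_embedding_table(small_vocab, dim)
large_table = build_embedding_table(large_vocab, dim)

# The vocabulary grows, but each word's vector keeps the same length.
print(len(small_table), len(small_table["desk"]))
print(len(large_table), len(large_table["desk"]))
```

Adding words adds rows to the table, while the length of each vector stays at the chosen dimension.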

With the Continuous Bag of Words method, a neural network looks at the immediately surrounding context words and predicts the target word that sits between them. The Skip-gram method does the reverse: a neural network looks at the target word and predicts the immediately surrounding context words. CBOW trains considerably faster and is slightly more accurate for frequent words, while Skip-gram works well with small amounts of training data and represents even uncommon words or phrases well.
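The difference between the two methods shows up in how training examples are built from a sentence. The sketch below only generates the (input, output) examples and leaves out the neural network itself; the function names, the example sentence, and the window size of 1 are assumptions made for illustration.

```python
def context_window(tokens, i, window):
    """Words within `window` positions of tokens[i], excluding tokens[i]."""
    left = tokens[max(0, i - window):i]
    right = tokens[i + 1:i + 1 + window]
    return left + right

def cbow_examples(tokens, window=1):
    """CBOW: input is the list of context words, output is the target word."""
    return [(context_window(tokens, i, window), tokens[i])
            for i in range(len(tokens))]

def skipgram_examples(tokens, window=1):
    """Skip-gram: input is the target word, output is one context word."""
    return [(tokens[i], context)
            for i in range(len(tokens))
            for context in context_window(tokens, i, window)]

tokens = "the cat sat on the mat".split()
cbow = cbow_examples(tokens)
skipgram = skipgram_examples(tokens)

print(cbow[1])       # (['the', 'sat'], 'cat')
print(skipgram[:3])  # [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
```

CBOW produces one example per word position, with all context words combined into a single input. Skip-gram produces one example per (target, context) pair, so the same sentence yields more training examples.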

It is also important to note that using this method for feature extraction is practical mainly when you have a lot of data.
