During the recent #DevDiscuss chat on "Developer Confessions", I confessed that I didn't really know what I was doing when I started my first dev job 3 years ago. To demonstrate my inexperience, I shared an example of the kind of code I was writing at the time.

Confession: I was winging it completely when I first started my job. This is one of my favorite code monstrosities I wrote. 😂 I promise I know how to use loops now. #DevDiscuss (01:13 AM - 03 Apr 2019)

The response I received has been overwhelmingly positive. Most of us have written "bad"* code that we're not proud of, but it's a sign of growth when you can look back at that older code, recognize how it could be better, and maybe laugh at yourself for the choices you made. In the spirit of continuing to learn, I want to share some of the ways I might solve this problem today.

*Although this bit of code is silly and could have been written much more efficiently, hard-coding accomplishes the task it needed to just fine.

Context & Goals

Before refactoring any legacy code, it's critical to step back and assess the context that the code was written in. There could be an important reason for the choices a developer made, influenced by context that you might not be aware of (or remember, if it's your code). In my case, I was simply inexperienced, so this code can be safely refactored.

The code was written for two data visualizations: "Global Foreign Direct Investment Stocks" (in/out) and "China Bilateral Investment Outflows" (China). They have similar data & functionality, with the primary goal of allowing the user to explore the datasets by filtering by type, year, or region. I'm going to focus on the global data, but the China dataset can be refactored in a similar way.

Let's assume that changing one of the filters will result in the below values being returned:

let currentType = 'in' // or 'out'
let currentYear = 2017
let currentRegions = ['Africa', 'Americas', 'Asia', 'Europe', 'Oceania']

Note: The region checkboxes don't currently work this way, hence the "All" and "Partial" in the snippet, but this is how it should have been done.

Finally, here is a simplified example of the data itself after it's been loaded in from a CSV:

const data = [
  { country: "Name", type: "in", value: 100, region: "Asia", year: 2000 },
  { country: "Name", type: "out", value: 200, region: "Asia", year: 2000 },
  ...
]
// Total Items in Array: ~2,400

Option 1: Initializing Empty Objects

Beyond being hard-coded, my original snippet completely violates the Don't Repeat Yourself (DRY) approach to writing code. There are absolutely instances where repeating yourself makes sense, but in this case when the same properties are being repeated over and over again, it's a smarter choice to create the objects dynamically. Doing so also reduces the amount of manual work required when a new year is added to the dataset, and limits the opportunities for input error.

There are several different approaches to making this more DRY: for, .forEach, .reduce, etc. I'm going to use the .reduce Array method, because it processes an array and transforms it into something else (in our case, an object). We're going to use .reduce three times, once per categorization.

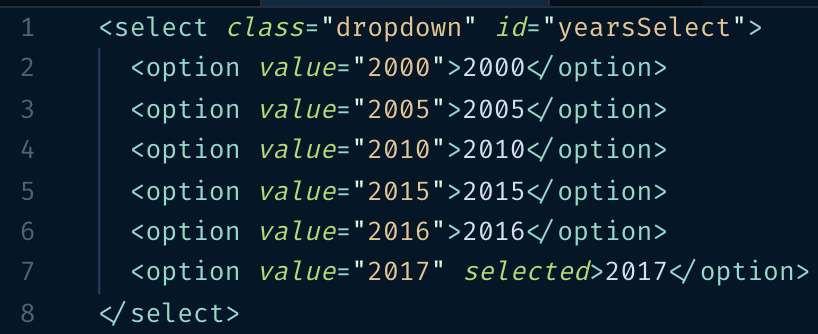

Let's start by declaring our categories as constants. In the future, we only need to add a new year to our years array. The code we're about to write will take care of the rest.

const types = ['in', 'out']
const years = [2000, 2005, 2010, 2015, 2016, 2017]
const regions = ['Africa', 'Americas', 'Asia', 'Europe', 'Oceania']

Rather than thinking about this as types → years → regions, we want to reverse it and start with regions. Once regions is turned into an object, that object will be the value assigned to the years properties. The same is true for years in types as well. Note that it's possible to write this in fewer lines of code, but I'm opting for clarity over cleverness.

const types = ['in', 'out']
const years = [2000, 2005, 2010, 2015, 2016, 2017]
const regions = ['Africa', 'Americas', 'Asia', 'Europe', 'Oceania']

/*
  Convert regions to an object with each region as a property and
  the region's value as an empty array.
*/
const regionsObj = regions.reduce((acc, region) => {
  acc[region] = []
  return acc
}, {}) // The initial value of the accumulator (`acc`) is set to `{}`.

console.log(regionsObj)
// {Africa: [], Americas: [], Asia: [], Europe: [], Oceania: []}

Now that we have our regions object, we can do something similar for the years and types. But instead of setting their values to an empty array like we did for the regions, we set their values to the previous category's object.

Edit: It was brought to my attention that the original code snippet didn't actually work once you attempted to load data into it because I was merely referencing an existing object instead of instantiating a new one. The below snippet has been updated to fix this problem by creating a deep copy of the existing object. An explanation is available in this article on "How to differentiate between deep and shallow copies in JavaScript" by Lukas Gisder-Dubé.

function copyObj(obj) {
  return JSON.parse(JSON.stringify(obj))
}

/*
  Do the same thing with the years, but set the value
  for each year to the regions object.
*/
const yearsObj = years.reduce((acc, year) => {
  acc[year] = copyObj(regionsObj)
  return acc
}, {})

// One more time for the type. This will return our final object.
const dataset = types.reduce((acc, type) => {
  acc[type] = copyObj(yearsObj)
  return acc
}, {})

console.log(dataset)
// {
//   in: {2000: {Africa: [], Americas: [], ...}, ...},
//   out: {2000: {Africa: [], Americas: [], ...}, ...}
// }
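To make the reference problem concrete, here is a minimal sketch comparing the two approaches. The names `makeRegionsObj`, `shared`, and `copied` are made up for illustration, and the lists are shortened to keep it readable.

```javascript
const regions = ['Africa', 'Asia']
const years = [2000, 2005]

// Hypothetical helper so each demo starts from a fresh regions object
const makeRegionsObj = () =>
  regions.reduce((acc, region) => {
    acc[region] = []
    return acc
  }, {})

function copyObj(obj) {
  return JSON.parse(JSON.stringify(obj))
}

// Without copying: every year points at the same regions object.
const sharedRegions = makeRegionsObj()
const shared = years.reduce((acc, year) => {
  acc[year] = sharedRegions
  return acc
}, {})
shared[2000].Asia.push({ country: 'Name', value: 100 })
console.log(shared[2005].Asia.length) // 1, the 2005 array changed too

// With copying: each year gets its own independent regions object.
const templateRegions = makeRegionsObj()
const copied = years.reduce((acc, year) => {
  acc[year] = copyObj(templateRegions)
  return acc
}, {})
copied[2000].Asia.push({ country: 'Name', value: 100 })
console.log(copied[2005].Asia.length) // 0, 2005 is unaffected
```

One caveat: the JSON round trip only works for JSON-safe values. It's fine for empty arrays and plain objects like these, but it drops functions and `undefined` and turns Dates into strings.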

We now have the same result as my original snippet, but have successfully refactored the existing code snippet to be more readable and maintainable! No more copying and pasting when it comes to adding a new year to the dataset!

But here's the thing: this method still requires somebody to manually update the year list. And if we're going to be loading data into the object anyway, there's no reason to separately initialize an empty object. The next two refactoring options remove my original code snippet completely and demonstrate how we can use the data directly.

Aside: Honestly, if I had tried to code this 3 years ago, I probably would have done 3 nested for loops and been happy with the result. But nested loops can have significant negative performance impacts. This method focuses on each layer of categorization separately, eliminating extraneous looping and improving performance. Edit: Check out this comment for an example of what this method would look like and a discussion on performance.
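For reference, a nested-loop version of Option 1 might look something like the sketch below. This is an illustration only, not the exact code from the linked comment.

```javascript
const types = ['in', 'out']
const years = [2000, 2005, 2010, 2015, 2016, 2017]
const regions = ['Africa', 'Americas', 'Asia', 'Europe', 'Oceania']

const dataset = {}
for (const type of types) {
  dataset[type] = {}
  for (const year of years) {
    dataset[type][year] = {}
    for (const region of regions) {
      // A fresh array is created for every leaf, so no deep copy is needed
      dataset[type][year][region] = []
    }
  }
}

console.log(Object.keys(dataset.in)) // ['2000', '2005', '2010', '2015', '2016', '2017']
```

With 2 types, 6 years, and 5 regions, the innermost line only runs 60 times, so the nesting itself is cheap here.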

Option 2: Filtering Directly

Some of you are probably wondering why we're even bothering with grouping our data by category. Based on our data structure, we could use .filter to return the data we need based on the currentType, currentYear, and currentRegions, like so:

/*
  `.filter` will create a new array with all elements that return true
  if they are of the `currentType` and `currentYear`

  `.includes` returns true or false based on if `currentRegions`
  includes the entry's region
*/
let currentData = data.filter(d => d.type === currentType &&
  d.year === currentYear && currentRegions.includes(d.region))

While this one-liner works great, I wouldn't recommend using it in our case for two reasons:

- Every time the user makes a selection, this method will run. Depending on the size of that dataset (remember, it grows every year), there could be a negative impact on performance. Modern browsers are efficient and the performance hit might be minuscule, but if we already know that the user can only select 1 type and 1 year at a time, we can be proactive about improving performance by grouping the data from the beginning.

- This option doesn't give us a list of the available types, years, or regions. If we have those lists, we can use them to dynamically generate the selection UI instead of manually creating (and updating) it.

Option 3: Data Driven Objects

We can combine aspects of the first and second options to refactor the code in a third way. The goal is to not have to change the code at all when updating the dataset, but determine the categories from the data itself.

Again, there are multiple technical ways to achieve this, but I'm going to stick with .reduce because we're going to transform our array of data into an object.

const dataset = data.reduce((acc, curr) => {
  /*
    If the current type exists as a property of our accumulator,
    set it equal to itself. Otherwise, set it equal to an empty object.
  */
  acc[curr.type] = acc[curr.type] || {}

  // Treat the year layer the same way
  acc[curr.type][curr.year] = acc[curr.type][curr.year] || []
  acc[curr.type][curr.year].push(curr)

  return acc
}, {})

Note that I've eliminated the region layer of categorization from my dataset object because, unlike type and year, multiple regions can be selected at once in any combination. This makes pre-grouping into regions virtually useless since we have to merge them together anyway.

With that in mind, here is the updated one-liner to get the currentData based on the selected type, year, and regions. Since we're limiting the lookup to data with the current type and year, we know that the maximum number of items in the array is the number of countries (less than 200), making this far more efficient than option #2's implementation of .filter.

let currentData = dataset[currentType][currentYear].filter(d => currentRegions.includes(d.region))

The last step is getting the array of the different types, years, and regions. For that, I like to use .map and Sets. Below is an example of how to get an array that contains all the unique regions in the data.

/*
  `.map` will extract the specified object property
  value (e.g. region) into a new array
*/
let regions = data.map(d => d.region)

/*
  By definition, a value in a Set must be unique.
  Duplicate values are excluded.
*/
regions = new Set(regions)

// Array.from creates a new array from the Set
regions = Array.from(regions)

// One-line version
regions = Array.from(new Set(data.map(d => d.region)))

// or using the spread operator
regions = [...new Set(data.map(d => d.region))]

Repeat for type & year to create those arrays. You can then create the filtering UI dynamically based on the array values.
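As a rough sketch of both steps, the snippet below wraps the Set approach in a small helper and then builds checkbox markup from the result. The helper names `uniqueValues` and `checkboxMarkup` are hypothetical, the sample rows are made up, and the markup is built as a plain string so the example runs without a browser. It also assumes the values are trusted, since nothing is HTML-escaped.

```javascript
// Sample rows in the same shape as the article's data (values are made up)
const data = [
  { country: 'A', type: 'in', value: 100, region: 'Asia', year: 2000 },
  { country: 'A', type: 'out', value: 200, region: 'Asia', year: 2000 },
  { country: 'B', type: 'in', value: 50, region: 'Europe', year: 2005 }
]

// Hypothetical helper: unique values of one property, in first-seen order
const uniqueValues = (rows, key) => [...new Set(rows.map(d => d[key]))]

const types = uniqueValues(data, 'type')     // ['in', 'out']
const years = uniqueValues(data, 'year')     // [2000, 2005]
const regions = uniqueValues(data, 'region') // ['Asia', 'Europe']

// Hypothetical helper: one checked checkbox per value, as an HTML string
const checkboxMarkup = (name, values) =>
  values
    .map(v => `<label><input type="checkbox" name="${name}" value="${v}" checked> ${v}</label>`)
    .join('\n')

console.log(checkboxMarkup('region', regions))
```

Because a Set keeps insertion order, the arrays come out in the order the values first appear in the data, so you may want to sort the years before rendering.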

Final Refactored Code

Putting it all together, we end up with code that is future-proofed to changes in the dataset. No manual updates required!

// Unique Types, Years, and Regions
const types = Array.from(new Set(data.map(d => d.type)))
const years = Array.from(new Set(data.map(d => d.year)))
const regions = Array.from(new Set(data.map(d => d.region)))

// Group data according to type and year
const dataset = data.reduce((acc, curr) => {
  acc[curr.type] = acc[curr.type] || {}
  acc[curr.type][curr.year] = acc[curr.type][curr.year] || []
  acc[curr.type][curr.year].push(curr)
  return acc
}, {})

// Update current dataset based on selection
let currentData = dataset[currentType][currentYear].filter(d => currentRegions.includes(d.region))
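To try this out end to end, here is a small runnable sketch with made-up sample rows. The `selectData` wrapper is a hypothetical addition: it does the same lookup as the one-liner, but returns an empty array instead of throwing when a type or year isn't in the data.

```javascript
// Made-up sample rows in the same shape as the real data
const data = [
  { country: 'A', type: 'in', value: 100, region: 'Asia', year: 2017 },
  { country: 'B', type: 'in', value: 80, region: 'Europe', year: 2017 },
  { country: 'C', type: 'in', value: 60, region: 'Africa', year: 2017 },
  { country: 'A', type: 'out', value: 200, region: 'Asia', year: 2017 },
  { country: 'A', type: 'in', value: 90, region: 'Asia', year: 2016 }
]

// Group data according to type and year
const dataset = data.reduce((acc, curr) => {
  acc[curr.type] = acc[curr.type] || {}
  acc[curr.type][curr.year] = acc[curr.type][curr.year] || []
  acc[curr.type][curr.year].push(curr)
  return acc
}, {})

// Hypothetical wrapper around the lookup, with a fallback for missing keys
function selectData(grouped, type, year, selectedRegions) {
  const rows = (grouped[type] && grouped[type][year]) || []
  return rows.filter(d => selectedRegions.includes(d.region))
}

const currentData = selectData(dataset, 'in', 2017, ['Asia', 'Europe'])
console.log(currentData.map(d => d.country)) // ['A', 'B']

// A year that isn't in the data returns an empty array
console.log(selectData(dataset, 'in', 1999, ['Asia']).length) // 0
```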

Final Thoughts

Cleaning up syntax is only a small part of refactoring; more often, "refactoring code" really means reconceptualizing the implementation or the relationship between different pieces. Refactoring is hard because there are several ways to solve problems. Once you've figured out a solution that works, it can be hard to think of different ones. Determining which solution is better is not always obvious, and can vary based on the code context and, frankly, personal preference.

My advice for getting better at refactoring is simple: read more code. If you're on a team, actively participate in code reviews. If you're asked to refactor something, ask why and try to understand how others approach problems. If you're working alone (as I was when I first started), pay attention when different solutions are offered to the same question and seek out guides on best code practices. I highly recommend reading BaseCode by Jason McCreary. It's an excellent field guide to writing less complex and more readable code, and covers a lot of real world examples.

Most importantly, accept that you're going to write bad code sometimes and going through the process of refactoring - making it better - is a sign of growth and should be celebrated.

Top comments (43)

I remember when you posted this code on Twitter unabashedly, which I SUPER respected. It showed people especially new up and comers that EVERYONE starts somewhere.

This is doubly awesome because it can show people YOU will IMPROVE 🙂🙂🙂

Thank you so much! I was honestly really nervous about posting it on Twitter, so I'm glad it was so well received. I wish more developers would share their bad or in-progress code for exactly the reason you stated - everybody starts somewhere and everyone improves. :D

It's awesome that you've got an eye out for performance - that's always great to see. You are right that nested loops can be problematic, but keep in mind that the cost of the work done in the loop is the main factor.

In this case it's actually 6x faster to build your structure using nested loops, since the cost of creating empty arrays is tiny compared to the overhead of all the other calls in your write-up. I'd argue that the nested loop version is easier to understand too:

As the number of values in types, years and regions increases, the nested loop implementation remains 6x faster as the main cost is running the rest of the code, not creating the array.

It's cool that you know how to reduce iterations like this, but nested loops aren't automatically a bad thing 🙂

Thanks for the comment! I'm aware that nested loops aren't always a bad thing, that's why I included the word "can" in the part of my article you quoted. :)

In this case, you're right, the nested loop is faster, so I stand corrected on the claim that the solution I proposed is better for performance. Most "best practice" articles/guides I've read have all suggested to stay away from nested loops when possible, so I opted to highlight a different method. Of course it's all context specific, so the "best" way is going to be variable depending on the problem being solved.

In any case, I've updated my article with a link to your comment so others can see it as well. Thanks!

Would you consider it highjacking your post if we used the comments to share "hold my beer" examples of our own terrible old code? I'm currently thinking of some ridiculously bloated and complicated Java code I wrote in years past when inheritance and generics were my solution to all problems.

Not at all! Share away. :D

"Hold my <E extends Beer>"

I once thought the above was good code and was proud of how I had ensured type-safety 😭

As a Java dev, this is just amazing! 😂

At a glance, I would say your original code, while not dry is actually better in terms of long term maintenance and easier for people to jump into.

The reason I say this is that we learn to make things dry and obsess to reduce our code but when you have to maintain a project for 5+ years and developers come and go you realize this is where the mantra "Clever vs Clear" steps into effect and you look at your code in a different way.

You're already a Pro 👍

Thanks for the comment! I think I’m going to disagree. Although I concede that at a glance, reading the hard coded version is easier to jump into, from a long term maintenance perspective it isn’t better. I’ve had to update the data for this interactive twice now, and both times something went wrong at first because I forgot to fully implement the new year. I’ve built a lot more interactives using the data driven approach that don’t have that same problem and can be updated just by uploading a new file. Yes, it takes longer for a new dev to read the code to understand how it works, but it’s straight forward enough that I think that extra mental effort pays for itself.

There are so many unknowns still unanswered so the best reply I could give was 'at a glance'.

First, I really enjoyed this, so please take that. It's lovely to see someone learn so much. This is the sort of code I try help my teammates write. Neat, organised and with clear optimisations.

I want to note a couple further optimisations though.

First, you could create the full dataset in a single reduce using the .add() method on Set. I don't know how Array.from on a Set is or a new Set on an array are implemented but if it isn't some recast of memory then you actually have a few implicit nested loops in this section.

Then, the .includes() test on currentRegions could be faster (and this might matter for large data sets) if the values in currentRegions were either a Set or stored as object keys. This would allow testing via .has() or .hasOwnProperty(), which are O(1) rather than O(n) lookups.
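A minimal sketch of the Set-based lookup described here, using made-up rows. The name `regionSet` is just for illustration.

```javascript
// Made-up rows for a single type/year
const rows = [
  { country: 'A', region: 'Asia' },
  { country: 'B', region: 'Europe' },
  { country: 'C', region: 'Africa' }
]

const currentRegions = ['Asia', 'Africa']

// Build the Set once per selection change, then reuse it for every row
const regionSet = new Set(currentRegions)
const currentData = rows.filter(d => regionSet.has(d.region))

console.log(currentData.map(d => d.country)) // ['A', 'C']
```

With only five regions the difference is likely negligible, but the pattern matters more as the list of selectable values grows.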

And this is less a matter of optimisation (indeed less optimal on memory but also not coupled to line location) than my taste for non-mutative code, but you can return directly from a reduce using something like:

return {...acc, [curr.type]: { [curr.year]: [...acc[curr.type][curr.year], ...curr] } }

But still bravo on a lot of this and thanks for writing your journey.

(Sorry to write without code highlighting.)
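A runnable sketch of the non-mutating idea from the comment above, with made-up sample rows. It differs from the quoted one-liner in three ways: it falls back to empty values the first time a type or year appears, it spreads the existing years so they are kept, and it appends curr itself (spreading a plain object into an array literal would throw, since plain objects aren't iterable).

```javascript
const data = [
  { country: 'A', type: 'in', value: 100, region: 'Asia', year: 2000 },
  { country: 'B', type: 'in', value: 50, region: 'Europe', year: 2000 },
  { country: 'A', type: 'in', value: 120, region: 'Asia', year: 2005 },
  { country: 'A', type: 'out', value: 200, region: 'Asia', year: 2000 }
]

const dataset = data.reduce((acc, curr) => {
  const byYear = acc[curr.type] || {} // years seen so far for this type
  const rows = byYear[curr.year] || [] // rows seen so far for this year
  // Return a new accumulator instead of modifying the previous one
  return {
    ...acc,
    [curr.type]: {
      ...byYear,
      [curr.year]: [...rows, curr]
    }
  }
}, {})

console.log(dataset.in[2000].length)  // 2
console.log(dataset.in[2005].length)  // 1
console.log(dataset.out[2000].length) // 1
```

The trade-off is the one already mentioned: every iteration allocates new objects and arrays, so this uses more memory than the mutating version.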

Hey, thanks for the comments! I'm always happy to hear constructive criticism on how to make my code better. :)

1. If I'm understanding you correctly, you're suggesting creating the arrays of types, years, and regions within the .reduce function? That's not a bad idea, and you're right, would provide some optimization. I didn't explain this in the post, but part of my rationale for keeping it separate is to maintain separation of concerns. Those 3 lines are specifically about isolating the values of those variables within the dataset, while the .reduce accomplishes a separate task. But you make a good point and it's something to consider for future implementations!

2. I hadn't considered storing those values as a Set instead of an array, that's a great idea.

3. Yep! However, I intentionally wrote the longer version for clarity. :) Certainly is worth considering how to make it more concise in production, though!

Re 1, it's not so much where it is but rather that I suspect Array.from loops and new Set loops and the map loops, which means 3 loops for each construction of those items.

That said, you could do it all in one reduce and keep the concerns separated with object keys, and then destructure them into variables in the outer scope.
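One way this single-pass idea could look, as a sketch with made-up sample rows: the accumulator holds one key per concern (three Sets plus the grouped dataset), and the result is destructured into separate variables.

```javascript
const data = [
  { country: 'A', type: 'in', value: 100, region: 'Asia', year: 2000 },
  { country: 'A', type: 'out', value: 200, region: 'Asia', year: 2000 },
  { country: 'B', type: 'in', value: 50, region: 'Europe', year: 2005 }
]

const { types, years, regions, dataset } = data.reduce(
  (acc, curr) => {
    // Collect unique category values; a Set ignores duplicates
    acc.types.add(curr.type)
    acc.years.add(curr.year)
    acc.regions.add(curr.region)

    // Group rows by type and year in the same pass
    acc.dataset[curr.type] = acc.dataset[curr.type] || {}
    acc.dataset[curr.type][curr.year] = acc.dataset[curr.type][curr.year] || []
    acc.dataset[curr.type][curr.year].push(curr)

    return acc
  },
  { types: new Set(), years: new Set(), regions: new Set(), dataset: {} }
)

console.log([...types])   // ['in', 'out']
console.log([...years])   // [2000, 2005]
console.log([...regions]) // ['Asia', 'Europe']
```

Here types, years, and regions stay as Sets, so spread them (or use Array.from) wherever an array is needed.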

I think re 3 you're talking about my non-mutation comment?

If you destructure the variables it can be terser, but my case is more for non-destructive operations. I don't like modifying things because it makes the original unavailable for later use without larger refactor. But again I'm an ideologue about this and it isn't common opinion.

Nice Jacque! I remember that feeling of gnarly, non-DRY code from my first coding challenge at my bootcamp where I wrote out 500 something lines for converting a number to its roman numeral equivalent. This is a great solution and well explained as well!

Oh my gosh, that sounds awful 😂And absolutely something that I would have done. Here's to learning to spend our time on harder problems!

I'll never forget how I felt that day. It's burned in to my brain as the lowest I've ever felt as an engineer.

Great post Jacque - it definitely looks cleaner in the end (and less brittle to boot).

Just wondering though - you've performed this refactor, but you've not mentioned how you knew you weren't changing the behaviour? Were there automated tests surrounding the code, or were you executing the code with some sample data and comparing the results manually, or were you doing something else?

Thanks so much!

Thanks for pointing out this omission. This particular project doesn't use tests, so my comparing the before/after code was done by manually checking the final dataset object and the returned UI on the data viz. It was definitely more tedious than it could have been if I had also added tests. :)
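For a project without a test suite, even a small script can stand in for part of that manual comparison. A minimal sketch, assuming made-up sample rows in the article's shape: it checks that the grouped lookup from Option 3 returns the same rows as the direct .filter from Option 2 for every type and year in the data.

```javascript
const data = [
  { country: 'A', type: 'in', value: 100, region: 'Asia', year: 2016 },
  { country: 'B', type: 'in', value: 80, region: 'Europe', year: 2017 },
  { country: 'C', type: 'in', value: 60, region: 'Africa', year: 2017 },
  { country: 'A', type: 'out', value: 200, region: 'Asia', year: 2017 }
]

const types = [...new Set(data.map(d => d.type))]
const years = [...new Set(data.map(d => d.year))]
const currentRegions = ['Asia', 'Europe']

// Option 3: group by type and year
const dataset = data.reduce((acc, curr) => {
  acc[curr.type] = acc[curr.type] || {}
  acc[curr.type][curr.year] = acc[curr.type][curr.year] || []
  acc[curr.type][curr.year].push(curr)
  return acc
}, {})

let mismatches = 0
for (const type of types) {
  for (const year of years) {
    // Option 2: filter the raw data directly
    const expected = data.filter(d =>
      d.type === type && d.year === year && currentRegions.includes(d.region))
    // Option 3: look up the group (if any), then filter by region
    const group = (dataset[type] && dataset[type][year]) || []
    const actual = group.filter(d => currentRegions.includes(d.region))
    // Both approaches keep the original row order, so the JSON should match
    if (JSON.stringify(actual) !== JSON.stringify(expected)) mismatches++
  }
}

console.log(mismatches) // 0
```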

Regarding your advice about reading more code: there is a website that shows you code examples indexed from GitHub for your code search.

I always forget the name, but it always appears when searching by code in Google.

Do you know something similar for JavaScript?

It is very useful to see working examples from real life open source projects.

Unfortunately the code search on the GitHub website is not very good.

I always end up cloning the projects and using grep.

I'm afraid I'm not familiar with any tools like that for JavaScript, but that sounds awesome! If you think of the name, please update us!

I think it's this one: programcreek

Now I realize that it also covers Python, Java and Scala.

This is an example of a search for pytz.timezone

Awesome - thanks for posting Jacque!!

I think this point you make is key:

One thing I think helps, tell me if you agree, is if you know a lot more about the mission of the project you're on. In some cases, the mission might be: "I just need to get some web ui out there RIGHT NOW to show some investors what our app might look like," in which case your first solution may be perfectly acceptable.

On the other hand, if you know you need a robust, flexible solution that's going to be around for a long time and be able to adapt to any solution, then what you refactored might be perfect.

So I think it's very important to work with your tech lead to make sure the vision for the task is super clear. If you're the tech lead, make sure you are clear about what the mission is. Then that context helps inform your low level design choices...

What do you think?

Pretty sure, by the way, that my early code was far worse than yours btw!! Fortunately, it's all long gone now!

Thanks so much! Glad you enjoyed it. :) And glad to hear I'm not the only one who has made some poor choices with their code before!

I absolutely agree that knowing more about the mission helps in determining what works as a solution. There is a very real danger in over-engineering something that can be adequately done with less polish. However, I also think we need to be careful to not let poor code choices (like my original snippet) that are done for demo purposes make it into the final code base. I know I've written something quickly for the sake of getting it done, didn't take the time to clean it up or think about a "proper" solution, and now it's sitting in production adding to that project's tech debt.

So yes, 99% agree with a 1% caveat to be mindful that poor code doesn't get moved to production because it's already "done". :)

Thanks for sharing Jacque! It's nice to see that you could take something positive from a complicated experience haha! Can I suggest an article related to the code refactoring subject? This is it, a dev friend of mine wrote it: uruit.com/blog/code-refactoring/

Wow! Great to see such a progress! When I saw your initial code, I couldn't come up with an easy way to "DRY" it. :)

What you did is a great job. That also goes for the other "Options" you added later.

One thing that caught my eye:

...is performance. Yes, some other users already mentioned it. :)

I think we (me too) generally think too much about performance. One great book that I'm reading right now is Martin Fowler's "Refactoring" (pun? :D). In it, he writes, and I agree, to not be so strict about performance. Usually performance bottlenecks are in the parts of the apps we don't think they are.

I'd just add that without a benchmark, we are both just playing poker with no cards in the hand. :)

I'd just advise against even mentioning performance. If you're not writing the next react, just ignore it for this use-case.

Hi, Jacque

Can I translate your article into Chinese and share it with Chinese developers?

Hi there! Sure, please go ahead. Just include proper credit & a link back to the original here on Dev.to. :) And please share the link with me once it's published!

Chinese link :《重构我写过的最糟糕的代码》 nextfe.com/refactor-worst-code-hav...

Thanks!

Thanks for sharing!

As a Java person just starting out with JS, this post gives me serious hope that eventually I will write JavaScript code I'm not embarrassed of. Thanks for sharing!

I’m so glad you found it useful and encouraging! That’s exactly why I wanted to share it. I promise you will absolutely get to that point when you’re ready to share. 💚

Very nice article. Thanks.

Actually, you might have already learned that nobody makes the perfect choice at first try.

I like to start with simple versions and evolve it over time.

Thanks for the great post. Love it.
