Architecting a modern application

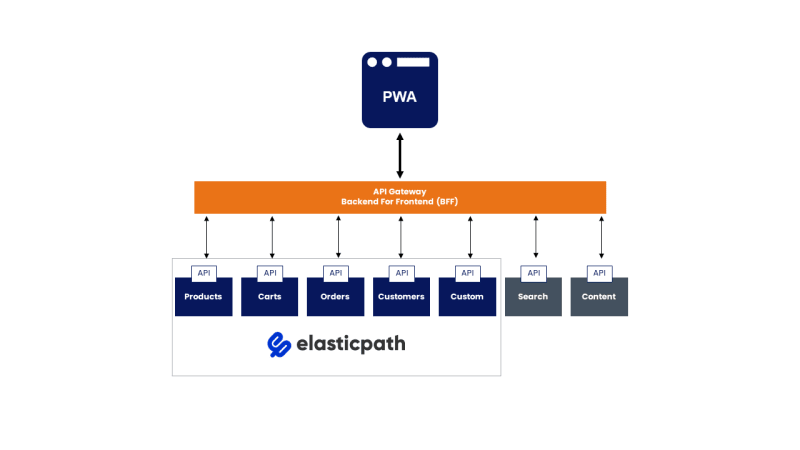

API-First services have transformed the eCommerce space as companies move to a more modular approach. While the space is still new, a few standard practices have already been identified.

The modular components that make up the customer experience vary with business needs. However, you will almost always see Search, a Content Management System (CMS), and a Commerce platform.

While companies may attempt to push products into a CMS, or adopt a commerce platform with built-in search, these attempts result in subpar performance and undermine a major benefit of a modular system: the ability to choose the best-of-breed option for every component.

Ideally, you want to find companies that follow a few technical principles:

API-First - Having a robust and well-thought-out API simplifies merging the different components.

Modular - Each service should be modular, allowing you to replace individual pieces if necessary. This is most often handled through distinct microservices.

Results Oriented - Companies should focus on solving business problems. Don’t get fooled by SaaS companies that only focus on trendy technology.

Scalable - Systems should be cloud-native and ready to scale to your sales volume.

For the UI layer (front end), a modern web framework is the best choice for creating a robust customer experience. This is usually a React, Vue.js, or Svelte application with the modern cross-device features associated with Progressive Web Apps (PWAs). The front end needs to match the scalability of the APIs and should be deployed with a Content Delivery Network (CDN). This may be a Jamstack or Transitional App deployment. If you use traditional Server-Side Rendering (SSR), it should be containerized, auto-scaled, and redundant.

So now that the pieces have been identified, the most pressing question is how to merge them into a single experience.

The Backend for Frontend (BFF)

One common approach is to leverage the front-end layer to aggregate the multiple APIs and handle any business logic.

This keeps the overall architecture simple, but it places a significant amount of pressure on the front-end development team. This approach can also be insufficient for sensitive operations such as tax calculation, which should not be exposed to the client.

A more robust approach is to place an API Gateway or Backend for Frontend (BFF) layer between the services and the UI application. To keep things simple, I will treat both as a middle layer between the APIs and the front end. This is an over-simplification that glosses over the differences between an API Gateway and a BFF, but it is useful in this context.

This new layer can be used for aggregating data, implementing business logic, and handling secure activities. Unfortunately, it also becomes a single point of failure for the entire system.
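To make the aggregation role concrete, here is a minimal TypeScript sketch of a BFF endpoint that fans out to three upstream services in parallel and applies one piece of business logic before answering the front end. The `Upstreams` interface, its method names, and the in-stock rule are hypothetical stand-ins, not any vendor's API.

```typescript
// Hypothetical shapes; a real BFF would map these to your search,
// CMS, and commerce APIs.
interface Product {
  sku: string;
  name: string;
  price: number;
  inStock: boolean;
}

interface ContentBlock {
  id: string;
  html: string;
}

// Hypothetical upstream clients injected into the BFF.
interface Upstreams {
  searchProducts(query: string): Promise<Product[]>;
  getContent(slug: string): Promise<ContentBlock[]>;
  getCartCount(cartId: string): Promise<number>;
}

// One payload per page, so the browser makes a single request.
interface CategoryPage {
  products: Product[];
  content: ContentBlock[];
  cartCount: number;
}

async function getCategoryPage(
  api: Upstreams,
  query: string,
  slug: string,
  cartId: string,
): Promise<CategoryPage> {
  // The three calls are independent, so run them concurrently:
  // total latency is roughly the slowest call, not the sum.
  const [products, content, cartCount] = await Promise.all([
    api.searchProducts(query),
    api.getContent(slug),
    api.getCartCount(cartId),
  ]);

  // Example business rule kept out of the front end: hide unavailable items.
  return {
    products: products.filter((p) => p.inStock),
    content,
    cartCount,
  };
}
```

Because the front end receives one aggregated payload, it makes a single round trip to the BFF instead of three round trips to separate services.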

In addition, it can become a performance bottleneck. Enterprise-class commerce software offers excellent performance and scalability; for example, Elastic Path Commerce Cloud offers a 100ms performance guarantee. If the BFF layer is slow, the entire system will be slow, and you fail to capitalize on the conversion-rate gains that a fast platform such as Elastic Path Commerce Cloud provides.
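One way to keep a slow upstream from slowing the whole page is to give each non-critical call a latency budget inside the BFF. The sketch below is an assumption about how you might structure this, not a feature of any particular platform: it resolves with a fallback value when the call fails or takes longer than `ms` milliseconds.

```typescript
// Resolve with the upstream result, or with `fallback` if the call
// fails or takes longer than `ms` milliseconds. Note: this does not
// cancel the underlying request; it only stops waiting for it.
function withTimeout<T>(work: Promise<T>, ms: number, fallback: T): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;

  const timeout = new Promise<T>((resolve) => {
    timer = setTimeout(() => resolve(fallback), ms);
  });

  // A failing non-critical call degrades to the fallback too.
  const guarded = work.catch(() => fallback);

  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([guarded, timeout]).finally(() => clearTimeout(timer));
}
```

For example, a hypothetical recommendations call could be wrapped as `withTimeout(getRecommendations(sku), 150, [])`, so the page renders without recommendations rather than waiting on them. Critical data such as price or tax should not be given a silent fallback.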

It’s critical that the BFF layer be hosted in a system that auto-scales and optimizes performance. Choosing the best architecture and cloud deployment can have a significant impact on site-speed and reliability.

Deploying the BFF

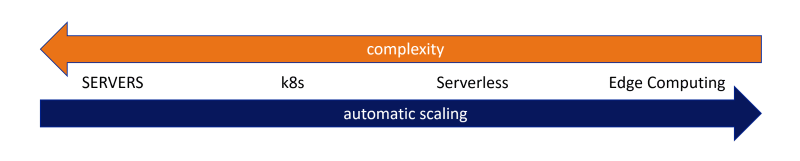

One option is a simple application hosted on a traditional server. A decade ago, this would have been the clear and obvious choice. Compared to the options available today, this monolithic, traditional design has many limitations and requires a high degree of maintenance. The lack of auto-scaling means over-provisioning capacity for peak traffic, resulting in higher costs.

Kubernetes (k8s) and containers quickly took over as the leading option for deploying software. A k8s cluster allows the BFF to be built as a set of microservices, each independently deployed and automatically scaled. Many architecture guides describe this approach. Kubernetes is an excellent enterprise-grade platform and the best fit for complex applications that require multiple services, databases, and API gateways.

Source: https://kubernetes.io/docs/concepts/overview/components/

A BFF layer is often quite simple, with no database requirements and just the need to call multiple APIs. For these basic requirements, less expensive options exist that are easier to set up, maintain, and push to multiple regions.

To reduce maintenance costs, consider a serverless solution. This may be a managed container service or Functions-as-a-Service (FaaS), both of which are extremely easy to use and scale. With a pay-per-use model, serverless is much cheaper than an over-provisioned traditional server, but it may be more expensive than a k8s cluster. Serverless does have drawbacks around cold starts, but these are quickly improving.

With each new deployment option, the amount of code and infrastructure required shrinks and auto-scaling becomes more streamlined. The responsibility for deploying and scaling the BFF shifts from the development team to the cloud provider.

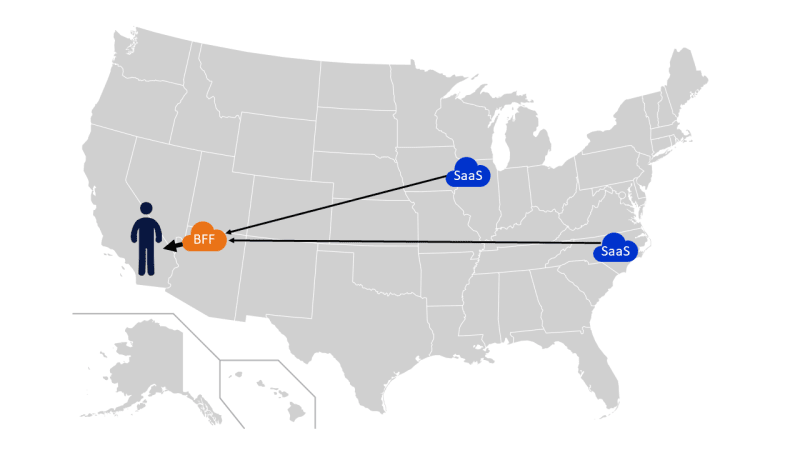

This brings us to Edge Computing. Content delivery networks (CDNs) have been used for years to bring static content closer to end users through distributed servers and caching. In the same way, edge computing brings serverless function execution closer to end users, reducing overall latency.
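Distance puts a physical floor under latency. As a rough sketch, the snippet below estimates the minimum possible round-trip time between a user and a server from the great-circle (haversine) distance and the speed of light in optical fiber, about 200 km per millisecond. The coordinates in the example call are illustrative assumptions, not the regions used in the test.

```typescript
// Light in optical fiber travels at roughly 2/3 of c: about 200 km per ms.
const FIBER_KM_PER_MS = 200;
const EARTH_RADIUS_KM = 6371;

// Great-circle distance between two lat/lon points (haversine formula).
function greatCircleKm(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const rad = (deg: number) => (deg * Math.PI) / 180;
  const dLat = rad(lat2 - lat1);
  const dLon = rad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(rad(lat1)) * Math.cos(rad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
}

// Theoretical minimum round trip: there and back at fiber speed.
function minRttMs(distanceKm: number): number {
  return (2 * distanceKm) / FIBER_KM_PER_MS;
}

// Illustrative: a user in Sydney calling a function in northern Virginia.
const sydneyToVirginia = greatCircleKm(-33.87, 151.21, 38.95, -77.45);
console.log(`${sydneyToVirginia.toFixed(0)} km, RTT >= ${minRttMs(sydneyToVirginia).toFixed(0)} ms`);
```

For a Sydney user and a function in northern Virginia, this floor is already well over 100 ms. Real network paths are longer than the great circle and add routing and connection setup, so measured latency is higher; only moving the code closer to the user lowers this floor.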

To measure the impact of edge computing, a JavaScript function was deployed both as a standard AWS Lambda behind API Gateway and as a Lambda@Edge function on CloudFront. The two API endpoints were then performance tested from a variety of locations.

* Singapore was removed from the results graph for better readability.

Dataset from the standard Lambda deployment (response times in ms):

| Location | Test 1 | Test 2 | Test 3 | Test 4 | Test 5 | Test 6 | Test 7 | Test 8 | Test 9 | Test 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Amsterdam | 390 | 400 | 421 | 398 | 413 | 370 | 394 | 398 | 377 | 404 |
| London | 394 | 367 | 405 | 355 | 361 | 358 | 359 | 358 | 360 | 353 |
| New York | 67 | 71 | 64 | 65 | 62 | 78 | 65 | 71 | 72 | 67 |
| Dallas | 177 | 167 | 181 | 178 | 177 | 255 | 176 | 210 | 167 | 254 |
| San Francisco | 320 | 338 | 329 | 328 | 311 | 323 | 318 | 321 | 311 | 330 |
| Singapore | 969 | 958 | 102000 | 975 | 990 | 974 | 970 | 982 | 972 | 974 |
| Sydney | 915 | 905 | 905 | 911 | 896 | 908 | 923 | 911 | 913 | 900 |
| Tokyo | 733 | 768 | 745 | 716 | 702 | 734 | 717 | 758 | 744 | 716 |
| Bangalore | 923 | 934 | 926 | 959 | 897 | 906 | 922 | 911 | 915 | 928 |

Dataset from the Lambda@Edge deployment (response times in ms):

| Location | Test 1 | Test 2 | Test 3 | Test 4 | Test 5 | Test 6 | Test 7 | Test 8 | Test 9 | Test 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Amsterdam | 63 | 30 | 22 | 30 | 67 | 23 | 27 | 75 | 61 | 55 |
| London | 18 | 27 | 23 | 20 | 23 | 29 | 32 | 35 | 16 | 27 |
| New York | 141 | 103 | 104 | 122 | 108 | 114 | 107 | 112 | 109 | 121 |
| Dallas | 123 | 108 | 108 | 43 | 47 | 70 | 117 | 114 | 92 | 57 |
| San Francisco | 43 | 44 | 42 | 39 | 42 | 44 | 67 | 82 | 81 | 94 |
| Singapore | 17 | 18 | 100 | 22 | 21 | 99 | 22 | 21 | 25 | 78 |
| Sydney | 27 | 26 | 30 | 26 | 26 | 38 | 29 | 28 | 32 | 46 |
| Tokyo | 31 | 34 | 63 | 39 | 28 | 68 | 45 | 32 | 45 | 94 |
| Bangalore | 57 | 89 | 56 | 95 | 56 | 86 | 81 | 87 | 57 | 105 |
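To compare the two deployments location by location, the sketch below computes the median of each row of the two tables above and the resulting speedup. The median is used instead of the mean because a single outlier, such as the 102000 ms sample in Singapore's standard Lambda results, would otherwise dominate that location's average.

```typescript
type Dataset = Record<string, number[]>;

// Response times (ms) copied from the standard Lambda table.
const lambda: Dataset = {
  Amsterdam: [390, 400, 421, 398, 413, 370, 394, 398, 377, 404],
  London: [394, 367, 405, 355, 361, 358, 359, 358, 360, 353],
  "New York": [67, 71, 64, 65, 62, 78, 65, 71, 72, 67],
  Dallas: [177, 167, 181, 178, 177, 255, 176, 210, 167, 254],
  "San Francisco": [320, 338, 329, 328, 311, 323, 318, 321, 311, 330],
  Singapore: [969, 958, 102000, 975, 990, 974, 970, 982, 972, 974],
  Sydney: [915, 905, 905, 911, 896, 908, 923, 911, 913, 900],
  Tokyo: [733, 768, 745, 716, 702, 734, 717, 758, 744, 716],
  Bangalore: [923, 934, 926, 959, 897, 906, 922, 911, 915, 928],
};

// Response times (ms) copied from the Lambda@Edge table.
const edge: Dataset = {
  Amsterdam: [63, 30, 22, 30, 67, 23, 27, 75, 61, 55],
  London: [18, 27, 23, 20, 23, 29, 32, 35, 16, 27],
  "New York": [141, 103, 104, 122, 108, 114, 107, 112, 109, 121],
  Dallas: [123, 108, 108, 43, 47, 70, 117, 114, 92, 57],
  "San Francisco": [43, 44, 42, 39, 42, 44, 67, 82, 81, 94],
  Singapore: [17, 18, 100, 22, 21, 99, 22, 21, 25, 78],
  Sydney: [27, 26, 30, 26, 26, 38, 29, 28, 32, 46],
  Tokyo: [31, 34, 63, 39, 28, 68, 45, 32, 45, 94],
  Bangalore: [57, 89, 56, 95, 56, 86, 81, 87, 57, 105],
};

// Median of a list; robust to a single extreme sample, unlike the mean.
function median(values: number[]): number {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

// Per-location medians side by side; speedup > 1 means the edge was faster.
function compare(regional: Dataset, atEdge: Dataset) {
  return Object.keys(regional).map((location) => {
    const r = median(regional[location]);
    const e = median(atEdge[location]);
    return { location, regional: r, atEdge: e, speedup: r / e };
  });
}

console.table(compare(lambda, edge));
```

The comparison also makes the exceptions visible: a location whose speedup is below 1 was served faster by the single-region Lambda.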

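This large improvement is expected. While the standard Lambda is deployed to a single region, the Lambda@Edge function is replicated across CloudFront's global network and executes at an AWS location close to each user. The one exception in this test is New York, where the single-region Lambda was faster.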

At the time of writing, edge computing has some major limitations (see https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/edge-functions-restrictions.html):

- It is limited to basic functions.

- Lambda@Edge supports only Node.js and Python.

- Memory and processor limits are strict.

- There is no data storage at edge locations, and there is no data replication.

These limitations mean you can’t host a full application on the edge, but the requirements for a BFF fit well into this model.

Benefits of Edge Computing

Latency - The obvious benefit of edge computing is lowering latency and providing faster response times to end users.

Even if the edge function needs to call back to a central region for data access, you will still see performance benefits from using it as your BFF.

Otherwise, you may have data bouncing around the globe before it finds its way to your end user, even when the locations are well optimized. And if this layer is used to server-render the website, the final HTML will be significantly larger than the streamlined JSON coming from your APIs, so rendering close to the user means only the compact JSON makes the long trip.

Data intensive HTML at Edge

Reliability - Without any infrastructure to manage, the code will be deployed to hundreds of global servers. This is significantly more redundancy than the standard container-based solution.

Scalability - Your BFF gets set-it-and-forget-it scalability. With no configuration needed, each server auto-scales your code based on local demand.

Data Residency - Since the user connects to a nearby server location, data moving through your BFF layer is processed within their geographic region. Any calls the edge function makes back to a central region will still move that data out of the region.

Price - Edge computing takes a serverless model where you only pay for what is used.

Ecommerce Stats - Conversion rates and page load time are inversely related: even small performance improvements can have a large impact on overall revenue. From the Akamai Online Retail Performance Report:

- A 100ms delay led to a 7% drop in sales

- A 2s delay increased the bounce rate by 103%

- 53% of smartphone users don’t convert if load time is over 3s

- Load times of 1.8-2.7s were optimal for sales

- 28% of users won’t return to slow sites

- Webpages with the most sales loaded 26% faster than competitors' pages

Getting Started

Many companies have already converted to a modular API-first approach. This provides flexibility to design an ideal software stack, more personalized customer experiences, unparalleled scalability, and increased speed to market for new initiatives. Switching away from a legacy monolith is the first step towards improving site performance. You can take a DIY approach with the numerous headless commerce offerings available, or you can work with Elastic Path, who pioneered composable commerce, to help guide this transition.

For those already benefiting from composable commerce, the move towards edge computing may be the next step to improving performance.

Functions can be deployed to Lambda@Edge directly using tools such as AWS Amplify or the Serverless Framework. This may be the best approach if you are migrating an existing BFF from containers or a standard serverless setup to edge computing.
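For a direct deployment, a Lambda@Edge function attached to a CloudFront viewer-request or origin-request trigger can answer BFF requests itself and let everything else continue to the origin. The sketch below uses hand-written types covering only the fields it reads (the `@types/aws-lambda` package provides fuller types such as `CloudFrontRequestEvent`); the `/bff/` path prefix and the JSON payload are hypothetical.

```typescript
// Minimal hand-written types: only the fields this sketch uses.
interface CfHeader {
  key?: string;
  value: string;
}
type CfHeaders = Record<string, CfHeader[]>;
interface CfRequest {
  uri: string;
  method: string;
  querystring: string;
  headers: CfHeaders;
}
interface CfEvent {
  Records: { cf: { request: CfRequest } }[];
}
interface CfResponse {
  status: string;
  statusDescription: string;
  headers: CfHeaders;
  body: string;
}

export async function handler(event: CfEvent): Promise<CfRequest | CfResponse> {
  const request = event.Records[0].cf.request;

  // Returning the request unchanged forwards it on to the origin.
  if (!request.uri.startsWith("/bff/")) {
    return request;
  }

  // A real BFF would call and aggregate upstream APIs here.
  const payload = { path: request.uri, servedAt: "edge" };

  // Returning a response object answers the viewer directly from the edge.
  return {
    status: "200",
    statusDescription: "OK",
    headers: {
      "content-type": [{ key: "Content-Type", value: "application/json" }],
      "cache-control": [{ key: "Cache-Control", value: "no-store" }],
    },
    body: JSON.stringify(payload),
  };
}
```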

For new projects, modern front-end JavaScript frameworks should be considered.

Next.js 12+ includes an Edge Runtime and Edge API Routes, allowing new BFF APIs or server rendering to take full advantage of edge computing.
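A minimal sketch of a Next.js Edge API Route follows, assuming the `pages/api` directory; the route name and response body are hypothetical. The runtime flag has changed across versions: Next.js 13 and later use `runtime: "edge"`, while Next.js 12.2 and 12.3 used `"experimental-edge"`, so check the documentation for the version you run.

```typescript
// pages/api/catalog.ts (hypothetical route)

// Opt this API route into the Edge Runtime (Next.js 13+ value).
export const config = { runtime: "edge" };

// Edge API Routes receive a standard Web Request and return a Response.
export default async function handler(req: Request): Promise<Response> {
  const query = new URL(req.url).searchParams.get("q") ?? "";

  // A real BFF would call and aggregate upstream APIs here with fetch().
  const body = { query, items: [] as string[] };

  return new Response(JSON.stringify(body), {
    status: 200,
    headers: { "content-type": "application/json" },
  });
}
```

Because the handler uses only Web-standard `Request` and `Response`, the same BFF logic lives in the front-end project and deploys to the edge without a separate service.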

SvelteKit also includes adapters supporting the edge computing options of Cloudflare, Netlify, and Vercel, with additional community adapters being added as it moves towards v1.0.

These modern solutions allow the BFF to be coded within the same project as the front-end and then easily deployed to Edge Computing for the best possible performance.

Elastic Path has a new open-source front-end starter kit available on GitHub (https://github.com/elasticpath/d2c-starter-kit) that uses Next.js. If you’d like to learn more about adopting the best eCommerce platform with a modern front-end solution, you can find out more at elasticpath.com.
