Introduction

When dealing with a database, anytime you need to extract data, you need to execute an operation called query. However, queries only return the data that already exists in the database. But this aggregation operation enables you to analyze your data to zero in on patterns or other information about the data.

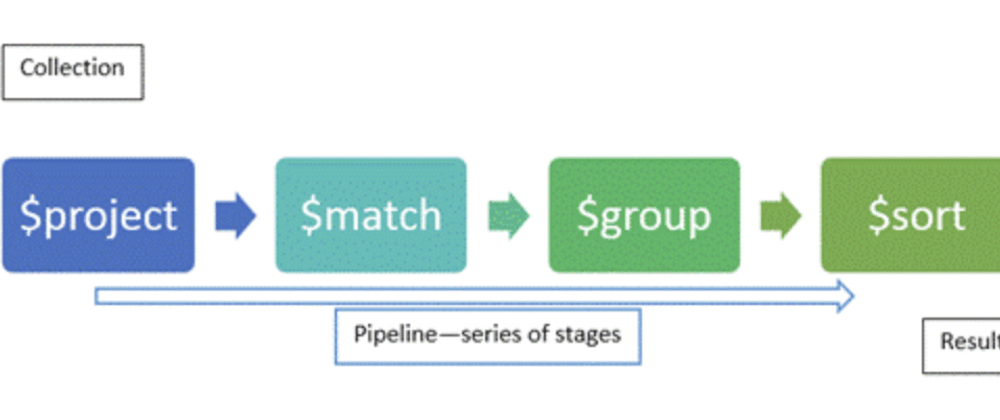

MongoDB aggregation pipelines is just series of instructions called stages that help you perform operations like Sorting, Filtering, Grouping and Transforming etc.

Why

The Aggregation Framework is flexible and used for many different data processing and manipulation tasks. Some typical example uses are for:

- Analytics

- Real time dashboards

- Preparing data to present via views

- Joining data from different collections

- Post processing of data

- Data masking to hide sensitive data

- ...and many more

Tip 1: When To Use $set, $unset & $project

You should use these stages when you need to retain most of the fields from the input records, and you want to add, update or remove a small subset of fields from the input.

For example, imagine there is a collection of user documents similar to the following:

// INPUT (a record from the source collection to be operated on by an aggregation)

{

_id: ObjectId("6044faa70b2c21f8705d8954"),

first_name: "Maurice",

last_name: "Moss",

email: "Maurice.Moss@gmail.com",

registered: true,

dob: "1983-08-31T23:59:59.736Z",

age:39,

address: {

street: "123 Fake St",

city: "Springfield",

state: "IL",

zip: "12345"

}

}

Then imagine an aggregation pipeline is required to produce modified versions of the documents, as shown below:

// OUTPUT (a record in the results of the executed aggregation)

{

name: "Maurice Moss", // Added a new name field

first_name: "Maurice",

last_name: "Moss",

email: "Maurice.Moss@gmail.com",

registered: true,

dob: ISODate("1983-08-31T23:59:59.736Z"), // Field type converted from text

age:39,

address: {

street: "123 Fake St",

city: "Springfield",

state: "IL",

zip: "12345"

}

}

Here, shown by the // comments, there was a requirement to modify each document's structure slightly, to convert the dob text field into a proper date field, and add a new name field, set to the value "first_name + last_name", for every record.

Naively you might decide to build an aggregation pipeline using a $project stage to achieve this transformation, which would probably look similar to the following:

//BAD

[

{

$project: {

// Modify a field + add a new field

name: { $concat: [ "$first_name", " ", "$last_name" ] },

dob: { $dateFromString: {"dateString": "$dob"} },

// Must now name all the other fields for those fields to be retained

email: 1,

registered: 1,

age: 1,

address: 1,

// Remove _id field

_id: 0

}

}

]

As you can see, this pipeline stage length can become quite lengthy based on the input document. Since you use a $project to modify or add fields, you must also explicitly mention each other existing fields from the source records for inclusion. Imagine if your source document has hundreds of fields!

A better approach to achieve the same results, would be to use $set and $unset instead, as shown below:

[

{$set: {

// Modified + new field

name: { $concat: [ "$first_name", " ", "$last_name" ] },

dob: { $dateFromString: {"dateString": "$dob"} },

}},

{$unset: [

// Remove _id field

_id,

]},

]

Notice how easy it gets with $set and $unset when you have hundreds of fields in the source document and you just need to modify few of them.

It is best to use a $project stage when the required shape of output documents is very different from the input documents' shape. This situation often arises when you do not need to include most of the original fields.

Tip 2: Using Explain Plans

While writing queries, it is important to view the explain plan for a query to determine if you have used the appropriate index and if you need to optimize the query.

The same applies to the aggregation pipelines and the ability to view an explain plan for the executed pipeline. It is very critical in aggregations because you generally tend to have more complex logic.

To view the explain plan for an aggregation pipeline, you can execute commands such as following

const pipeline = [{"$match": {"name": "Jo"}}]

db.users.explain().aggregate(pipeline);

Tip 3: Streaming Vs Blocking Stages

When executing an aggregation pipeline, the database engine pulls records from the initial query cursor generated against the source collection. It then attempts to stream each batch through the aggregation pipeline stages. If your have so called _streaming stages _ the database engine will process one batch and then immediately stream it to the next stage. It will do without waiting for other batches to arrive.

However, there are two types of stages that must block and wait for all batches to arrive. Therefore they are referred as blocking stages

- $sort

- $group

If you think about it, it becomes evident that they have to block. Because, if the stage only sort/group each batch content and not wait for other batches, the output records would sorted with in the batch but not whole result set.

These un-avoidable blocking stage increase your execution time by reducing concurrency. If used carelessly, significant increase in memory consumption and slow throughput is inevitable.

Tip 4: Match Filters To Appear Early In The Pipeline

According to mongoDB docs, their engine will do its best to optimize the pipeline at runtime. Especially, steps like moving $match stages to the top of the pipeline.

However, it may not always be possible to promote $match filters for database engine.

Sometimes, a $match stage is defined later in a pipeline to perform a filter on a field that the pipeline computed in an earlier stage. The computed field isn't present in the pipeline's original input collection.

So you as a developer, have to think and promote the $match stage to top level with appropriate fields rather than using computed fields from earlier stages.

Thanks for reading. You can find more best practices at MongoDB docs.

Top comments (0)