Whitepaper Version 1.7 - This is the original Whitepaper of the Itheum Protocol, published in 2022. The protocol has evolved significantly in implementation, but the vision of the Itheum Protocol as detailed in this Whitepaper remains the same. To understand the current state of the Itheum Protocol, please read this Whitepaper in combination with the up-to-date documentation located at https://docs.itheum.io

Abstract

Itheum is the world's first decentralized data brokerage platform that transforms your personal data into a highly tradable asset class. It provides Data Creators and Data Consumers with the tools required to "bridge" highly valuable personal data from web2 into web3 and then trade that data with a seamless UX built on top of blockchain technology and decentralized governance. We provide the end-to-end platform required for personal data to be made available in web3 for the first time in history, enabling many more wonderful and complex real-world use cases to enter the web3 ecosystem. Itheum provides the core, cross-chain web3 infrastructure required to enable personal data ownership, data sovereignty, and fair compensation for data usage, and this positions Itheum as the data platform for the Web3 and Metaverse Era.

Table Of Contents

Disclaimers

Introduction

What is Itheum?

Our Core Products

1) Data Collection & Analytics Toolkit (Data CAT)

2) Decentralized Data Exchange (Data DEX)

3) Data Metaverse

Decentralized Data Trade

Types of Direct Trade

Methods for Buying Access to Data

Our 5-stage Product Development Process

Data DEX Components

Peer to Peer Data Trade

Data NFTs

Data Coalitions

Personal Data Proofs

Data Streams

Data Vault

Trusted Computation Framework

Regional Decentralization Hubs

The Data Metaverse and NFMe ID Avatar Technology

Technology Philosophy

Multi-Chain Strategy

Multi-Chain Technical Design Goals

Cross Chain Tokens

Data Trading Fees

Distribution of Community Fee Rewards

Claims Portal

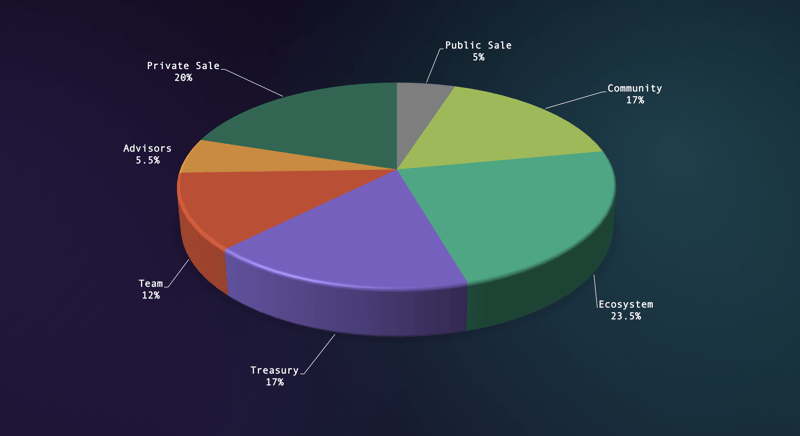

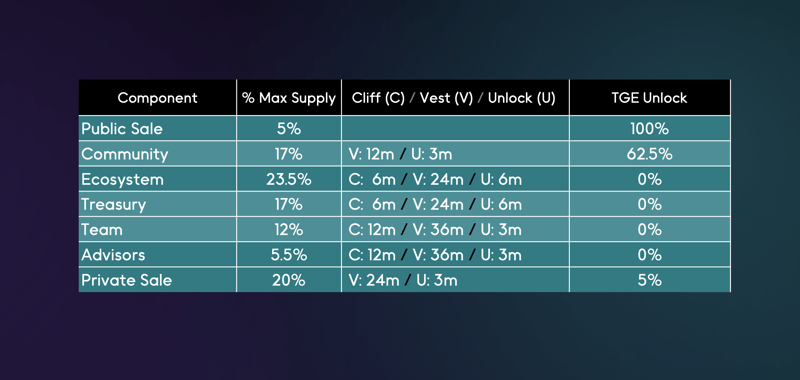

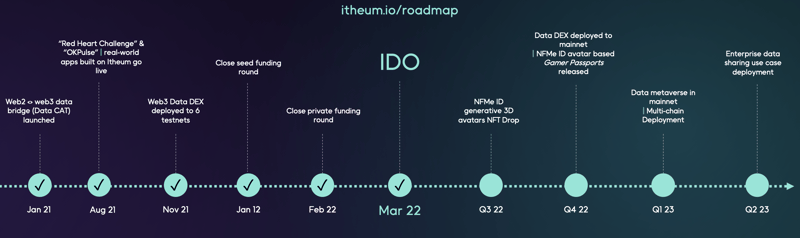

Itheum Token (Tokenomics)

$ITHEUM Staking

Decentralized Governance

Data Collection & Analytics Toolkit Components (Web2 Data Bridge)

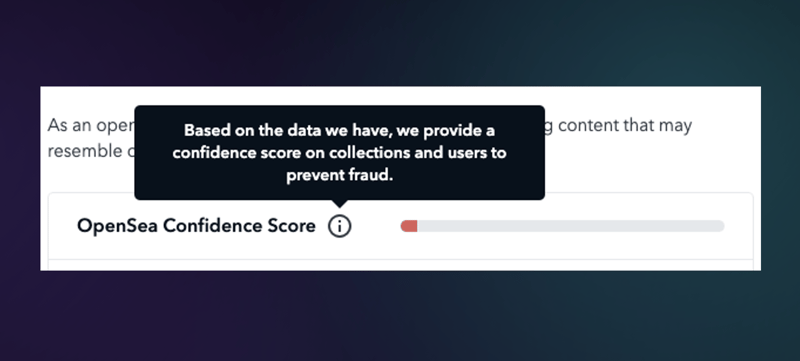

Fraud Detection — “Gaming” the system

Key Terms of Reference

RISKS

Disclaimers

PLEASE READ THE ENTIRETY OF THIS "DISCLAIMER" SECTION CAREFULLY. NOTHING HEREIN CONSTITUTES LEGAL, FINANCIAL, BUSINESS OR TAX ADVICE AND YOU ARE STRONGLY ADVISED TO CONSULT YOUR OWN LEGAL, FINANCIAL, TAX OR OTHER PROFESSIONAL ADVISOR(S) BEFORE ENGAGING IN ANY ACTIVITY IN CONNECTION HEREWITH. NEITHER ITHEUM LIMITED (THE COMPANY), ANY OF THE PROJECT TEAM MEMBERS (THE ITHEUM TEAM) WHO HAVE WORKED ON THE ITHEUM PLATFORM (AS DEFINED HEREIN) OR PROJECT TO DEVELOP THE ITHEUM PLATFORM IN ANY WAY WHATSOEVER, ANY DISTRIBUTOR/VENDOR OF $ITHEUM TOKENS (THE DISTRIBUTOR), NOR ANY SERVICE PROVIDER SHALL BE LIABLE FOR ANY KIND OF DIRECT OR INDIRECT DAMAGE OR LOSS WHATSOEVER WHICH YOU MAY SUFFER IN CONNECTION WITH ACCESSING THE PAPER, DECK OR MATERIAL RELATING TO $ITHEUM (THE TOKEN DOCUMENTATION) AVAILABLE ON THE WEBSITE AT HTTPS://WWW.ITHEUM.IO/ (THE WEBSITE, INCLUDING ANY SUB-DOMAINS THEREON) OR ANY OTHER WEBSITES OR MATERIALS PUBLISHED BY THE COMPANY FROM TIME TO TIME.

This Whitepaper and any other documents published in association with it including the related token sale terms and conditions (the Documents) relate to a potential token (Token) offering to persons (contributors) in respect of the intended development and use of the network by various participants. The Documents do not constitute an offer of securities or a promotion, invitation or solicitation for investment purposes.

The purchase of Tokens involves significant risks and prior to purchasing them, you should carefully assess and take into account the potential risks including those described in the Documents and on our website.

Although there may be speculation on the value of the Tokens, we disclaim any liability for the use of Tokens in this manner. A market in the Tokens may not emerge and there is no guarantee of liquidity in the trading of the Tokens nor that any markets will accept them for trading.

By accessing the Token Documentation or the Website (or any part thereof), you shall be deemed to represent and warrant to the Company, the Distributor, their respective affiliates, and the Itheum team as follows: (a) in any decision to acquire any $ITHEUM, you have not relied on and shall not rely on any statement set out in the Token Documentation or the Website; (b) you will and shall at your own expense ensure compliance with all laws, regulatory requirements and restrictions applicable to you (as the case may be); (c) you acknowledge, understand and agree that $ITHEUM may have no value, there is no guarantee or representation of value or liquidity for $ITHEUM, and $ITHEUM is not an investment product nor is it intended for any speculative investment whatsoever; (d) none of the Company, the Distributor, their respective affiliates, and/or the Itheum team members shall be responsible for or liable for the value of $ITHEUM, the transferability and/or liquidity of $ITHEUM and/or the availability of any market for $ITHEUM through third parties or otherwise; and (e) you acknowledge, understand and agree that you are not eligible to participate in the distribution of $ITHEUM if you are a citizen, national, resident (tax or otherwise), domiciliary and/or green card holder of a geographic area or country (i) where it is likely that the distribution of $ITHEUM would be construed as the sale of a security (howsoever named), financial service or investment product and/or (ii) where participation in token distributions is prohibited by applicable law, decree, regulation, treaty, or administrative act (including without limitation the United States of America, Canada, and the People's Republic of China); and to this effect you agree to provide all such identity verification documents when requested in order for the relevant checks to be carried out.

The Company, the Distributor and the Itheum team do not and do not purport to make, and hereby disclaim, all representations, warranties or undertakings to any entity or person (including without limitation warranties as to the accuracy, completeness, timeliness, or reliability of the contents of the Token Documentation or the Website, or any other materials published by the Company or the Distributor). To the maximum extent permitted by law, the Company, the Distributor, their respective affiliates and service providers shall not be liable for any indirect, special, incidental, consequential or other losses of any kind, in tort, contract or otherwise (including, without limitation, any liability arising from default or negligence on the part of any of them, or any loss of revenue, income or profits, and loss of use or data) arising from the use of the Token Documentation or the Website, or any other materials published, or its contents (including without limitation any errors or omissions) or otherwise arising in connection with the same. Prospective acquirors of $ITHEUM should carefully consider and evaluate all risks and uncertainties (including financial and legal risks and uncertainties) associated with the distribution of $ITHEUM, the Company, the Distributor and the Itheum team.

$ITHEUM tokens are designed to be utilised, and that is the goal of the $ITHEUM distribution. In particular, it is highlighted that $ITHEUM: (a) does not have any tangible or physical manifestation, and does not have any intrinsic value (nor does any person make any representation or give any commitment as to its value); (b) is non-refundable and cannot be exchanged for cash (or its equivalent value in any other digital asset) or any payment obligation by the Company, the Distributor or any of their respective affiliates; (c) does not represent or confer on the token holder any right of any form with respect to the Company, the Distributor (or any of their respective affiliates), or their revenues or assets, including without limitation any right to receive future dividends, revenue, shares, ownership right or stake, share or security, any voting, distribution, redemption, liquidation, proprietary (including all forms of intellectual property or licence rights), right to receive accounts, financial statements or other financial data, the right to requisition or participate in shareholder meetings, the right to nominate a director, or other financial or legal rights or equivalent rights, or intellectual property rights or any other form of participation in or relating to the Itheum platform, the Company, the Distributor and/or their service providers; (d) is not intended to represent any rights under a contract for differences or under any other contract the purpose or pretended purpose of which is to secure a profit or avoid a loss; (e) is not intended to be a representation of money (including electronic money), security, commodity, bond, debt instrument, unit in a collective investment scheme or any other kind of financial instrument or investment; (f) is not a loan to the Company, the Distributor or any of their respective affiliates, is not intended to represent a debt owed by the Company, the Distributor or any of their respective affiliates, and there is no expectation of profit; and (g) does not provide the token holder with any ownership or other interest in the Company, the Distributor or any of their respective affiliates.

Notwithstanding the $ITHEUM distribution, users have no economic or legal right over or beneficial interest in the assets of the Company, the Distributor, or any of their affiliates after the token distribution.

To the extent a secondary market or exchange for trading $ITHEUM does develop, it would be run and operated wholly independently of the Company, the Distributor, the distribution of $ITHEUM and the Itheum platform. Neither the Company nor the Distributor will create such secondary markets nor will either entity act as an exchange for $ITHEUM.

This Whitepaper describes a future project and contains forward-looking statements that are based on our beliefs and assumptions at the present time. The project envisaged in this Whitepaper is under development and being constantly updated and accordingly, if and when the project is completed, it may differ significantly from the project set out in this whitepaper. No representation or warranty is given as to the achievement or reasonableness of any plans, future projections or prospects and nothing in the Documents is or should be relied upon as a promise or representation as to the future.

The laws of various jurisdictions may apply to the Tokens. The regulatory treatment of the Tokens may change from time to time which may affect the offering and use of the Token and the development of the project described in this Whitepaper.

Introduction

Every day, billions of people give away their personal data to organizations in return for some service or product. They sign up to apps, websites and social networks, make online purchases, use digital banking for their day-to-day transactions, use wearables to monitor their health, and use countless other digital services run by commercial, profit-seeking organizations that absorb their personal data and put it into locked-up data silos.

These organizations then use your personal data to learn more about you and people like you. They then create more products and services for you to buy and get hooked on. They call this "stickiness", and their objective is for you to keep coming back and to share more data.

Some organizations (usually the largest and most influential) can even use your data to influence your thinking and ideas, or even resell your data to 3rd Party Data Brokers (independent commercial organizations). These 3rd Party Data Brokers lurk in the shadows and "deal in the personal data trade", making millions of dollars of profit by packaging and selling your data to other organizations. There are over 4000 of these Data Brokers in the world today, and they have over 3000 data points collected on each one of us! The entire Data Brokerage industry is worth over USD 200 billion each year, and yet you, the person generating this data, have no idea how it is used, nor do you get any form of compensation.

It gets more unnerving when we realize that as the ever-growing digitization of our physical world gives birth to the hyper-digitized "Metaverse", the amount of data we generate will also grow at an exponential rate - and all this "new" data will also be "harvested" from us.

At Itheum, we believe that YOUR DATA IS YOUR BUSINESS!

What is Itheum?

Itheum wants to change this current toxic model for personal data collection and exchange and level the playing field — where the commercial enterprise and “you” (the Data Creator) equally benefit from the trade of personal data.

Itheum empowers data ownership in the metaverse and brings new market value to your data. It enables this by providing “decentralized data brokerage” technology. It’s a suite of tools that enables high-value data to be bridged from web2 to web3 and then be traded peer-to-peer using blockchain technology. It allows for “viral adoption” via our creative NFMe ID (Non-Fungible Me ID) data-backed 3D NFT avatars, "Data NFT" data licensing technology, and our innovative Data Coalition DAOs (DAO entities that can bulk-trade your data with governance and oversight). It also aims to be fully privacy-preserving, regulation-friendly, and cross-chain; making it the most comprehensive core blockchain data infrastructure available in the market with use cases in both the enterprise and consumer space.

It provides 3 fundamental groups of products that, when used together, will flip the dynamic of personal data collection, brokerage, and trade from a fully top-down model to a more inclusive bottom-up model.

Our Core Products

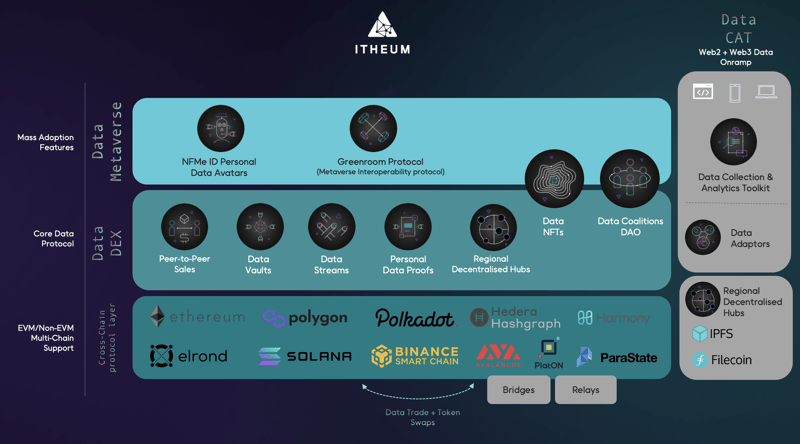

Itheum has 3 Core Products that work in unison to enable new data to be generated and collected from the web2 world and for that data to then flow seamlessly into the web3 domain (via our Data CAT - Collection & Analytics Toolkit). This data can be claimed by the Data Creators (users who originally generated the data) and traded using our innovative peer-to-peer data trading technology (via our Data DEX).

For a platform like Itheum to reach its highest potential, it also needs mass adoption (more users = more data = more data trade). We aim to achieve this via our final core product group, called the Data Metaverse, where we provide "viral", "sexy" and "low barrier to entry" consumer products that will appeal to the masses and in turn fuel mass adoption. The general idea is to make the topic of data ownership less complex to understand, so that it evolves into an attractive new technology area for the masses to explore and participate in.

1) Data Collection & Analytics Toolkit (Data CAT)

Tools for structured and rich personal data collection and analytics.

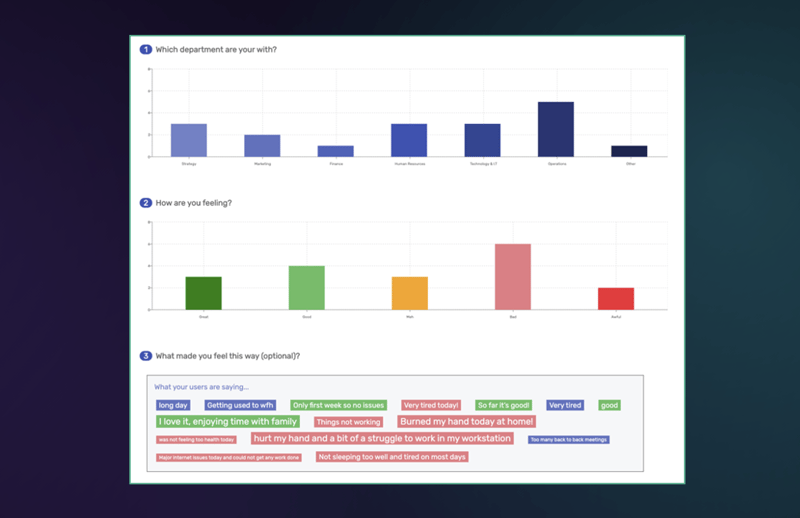

Anyone can use Itheum's Data CAT to seamlessly build apps and programs that collect structured and rich personal data from users and also provide visual trends and patterns on the collected data (usually using fully anonymous or semi-anonymous analytics to protect the “Data Creator”, i.e. the user who generated the original data).
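To make the "fully anonymous or semi-anonymous analytics" idea more concrete, here is a minimal Python sketch of one common approach; it is not Itheum's actual implementation. It assumes that raw user IDs are replaced by salted SHA-256 pseudonyms and that any group with fewer than a hypothetical `MIN_COHORT` distinct users is left out of the reported trends, so a single Data Creator cannot be singled out. The names `pseudonymize` and `cohort_averages` are illustrative only.

```python
import hashlib
from collections import defaultdict
from statistics import mean

MIN_COHORT = 3  # hypothetical suppression threshold, not a documented Itheum parameter


def pseudonymize(user_id: str, salt: str) -> str:
    """Replace a raw user ID with a salted SHA-256 digest."""
    return hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()


def cohort_averages(readings, salt: str, min_cohort: int = MIN_COHORT) -> dict[str, float]:
    """Average one metric per group, hiding any group with too few distinct users.

    `readings` is an iterable of (user_id, group, value) tuples, for example
    ("u1", "30-39", 122.0) for a systolic blood pressure reading.
    """
    values = defaultdict(list)   # group -> metric values
    members = defaultdict(set)   # group -> pseudonymous user IDs (raw IDs are not kept)
    for user_id, group, value in readings:
        values[group].append(value)
        members[group].add(pseudonymize(user_id, salt))
    return {
        group: round(mean(vals), 1)
        for group, vals in values.items()
        if len(members[group]) >= min_cohort
    }
```

With three users in a "30-39" group and a single user in a "40-49" group, only the first group's average is reported; the single-user group is suppressed rather than exposed.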

With this product, Itheum provides real-world value and adoption, and in return we generate highly structured and outcome-oriented personal datasets (i.e. we normalize what user data looks like across organizational silos).

This product is essentially a fully-featured personal data and analytics platform offered as a PaaS (platform as a service) to organizations. For example:

A health and wellness company can embed the Itheum Data CAT into its apps, enabling it to collect health data such as blood pressure, fitness/activity and sleep quality, and then visualize trends and patterns across its app user base.

A financial organization can use the Itheum Data CAT to collect scheduled data via customized surveys or questionnaires triggered by certain actions related to spending patterns; the resulting trends and patterns can then give it more context on its customers’ spending habits.
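The financial example relies on questionnaires that fire when spending meets some condition. The sketch below shows one way such a rule could be evaluated for a single user's transactions in a period; the `SurveyTrigger` type, the "total spend in a category above a limit" rule and all identifiers are hypothetical, since this paper does not define how Data CAT triggers are configured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SurveyTrigger:
    """Hypothetical rule: send `survey_id` when spending in `category` exceeds `limit`."""
    category: str
    limit: float
    survey_id: str


def surveys_to_send(transactions: list[tuple[str, float]],
                    triggers: list[SurveyTrigger]) -> list[str]:
    """Return the IDs of surveys whose spending rule fired.

    `transactions` holds (category, amount) pairs for one user in the current period.
    """
    totals: dict[str, float] = {}
    for category, amount in transactions:
        totals[category] = totals.get(category, 0.0) + amount
    # A trigger fires only when the period total strictly exceeds its limit
    return [t.survey_id for t in triggers if totals.get(t.category, 0.0) > t.limit]
```

For instance, with a 300.0 limit on "dining", two dining transactions of 200.0 and 150.0 would fire the dining questionnaire, while 400.0 of travel spending under a 1000.0 limit would not.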

Itheum already has multiple apps and programs using the Data CAT; you can read about these real-world case studies here (https://itheum.io/#case-studies).

With this product offering, we are enabling the creation of highly structured, outcome-oriented and normalized personal datasets (e.g. the data insights being collected by the OKPulse app, which is built on Itheum). This data can then be bridged from web2 to web3 and unlocked by our core web3 data protocol, called the Data DEX.

2) Decentralized Data Exchange (Data DEX)

Suite of web3 tools for the seamless trade of personal data.

This product enables you (the Data Creator) to own and trade the personal data which was collected by real-world organizations and entities (who built apps on Itheum's Data CAT product or who may have datasets of their own stored in on-premise data centers). It unlocks the personal data from these private silos and lets you trade your data on the open market with other organizations and entities that can derive value from these previously locked datasets.

So in those same examples given above:

If you use that health and wellness company’s app, you get to own your data and then trade the health and wellness data you collect as part of your subscription to that app by using the Itheum Data DEX.

If you use that financial organization's product, you get to own your behavioral data and trade it as you choose with anyone else on the Itheum Data DEX.

As your personal data was collected as part of a real-world product or service, it’s highly-structured, outcome-oriented, and normalized — which means it’s highly valuable to many organizations across the world looking to build high-quality datasets to power their machine learning & analytics backed business insights engines.

This is the real value of Itheum and a key differentiator when compared to other blockchain-based data platforms, many of which struggle to gain real momentum because their products cannot be used effectively in the real world. Itheum wants to focus on real-world adoption, and our 3 core product groups are a balanced approach to adoption and decentralized self-sovereignty.

Using the Itheum Data DEX, you can trade your data via a fully autonomous peer-to-peer mechanism, or use our Data Coalition DAO technology and align to a decentralized entity (run by a DAO) which will trade your data on your behalf (whilst acting in your best interests) and compensate you after the successful bulk-trade of the data. Data Coalitions are explained in more detail in a later section, but they are a bit like decentralized credit unions: by representing many members (DAO participants), they have the collective bargaining power to decide who can procure your data and what it can be used for (i.e. they trade on behalf of their members' interests).
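The step "compensate you after the successful bulk-trade" implies splitting a coalition's proceeds among its members. The Python sketch below assumes, purely for illustration, that proceeds are shared in proportion to the number of records each member contributed and are expressed in the token's smallest whole unit; coalition fees and DAO voting on the split are not modeled, and `distribute_proceeds` is a hypothetical name.

```python
def distribute_proceeds(total: int, contributions: dict[str, int]) -> dict[str, int]:
    """Split `total` (in the token's smallest unit) pro rata to each member's contribution.

    Uses the largest-remainder method so payouts are whole units that sum exactly to `total`.
    """
    weight = sum(contributions.values())
    if weight == 0:
        raise ValueError("no contributions to pay out")
    shares: dict[str, int] = {}
    remainders: list[tuple[int, str]] = []
    for member, units in contributions.items():
        # Floor of the exact pro-rata share, plus the remainder that was cut off
        share, rem = divmod(total * units, weight)
        shares[member] = share
        remainders.append((rem, member))
    # Give the few leftover units to the members with the largest remainders
    # (ties broken alphabetically so the result is deterministic)
    leftover = total - sum(shares.values())
    for _, member in sorted(remainders, key=lambda r: (-r[0], r[1]))[:leftover]:
        shares[member] += 1
    return shares
```

The largest-remainder step keeps the payout exact: 100 units split equally among three members becomes 34, 33 and 33 instead of losing a unit to rounding.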

The Data DEX is an extremely powerful web3 infrastructure construct. It will aim to be fully multi-chain compliant (EVM and non-EVM blockchains) so it can gain the broadest adoption, and it will eventually run on decentralized storage (e.g. IPFS) and domain names (e.g. ENS), making it a fully censorship-resistant data marketplace for the open metaverse.

The Data DEX can also be thought of as the core data protocol layer that enables decentralized data ownership and trade. This protocol can be abstracted with more products built on top of it; the Data Metaverse being an example of such a consumer-friendly, "viral" product suite.

3) Data Metaverse

Suite of metaverse, gaming and NFT aligned consumer products that appeal to the masses with a goal to enable mass adoption.

The concepts behind data trading and blockchain-backed data technology can be overwhelming for most people to grasp. This is a key reason why many of the current web3 data platforms have very low adoption and hype. For platforms such as Itheum to change the dynamic of data ownership we need large-scale bottom-up adoption - and this kind of adoption can only come if we build consumer products that "everyone wants to use".

In web3, we have observed that this viral adoption is most present in the gaming industry, where large groups of people from varying socioeconomic and demographic backgrounds are readily willing to participate in new gaming platforms and adopt new technology. The interest in play-to-earn games like "Axie Infinity" and metaverse games like "The Sandbox" is evidence of this (with new platforms coming to market weekly and still seeing rapid adoption and growth).

The challenge for Itheum was to take "serious and complex ideas around data ownership" and abstract them with "sexy and easy-to-use consumer products". We knew that if we achieved this, we could drive large adoption of our platform and thereby normalize the complex concepts around data ownership and trade. In turn, Itheum gets a large user base to test our core protocol layer and to increase the volume of data available for decentralized data trade.

The key products that form our Data Metaverse ecosystem are:

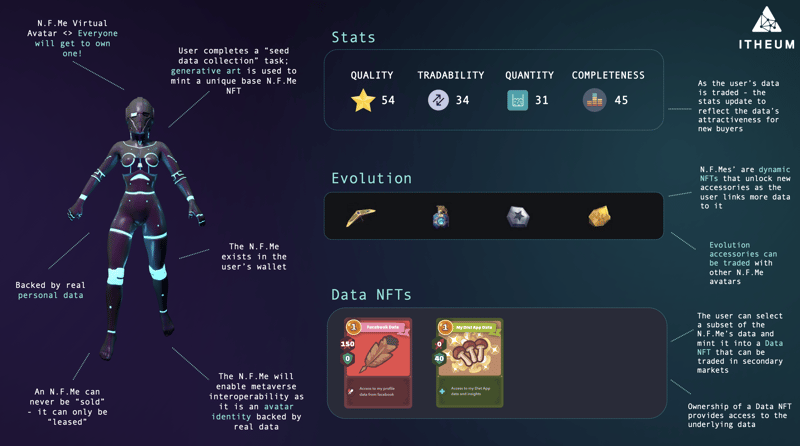

NFMe ID (Non-Fungible Me ID)

Every person on earth can be thought of as a "non-fungible human", as we have data that is unique to each one of us (like our DNA or even our fingerprints, which are non-fungible). NFMe ID is a digital avatar identity that is built on dynamic NFT technology and backed by real personal data. Everyone in the world can mint an NFMe ID on Itheum's platform and "truly own" it. As more data is collected by the "NFMe ID host" and pushed into the NFMe ID, more "accessories" are minted and linked to the NFMe ID. These accessories are NFTs themselves and can be freely traded. NFMe ID avatars are the next-generation "Profile Picture NFT" and/or gaming avatar, and the goal is for them to evolve into a universal avatar for each human on earth. They will be portable in the real world and interoperable across web2 and web3 metaverse platforms (e.g. MultiversX blockchain's XWorld metaverse, The Sandbox, Axie Infinity, Decentraland, NVIDIA Omniverse, Meta etc.), backed by real value (in the form of personal data), and powered by true ownership (NFT technology).

Data NFTs

The NFMe ID host can choose to take subsets of their base data and mint them into separate Data NFTs. These Data NFTs are linked to the base NFMe ID but can be independently traded in our own Data NFT marketplace or secondary marketplaces (e.g. XOXNO or OpenSea). As an example, the user can add more data to their NFMe ID by joining an app like the Red Heart Challenge (an app built on Itheum's Data CAT); as the user participates in the data collection for this app, data will flow into the NFMe ID. The user can then choose to mint a Data NFT that only holds the subset of data generated via the Red Heart Challenge. This Data NFT can then be traded to a commercial enterprise or researcher who may be keen to access the core dataset. Ownership of a Data NFT provides the "license" to access the underlying data from the "Creator".

Data Coalition DAOs

A more detailed explanation of Data Coalitions is provided in a later section, but within the context of the Data Metaverse, you can think of Data Coalitions as enterprises or groups who are keen to trade bulk datasets on behalf of a large group of individual users. As personal data is much more valuable when traded in bulk, it makes sense to align to a Data Coalition and have your data grouped into a large dataset. In the Data Metaverse, a Data Coalition may occupy some "land" and set up its "virtual DAO premises"; your NFMe ID will be able to visit these premises and choose to align to the Data Coalition, which in turn will make sure the user's best interests are taken care of during any trade of data that happens as part of this interaction.

Greenroom Protocol

We envision a future where metaverse interoperability can be achieved via open-standard digital avatars and your NFMe ID avatars aim to be these open-standard avatars. Interoperability between metaverse platforms is a very early-stage idea but the primary use case is to have the "users or players" move their digital assets and avatars between independent metaverse platforms. As an example; a user on MultiversX blockchain's XWorld metaverse can move their avatars, data and assets to The Sandbox or possibly even into centralized metaverse platforms like Meta and participate in the native gameplay and ecosystem exploration. The Greenroom protocol is Itheum's initiative for open-standard metaverse interoperability. It will consist of two parts:

- A set of rules and standards to define NFT metadata that can then be implemented across metaverse platforms. The metadata can indicate the data for the visual characteristics of a character and (possibly) the standards for animation rigging to ensure the character appears as a native character in any metaverse platform.

- A metaverse interoperability "virtual waiting room" called the Greenroom. The Greenroom is where your NFMe ID will exist (visually in 3D), and where you will be able to see and organize your "accessories", see other NFMe ID characters and trade accessories whilst interacting with them. The Greenroom is where the "Itheum Data Metaverse" will integrate with other metaverse platforms like MultiversX blockchain's XWorld or The Sandbox. Once open metaverse integrations are built with other 3rd party platforms, they will appear as portals inside your Greenroom, and you will be able to enter them and teleport your NFMe ID avatar into other metaverse ecosystems. In show business, a green room is a waiting room where performers "hang out" until they are called on stage; similarly, Itheum's metaverse Greenroom is where your NFMe ID avatar can hang out until it teleports to other metaverse platforms.
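The first part of the Greenroom Protocol is a shared NFT metadata standard. Because this paper does not define that standard, the field names below (`standard_version`, `avatar_id`, `visual`, `rig`) are hypothetical; the sketch only shows how a platform might check that an avatar's metadata conforms before rendering it, with animation rigging kept optional in line with the "(possibly)" in the text.

```python
import json

# Hypothetical schema: field name -> expected JSON type (as a Python type)
REQUIRED_FIELDS = {"standard_version": str, "avatar_id": str, "visual": dict}
OPTIONAL_FIELDS = {"rig": dict}  # animation-rigging hints, optional in this sketch


def validate_avatar_metadata(raw: str) -> list[str]:
    """Return a list of problems with a JSON metadata document (empty list means valid)."""
    try:
        doc = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(doc, dict):
        return ["metadata must be a JSON object"]
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in doc:
            problems.append(f"missing required field '{field}'")
        elif not isinstance(doc[field], expected):
            problems.append(f"field '{field}' must be {expected.__name__}")
    for field, expected in OPTIONAL_FIELDS.items():
        if field in doc and not isinstance(doc[field], expected):
            problems.append(f"field '{field}' must be {expected.__name__}")
    return problems
```

Returning a list of problems, rather than raising on the first one, lets a receiving platform report every incompatibility with an imported avatar at once.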

As we are a MultiversX blockchain core project, we aim to build the first version of the Greenroom Protocol in XWorld - a network of interoperable open metaverse worlds powered by the MultiversX blockchain.

Solution Overview Diagram

The below high-level solution overview diagram details how all our key components and features align to enable Itheum's comprehensive data technology solution for the web3 and metaverse ecosystem.

Important disclaimer on usage of certain words in following sections

It must be duly noted that we use terms such as "Buy", "Buying", "Purchase", "Pay", "Sale", "Selling" and "Sell" purely for simplification of explanation in the following sections; in reality, a "sale" or "purchase" refers to an "access rights request transaction" between two parties and not to any form of monetary transaction. Itheum is a system that allows for data access to be granted via peer-to-peer handshakes coordinated by our $ITHEUM utility token. Please read our Token Utility section for more on this. It must also be duly noted that we use the $ symbol as a prefix to the $ITHEUM utility token as a means to simplify our written communication in this whitepaper and for the reader to be able to differentiate the $ITHEUM token from the "ITHEUM platform" - the $ is in no way, form or shape an indication that it's a form of currency, it is purely a "software-based utility key" that is needed to unlock access to data assets that are powered by the Itheum platform.

Decentralized Data Trade

The Data DEX allows for the seamless trade of personal data by “Data Creators”. The sale, verification, and ownership accreditation of the data are handled on-chain but the actual data being traded is kept off-chain.

There are a few reasons for this:

On-chain storage of large datasets is not feasible. Blockchains are not built for the storage of data, and doing this would be costly and would also pollute the blockchain ledger.

On-chain storage of entire data or segments of data can also lead to privacy and data sovereignty issues as the blockchain is a fully open, distributed, and transparent tool.

For these reasons, the trade of data is facilitated via a hybrid on-chain/off-chain model.

Data is first validated and hashed. The validated dataset is then encrypted and uploaded to a centralized, secure storage location at a hidden (non-publicly advertised) destination.

It’s important to note that the above “storage location” is centralized rather than decentralized via IPFS or Filecoin (for example). Again, there are reasons for this, mainly to do with data sovereignty laws, as certain countries or regions require data to remain within their geographical boundaries. We are working on a solution that allows for decentralized, region-based storage or validated decentralized node-based storage. This will be abstracted via both the Data Coalitions and Regional Decentralization Hubs features, where privacy, security, and regulatory requirements for the storage of data are governed by a delegated, authorized Data Coalition Board who will manage these on behalf of the end-user.

The hashed value and the identifier for the location are stored on the blockchain. The availability of this new data for trade is then “advertised”, allowing access to this new dataset to be “discovered” and then purchased via an on-chain transaction.

At this point, there are “two groups” of data you can trade on the DEX. They are as follows:

1) Trading Core Itheum Data

The core Itheum platform is a general-purpose personal data collection platform where organizations can build applications (which require structured user data) using Itheum's Data CAT.

Examples of these structured data collection applications are:

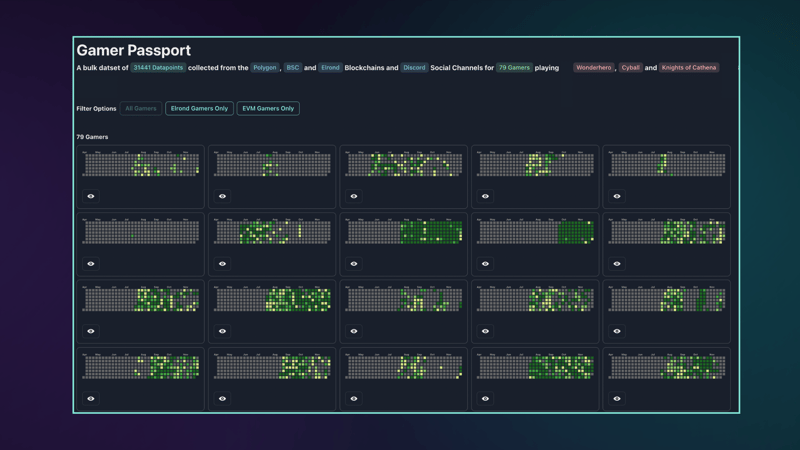

- Gamer Passport - Join alpha release

- Red Heart Challenge (https://itheum.com/redheartchallenge)

- OKPulse (https://okpulse.life/)

- Other Programs (https://itheum.io/#case-studies)

The applications are called “programs” within the Itheum Data CAT platform, and these programs can onboard their own “end-users”. These end-users generate a lot of personal data and have access to an end-user portal app, which provides full visibility over the data and insights being collected.

This demo video shows you an example of the end-user portal for OKPulse (https://www.youtube.com/watch?v=ITONnseBFV4)

The end-user portal allows the user (Data Creator) to link their MultiversX (previously called Elrond), Ethereum, Polygon, BSC, Avalanche (or other supported blockchain) account to their Itheum platform account. Once they have done this, they can use the Data DEX to load the raw datasets they have generated and advertise them for trade.

As the data collected via an Itheum application is fully structured and outcome-oriented, for example — Red Heart Challenge data is centered around the self-management of cardiovascular disease and OKPulse is around the proactive monitoring of employee health and wellness issues — it is very valuable for a Data Consumer who wants to align analytics discovery or outcome analysis around a certain topic.

When this data is grouped with data from multiple people who have joined the same application via a “Data Coalition” and augmented with personal data via the “Data Vault”, the value of the data can grow exponentially as the relevance, quantity, and quality of the datasets grow.

2) Trading Any Arbitrary Data

The Data DEX also allows you to trade any arbitrary data using the same on-chain facilitation process. This allows anyone with a crypto wallet to trade their original datasets via the Data DEX. This lowers the barrier to entry for end-users and also gives them an equal opportunity to participate in the shared data economy.

2.1) Trading Social Media Profile Data

As you may know, Facebook opened up the option for individuals to download all their data in bulk. This was a feature that was released by Facebook after pressure from data advocacy regulators who wanted to ensure individuals had the right to download their data from the Facebook platform should they ever want to delete their Facebook account or move to a new social media platform.

The Itheum Data DEX will allow the above scenario (moving to a new social media platform) to be handled seamlessly. For example, an individual can download all their Facebook data, advertise it for trade on the Data DEX, and then align with a Data Coalition centered on the responsible use of data by social media organizations. A new social media platform can then view the “Facebook user datasets” managed for trade by the Data Coalition, agree to the responsible terms of use, and procure bulk access to the data. In this way, the new platform uses the Data DEX to bootstrap its user base, migrating Facebook users directly via an authorized, delegated owner of the end-user data (the Data Coalition). The end-user (the original Facebook user) then receives the corresponding micropayments from the Data Coalition and can also view the complete audit trail of the data transfer between the social media platforms.

Important: The use case of trading your Facebook profile data is given primarily as a futuristic proof-of-concept of how data like social media profiles can be "made portable". Itheum does not encourage this activity, as (if not done cautiously) it may lead to your sensitive social media data being propagated outside of your control. Soon, Itheum will be launching a technology called "Data NFT-Lease", which will enable a much more controlled sharing experience for sensitive data.

2.2) Trading Original Data as a "Data Stream"

The Data DEX also allows for the on-chain trade of any other type of original data as a "Data Stream" (a stream of data or files that you can host yourself in a secure and regulation-compliant location). For example, you can create a dataset of all the brands and products you, your family, and your friends like, all your reviews and ratings of your favorite restaurants, or your personal fitness and other wellness data. You can also create and trade other interesting IP-centric general datasets, like utterance-to-intent mappings, which organizations can then use to train NLU and speech-to-text applications. For example, a hospitality booking organization (which provides telephony services or conversational tools like chatbots to help users make a booking) may already invest heavily in studying end-user speech-to-text patterns and mapping them to intents that are relevant to its industry. It can sell this utterance-to-intent mapping data on the Data DEX to recoup some of the cost of producing this IP.

Note: "Data Stream" based trading of data will be live soon thanks to our launch of Data NFT technology. Please read more about it in the section below.

Types of Direct Trade

As described in the section above, you can use the Itheum Data DEX to trade personal data using on-chain tools that coordinate the trade between buyer and seller. On the Data DEX, there are essentially two types of direct trade. We call this “direct trade” because it is the seller (Data Creator) who decides which type they prefer and initiates the trade process within those constraints. In a later section, we will describe how data can also be sold indirectly via a delegated Data Coalition, but for now, let’s focus on the following two direct trade types.

- Trade of Data NFTs

- Trade of Data Packs

Let’s now dive into these two types individually and understand the difference.

Trade of Data NFTs

Once a Data Creator decides to trade their personal data, the default method of trade is via Data NFT technology. A Data Creator can choose to trade their personal data as an NFT (Non-Fungible Token), which functions as a "digital, autonomous license" for data usage between counterparties. Using an NFT for this use case makes a lot of sense, as personal data is highly unique and NOT fungible (watch this short video to understand the difference between fungible and non-fungible). This gives the Data Creator more control over their data, lets them use NFT features that make their data grow in value (rarity/scarcity), and increases the exposure of their data assets thanks to the interoperability and portability that NFT standards have in the blockchain ecosystem.

A Data Creator should choose to trade data as a Data NFT if they have the following requirements:

- Trade a “limited number” of copies of data: This is the same concept as the limited edition NFTs we see in the market today. Having a limited number of copies of the data will create organic demand for it, as buyers will recognize its rarity and scarcity. For example, if a Data Creator “only mints 2 copies” of their DNA sequencing result, this has a lot of value to a buyer trying to build a dataset of similar DNA sequencing results, as there are only 2 copies available. If there were virtually unlimited copies available (as you would have with Data Pack-based trade), then the perceived inherent value of the data would be lower.

- Allow for the “re-sale” of data: The Data Creator is happy to allow for the re-sale of the NFT packaged data by a buyer. This opens up a lot of opportunities for the data to grow in value as the buyer might have a better ability to “market the data”. This also opens up opportunities for secondary markets where “verified, legitimate data brokers can exist on-chain” — a revolutionary concept and a futuristic solution to the problem that exists today where centralized data brokers are selling your personal data without your knowledge.

- Benefit from the “re-sale” of data via Royalties: Your data is essentially your IP (much like a song by a music artist or a book by an author) and packaging your data and selling it as an NFT will allow you to earn a royalty on the resale of the data. For example, you can choose to nominate a 10% royalty condition and if a buyer re-sells the data, 10% of the sale will be transferred to your account.

- Benefit from multiple NFT marketplaces: As Itheum Data NFTs are built on the NFT standard, they are immediately interoperable with all the NFT marketplaces that support this standard (XOXNO, OpenSea, Rarible, etc.). This significantly increases the audience for your data and therefore your potential to sell.
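The limited-supply and royalty features above can be sketched in a few lines. This is a minimal, hypothetical Python model, not Itheum's smart contract. The class name, the basis-point royalty field, and the rule that the creator's first sale pays no royalty are all assumptions made for illustration. It reuses the whitepaper's own examples: 2 copies of a DNA sequencing result and a 10% royalty.

```python
from dataclasses import dataclass, field


@dataclass
class DataNFTCollection:
    """Hypothetical model of a Data NFT collection: a capped number of license
    copies over one dataset, with a royalty to the creator on every re-sale."""

    creator: str
    max_supply: int
    royalty_bps: int  # royalty in basis points, e.g. 1_000 = 10%
    owners: dict[int, str] = field(default_factory=dict)  # token_id -> holder

    def mint(self, token_id: int) -> None:
        # Enforce the "limited edition" cap chosen by the Data Creator.
        if len(self.owners) >= self.max_supply:
            raise ValueError("supply cap reached")
        if token_id in self.owners:
            raise ValueError("token already minted")
        self.owners[token_id] = self.creator

    def sell(self, token_id: int, buyer: str, price: int) -> dict[str, int]:
        """Transfer one copy and return how the price is split between accounts."""
        seller = self.owners[token_id]
        # Assumption: the creator's first sale pays no royalty; every re-sale does.
        royalty = 0 if seller == self.creator else price * self.royalty_bps // 10_000
        self.owners[token_id] = buyer
        if royalty == 0:
            return {seller: price}
        return {seller: price - royalty, self.creator: royalty}
```

For example, after 2 copies are minted, a third mint fails. If Buyer 1 buys a copy for 100 $ITHEUM and resells it for 200, the sketch pays 180 to Buyer 1 and 20 to the creator. Integer arithmetic in basis points avoids floating-point rounding in token amounts.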

Demo 1: minting and selling Genomics data as a Data NFT

Demo 2: minting and selling Blood tests results as a Data NFT on OpenSea

Classes of Data NFTs

There are 2 classes of Data NFTs in Itheum and you can pick a specific class of NFTs based on your requirements for privacy, regulation, control of data, re-trade ability, and royalty collection.

Data NFT-FT

These Data NFTs are comparable to a perpetual, fully transferable license model for "data usage". With these Data NFTs, you can mint multiple copies of the license and also enable royalties on the "re-trade" of each copy. They are ideal for the trade of high-value, unique datasets between humans and machines, and between machines (e.g. an Electric Vehicle (EV) trading its data with another EV or with a charging station).

Data NFT-LEASE

These Data NFTs enable "time-based subscription trading" of your data, where you can have data access "leased out" for 1 week, 1 month, or for any fixed time limit. This is ideal for regulation-compliant (as you can "exit the system" anytime) trading of your sensitive personal datasets.
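A Data NFT-LEASE can be thought of as an access check bounded by a time window. The sketch below is a hypothetical Python model, not Itheum's implementation. The `DataLease` name, its fields, and the `revoked` flag (standing in for the Data Creator "exiting the system") are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataLease:
    """Hypothetical Data NFT-LEASE record: access to one dataset for a fixed window."""

    dataset_id: str
    lessee: str
    start: datetime
    duration: timedelta  # e.g. 1 week, 1 month, or any fixed limit
    revoked: bool = False  # assumed flag for the Data Creator exiting the system

    @property
    def expires(self) -> datetime:
        return self.start + self.duration

    def has_access(self, who: str, now: datetime) -> bool:
        # Access needs the right lessee, an unrevoked lease, and a time inside the window.
        return who == self.lessee and not self.revoked and self.start <= now < self.expires


start = datetime(2023, 1, 1, tzinfo=timezone.utc)
lease = DataLease("genomics-01", "research-lab", start, timedelta(weeks=1))
print(lease.has_access("research-lab", start + timedelta(days=3)))  # True
print(lease.has_access("research-lab", start + timedelta(days=8)))  # False
```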

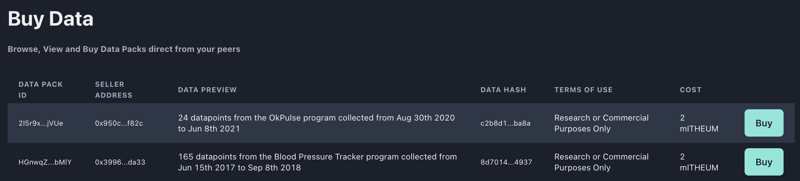

Trade of Data Packs

Important: Data Packs were an early design construct in the Itheum platform and are documented here to provide some historical context. Data trade on Itheum will primarily use Data NFT technology, given its fully decentralized design. Data Packs, on the other hand, require some form of centralization for the storage and cataloging of datasets, and until Itheum has a robust alternative for these issues, our focus will be on building robust data trading schemes powered by Data NFTs.

Data Packs give a user tighter control over their data trading activity, but at the cost of decentralization and flexibility. Each Data Pack records the location of a file that the user uploaded within the Data DEX, the link from which this file can be securely downloaded (after a trade is complete), a preview of the data, when it was created, its terms of use, etc. Once the user creates a Data Pack, it gets advertised for sale ‘on-chain’. Once this on-chain advertising process is completed, the original “data hash” and the “transaction reference” of the advertising process are stored as metadata against the Data Pack. The sale of Data Packs can be described as direct peer-to-peer trade, where no secondary market activity can happen once data is advertised. As Data Packs are "file-based" peer-to-peer sharing of data on the blockchain, they can also be used for a powerful construct called "personal data proofs"; you can learn more about this in a section below.

Once the on-chain Data Pack advertising process is complete, buyers will see the advertised Data Packs in the “Buy Data” section of the Data DEX. The buyer can pay the $ITHEUM cost for the data and then “own” access to the underlying data (these copies are called Data Orders and appear in the Purchased Data section of the Data DEX). As part of the buying process, they agree (on-chain) to abide by the “terms of use”. A record of this agreement is stored on-chain as part of the transaction and serves as an immutable audit trail for this agreement. One key point to note is that in this type of peer-to-peer trade — the re-sale of data is NOT permitted.
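The “data hash” stored against a Data Pack is what lets a buyer check that the file they download is the one that was advertised. This is a minimal sketch. It assumes SHA-256 as the hash function, since the whitepaper does not name one, and it uses hypothetical `advertise` / `verify_download` helpers. The transaction reference is a plain string here rather than a real chain lookup.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class DataPack:
    """Hypothetical Data Pack metadata record."""

    file_url: str
    terms_of_use: str
    data_hash: str = ""     # filled in once on-chain advertising completes
    tx_reference: str = ""  # reference of the advertising transaction


def advertise(pack: DataPack, file_bytes: bytes, tx_reference: str) -> DataPack:
    # Assumption: SHA-256 over the raw file bytes.
    pack.data_hash = hashlib.sha256(file_bytes).hexdigest()
    pack.tx_reference = tx_reference
    return pack


def verify_download(pack: DataPack, downloaded: bytes) -> bool:
    """A buyer recomputes the hash and compares it with the advertised one."""
    return hashlib.sha256(downloaded).hexdigest() == pack.data_hash
```

Any change to the file, even a single byte, produces a different hash, so a tampered download fails verification.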

A Data Creator should choose to trade data as a Data Pack if they have the following requirements:

- Sell multiple (potentially unlimited) copies of their data to buyers (Data Consumers) around the world

- Don’t want their data to be re-sold by the buyer (i.e. the buyer can only use it as per the terms of sale and for their own use/consumption). If a buyer breaks their agreement and resells the data, the owner can detect this and mediate a conflict resolution process, but only if the trade is handled by a Data Coalition (more on this below). The Data Creator, unfortunately, won’t have a direct method to track the re-sale or get any benefit from it (e.g. royalties).

- Only allow for their personal data to be traded on the Itheum Data DEX (no other decentralized marketplace will display the Data Pack for sale — this is because it’s not built on any open blockchain standard like ERC20 or ERC721 that allows for interoperability). This can be considered a benefit if the Data Creator wants to limit exposure of their data (as it’s available for trade, in only one data marketplace)

Methods for Buying Access to Data

Datasets advertised for trade on the Data DEX are traded between counterparties primarily via Data NFT licensing technology. As described in the section “Decentralized Data Trade” above, various types of data can be put up for trade on the Data DEX. Along with static datasets (files that don't change once produced), the Itheum Data DEX also allows for the trade of “Data Streams”.

Data is traded via two channels:

- Direct between Data Creator and Buyer (Data Consumer)

- Via an intermediary, e.g. an authorized Data Coalition

Anyone with a crypto wallet can gain access to Data NFTs (and the underlying datasets) under certain conditions:

They need to have $ITHEUM tokens to pay for the data. The $ITHEUM token is sent directly to the Data Creator (if the sale is directly between Data Creator and buyer) or to a Data Coalition if the Coalition is “brokering the trade”.

If the purchase is via an authorized Data Coalition, then the buyer needs to adhere to the Data Coalition's terms and conditions of use and also put up collateral in the form of $ITHEUM tokens for a certain period (until the buyer earns a higher credibility score); data traded via a Data Coalition has a more robust misuse remediation and dispute resolution process, handled via decentralized governance.
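The two rules above can be expressed as a small settlement function. One rule decides who receives the $ITHEUM payment, and the other decides when a buyer must lock collateral. This is a hypothetical sketch. The credibility threshold of 50 and the 20% collateral rate are invented placeholders, because the whitepaper gives no figures.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical parameters; the whitepaper does not specify them.
CREDIBILITY_THRESHOLD = 50
COLLATERAL_BPS = 2_000  # 20% of the price, in basis points


@dataclass
class Purchase:
    price: int                       # $ITHEUM amount for the data
    creator: str                     # the Data Creator
    coalition: Optional[str] = None  # set when a Data Coalition brokers the trade
    buyer_credibility: int = 0       # hypothetical credibility score


def settle(p: Purchase) -> dict:
    """Return who is paid and how much collateral the buyer must lock."""
    if p.coalition is None:
        # Direct trade: tokens go straight to the Data Creator, no collateral.
        return {"pay_to": p.creator, "collateral": 0}
    # Coalition-brokered trade: collateral until the buyer is credible enough.
    needs_collateral = p.buyer_credibility < CREDIBILITY_THRESHOLD
    collateral = p.price * COLLATERAL_BPS // 10_000 if needs_collateral else 0
    return {"pay_to": p.coalition, "collateral": collateral}
```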

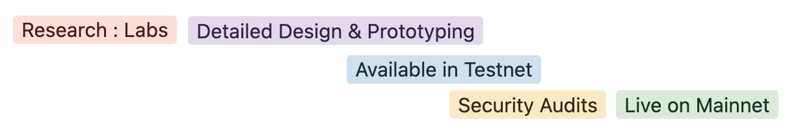

Our 5-stage Product Development Process

To ensure we continually innovate and deliver tangible web3 / blockchain features to market - we will use a simple 5-stage product development process. All our features will continually be categorized as per these stages to ensure progress is transparent to our community.

- Research: Labs - Ideas that are running through an R&D stage.

- Detailed Design & Prototyping - Ideas that pass our labs stage and for which we are doing detailed solution architecture and prototyping. Release candidate notes are also prepared for formal development to commence.

- Available in Testnet - Iterative builds being released to testnet; tested and signed off by our QA team and community testers. This will continue until a mature build passes QA and is ready for deployment to mainnet.

- Security Audits - Final release that goes through internal and external security audits.

- Available in Mainnet - Released to mainnet.

How the 5 stage lifecycle works to move product ideas into mainnet

Data DEX Components

The following key components form our Data DEX product, they work to provide the core data protocol required to make Itheum a fully-featured decentralized data platform.

Peer to Peer Data Trade (Data Marketplace)

When you visit the Data DEX, you will be directly presented with the ability to discover and trade data with your "direct peers" in a Data NFT marketplace. You will be able to see all "advertised data" from others and also "advertise" your own data in the data marketplace. As detailed in the section "Decentralized Data Trade", you can advertise data from apps you have joined that are built on Itheum's Data CAT, or you can advertise any "arbitrary data" that you own.

When you place data for sale, you nominate a purchase price in $ITHEUM tokens, which is effectively the price for a Data Consumer to access your data. If someone wants access to your data, they transfer the requested $ITHEUM tokens and in return get access to the data. The data marketplace operates completely in a "peer-to-peer" form, with no intermediaries involved in the transaction. Data Creators and Data Consumers deal directly with each other, and the entire process is mediated using Smart Contract technology.
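The peer-to-peer purchase described above comes down to an all-or-nothing swap. Either the buyer's tokens move to the seller and access is granted in one step, or nothing happens. This is a minimal in-memory Python sketch. A hypothetical `Marketplace` class stands in for the smart contract, and no real chain or token API is used.

```python
class Marketplace:
    """Hypothetical peer-to-peer data marketplace: payment and access move
    together, with no intermediary holding either side."""

    def __init__(self, balances: dict[str, int]):
        self.balances = dict(balances)                   # $ITHEUM balances
        self.listings: dict[str, tuple[str, int]] = {}   # dataset_id -> (seller, price)
        self.access: set[tuple[str, str]] = set()        # (buyer, dataset_id)

    def advertise(self, seller: str, dataset_id: str, price: int) -> None:
        self.listings[dataset_id] = (seller, price)

    def buy(self, buyer: str, dataset_id: str) -> None:
        seller, price = self.listings[dataset_id]
        # Check before mutating any state, mimicking a contract call that
        # either fully succeeds or reverts.
        if self.balances.get(buyer, 0) < price:
            raise ValueError("insufficient $ITHEUM balance")
        self.balances[buyer] -= price
        self.balances[seller] = self.balances.get(seller, 0) + price
        self.access.add((buyer, dataset_id))
```

A real contract would also handle listing removal, fees, and concurrent purchases, which this sketch omits.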

Peer-to-Peer Data Trade (Data Marketplace) is currently in the "Available in Testnet" stage.

Data NFTs

Data is an asset in itself and personal data is a “unique asset” as no two personal datasets are the same. Highly personal or sensitive datasets can essentially function as an NFT allowing for uniqueness and limited availability.

For example, you might want to share your DNA or partial sequencing results under “research” terms and conditions, but you may want to limit how many buyers can purchase and use it (controlling distribution). Data NFTs allow you to do this.

To make it more aesthetic to trade, we convert valuable datasets (usually in JSON or another interoperable format) into a unique visual representation of that data (a deterministic, random-looking abstract image). To do this, we use a generative art algorithm for image generation that's based on the unique signature of the data.
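The generative art wrapper depends on one property: the image must be a deterministic function of the data's signature. The sketch below derives a base color and an on/off grid from a SHA-256 digest. The hash function, the `art_parameters` helper, and the mapping from digest bytes to visual parameters are assumptions for illustration. The whitepaper does not specify Itheum's algorithm.

```python
import hashlib


def art_parameters(dataset: bytes, grid: int = 4) -> dict:
    """Derive deterministic visual parameters from a dataset's signature.
    The same data always gives the same art; changed data gives different art."""
    digest = hashlib.sha256(dataset).digest()  # 32 bytes
    # Hypothetical mapping: the first 3 bytes pick a base RGB color...
    color = "#{:02x}{:02x}{:02x}".format(digest[0], digest[1], digest[2])
    # ...and one bit per cell, from the remaining 29 bytes, turns a grid cell on or off.
    bits = int.from_bytes(digest[3:], "big")
    cells = [[(bits >> (r * grid + c)) & 1 for c in range(grid)] for r in range(grid)]
    return {"color": color, "cells": cells}
```

A renderer could then draw the grid in the chosen color. With 232 available bits, this mapping supports grids up to 15 x 15.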

Packaging and trading your personal data as NFTs has the following benefits (when compared to regular one-off trade using the DEX):

- Limit the distribution of your highly sensitive or protected datasets to a small number of copies (similar to the limited edition NFTs we see in the market today).

- You can choose to earn royalties if your data is resold. You can limit the distribution of your data, but should a buyer resell your Data NFT, you have the option to earn a % as royalty. This is a game-changer and can prove to be a steady secondary income stream for you, especially if your data is bulk curated into a Data Coalition, which will have high buyer demand.

- Once data is packaged and tokenized as an NFT, it can be traded on any NFT marketplace (e.g. OpenSea; we already support this interface in our testnet). This significantly increases the selling power of your data as its audience grows. Read more about it in our blog post "Selling your Itheum Data NFT on OpenSea".

- Your unique data will be minted with an aesthetic “generative art wrapper” created using the unique signature of the data. E.g. your DNA data can be represented as a unique piece of art (similar to Autoglyphs or other generative art), which will have inherent value as it has rarity, creativity, and actual utility packed into it.

What real-world trade characteristics do NFT wrapped data assets provide?

Let's look at some key trade characteristics we can get by wrapping our data as an NFT and then opening it for trade.

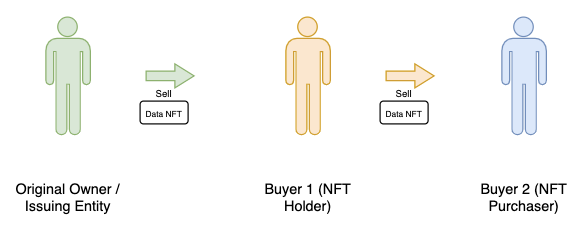

When working with NFTs in general, the main actors to consider are:

- Issuing Entity: The entity behind the NFT (in Itheum's case, the Original Owner or a Data Coalition can be the Issuing Entity)

- Original Owner: The original Data Creator who chose to trade their data as a Data NFT

- NFT Holder: The present holder of the Data NFT

- NFT Purchaser: Someone who intends to acquire the Data NFT from the present NFT Holder

In this example, let's assume you want to trade your Genomics dataset as a Data NFT.

You use the Itheum Data DEX to mint a new Data NFT that wraps around your dataset and provides the "license for data usage". You are the Original Owner / Issuing Entity. The Data NFT is minted with a "proof of ownership", along with other metadata that enables access to the original data file if ownership is proven. All this metadata will be stored on-chain following standard NFT metadata schemas.

You then head over to the Itheum Data Marketplace or a 3rd party secondary NFT marketplace (e.g. XOXNO) and place it for sale for 100 $ITHEUM.

Buyer 1 comes along and purchases the Data NFT, as they see value in owning a genomics/DNA-based dataset. Ownership now transfers from you to Buyer 1, who is now the NFT Holder.

But Buyer 1 does not intend to use the data for any utility, they are essentially a pure "data trader" and intend to resell the Data NFT at a higher price. They increase the sale price to 200 $ITHEUM.

Buyer 2 comes along and wants to purchase the Data NFT, as they intend to use it for their research into topics relating to Genomics. They are the NFT Purchaser. As their research requires absolute truthfulness in the data quality (e.g. they may intend to use it to train an ML model for disease diagnosis and need to guarantee that the provenance of the data is authentic), they choose to procure this data via decentralized, blockchain-based trade instead of buying it from centralized data brokers or data sellers.

Let's look at the benefits each actor receives by trading data wrapped as an NFT:

Original Owner / Issuing Entity:

- Limit the supply of the dataset to just a single item (they can always mint more if they see demand grow)

- During the initial sale on the open NFT Marketplace (e.g. XOXNO), assign a royalty % that they get paid during all future re-sales

- Benefit from all the open NFT Marketplace features to control the sale (duration / minimum period, highest bid, etc.)

NFT Holder:

- Ability to speculate on the future price of non-fungible data and earn profits on re-sale

- Build Data NFT collections based on market/seasonal demand for certain types of datasets

NFT Purchaser:

- This actor gets the most value as they intend to use the contents of the Data NFT (raw dataset) for actual utility.

- They can view and prove the provenance of the NFT using on-chain lookup and identify the Original Owner

- They can view and prove the lineage of the NFT using on-chain lookup (Original Owner -> Buyer 1 -> Buyer 2)

- They can view and prove the veracity (truthfulness/accuracy) of the NFT using an on-chain lookup of the metadata and data proofs.

- They can obtain a formal transfer of ownership of the IP if the Issuing Entity used the fully transferable Data NFT-FT class of Data NFTs.
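The provenance and lineage checks available to the NFT Purchaser amount to reading the Data NFT's transfer history. In the sketch below, a hypothetical `DataNFTHistory` class stands in for the on-chain lookup. It replays the Genomics walk-through above: Original Owner -> Buyer 1 at 100 $ITHEUM -> Buyer 2 at 200 $ITHEUM. It is an illustration, not Itheum's or any chain's actual query API.

```python
from dataclasses import dataclass, field


@dataclass
class DataNFTHistory:
    """Hypothetical stand-in for an on-chain lookup of a Data NFT's transfer log."""

    token_id: str
    minted_by: str
    transfers: list[tuple[str, str, int]] = field(default_factory=list)  # (from, to, price)

    def holder(self) -> str:
        """The present NFT Holder."""
        return self.transfers[-1][1] if self.transfers else self.minted_by

    def record_sale(self, seller: str, buyer: str, price: int) -> None:
        # Only the present holder can transfer the Data NFT.
        if seller != self.holder():
            raise ValueError("only the current holder can sell")
        self.transfers.append((seller, buyer, price))

    def provenance(self) -> str:
        """The Original Owner who minted the Data NFT."""
        return self.minted_by

    def lineage(self) -> list[str]:
        """Every holder in order, starting with the Original Owner."""
        return [self.minted_by] + [to for _, to, _ in self.transfers]
```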

Note: The "royalty" feature works natively within Itheum's internal data marketplace, but 3rd party marketplaces (e.g. XOXNO) need an upgrade to their smart contracts to support it. This code upgrade is a trivial change and will be provided by an Itheum SDK for 3rd party developers.

Data NFTs are currently in the "Available in Testnet" stage.

Data Coalitions

Independently trading personal data is inefficient and time-consuming. Continuing to curate and monitor the “terms and conditions” for each sale as well as to keep track of what data will be used for and by whom will quickly become overwhelming.

Your personal data (both the longitudinal data from your structured programs and highly personal & sensitive data from your Data Vault) — is also not very valuable “when viewed in isolation” — but when your data is “grouped” into clusters of similar people, it grows significantly in value as the volume and quality increases (e.g. your health data is worth > $1,500 if sold as part of a larger dataset). The grouped data then becomes useful for deep analysis or to train machine learning models for example. We believe that this is the future of how data will be sourced on the blockchain to train AI and for deep analytics.

Data Coalitions are DAOs where the "Creators" of the Data Coalition will bond $ITHEUM tokens to form and run it. The creators are called "Board Members" and they have an incentive to run the Coalition in the best interests of its "Members". Board Members have a "stake in the game" with their bond and therefore will need to always act in the best interest of the Members. Board members will also earn a share of the trade, so it's in their best interest to keep their Data Coalition as robust as possible to attract new Members (and therefore more data).

Itheum envisions a future where the most successful Data Coalitions will be run by enterprises and SMEs (subject matter experts like legal and regulation experts, commercial data warehouses, academic/research institutes, government departments, etc.) and will strike the perfect balance between the commercialization of data and accountability to end-users (Data Providers). Board Members vote to agree on the terms and conditions and the governance (privacy and security) of the data trade; the parameters they agree to will be made visible to anyone who wants to join the Data Coalition.

As a user (called a Member), you can then align with the Data Coalition that you feel acts in your best interests, and delegate the ownership of your Data NFTs to your preferred Data Coalitions. The Data Coalition will group your data into clusters of similar data and advertise the bulk datasets to interested Data Consumers, who can then procure access to them. In return, you can earn a steady value return on your data or choose to lock up your returns for the longer-term growth of the Data Coalitions that you support.

Data Coalitions also allow for "staking" of $ITHEUM tokens: anyone can stake their $ITHEUM tokens with a Data Coalition (you don't HAVE to provide your data) to flag their support for the Data Coalition and to signal that its data is good (Crowd-Sourced Data Curation). This allows the Itheum platform to be used by people who want to participate in the personal data economy but don't necessarily want to provide their data for trade. All parties involved in the Data Coalition (Board Members who bond $ITHEUM tokens, Members who share data, and Members who stake $ITHEUM tokens) are incentivized relative to their role and stake, and earn micropayments after each trade is finalized.

Itheum's Data Coalitions are modeled on the Credit Union Philosophy
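Rewarding every Data Coalition party "relative to their role and stake" can be sketched as a two-level pro-rata split. The proceeds are first divided across roles, then across participants within each role by their bond, data contribution, or stake. The role percentages below (10% Board Members, 70% data-sharing Members, 20% stakers) are hypothetical placeholders, because the whitepaper does not publish them. The helper names are also illustrative.

```python
# Hypothetical role split in basis points; the whitepaper gives no percentages.
ROLE_BPS = {"board": 1_000, "data_members": 7_000, "stakers": 2_000}


def pro_rata(amount: int, weights: dict[str, int]) -> dict[str, int]:
    """Split an integer amount in proportion to weights, flooring each share."""
    total = sum(weights.values())
    if total == 0:
        return {}
    return {who: amount * w // total for who, w in weights.items()}


def distribute(sale_amount: int, board: dict[str, int],
               data_members: dict[str, int], stakers: dict[str, int]) -> dict[str, int]:
    """Micropayments per account after one finalized trade."""
    groups = {"board": board, "data_members": data_members, "stakers": stakers}
    payouts: dict[str, int] = {}
    for role, weights in groups.items():
        role_amount = sale_amount * ROLE_BPS[role] // 10_000
        for who, amount in pro_rata(role_amount, weights).items():
            # One account can hold several roles, e.g. share data and also stake.
            payouts[who] = payouts.get(who, 0) + amount
    return payouts
```

Shares are floored to whole token units. Any rounding dust, and the share of a role with no participants, therefore stays undistributed, and a real design would need an explicit rule for it.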

Decentralized Board Members

As introduced above, Data Coalitions are formed and run by a virtual board. Board Members have additional governance responsibilities: they can mediate and provide conflict resolution, negotiate terms of sale of data with real-world entities and other Data Coalitions, etc. Board Members have to bond $ITHEUM tokens into the Data Coalition to ensure they have a “stake in the game”, after which they can stand for election and be voted in by other Board Members.

To prevent hard centralization, Board Members will serve a fixed term (if required by the Members; it's not mandatory), after which they will need to rotate out and be replaced by a new board. Board Members earn a share of the sale of the data housed within the Data Coalition (paid out in $ITHEUM tokens). They can also lose $ITHEUM tokens if they don’t represent their members’ best interests or if they conduct an incorrect sale of data (i.e. a trade that breaks the terms of sale contract) that then needs to be revoked, with damage compensation paid to both the sellers and buyers.

Members will be able (though not required) to participate in ongoing periodic votes to express their satisfaction with the Board's performance. If satisfaction rates are low across multiple voting points, this will trigger a board rotation clause.
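The board rotation clause can be modeled as a sliding window over recent voting points. In this hypothetical sketch, "low satisfaction" means an approval ratio below 50%, and rotation triggers after 3 consecutive low votes. Both values and the `SatisfactionTracker` name are assumptions, because the whitepaper does not define them.

```python
from collections import deque


class SatisfactionTracker:
    """Hypothetical board rotation clause: trigger rotation when member
    satisfaction stays low for several consecutive voting points."""

    def __init__(self, threshold: float = 0.5, consecutive: int = 3):
        self.threshold = threshold                  # assumed minimum approval ratio
        self.recent = deque(maxlen=consecutive)     # True = a low-satisfaction vote

    def record_vote(self, approve: int, disapprove: int) -> bool:
        """Record one voting point; return True if board rotation is triggered."""
        total = approve + disapprove
        ratio = approve / total if total else 1.0   # no votes cast: treat as satisfied
        self.recent.append(ratio < self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)
```

A single satisfied vote resets the streak, because it enters the window as `False`.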

Other Notable Properties of Data Coalitions

- Data Coalitions enable "collective bargaining power" for end-users and will be a viable solution to the problem of centralized enterprise data silos that don't provide any value to the Data Creator.

- DAO-based governance and decision-making will be used to manage the ongoing operations of the Data Coalition.

- They will also be delegated custodians of “Data Vaults” and can autonomously trade high-value data from the Vaults by attaching it to the other datasets within the Data Coalition.

- They will also be able to link with the "Trusted Computation Framework" and facilitate the privacy-preserving compute-to-data technology handshake, where 3rd parties will be able to run algorithms on the datasets housed within the Coalition without leaking the identity or private data of the original Data Creators.

- They can efficiently facilitate "micropayments" to all its members in return for data. For example, a Data Coalition can have 1000 "members" who contribute data for bulk sales. After each sale, the 1000 members will be sent a share of the earnings via micropayments. Traditional banking payment systems are unable to handle these kinds of micropayments due to the overhead of fees - but blockchain payment mechanisms will be able to facilitate this well.

- In the future, Data Coalitions will also trade with other Data Coalitions and connect to autonomous machines via a machine-to-machine interface, e.g. wearables or EVs that join Coalitions directly and participate in the data economy.

- They can allow (if voted for by members) "anonymous cohort analysis" of data trends via tooling provided by our "Data CAT" feature. For example, there may be a Data Coalition set up for the collection of "fitness and demographic data". As a Data Creator, you can align with it, provide your wearable data from Fitbit or Garmin, and append specific demographic data from your Data Vault (e.g. Gender, Age, Ethnicity) to enhance its value. Anonymous cohort analysis can then be enabled to visualize the type of data under the control of this Data Coalition. This makes the data more appealing to buyers, who can preview it in more detail before committing to buy at a premium price.

Anonymous cohort analysis via our Data CAT integration

Cohort analysis analytics enabled on the Gamer Passport Data Coalition

- Data Coalitions will be able to mandate minimum data format and quality requirements for members who join to contribute data. For example, buyers who are interested in Health and Genomics data for automated ingestion into their systems will prefer a more globally interoperable standard like FHIR (Fast Healthcare Interoperability Resources). Data Coalitions will be able to mandate this as a minimum requirement for their members and ensure that the data being contributed is in the FHIR JSON format.

- Anyone can start a Data Coalition, but it will take some effort to progress it into an "operational mode" and attract new data to come under its control. E.g. if you start a new Data Coalition, you will need to bring in credible Board Members who will need to bond $ITHEUM tokens for their term duration. Once you have filled the minimum requirements for Board Members, the Data Coalition enters "operational mode" and can begin accepting data and $ITHEUM token stakes from regular users (Members). But being in "operational mode" is not sufficient to attract the best quality data. All details about Data Coalition Board Members are made public, so you must have some commercial experience in data-related matters to give you credibility. Any "slashes or disputes" arising from your Data Coalition's trade activity are also made public. This is very similar to how Delegated Proof of Stake validator selection works, where you can stake your tokens after doing some due diligence on the validator's reputation and past performance. So for a Data Coalition to be successful, it will need to be in "operational mode" and have some credible Board Members whilst maintaining ongoing trade operational credibility.
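Anonymous cohort analysis means showing buyers aggregate figures per cohort without exposing any member's individual record. One common safeguard is to suppress cohorts below a minimum size. This safeguard is assumed here and is not taken from the whitepaper. The threshold of 5, the field names, and the `cohort_summary` helper are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

MIN_COHORT_SIZE = 5  # hypothetical threshold: smaller cohorts are withheld


def cohort_summary(records: list[dict], group_by: str, metric: str) -> dict:
    """Aggregate a metric per cohort without exposing individual records.
    Cohorts smaller than MIN_COHORT_SIZE are suppressed so that a single
    member's value cannot be read back out of the preview."""
    cohorts = defaultdict(list)
    for record in records:
        cohorts[record[group_by]].append(record[metric])
    return {
        key: {"members": len(values), "mean": round(mean(values), 1)}
        for key, values in cohorts.items()
        if len(values) >= MIN_COHORT_SIZE
    }
```

For example, a "fitness and demographic data" Coalition could preview average daily steps per age band. A band with only one contributor would simply not appear.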

This feature is currently in the "Detailed Design & Prototyping" stage.

Personal Data Proofs

In the decentralized DApps ecosystem (DeFi, DAOs, or any other use cases enabled via DApps), Smart Contracts enable agreements between parties to execute based on "indisputable truths". For example, in a DeFi exchange, a trade transaction between two parties can happen backed by on-chain state data that confirms the transaction can indeed proceed (e.g. party A has the tokens to transfer and party B is entitled to receive the tokens based on some pre-agreed condition). Smart contracts enable trustless interaction between multiple counterparties. Traditionally, transactions such as these in finance require a trusted intermediary such as a bank to coordinate them.

But Smart Contracts do have a key limitation: the data they can access on-chain to facilitate such trustless behavior is very limited. We only have data on transaction history and other on-chain information (e.g. voting outcomes). For more complex trustless applications to migrate to the blockchain, we need "richer, real-world data" to flow into smart contracts. Chainlink provides this real-world data via its Decentralized Oracle Networks and enables the technology of "hybrid smart contracts". These hybrid smart contracts use real-world oracle data to make decisions and carry out transactions between parties. Chainlink enables smart contracts to tap into real-world data like exchange price feeds, weather, or sensor data, and allows contracts to mediate transactions between multiple parties based on the outcomes of smart contract code execution backed by real-world data.

But if blockchain technology is to branch out and handle many more mainstream use cases, we will need a wider variety of real-world data to flow into the smart contract world. One type of data in particular is not available today (via Chainlink or any other oracle-based network) but is crucial for richer blockchain use cases: Personal Data.

As described in the section above titled "Types of Direct Trade", the Itheum platform enables Data Creators to place their personal data for direct peer-to-peer on-chain trade. One of the underlying qualities of this type of trade is that a "proof of the personal data" is stored on-chain. A Data Consumer then uses this proof to verify that the data has not been tampered with before the trade happens.

These features enabled by the Itheum Data DEX can also be used as a "Personal Data Proof" by smart contracts to execute specific rules that enable transactions on-chain to happen that are backed by personal data.

This revolutionary concept is best explained with an example:

Home Loan Application - Real World Scenario

Jack wants to buy a house. He heads over to his bank and requests a loan. The bank needs to carry out a detailed assessment to make sure Jack is eligible for the loan (i.e. can he make repayments, and what is the risk that he will default?). The bank does a credit check on Jack and finds that his credit history is good. The bank's home loan broker then carries out an extended personal due-diligence assessment. Jack is asked to provide his financial history, income history, and other details about his spending habits, family, dependents, and work history. This information is collected using a detailed loan application form that the bank has prepared as a standard document. Once Jack fills in the form, he must attest that it holds "true" information (via a legally binding statutory declaration). The bank assesses the details on the form and decides to give Jack the loan. Jack uses the loan and buys the house.

Now let's imagine that we want to port this entire scenario to the blockchain and remove as many intermediaries as we can (the bank, the credit history provider, the home loan broker, etc.).

Home Loan Application - DeFi Scenario

Jack wants to buy a house. He visits a DeFi Lending DApp run as a DAO (modeled after a real-world credit union but fully decentralized) that allows borrowing based on voting-based approval and on-chain evidence of collateral (e.g. other assets that Jack owns in the same DeFi DApp or in other DApps). Jack requests a loan and the DApp begins its automated due-diligence process. As part of this process, the DApp invokes another DeFi DApp's smart contracts that allow for deep credit history checks (possibly via deep index lookups or via The Graph for more advanced lookups). The credit checks come back positive for Jack. The DeFi Lending DApp then refers Jack to an application form built and run using Itheum's Data CAT. The form includes all the due-diligence questions that banks ask during loan applications and that an applicant must answer directly (e.g. What is your employment history? What are your spending habits? What are your family details and other financial responsibilities?). In short, it covers all the personal information needed to make an informed decision about borrowing risk that cannot be obtained through blockchain lookups. Jack completes the form and his responses are stored inside the Itheum Data DEX as a "Data Pack". Jack then advertises this Data Pack on-chain; the proof of his responses is published on-chain and sent to the DeFi Lending DApp as a "Personal Data Proof" that attests to his answers. The DeFi Lending DApp now has all the information it needs to take his application to the final DAO voting panel. The members of the DAO have all the attested information and proof they need to decide on the home loan (collateral confirmation, positive credit history, due-diligence responses from the application form, and on-chain proof). The DeFi Lending DApp is satisfied with the application and approves the loan, and Jack receives the money.
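The lending DApp's final decision can be pictured as a single rule that combines every input from this scenario. This Python sketch is purely illustrative: the `LoanApplication` fields, the 50% collateral ratio, and the 10-vote quorum are hypothetical parameters, not part of any real lending protocol.

```python
from dataclasses import dataclass


@dataclass
class LoanApplication:
    amount: float
    collateral_value: float     # on-chain evidence of collateral
    credit_check_passed: bool   # result from a hypothetical credit-history DApp
    proof_verified: bool        # Personal Data Proof matched the form responses
    votes_for: int              # DAO voting panel
    votes_against: int


MIN_COLLATERAL_RATIO = 0.5  # hypothetical policy parameter
QUORUM = 10                 # hypothetical minimum number of DAO votes


def approve(app: LoanApplication) -> bool:
    """Approve only if every automated check passes and the DAO vote carries."""
    if app.collateral_value < MIN_COLLATERAL_RATIO * app.amount:
        return False
    if not (app.credit_check_passed and app.proof_verified):
        return False
    total_votes = app.votes_for + app.votes_against
    return total_votes >= QUORUM and app.votes_for > app.votes_against


jack = LoanApplication(amount=400_000, collateral_value=250_000,
                       credit_check_passed=True, proof_verified=True,
                       votes_for=14, votes_against=3)
print(approve(jack))  # True
```

The automated checks run before the vote is counted, so the DAO only ever decides on applications whose collateral, credit history, and Personal Data Proof have already been confirmed.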

Itheum provides the complete platform for Personal Data Proofs that can be used for on-chain decision-making. Think of Itheum as the next layer of data inflow into the blockchain world: core blockchains provide transaction data, Chainlink provides real-world event data, and Itheum provides Personal Data Proofs direct from the end user. Used together, they allow us to replicate many real-world scenarios using smart contracts and remove redundant intermediaries.

Data Vault

You can store highly sensitive personal data in your Data Vault: for example, details about your gender, race, sexual preference, prior health conditions, and financial history.

This sensitive data is encrypted using your own encryption keys (no one else can unlock and view it) and stored in decentralized, redundant storage (no one else can destroy it).

You can then choose to append data from your vault to the regular data you trade on the Data DEX. As this gives the “dataset” more context, it becomes more valuable to the buyer — and you will earn more $ITHEUM tokens.

Because the data is encrypted with the user's private key, trading it requires keys to change hands between buyer and seller, and for a frictionless UX this must happen with minimal manual involvement from either party. For this purpose, "symmetric key pools" (decentralized middleware) are used to enforce secure authorization between seller and buyer in real time. Symmetric key pools will operate using a modified proof-of-authority mechanism that aims for the highest level of security while keeping a balanced degree of decentralization (please note that the specific technical design is still being finalized).
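Since the key-pool design is still being finalized, the following is only a sketch of one way threshold-style authorization could gate key release, loosely in the spirit of proof-of-authority. A pool releases a dataset's symmetric key to a buyer only after at least `THRESHOLD` known authority nodes attest to the purchase. The node names, the threshold, and the use of HMAC tags in place of real digital signatures are all assumptions.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Optional

# Hypothetical authority nodes. HMAC tags stand in for the digital signatures
# real nodes would produce; a real pool would verify public-key signatures.
AUTHORITY_KEYS: Dict[str, bytes] = {f"node-{i}": secrets.token_bytes(32) for i in range(5)}
THRESHOLD = 3  # hypothetical: attestations required before a key is released


def attest(node: str, purchase_id: str) -> bytes:
    """An authority node attests that it has confirmed the purchase on-chain."""
    return hmac.new(AUTHORITY_KEYS[node], purchase_id.encode(), hashlib.sha256).digest()


class SymmetricKeyPool:
    """Holds dataset keys and releases one only after a quorum of valid attestations."""

    def __init__(self) -> None:
        self._keys: Dict[str, bytes] = {}

    def deposit(self, dataset_id: str, key: bytes) -> None:
        self._keys[dataset_id] = key

    def release(self, dataset_id: str, purchase_id: str,
                attestations: Dict[str, bytes]) -> Optional[bytes]:
        # Count only known nodes whose tag matches this exact purchase.
        valid = sum(
            1 for node, tag in attestations.items()
            if node in AUTHORITY_KEYS
            and hmac.compare_digest(tag, attest(node, purchase_id))
        )
        return self._keys.get(dataset_id) if valid >= THRESHOLD else None


pool = SymmetricKeyPool()
data_key = secrets.token_bytes(32)
pool.deposit("pack-001", data_key)
votes = {n: attest(n, "purchase-42") for n in ("node-0", "node-1", "node-2")}
print(pool.release("pack-001", "purchase-42", votes) == data_key)            # True
print(pool.release("pack-001", "purchase-42", {"node-0": votes["node-0"]}))  # None
```

Because attestations are bound to a specific purchase ID, a quorum collected for one sale cannot be replayed to unlock the key for another.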

Other Notable Properties of Data Vaults

- Data Vaults are designed to enable true data sovereignty through a "proof of ownership" architecture. The detailed technical design of Data Vaults will be released in our "Yellow Paper", but at a high level, all datasets that include sensitive data will be encrypted with keys that belong solely to the Data Creator. If a copy of the data is given to a new buyer, decentralized middleware will mediate the handover of data access rights, with encryption handled behind the scenes to keep the UX seamless. In the case of a Data NFT, however, where actual ownership of a data asset can move from one party to another, the "proof of ownership" will also be transferred. This process will also be handled by the decentralized middleware service.

- Data Vaults will enable users to "opt out" of the system if they no longer want to share their data (e.g. as required under GDPR). This is achieved through the above-mentioned "proof of ownership" protocol, under which the unique decryption key can be "burned". Burning the key effectively untethers all decentralized copies of the data (e.g. in IPFS or elsewhere) from the Data Creator: without its decryption key, the data is just a blob of scrambled text with no identity or utility attached to it. There are, of course, challenges we still need to solve. For example, what happens if you sell your data and then change your mind after the sale? Do we allow a data sale to be recalled? If so, how can we ensure that a user can completely opt out?
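Burning a key so that every replicated copy becomes unreadable is a technique generally known as crypto-shredding. The sketch below shows the idea with a deliberately simple SHA-256 counter-mode keystream so that it runs on the Python standard library alone. This toy cipher is an assumption for illustration only and is not secure; a real vault would use a vetted authenticated cipher such as AES-GCM. The `DataVault` class and its methods are hypothetical.

```python
import hashlib
import secrets
from typing import Optional, Tuple


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy SHA-256 counter-mode keystream. Illustration only, not a vetted cipher."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR with the keystream; the same call encrypts and decrypts."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, nonce, len(data))))


class DataVault:
    def __init__(self) -> None:
        # The key is generated for, and held only by, the Data Creator.
        self._key: Optional[bytes] = secrets.token_bytes(32)

    def _require_key(self) -> bytes:
        if self._key is None:
            raise PermissionError("key burned: vault data is permanently unreadable")
        return self._key

    def seal(self, plaintext: bytes) -> Tuple[bytes, bytes]:
        nonce = secrets.token_bytes(16)  # fresh nonce per message
        return nonce, xor_crypt(self._require_key(), nonce, plaintext)

    def unseal(self, nonce: bytes, ciphertext: bytes) -> bytes:
        return xor_crypt(self._require_key(), nonce, ciphertext)

    def burn_key(self) -> None:
        """Opt-out: discard the only key, orphaning every replicated ciphertext."""
        self._key = None


vault = DataVault()
nonce, blob = vault.seal(b"blood_type=O+")
print(vault.unseal(nonce, blob))  # b'blood_type=O+'
vault.burn_key()
# Copies of (nonce, blob) on IPFS or elsewhere are now just scrambled bytes.
```

This only holds if no other copy of the key survives, which is exactly why handing access to buyers raises the open questions listed above.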

This feature is currently in the "Detailed Design & Prototyping" stage.

Data Streams

You can let buyers subscribe to “personal data streams”. Unlike the one-off datasets that can also be purchased on the Data DEX, data streams continue to feed data once a “subscription” is purchased.

Data streams are a more powerful way for Data Consumers to subscribe to longitudinal datasets that grow over time: for example, health and wellness data (activity, sleep quality, blood pressure) or financial activity (spending habits).

When paired with context-rich data from your “Data Vault”, data streams become a valuable and steady source of passive income, earned in exchange for sharing access rights to your personal data.
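A subscription can be modeled as a time window over an append-only stream. In this hypothetical sketch, a subscriber may read only records timestamped between the purchase time and the expiry time (and no later than the current time). Whether subscribers should also see history from before their purchase is a design choice the whitepaper does not specify; the classes here are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class DataStream:
    """Hypothetical longitudinal stream of (timestamp, reading) records."""
    records: List[Tuple[int, float]] = field(default_factory=list)

    def append(self, ts: int, value: float) -> None:
        self.records.append((ts, value))


@dataclass
class Subscription:
    start: int  # unix time the subscription was purchased
    end: int    # unix time it expires

    def readable(self, stream: DataStream, now: int) -> List[Tuple[int, float]]:
        """Records the subscriber may read at time `now`."""
        if now < self.start:
            return []
        cutoff = min(now, self.end)
        return [(ts, v) for ts, v in stream.records if self.start <= ts <= cutoff]


sleep = DataStream()
sub = Subscription(start=100, end=300)
for ts, hours in [(50, 7.0), (150, 6.5), (250, 8.2), (350, 7.7)]:
    sleep.append(ts, hours)
print(sub.readable(sleep, now=400))  # [(150, 6.5), (250, 8.2)]
```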

This feature is currently in the "Research:Labs" stage.

Trusted Computation Framework

As personal datasets under the control of Data Coalitions grow over time, certain end use cases may require access to highly sensitive, identifiable data. These use cases will often provide the highest “payout” for data usage (and are therefore considered high-value use cases). In such situations, a Trusted Computation Framework can be used to ensure computation is handled off-chain with tamper-proof integrity. The Data Coalition will coordinate these computation jobs on-chain (possibly with coordination assistance from Chainlink’s Attested Oracles).

Personal data traded on the Data DEX is never stored on-chain; only hash values are stored, to ensure the integrity of traded datasets. However, in certain advanced use cases where Data Coalitions manage the data of multiple users, encrypted personal metadata will be stored on-chain. In some cases, privacy regulations prevent this data from being put on-chain even when encrypted, especially if the blockchain network spans multiple geographies. Off-chain execution is then the only option for processing the data, and the Trusted Computation Framework can be used to localize computation so that data storage and processing comply with all data sovereignty regulations.

The Trusted Computation Framework is tethered to the “Regional Decentralization Hub” and is our “Compute-to-Data” solution for processing highly sensitive data in highly regulated environments.
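The general compute-to-data pattern can be sketched as a node that keeps raw records local and returns only the results of approved aggregate jobs. This is a hypothetical illustration: the job allowlist, the minimum cohort size, and the `ComputeNode` class are assumptions, and the sketch does not model the tamper-proof execution (e.g. attested hardware) that a real Trusted Computation Framework would need.

```python
import statistics
from typing import Callable, Dict, List

# Hypothetical allowlist of aggregate jobs a Data Coalition has approved.
APPROVED_JOBS: Dict[str, Callable[[List[float]], float]] = {
    "mean": statistics.mean,
    "median": statistics.median,
}
MIN_COHORT = 5  # hypothetical: refuse jobs over too few people, to limit re-identification


class ComputeNode:
    """Holds raw data locally; only aggregate results ever leave the node."""

    def __init__(self, readings: List[float]) -> None:
        self._readings = readings  # never returned directly

    def run(self, job: str) -> float:
        if job not in APPROVED_JOBS:
            raise PermissionError(f"job {job!r} is not approved")
        if len(self._readings) < MIN_COHORT:
            raise PermissionError("cohort too small")
        return APPROVED_JOBS[job](self._readings)


node = ComputeNode([120.0, 135.0, 128.0, 142.0, 118.0, 131.0])
print(node.run("mean"))  # 129.0
```

The Data Consumer sends the job to the data rather than pulling the data to the job, which is what allows storage and processing to stay inside one jurisdiction.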

This feature is currently in the "Research:Labs" stage.

Regional Decentralization Hubs

Highly sensitive data, such as medical data from hospitals, personal health records, financial transactions, or credit history, is protected by regional or local sovereignty laws. To unlock the trade of this data, we cannot use fully decentralized global storage or compute. For example, we may want to limit trade, storage, and compute on data to the EU region so that it complies with laws like GDPR, whilst still avoiding a central point of attack on these data and compute resources.

Regional Decentralization Hubs are a novel idea we are exploring: a form of regional decentralization that balances legal sovereignty laws with personal data sovereignty.
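One way to picture regional decentralization is a placement rule that spreads replicas across several independent nodes inside a jurisdiction and refuses to place data outside it. The node registry, region labels, and `place_replicas` function below are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Node:
    node_id: str
    region: str  # jurisdiction the node operates in, e.g. "EU"


# Hypothetical registry of independently operated nodes.
NODES = [Node("eu-1", "EU"), Node("eu-2", "EU"), Node("eu-3", "EU"),
         Node("us-1", "US"), Node("ap-1", "APAC")]


def eligible_nodes(data_region: str) -> List[Node]:
    return [n for n in NODES if n.region == data_region]


def place_replicas(data_region: str, replicas: int, rng: random.Random) -> List[Node]:
    """Spread replicas over distinct in-region nodes; fail rather than leave the region."""
    candidates = eligible_nodes(data_region)
    if len(candidates) < replicas:
        raise RuntimeError(f"not enough {data_region} nodes for {replicas} replicas")
    return rng.sample(candidates, replicas)


chosen = place_replicas("EU", 2, random.Random(0))
print(sorted(n.region for n in chosen))  # ['EU', 'EU']
```

Failing loudly when a region lacks enough nodes reflects the trade-off described above: legal sovereignty takes priority, but within the region no single node holds all copies.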

This feature is currently in the "Research:Labs" stage.

The Data Metaverse and NFMe ID Avatar Technology

NFMe IDs (Non-Fungible Me IDs) are your Data Avatars in the Metaverse. Join the Data DEX, complete a "seed profiler job", and have your very own NFMe ID minted and stored in your wallet. NFMe IDs have "personal data categories" (PDCs) linked to them; these PDCs feed data into the NFMe ID, and this data is secured in personal Data Vaults. Example PDCs are social, financial, historical, internal, and external.

Apps built on Itheum's Data CAT feed data into the PDCs. These apps are run by Itheum as well as by other organizations that want to generate high-value data and incentivize you to give them access to your data. Itheum's Personal Data Adaptors can also discover and harvest on-chain and off-chain personal data, lock it inside your Data Vault, and link it to your NFMe ID.

As more data is added to the NFMe ID avatar, its "data signature" changes and more "accessories", "evolution traits", and "skins" become available to it. This is akin to purchasing gaming accessories and traits to augment your in-game NFT characters. Your NFMe ID is organic and evolves like a human as more data is added to it. You can apply "skins" over your base NFMe ID avatar, so you can pick a different visual appearance for your NFMe ID avatar based on the situation you want to use it in or the mood you want to convey (e.g. a photorealistic 3D skin, a "cartoonish" skin, or even a completely imaginary robotic skin).
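The evolving "data signature" could be modeled as a fingerprint over the record hashes linked to each PDC, with items unlocking as the total amount of linked data crosses thresholds. Everything here is a hypothetical sketch: the hashing scheme, threshold values, and item names are assumptions, and the raw data itself would stay in the Data Vault.

```python
import hashlib
import json
from typing import Dict, List

# Hypothetical thresholds: total linked records needed to unlock each item.
TRAIT_THRESHOLDS = [(10, "accessory:badge"), (50, "trait:evolved"), (100, "skin:robotic")]


def data_signature(pdcs: Dict[str, List[str]]) -> str:
    """Short fingerprint over the record hashes linked to each PDC.

    Adding a record changes the signature; dictionary key order does not.
    """
    canonical = json.dumps(pdcs, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def unlocked_traits(pdcs: Dict[str, List[str]]) -> List[str]:
    """Every item whose threshold the avatar's total linked data has reached."""
    total = sum(len(record_hashes) for record_hashes in pdcs.values())
    return [item for threshold, item in TRAIT_THRESHOLDS if total >= threshold]


# Values are stand-ins for hashes of records kept in the Data Vault.
pdcs = {"social": [f"s{i}" for i in range(30)], "financial": [f"f{i}" for i in range(25)]}
before = data_signature(pdcs)
print(unlocked_traits(pdcs))  # ['accessory:badge', 'trait:evolved']
pdcs["historical"] = [f"h{i}" for i in range(50)]
print(data_signature(pdcs) != before, unlocked_traits(pdcs))
# True ['accessory:badge', 'trait:evolved', 'skin:robotic']
```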

What can I do with an NFMe ID?

- NFMe ID avatars are NFT tokens, so they support all NFT capabilities. But you can never completely sell your NFMe ID; you can only lease it to others, who can then use it to access your data. It is an "authorization key" you provide to a 3rd party for gated entry to web3 and metaverse domains, and it can also represent and supply a personal data footprint to 3rd party applications (if you choose to allow it). In this way, NFMe ID avatars are soulbound.

- Explore Itheum's 3D Virtual Data Metaverse (called the Greenroom), a digital metaverse launchpad where you can manage your "accessories", "evolution traits", and "skins" and interact with other NFMe ID avatars.

- Participate in the governance of Data Coalition DAOs. These are bulk data trading DAOs that exist to serve the people and protect personal data. Stake to participate in existing Data Coalition DAOs and provide data curation and data quality assessment services, or give them access to your NFMe ID and other data assets and allow them to trade your data on your behalf as part of a larger bulk dataset.

- "Slice out" certain segments of your data (e.g. data from a specific app or category), mint them into Data NFTs, and trade these in NFT marketplaces.
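The "lease, never sell" rule can be sketched as a token whose transfer operation always fails, while its owner can grant time-limited access leases. This is a hypothetical Python model, not an on-chain contract; the `NFMeID` class and its expiry-based leases are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class NFMeID:
    """Hypothetical soulbound avatar: ownership is fixed, access can only be leased."""
    owner: str
    leases: Dict[str, int] = field(default_factory=dict)  # lessee -> expiry (unix time)

    def transfer(self, new_owner: str) -> None:
        raise PermissionError("NFMe IDs are soulbound and cannot be sold or transferred")

    def lease(self, caller: str, lessee: str, expires_at: int) -> None:
        if caller != self.owner:
            raise PermissionError("only the owner can lease this NFMe ID")
        self.leases[lessee] = expires_at

    def can_access(self, party: str, now: int) -> bool:
        """The owner always has access; lessees only until their lease expires."""
        return party == self.owner or self.leases.get(party, -1) > now


me = NFMeID(owner="jack")
me.lease("jack", "research-app", expires_at=1_000)
print(me.can_access("research-app", now=500))    # True
print(me.can_access("research-app", now=1_500))  # False
```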

In the web2 world, your personal data is exploited by 3rd parties and large corporations. In Itheum's Data Metaverse, we give you true ownership of your data via your own Data Avatar... welcome to the era of the NFMe ID Avatar.

NFMe ID Interoperability

An NFMe ID is your virtual data avatar, so it should ideally be interoperable with other digital metaverses, letting you explore these virtual worlds and ecosystems and interact with more digital assets. Itheum will have its own Virtual Data Metaverse (called the Greenroom), but we are also looking at methods to "port" our NFMe ID avatars into other virtual worlds.

The concept of interoperable metaverse avatars is in the very early stages of development, but promising progress is being made by projects like WorlWideWeb3, which will allow external NFT avatars to be ported into their virtual world. We will actively track progress in this new space by following the work of organizations like the Open Metaverse Interoperability Group, and we will also contribute to creating open standards for future metaverse interoperability.

Itheum may also eventually become the world's 1st fully decentralized open metaverse backed by true data ownership - a vision we hold close to our hearts.

This feature is currently in the "Research:Labs" stage.

Technology Philosophy

The following sections provide an overview of the underlying technology principles that we aim to use as the foundation for our evolving solution design and architecture. It covers elements like multi-chain design, cross-chain communication, and token design principles.

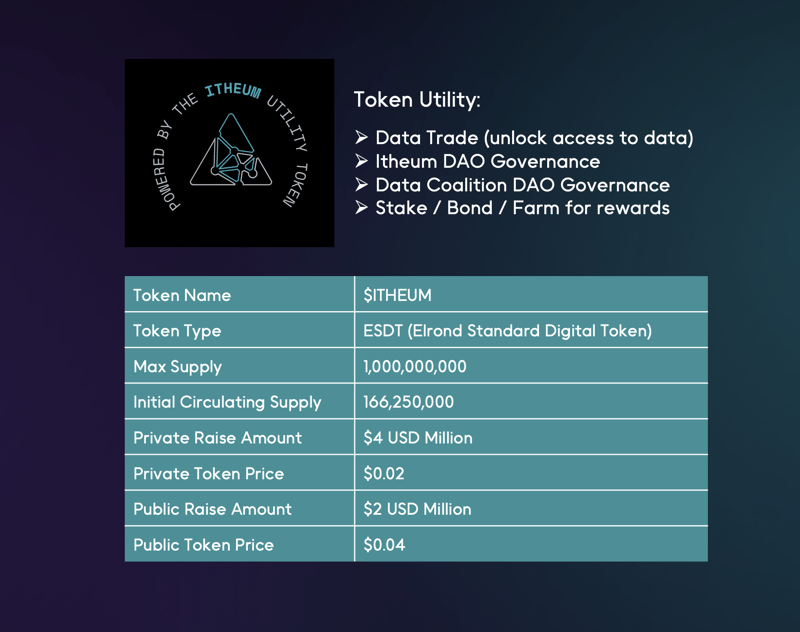

Multi-Chain Strategy