Earlier this year I wrote about a NestJS API packed into an AWS Lambda. If you haven't read it, feel free to check it out to learn how to deploy an API quickly using the Serverless Framework. This article is a follow-up to that one, and it explores the famous "cold start" issue with Lambda functions, particularly in the case of a so-called mono-lambda, or fat Lambda.

To measure and demonstrate cold starts, we will hit the Songs API with a variable load of requests for a couple of minutes. Then we'll briefly analyze the results by looking at the API Gateway Latency metric and other useful Lambda metrics, such as Duration, Init Duration and Concurrent Executions.

What is a cold start?

There are already plenty of articles explaining this, so I'll keep it short and use the image below, taken from AWS:

Bottom line: your Lambda will be a lot slower the first time it runs in a new execution environment than on the invocations that follow on an already "warm" instance. This means that even if most requests are processed really fast, those hit by a cold start will be noticeably slower.
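One way to observe this from inside your own code: anything at module scope runs once per execution environment, so module-level state can tell a cold invocation from a warm one. Below is a minimal TypeScript sketch under that assumption; `recordInvocation`, `InvocationInfo` and the handler are hypothetical names for illustration, not part of any AWS library.

```typescript
// Code at module scope runs once per execution environment, i.e. during a cold start.
const environmentStartedAt = Date.now();
let invocationCount = 0;

interface InvocationInfo {
  coldStart: boolean; // true only for the first invocation in this environment
  invocationNumber: number; // how many invocations this environment has served
  environmentAgeMs: number; // time since the module was first loaded
}

// Updates the per-environment state (hypothetical helper).
export function recordInvocation(): InvocationInfo {
  invocationCount += 1;
  return {
    coldStart: invocationCount === 1,
    invocationNumber: invocationCount,
    environmentAgeMs: Date.now() - environmentStartedAt,
  };
}

// Hypothetical Lambda handler that logs whether this invocation paid the cold start cost.
export const handler = async (_event: unknown): Promise<InvocationInfo> => {
  const info = recordInvocation();
  console.log(JSON.stringify(info));
  return info;
};
```

Each execution environment reports `coldStart: true` exactly once, so counting those log entries gives a rough count of cold starts.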

How did we come to this?

We've already been running one project in production with more than 100 Lambdas written in TypeScript. When the second project came along, we wanted to try something different: develop an API using NestJS, a framework we hadn't worked with before but had heard good things about.

Since we relied on CloudWatch to monitor our APIs, the usual approach we saw in tutorials, a single http event with method `any` and path `/{proxy+}`, wouldn't work for us. We wanted detailed API monitoring per endpoint, so at first we did something like this:

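```yaml
# ...
functions:
  # One function per endpoint, but every function points to the same handler (the whole NestJS app)
  getSongs:
    handler: dist/lambda.handler
    events:
      - http:
          method: get
          path: songs
  getOneSong:
    handler: dist/lambda.handler
    events:
      - http:
          method: get
          path: songs/{id}
# ...
```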

As you can see, there's a function per endpoint, but each function actually points to the whole NestJS app. Very soon we had something to see on our monitoring dashboard: things were getting pretty slow, because most requests were spinning up a new Lambda execution environment. With every endpoint as a separate function, the traffic was spread thin across many functions, and each of them paid its own cold starts. And the application wasn't even that big yet.
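To make that reasoning concrete, here's a toy model in TypeScript. It's a sketch under heavy simplifying assumptions, not how Lambda actually manages environments: each function keeps at most one warm environment, requests never overlap, and an idle environment is reclaimed after a fixed timeout. AWS doesn't document that timeout, so `idleTimeoutMs`, the traffic pattern and all names here are hypothetical.

```typescript
// Toy model (not AWS's real behavior): one warm environment per function,
// reclaimed after `idleTimeoutMs` without traffic; requests never overlap.
interface SimulatedRequest {
  atMs: number; // arrival time
  endpoint: string; // e.g. "GET /songs"
}

function countColdStarts(
  requests: SimulatedRequest[],
  functionFor: (endpoint: string) => string, // maps an endpoint to the Lambda serving it
  idleTimeoutMs: number,
): number {
  const lastSeen = new Map<string, number>();
  let coldStarts = 0;
  for (const { atMs, endpoint } of [...requests].sort((a, b) => a.atMs - b.atMs)) {
    const fn = functionFor(endpoint);
    const previous = lastSeen.get(fn);
    if (previous === undefined || atMs - previous > idleTimeoutMs) {
      coldStarts += 1; // no warm environment left for this function
    }
    lastSeen.set(fn, atMs);
  }
  return coldStarts;
}

// Hypothetical traffic: one request every 4 minutes, rotating over 3 endpoints,
// with a hypothetical 10-minute idle timeout.
const endpoints = ["GET /songs", "GET /songs/{id}", "POST /songs"];
const traffic: SimulatedRequest[] = Array.from({ length: 30 }, (_, i) => ({
  atMs: i * 4 * 60_000,
  endpoint: endpoints[i % endpoints.length],
}));

const perEndpoint = countColdStarts(traffic, (e) => e, 10 * 60_000); // 30: every request is cold
const monoLambda = countColdStarts(traffic, () => "api", 10 * 60_000); // 1: only the first request
```

The same trickle of traffic that keeps a single function warm leaves each per-endpoint function idle for 12 minutes between requests, which in this model means a cold start every time.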

We started looking for solutions to the cold start problem. A pretty common one was to use libraries or workarounds that "warm up" the Lambdas with a scheduled ping. A ping only keeps warm as many environments as it invokes at once, so a burst of concurrent traffic still triggers cold starts; you can read more on why this approach cannot guarantee a warm Lambda execution in AWS: Lambda execution environments. However, if you're interested in experimenting with this, there's a Serverless Framework plugin called Serverless Plugin Warmup.
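If you do try the plugin, the handler should recognize the ping and return immediately. A sketch, assuming the plugin's default payload `{ "source": "serverless-plugin-warmup" }` as described in its README (the payload is configurable, so check the version you use); `isWarmupEvent` and `withWarmup` are hypothetical helpers, not part of the plugin.

```typescript
// Assumes serverless-plugin-warmup's default payload: { source: "serverless-plugin-warmup" }.
interface WarmupEvent {
  source?: string;
}

const WARMUP_SOURCE = "serverless-plugin-warmup";

// Hypothetical helper: true only for the plugin's scheduled ping.
function isWarmupEvent(event: unknown): boolean {
  return (
    typeof event === "object" &&
    event !== null &&
    (event as WarmupEvent).source === WARMUP_SOURCE
  );
}

// Hypothetical wrapper around the real API handler (e.g. the NestJS app).
function withWarmup<E, R>(
  realHandler: (event: E) => Promise<R>,
): (event: E) => Promise<R | string> {
  return async (event: E) => {
    if (isWarmupEvent(event)) {
      // The ping only needs the environment to be alive; skip request handling.
      return "Lambda is warm!";
    }
    return realHandler(event);
  };
}
```

Returning early keeps each ping cheap, since it never reaches the app's routing.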

The second approach is more or less AWS's official recommendation: provisioned concurrency. But having to worry about scaling defeats the purpose of going serverless, right? More importantly, the API we were building was relatively new, and we didn't have usage patterns that would help us size the provisioned concurrency. And of course price was a big factor, as it would cost about the same as an average EC2 instance 💸

So we ended up deploying this mono-lambda, with a slight change to our Serverless configuration:

```yaml
# ...
functions:
  # A single function serves every endpoint of the NestJS app
  api:
    handler: dist/lambda.handler
    events:
      - http:
          method: get
          path: songs
      - http:
          method: get
          path: songs/{id}
      - http:
          method: post
          path: songs
# ...
```

This still creates all the API Gateway resources, so we keep detailed monitoring per endpoint, while minimizing the number of cold starts, since there's just one Lambda with multiple http events.
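The reason one function helps is that the expensive part, bootstrapping the NestJS app, happens once per execution environment and is then reused by every route. Here's a minimal sketch of that caching pattern. `bootstrap` is a hypothetical stand-in (in a real NestJS project it would create and initialize the app, e.g. with `NestFactory.create`, and wrap it in a serverless adapter), so this is not the project's actual `dist/lambda.handler`.

```typescript
// Hypothetical type standing in for the whole NestJS app.
type App = (path: string) => Promise<string>;

// Module scope survives between invocations in the same execution environment.
let cachedApp: Promise<App> | undefined;
let bootstrapCount = 0; // only here to make the caching observable

// Hypothetical stand-in for the expensive part of a cold start.
async function bootstrap(): Promise<App> {
  bootstrapCount += 1;
  return async (path: string) => `handled ${path}`;
}

// One handler for every http event: GET songs, GET songs/{id}, POST songs, ...
export async function handler(event: { path: string }): Promise<string> {
  if (cachedApp === undefined) {
    // First invocation in this environment: pay the bootstrap cost once.
    cachedApp = bootstrap();
  }
  const app = await cachedApp;
  return app(event.path);
}
```

With one function per endpoint, every function has to run this bootstrap separately; with the mono-lambda, a warm environment pays it once for all routes. In real code you might clear `cachedApp` when bootstrapping fails, so the next invocation can retry.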

Note: To get metrics per API Gateway resource (endpoint), the Detailed CloudWatch Metrics option needs to be enabled. It won't be enabled for the purposes of this article, as it incurs additional costs. More information can be found in API Gateway metrics and dimensions ℹ️

I just want to hit this API with some requests so we can see the results in the monitoring and observe the available metrics. With the Apache Bench tool, I can do just that in a few lines of bash. The load averages 10 requests per second for about 2 minutes. Let's quickly go over the results.

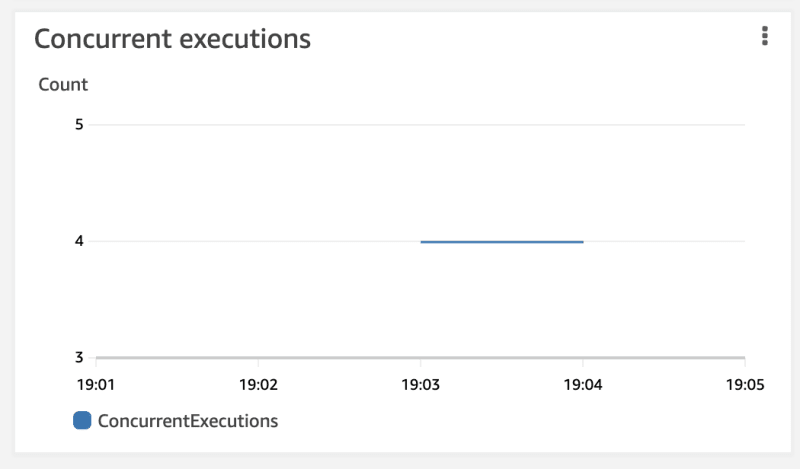

The diagram above shows the maximum latency, which is very high at the beginning due to the cold start. It drops quickly and drastically for subsequent requests, once the Lambdas are warm. So how many cold starts did we have during this small experiment? The metrics show 4 concurrent Lambda executions, which means 4 execution environments were initialized, so we got 4 cold starts.

For about 1000 requests, that's less than half a percent (4 / 1000 = 0.4%). For these slow requests, which took around 1.5 seconds, Lambda's Init Duration accounted for about 1 to 1.1 seconds. The rest was Lambda handler initialization plus function execution. The most expensive executions, each lasting under 400ms, are shown in the image below (Init Duration not included):
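If you'd rather count cold starts from logs than infer them from Concurrent Executions, Lambda's REPORT log line helps: for on-demand functions, it includes an `Init Duration` field only when the invocation ran in a newly initialized environment. Below is a sketch of a summarizer over REPORT lines, assuming the standard tab-separated REPORT format; `summarizeReports` is a hypothetical helper, and the sample lines are made up for illustration, not data from this experiment.

```typescript
// Assumes the standard Lambda REPORT line, where "Init Duration" only appears on cold starts.
interface ReportSummary {
  invocations: number;
  coldStarts: number;
  coldStartRatio: number;
  avgDurationMs: number; // handler Duration only; Init Duration is reported separately
  maxInitDurationMs: number;
}

function summarizeReports(lines: string[]): ReportSummary {
  let invocations = 0;
  let coldStarts = 0;
  let totalDurationMs = 0;
  let maxInitDurationMs = 0;
  for (const line of lines) {
    // "Duration" directly follows the request id, which avoids matching "Billed Duration".
    const duration = /RequestId: \S+\s+Duration: ([\d.]+) ms/.exec(line);
    if (!line.startsWith("REPORT") || !duration) continue; // skip START/END/app logs
    invocations += 1;
    totalDurationMs += Number(duration[1]);
    const init = /Init Duration: ([\d.]+) ms/.exec(line);
    if (init) {
      coldStarts += 1;
      maxInitDurationMs = Math.max(maxInitDurationMs, Number(init[1]));
    }
  }
  return {
    invocations,
    coldStarts,
    coldStartRatio: invocations === 0 ? 0 : coldStarts / invocations,
    avgDurationMs: invocations === 0 ? 0 : totalDurationMs / invocations,
    maxInitDurationMs,
  };
}

// Made-up sample: one cold invocation and one warm invocation.
const sample = [
  "START RequestId: a1 Version: $LATEST",
  "REPORT RequestId: a1\tDuration: 380.00 ms\tBilled Duration: 380 ms\tMemory Size: 1024 MB\tMax Memory Used: 150 MB\tInit Duration: 1050.00 ms",
  "REPORT RequestId: b2\tDuration: 20.00 ms\tBilled Duration: 20 ms\tMemory Size: 1024 MB\tMax Memory Used: 151 MB",
];
const summary = summarizeReports(sample);
```

For the sample, `summary` has 2 invocations, 1 cold start, an average Duration of 200 ms and a maximum Init Duration of 1050 ms. Applied to roughly 1000 invocations with 4 cold starts, `coldStartRatio` would come out around 0.004.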

The average request time was 70ms, which means those warm Lambdas were really doing their job. While this API probably isn't like anything you'd have in production (or is it?), it's still an interesting topic to discuss.

Conclusions

I would say that an API in a "fat" Lambda doesn't have to be terrible. A Lambda should definitely not be obese, but a chubby one can perform. This seems to be a good fit for an API up to a certain size, when you don't want to manage or worry about infrastructure. As the API grows, it can be split into microservices behind an API Gateway. And if performance is critical, the sweet spot might be scheduling provisioned concurrency once you understand your usage patterns.

Thanks for reading and feel free to leave your thoughts and experiences in the comments! 👋
