Introduction

Today, the cloud has opened up countless solutions and enabled us to think beyond what we once considered impossible. Looking back 20 years, the way PayPal launched, hosting its website on a local development machine by day while working on it by night, is a fascinating example of how far we have come thanks to the cloud.

So let's see how we can scale an application from 1 user, which is probably you, the developer, all the way up to 1 million users, and compare how to do it on the two most popular cloud providers today: Google Cloud Platform and Amazon Web Services.

Stage I: "You" to 1k Users

Let's imagine that you have just finished developing this amazing app called "ScaleUp" on your local machine. Friends and family have been testing it for a while now, and it seems stable enough to host somewhere in the cloud. Think of it as making your own computer accessible to everyone on the globe!

As of Q1 2020, Google Cloud infrastructure is available in 24 regions, the same number as Amazon's. You want to deploy your code and services to the region nearest to your users; let's assume they are in Europe, specifically in "London".

Solution Design

After hosting our beautiful web app in the chosen region on a VM instance that runs both a web server and a PostgreSQL database, we can point a domain name at the VM's external IP address so that traffic reaches it. The total setup is as follows:

Solution Setup

- 1 Web Server
  - [GCP] Compute Engine
  - [AWS] Elastic Compute Cloud (EC2)
  - Running Web App
  - Running PostgreSQL DB
- DNS for VM's External IP
  - [GCP] Cloud DNS
  - [AWS] Route 53

Stage II: >10k Users

Issues of "Stage I"

Now that we have moved into Stage II, with around 10K users, I start to experience some odd behavior and performance issues. I can group them into three types:

1. Single Instance Failure

Since I only have a single VM, many different errors can occur on that one instance: VM issues, web service issues, or database issues.

Whenever that happens, I need to either restart my services or reboot my VM, which means downtime for my whole application. This is what a single instance failure looks like.

2. Single Zone Failure

Imagine that my VM is hosted in zone "A" of the "London" region, and for some unlikely reason something happens to that zone. Since I don't have any backup instances in another zone, my whole application goes down again.

3. Scalability Issues

As my application grows, more users access it concurrently, which can cause inconsistent behavior.

There is little I can do to prevent that right now, since my single VM has no monitoring, no auto-healing, or anything similar.

Based on these issues, we can see that my current architecture is fundamentally flawed: it has a single point of failure, meaning that one failure takes my whole system down.
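A rough way to see why a single point of failure hurts is to treat availability as a probability. The sketch below assumes failures are independent and uses an illustrative 99% availability for each component; these figures are assumptions, not provider SLAs, and the function names are hypothetical. Components that must all be up multiply (series), while a redundant group is down only if every copy is down (parallel).

```python
from math import prod

MINUTES_PER_MONTH = 30 * 24 * 60


def series(*availabilities: float) -> float:
    """Availability when every component must be up (e.g. VM, web server and DB on one box)."""
    return prod(availabilities)


def parallel(availability: float, copies: int) -> float:
    """Availability when at least one of `copies` independent replicas must be up."""
    return 1 - (1 - availability) ** copies


# Illustrative assumption: VM, web server and database are each up 99% of the time.
one_box = series(0.99, 0.99, 0.99)
three_boxes = parallel(one_box, 3)  # three independent copies, e.g. one per zone

for label, availability in [("single VM", one_box), ("3 zonal copies", three_boxes)]:
    downtime = (1 - availability) * MINUTES_PER_MONTH
    print(f"{label}: availability {availability:.6f}, ~{downtime:.1f} min down per month")
```

Under these assumptions the single VM is down for roughly 21 hours a month, while three independent copies bring that to about a minute. Real failures are often correlated (a bad deploy hits every copy), so the real-world gain is smaller than this idealized number.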

Based on all of that, what we really need is a scalable and resilient solution. Scalable simply means that my application needs to work for anywhere from one user up to 100 million users. Resilient, on the other hand, means that my application needs to be highly available: it must continue to function when an unexpected failure happens.

Now let's see how we can do that on GCP and AWS.

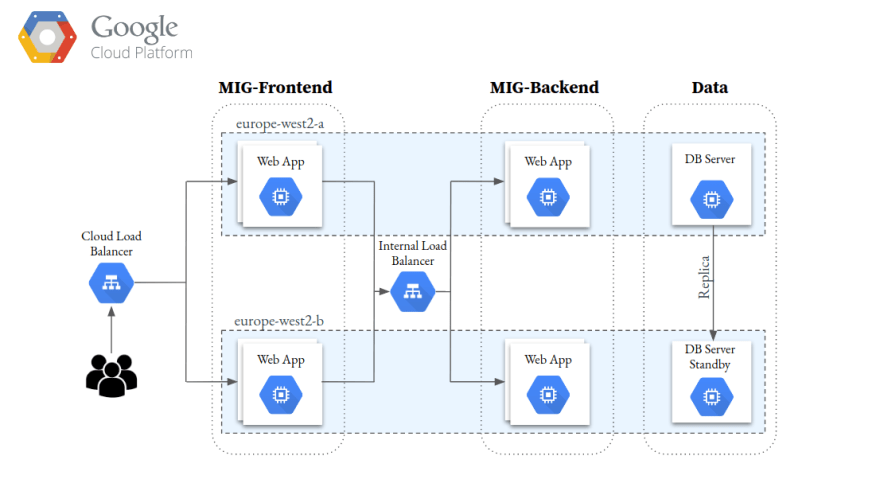

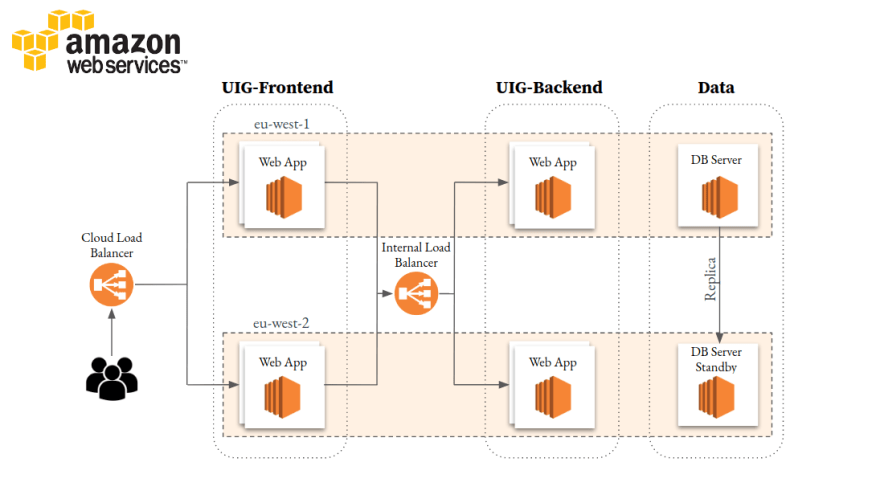

Solution Design

Solution Setup

- Frontend & Backend
  - [GCP] Managed Instance Group
  - [AWS] EC2 Auto Scaling Group
  - Multi-zone deployment
- External IP on Network LB
  - [GCP] Cloud Load Balancing
  - [AWS] Elastic Load Balancing
- PostgreSQL server in the Data layer

As the application grows, the number of VM instances grows as well. Managing them by hand clearly gets harder, though it is still doable at this point.

Now, what if you need to scale to tens of VM instances? Whenever one of them goes down, you have to take action, right?

This is where the Managed Instance Group (MIG) on GCP and its AWS counterpart, the EC2 Auto Scaling group (referred to as "UIG" in this article), come in to handle auto-scaling and auto-healing.

A MIG/UIG is built from a single base instance template (an instance template on GCP, a launch template on AWS). Whenever the service needs to scale out, new instances are created from this template.
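To illustrate the template idea, here is a toy model in which every new instance is stamped out from the same template. The classes, fields, and the `scaleup-web-image` image name are hypothetical and are not the GCP or AWS API; a real group generates unique instance names itself and tracks far more configuration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceTemplate:
    """Hypothetical stand-in for a GCP instance template or an AWS launch template."""
    name_prefix: str
    machine_type: str
    image: str


@dataclass(frozen=True)
class Instance:
    name: str
    machine_type: str
    image: str


class InstanceGroup:
    """Toy group: every instance is an identical copy of the template, apart from its name."""

    def __init__(self, template: InstanceTemplate) -> None:
        self.template = template
        self.instances: list[Instance] = []
        self._next_id = 0  # only ever increases, so names never collide after a scale-in

    def scale_out(self, count: int) -> None:
        for _ in range(count):
            name = f"{self.template.name_prefix}-{self._next_id}"
            self.instances.append(Instance(name, self.template.machine_type, self.template.image))
            self._next_id += 1

    def scale_in(self, count: int) -> None:
        # Remove the newest instances first (a simplification; real groups choose differently).
        del self.instances[max(0, len(self.instances) - count):]


group = InstanceGroup(InstanceTemplate("scaleup-web", "e2-medium", "scaleup-web-image"))
group.scale_out(3)
group.scale_in(1)
group.scale_out(1)
print([instance.name for instance in group.instances])
```

Because all instances come from one template, scaling out never requires configuring a machine by hand; only the name differs between copies.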

In addition, MIG/UIG has three major features:

1. Auto-Scaling

The autoscaler creates and destroys VM instances based on load, using metrics that you define.

For example, you can use network throughput, CPU usage, or memory usage as the scaling metric.
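Both providers let you scale on a target value for a metric (for example, keep average CPU near 60%). The function below is a simplified sketch of that idea, assuming load spreads evenly across instances; it is not either provider's exact algorithm, which also applies smoothing and cool-down or stabilization periods. The function name and parameters are hypothetical.

```python
import math


def recommended_size(current_size: int, utilization_pct: float, target_pct: float,
                     min_size: int, max_size: int) -> int:
    """Simplified target-tracking rule: pick the group size that would bring
    average utilization back to the target, then clamp it to [min_size, max_size]."""
    if current_size < 1 or target_pct <= 0:
        raise ValueError("need at least one instance and a positive target")
    needed = math.ceil(current_size * utilization_pct / target_pct)
    return max(min_size, min(max_size, needed))


# 4 instances running at 90% CPU against a 60% target -> scale out to 6.
print(recommended_size(4, 90, 60, min_size=2, max_size=10))
# 6 instances idling at 20% -> scale in, but never below the minimum of 2.
print(recommended_size(6, 20, 60, min_size=2, max_size=10))
```

The minimum and maximum sizes matter as much as the target: the minimum keeps enough instances for availability, and the maximum caps your bill during a traffic spike.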

2. Auto-Healing

The auto-healer recreates any instance that becomes unhealthy, and you decide what "unhealthy" means with a custom health check.
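Health checks usually tolerate a blip before declaring an instance unhealthy; both GCP health checks and AWS target groups expose an "unhealthy threshold" of consecutive failures. The sketch below models that behavior with a hypothetical `AutoHealer` class and a simulated probe; in practice the probe would be a request to a health endpoint you define.

```python
from __future__ import annotations

from typing import Callable


class AutoHealer:
    """Hypothetical sketch: flag an instance for recreation after
    `unhealthy_threshold` consecutive failed health checks."""

    def __init__(self, probe: Callable[[str], bool], unhealthy_threshold: int = 3) -> None:
        self.probe = probe
        self.unhealthy_threshold = unhealthy_threshold
        self.failures: dict[str, int] = {}

    def check(self, instances: list[str]) -> list[str]:
        """Probe each instance once and return the ones to recreate."""
        to_recreate = []
        for name in instances:
            if self.probe(name):
                self.failures[name] = 0  # any success resets the failure streak
                continue
            self.failures[name] = self.failures.get(name, 0) + 1
            if self.failures[name] >= self.unhealthy_threshold:
                to_recreate.append(name)
                self.failures[name] = 0  # the replacement starts with a clean record
        return to_recreate


# Simulated probe: "web-1" has crashed, "web-0" is fine.
healer = AutoHealer(probe=lambda name: name != "web-1", unhealthy_threshold=3)
for round_number in range(1, 4):
    print(round_number, healer.check(["web-0", "web-1"]))
```

Requiring several consecutive failures trades a slightly slower reaction for not recreating instances that only hiccuped once.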

3. Regional Distribution

You can distribute all of the VM instances across multiple zones which makes your solution more highly available.
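Here is a simplified sketch of even distribution across zones, plus a helper showing how much capacity survives a zone outage. Round-robin placement is an assumption for illustration (the real balancing logic of regional MIGs and Auto Scaling groups is more involved), and both functions are hypothetical; the zone names are London's actual GCP zones.

```python
from __future__ import annotations


def spread(count: int, zones: list[str]) -> dict[str, int]:
    """Place `count` instances round-robin across zones, so zone sizes differ by at most one."""
    placement = {zone: 0 for zone in zones}
    for i in range(count):
        placement[zones[i % len(zones)]] += 1
    return placement


def capacity_after_zone_loss(placement: dict[str, int], failed_zone: str) -> int:
    """Number of instances still serving if one zone goes down entirely."""
    return sum(n for zone, n in placement.items() if zone != failed_zone)


# London zones on GCP; the AWS equivalents are eu-west-2a, eu-west-2b and eu-west-2c.
london = ["europe-west2-a", "europe-west2-b", "europe-west2-c"]
placement = spread(7, london)
print(placement)
print(capacity_after_zone_loss(placement, "europe-west2-a"))
```

With 7 instances over 3 zones, losing the busiest zone still leaves 4 instances serving, which is why capacity planning for multi-zone groups usually assumes one zone can disappear.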

Taken together, these three features solve the issues we had earlier: scalability and high availability. With Auto-Scaling, we can make sure that your application is always scaled to the current load.

With Auto-Healing and Regional Distribution, your application can recreate unhealthy instances and survive a single zone failure.

Now that the MIG/UIG is configured, we need to distribute traffic across all the VM instances in the group. This is where the load balancer comes into play. Once you deploy your instances across multiple zones, the load balancer spreads the traffic across all the VM instances in the group, and clients reach it through a single IP attached to the network load balancer.
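The core idea of one address in front of many instances can be sketched as a round-robin rotation that skips unhealthy backends. The class below is hypothetical; real cloud load balancers offer several balancing policies and run their own health checks, and round-robin is used here only because it is the simplest to show.

```python
from __future__ import annotations

from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical sketch of what sits behind the load balancer's single IP:
    rotate through the backends and skip any that are marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = list(backends)
        self.healthy = set(backends)
        self._ring = cycle(self.backends)

    def mark(self, backend: str, healthy: bool) -> None:
        if healthy:
            self.healthy.add(backend)
        else:
            self.healthy.discard(backend)

    def pick(self) -> str:
        # One full lap around the ring is enough to find a healthy backend if any exists.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["web-a", "web-b", "web-c"])
lb.mark("web-b", healthy=False)  # e.g. its zone just went down
print([lb.pick() for _ in range(4)])
```

Clients never see `web-b` disappear; they keep using the same IP while the balancer routes around the failed instance.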

In the previous architecture, we ran all of the services on the same VM instance. This is hard to scale, and also harmful to service availability.

Imagine that this instance goes down: every service running on it goes down as well (think of the DB, for instance). This is why the architecture evolves: we decouple the application into the typical three-tier web application, consisting of the frontend, the backend, and the data layer.

A MIG/UIG is configured for both the frontend and backend services to provide scalability and high availability. At each layer, we deploy VM instances across multiple zones to cope with a single zone failure.

At the frontend, the Cloud load balancer distributes traffic to the frontend instances. Since the backend service is deployed in a private network, an internal load balancer spreads traffic to it.

In the data layer, PostgreSQL runs on Compute Engine/EC2 in HA (high-availability) mode, which provides master-to-slave (primary-to-replica) replication.
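When the primary fails, the HA setup has to promote one of the replicas. A common rule is to promote the replica that has replayed the most write-ahead log (WAL), since it has lost the least data. The sketch below assumes you have already collected each replica's position, for example from PostgreSQL's `pg_last_wal_replay_lsn()`; the function names and host names are hypothetical, and real HA tooling (Patroni is one example) also has to stop the old primary from accepting writes and repoint clients.

```python
from __future__ import annotations


def parse_lsn(lsn: str) -> int:
    """Turn a PostgreSQL LSN like '0/3000060' (two hex halves) into one comparable integer."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def pick_new_primary(replica_lsns: dict[str, str]) -> str:
    """Choose the replica that has replayed the most WAL, i.e. lost the least data."""
    if not replica_lsns:
        raise ValueError("no replicas available to promote")
    return max(replica_lsns, key=lambda name: parse_lsn(replica_lsns[name]))


# Hypothetical replay positions reported by two replicas after the primary died.
positions = {"pg-replica-b": "0/3000060", "pg-replica-c": "0/30001A8"}
print(pick_new_primary(positions))
```

Converting LSNs to integers matters: compared as plain strings, a position such as "1/0" would wrongly sort before "0/FFFFFFFF".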

Troubleshooting

Monitoring and logging make it much easier to troubleshoot your system when something goes wrong.

"Stackdriver" for GCP and "CloudWatch" for AWS are comprehensive services that help you monitor your system and set up alerting policies for abnormal situations.

In addition, on GCP, Stackdriver Debugger can help you debug a running service without interrupting it; CloudWatch does not offer a direct equivalent.
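Alerting policies generally avoid firing on a single spike. The sketch below shows an "M out of N" rule, similar in spirit to the evaluation periods and datapoints-to-alarm settings of a CloudWatch alarm; the function is hypothetical, and the CPU samples are made up.

```python
from __future__ import annotations


def should_alert(samples: list[float], threshold: float,
                 datapoints_to_alarm: int, evaluation_periods: int) -> bool:
    """Fire when at least `datapoints_to_alarm` of the last `evaluation_periods`
    samples are above `threshold`."""
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    recent = samples[-evaluation_periods:]
    breaching = sum(1 for value in recent if value > threshold)
    return breaching >= datapoints_to_alarm


# Made-up CPU readings (%), one per minute.
single_spike = [40, 42, 97, 41, 39]
sustained_load = [40, 85, 90, 70, 95]
print(should_alert(single_spike, 80, datapoints_to_alarm=3, evaluation_periods=5))
print(should_alert(sustained_load, 80, datapoints_to_alarm=3, evaluation_periods=5))
```

Raising `datapoints_to_alarm` makes the alert quieter but slower to react; lowering it catches problems sooner at the cost of more false alarms.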

So at this stage, we address scalability and resilience through the MIG/UIG and the load balancer. The application is also decoupled into three tiers so that a failure in one service does not interrupt the others.

That's it for today. In the next part, we will look at the limits of this solution, how we can scale up to 100K users, and how we can adjust our architecture to get there.

Thanks for reading this far! Please feel free to comment with your thoughts, reach out to me, or even suggest modifications. I really appreciate it!
