How it all started

Infrastructure as Code is getting more and more attention, and for good reason.

Previously, infrastructure was created and maintained by hand, which works well until...

So when I needed to create a new database cluster, I looked for a setup that would be reproducible and testable before it was deployed on the actual servers.

For stateless things we can use Docker, but that wasn't a great fit for the database servers: we need persistent storage, and scaling and/or changes were not expected that often.

I went for a golden oldie: Ansible

While I had worked with it before, that was mainly putting some roles together, running the script, and validating manually that it worked.

This time around I didn't want to 'test' the provisioning manually, as the setup was a bit larger and the overhead (commit, deploy) was bigger.

So I thought there should be a smarter and better way.

Start of the journey

As I am mainly a dev engineer, I didn't have thorough expertise in Ansible (I still don't), so Google it was to get the journey started. Looking through the results, I mostly saw tests for Ansible roles, or ways to test the playbook on the target systems, but I wanted to test the playbook before deployment, during the build and/or locally.

It turned out that it was not that hard to create, but getting all the information was, because of the combination of Ansible and Vagrant and probably my lack of knowledge on those topics 😃

The project setup

First off, what are we actually going to provision?

We will be provisioning a three-server cluster of the RethinkDB database.

You probably haven't heard of it before, and you can forget about it after reading this.

There will be some commands specific to RethinkDB, but most of this post is about the testing setup and the ways you should test.

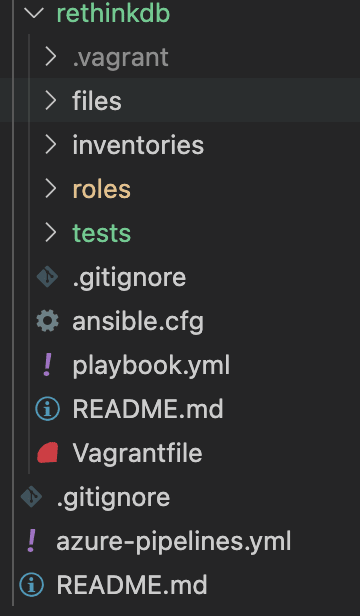

Project layout

A pretty normal layout for Ansible projects.

The part we will focus on is the test folder.

Test

There are a few levels of testing that can be done for the Ansible playbook.

You can have unit tests for Ansible roles, which validate that the roles 'work'.

And you can have tests for the playbook.

In my case the roles I created were specific to my situation, so I didn't want to create unit tests, as that would have been a bit overkill.

Instead I focussed on a few other things:

The role itself should validate if the work it did was actually executed and working as intended.

Making sure roles are doing what they should

So for instance the install-rethinkdb role should validate that it did install the package provided.

To do so, we get the version that is installed by executing the rethinkdb -v command and save the output. In another step we validate that the output is what we expect.

This way we verify that the installation of the (new) version succeeded, as that version is the one actually being used.

    - name: Install rethinkdb
      yum:
        name: "/tmp/rethinkdb-{{ rethinkdb_version }}.x86_64.rpm"
        state: present
        allow_downgrade: yes
        disable_gpg_check: yes

    - name: Get installed rethinkdb version
      command: rethinkdb -v
      register: rethinkdb_version_installed

    - name: Validate rethinkdb version used is the same as wanted version
      assert:
        that:
          - "'{{ rethinkdb_version }}' in rethinkdb_version_installed.stdout"
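A possible variation on the version check, as a sketch rather than what the role actually contains: marking the read-only command with `changed_when: false` keeps it out of the "changed" count, and `fail_msg` on the assertion prints what was actually found when the check fails. The `| string` filter is an assumption on my side, added in case `rethinkdb_version` is defined as a number.

```yaml
- name: Get installed rethinkdb version
  command: rethinkdb -v
  register: rethinkdb_version_installed
  # Read-only command, so it should never be reported as a change
  changed_when: false

- name: Validate rethinkdb version used is the same as wanted version
  assert:
    that:
      # Bare expression, no Jinja delimiters needed inside "that"
      - (rethinkdb_version | string) in rethinkdb_version_installed.stdout
    fail_msg: "Expected {{ rethinkdb_version }}, but got: {{ rethinkdb_version_installed.stdout }}"
```

With a message like this, a failed run tells you right away which version ended up on the server.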

In another role I updated the configuration and needed to restart the database in order to get the configuration working.

For this we restart the service via systemd and wait for a few seconds.

We then get the service_facts and validate that the service is in a running state.

    - name: Make sure rethinkdb service is restarted
      systemd:
        state: restarted
        name: rethinkdb.service
        enabled: yes
        daemon_reload: yes

    - name: Pause for 10 seconds to start the service
      pause:
        seconds: 10

    - name: Get service statuses
      service_facts:
      register: service_state_rethinkdb

    - name: Validate status of rethinkdb service
      assert:
        that:
          - "'running' in service_state_rethinkdb.ansible_facts.services['rethinkdb.service'].state"

The takeaway here is that you should look at your roles as parts that have a single purpose, and that each role should also validate that it does what you expect. Role tests and such are great, but verifying during runtime is a must, as that is what matters in the end.
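The fixed 10-second pause in the restart example could also be replaced by polling, so the role continues as soon as the service is up and only fails after a timeout. This is a minimal sketch, not what my role does: it assumes `systemctl is-active` prints `active` for a running unit, and the retry count and delay are arbitrary values.

```yaml
- name: Wait until rethinkdb service reports active
  command: systemctl is-active rethinkdb.service
  register: rethinkdb_is_active
  # Retry until the unit is active: at most 10 attempts, 2 seconds apart
  until: rethinkdb_is_active.stdout == "active"
  retries: 10
  delay: 2
  # Read-only check, so never report it as a change
  changed_when: false
```

If the service never becomes active, the task fails after the last retry, which gives the same safety net as the assertion but without always waiting the full 10 seconds.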

Testing the playbook in a controlled (local/build) environment

For testing the playbook we need servers to execute the playbook on.

In comes Vagrant, as you could have guessed if you looked at the layout.

Here is the Vagrantfile that I used. I added some comments to help you understand what is going on.

In short, it will provision 3 servers in their own private network and execute the (test) playbook on those servers, effectively creating a local database cluster.

    # -*- mode: ruby -*-
    # vi: set ft=ruby :

    require 'fileutils'

    # Copy certificate files to the destinations where they are expected.
    FileUtils.cp %w(tests/files/ca.pem tests/files/cert.pem tests/files/key.pem), 'roles/ansible-role-rethinkdb-configure/files/'
    FileUtils.cp %w(tests/files/vagrant1.local.net.crt tests/files/vagrant1.local.net.key), 'files/'
    FileUtils.cp %w(tests/files/vagrant2.local.net.crt tests/files/vagrant2.local.net.key), 'files/'
    FileUtils.cp %w(tests/files/vagrant3.local.net.crt tests/files/vagrant3.local.net.key), 'files/'

    Vagrant.configure("2") do |config|
      # If you have issues with SSL certificates add this
      config.vm.box_download_insecure = true

      # We want to use Red Hat 7, as the target servers are also Red Hat 7
      config.vm.box = "generic/rhel7"

      # To save overhead and time we use linked clones (https://www.vagrantup.com/docs/providers/virtualbox/configuration#linked-clones)
      config.vm.provider "virtualbox" do |v|
        v.linked_clone = true
      end

      # We want to provision 3 servers for our cluster
      N = 3
      (1..N).each do |machine_id|
        # Give the server a unique name
        config.vm.define "vagrant#{machine_id}" do |machine|
          # Same for the hostname of the VM
          machine.vm.hostname = "vagrant#{machine_id}"

          # Here we set up the private network for the cluster.
          # In this network the cluster members can communicate with each other.
          # We also want to expose (forward) some ports that are used inside the VM to the host (so that we can connect to the dashboard).
          # In this example I only added it for server 1.
          if machine_id == 1
            # Private network instruction for Vagrant
            machine.vm.network "private_network", ip: "192.168.77.#{20+machine_id}", virtualbox__intnet: "network", name: "network"

            # Forward all the ports below to the host. auto_correct is set to true to choose an available port if the one specified is taken
            machine.vm.network "forwarded_port", guest: 10082, host: 10082, auto_correct: true
            machine.vm.network "forwarded_port", guest: 10081, host: 10081, auto_correct: true
            machine.vm.network "forwarded_port", guest: 8443, host: 8443, auto_correct: true
            machine.vm.network "forwarded_port", guest: 9088, host: 9088, auto_correct: true
            machine.vm.network "forwarded_port", guest: 9089, host: 9089, auto_correct: true
          else
            # The other servers (2, 3) should be in the private network
            machine.vm.network "private_network", ip: "192.168.77.#{20+machine_id}", virtualbox__intnet: "network"
          end

          # Only execute when all the machines are up and ready.
          if machine_id == N
            machine.vm.provision :ansible do |ansible|
              # Disable default limit to connect to all the machines
              ansible.limit = "all"

              # Playbook that should be executed, in this case the test playbook
              ansible.playbook = "tests/test.yml"

              # Please log it all
              ansible.verbose = true

              # We need to provide a few extra vars to the playbook
              ansible.extra_vars = {
                RETHINKDB_DATABASE_ADMIN_PASSWORD: '',
                NGINX_DASHBOARD_PASSWORD: 'pasword'
              }

              # These groups are the same as you normally specify in the inventory file
              ansible.groups = {
                leader: ["vagrant1"],
                followers: ["vagrant2", "vagrant3"],
              }
            end
          end
        end
      end
    end

The 'test' playbook looks like this:

I am using a specific playbook for tests as I needed to add a few things that are normally there if you get a server from your provider. At least that was the case in my project.

    ---
    - name: Install rethinkdb
      hosts: all # Execute this on all 3 servers
      become: yes # become root

      # pre_tasks are there to create the rethinkdb user.
      # These will be executed before the rest.
      pre_tasks:
        - name: Add group "rethinkdb01"
          group:
            name: rethinkdb01
            state: present

        - name: Add user "ansi_rethinkdb01"
          user:
            name: ansi_rethinkdb01
            groups: rethinkdb01
            shell: /sbin/nologin
            append: yes
            comment: "vagrant nologin User"
            state: present

        # The task name says "rethinkdb01", so create that user here
        # (the name was ansi_rethinkdb01, duplicating the task above).
        - name: Add user "rethinkdb01"
          user:
            name: rethinkdb01
            groups: rethinkdb01
            shell: /sbin/nologin
            append: yes
            comment: "vagrant nologin User"
            state: present

        - name: Allow 'rethinkdb01' group to have passwordless sudo
          lineinfile:
            dest: /etc/sudoers
            state: present
            regexp: '^%rethinkdb01'
            line: '%rethinkdb01 ALL=(ALL) NOPASSWD: ALL'
            validate: 'visudo -cf %s'

        - name: Add sudoers users to rethinkdb01 group
          user: name=ansi_rethinkdb01 groups=rethinkdb01 append=yes state=present createhome=yes

        - name: Add sudoers users to rethinkdb01 group
          user: name=rethinkdb01 groups=rethinkdb01 append=yes state=present createhome=yes

        # As the firewall was interfering with the private network I disabled it
        - name: Stop and disable firewalld.
          service:
            name: firewalld
            state: stopped
            enabled: False

        # RethinkDB can be memory hungry, so we needed to increase the virtual memory on the real servers. It is here to test that it works.
        - name: Increase virtual memory
          shell: echo "vm.max_map_count=262144" >> /etc/sysctl.d/rethinkdb.conf && sudo sysctl --system

        # We need to have machine certificates for cluster security.
        - name: Copy certificate files
          copy:
            src: "{{ item.src }}"
            dest: "{{ item.dest }}"
            group: "{{ target_group }}"
            owner: "{{ target_user }}"
            force: yes
          loop:
            - {src: '{{ ansible_hostname }}.local.net.crt', dest: '/etc/ssl/certs/{{ ansible_hostname }}.local.net.crt'}
            - {src: '{{ ansible_hostname }}.local.net.key', dest: '/etc/ssl/certs/{{ ansible_hostname }}.local.net.key'}

      # The roles that will be executed.
      roles:
        - name: ../roles/ansible-role-install-rethinkdb-offline
        - name: ../roles/ansible-role-rethinkdb-configure
        - name: ../roles/ansible-role-install-node-exporter-offline

      # This is the real 'testing' part of the playbook.
      # As described before, each role should make sure it does what it should do.
      # But in order to make sure the provisioning works as a whole, we test some things here again.
      post_tasks:
        - name: Get virtual memory config
          shell: sysctl vm.max_map_count
          register: rethinkdb_virtual_memory

        - name: Validate virtual memory config is correct
          assert:
            that:
              - "'vm.max_map_count = 262144' in rethinkdb_virtual_memory.stdout"

        - name: Get service status
          service_facts:
          register: service_state_rethinkdb

        - name: Validate status of rethinkdb service
          assert:
            that:
              - "'running' in service_state_rethinkdb.ansible_facts.services['rethinkdb.service'].state"

        # Call the node_exporter locally and save the output
        - name: Check if node exporter is working
          uri:
            url: https://localhost:10081/metrics
            return_content: yes
            validate_certs: no
          register: node_exporter_response

        # Validate that there is some output.
        - name: Validate node exporter running
          assert:
            that:
              - "'go_goroutines' in node_exporter_response.content"

    - name: install horizon and proxy
      hosts: leader # Only execute this on the servers in the `leader` group
      become: yes

      roles:
        - name: ../roles/ansible-role-rethinkdb-horizon
        - name: ../roles/ansible-role-rethinkdb-exporter
        - name: ../roles/ansible-role-rethinkdb-nginx

      post_tasks:
        - name: Get service status
          service_facts:
          register: service_state_rethinkdb

        - name: Validate status of rethinkdb service
          assert:
            that:
              - "'running' in service_state_rethinkdb.ansible_facts.services['rethinkdb.service'].state"

        - name: Validate status of rethinkdb-exporter service
          assert:
            that:
              - "'running' in service_state_rethinkdb.ansible_facts.services['rethinkdb-exporter.service'].state"
          run_once: true

        # The rethinkdb_exporter should provide metrics, and we want to check the results, so save them to a variable.
        - name: Check if all nodes are connected
          uri:
            url: https://localhost:10082/metrics
            return_content: yes
            validate_certs: no
          register: exporter_response
          run_once: true

        # Validate that there are three servers connected to the cluster according to the rethinkdb_exporter
        - name: Validate number of nodes running
          assert:
            that:
              - "'rethinkdb_cluster_servers_total {{ rethinkdb_number_of_nodes }}' in exporter_response.content"
          run_once: true
    ...

In the post_tasks you can add as many assertions as you want or need. Make sure that you at least test the basics, so that you know the thing you installed is up and working.
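As an illustration of one more basic check, here is a hypothetical extra post_task for the `leader` play. It is a sketch under assumptions, not part of my playbook: it assumes the nginx proxy for the dashboard listens on port 8443 (one of the ports forwarded in the Vagrantfile) over HTTPS, and that it answers with either 200 or, because of the dashboard password, 401.

```yaml
post_tasks:
  # Hypothetical check: port 8443 and the accepted status codes are assumptions
  - name: Check that the dashboard proxy answers
    uri:
      url: https://localhost:8443/
      # The test certificates are self-signed, so skip validation
      validate_certs: no
      # The task fails on its own if the status is not in this list
      status_code: [200, 401]
    register: dashboard_response
```

Because the `uri` module fails the task when the status code is not in the list, no separate assert is needed for this one.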

Execute it

Well we only need to run one command to test the whole thing:

vagrant up

Or if you want to execute it on Azure DevOps:

    stages:
      - stage: Verifying
        pool:
          vmImage: 'macOS-latest'
        jobs:
          - job: Test_the_setup
            condition: or(eq('${{parameters.testRethinkDBSetup}}', true), eq(variables.isMain, false))
            steps:
              - script: brew install ansible
                displayName: 'Install ansible'
              - script: |
                  cd rethinkdb
                  vagrant up
                displayName: 'Run rethinkdb ansible scripts'

This will run the Vagrantfile and, in effect, create the VMs and start the playbook execution.

        vagrant1: Calculating and comparing box checksum...
    ==> vagrant1: Successfully added box 'generic/rhel7' (v3.2.10) for 'virtualbox'!
    ==> vagrant1: Preparing master VM for linked clones...
        vagrant1: This is a one time operation. Once the master VM is prepared,
        vagrant1: it will be used as a base for linked clones, making the creation
        vagrant1: of new VMs take milliseconds on a modern system.
    ==> vagrant1: Importing base box 'generic/rhel7'...
    ---
    PLAY [Install rethinkdb] *******************************************************

    TASK [Gathering Facts] *********************************************************
    ok: [vagrant2]
    ok: [vagrant3]
    ok: [vagrant1]

    TASK [Add group "rethinkdb01"] *************************************************
    changed: [vagrant2] => {"changed": true, "gid": 1001, "name": "rethinkdb01", "state": "present", "system": false}
    changed: [vagrant1] => {"changed": true, "gid": 1001, "name": "rethinkdb01", "state": "present", "system": false}
    changed: [vagrant3] => {"changed": true, "gid": 1001, "name": "rethinkdb01", "state": "present", "system": false}
    --------
    PLAY RECAP *********************************************************************
    vagrant1 : ok=79 changed=48 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    vagrant2 : ok=44 changed=27 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    vagrant3 : ok=44 changed=27 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

With this setup you are sure that the provisioned servers are working as intended and that if you make a change it is validated. Even after the creator left 😄

We now have a local running database cluster that is tested and, as a bonus, you can use it for development.

If you have any questions about testing Ansible playbooks and/or setting up a local Vagrant (cluster) environment, please let me know! (Not sure if I can answer them, but I will do my best 😰)

Also any other remarks/improvements are always welcome.
