Monorepos—single repositories that store code and assets for multiple software projects—are trendy in the frontend engineering scene. We at Hotjar are splitting our main app (Insights Webapp) from one huge monolith into small projects within a monorepo. One of the reasons we’ve done this is to make project development more scalable, regardless of the number of areas involved and the engineers working in them.

We mainly instrument our monorepo with pnpm for the dependencies and Nx for the tooling and running tasks. However, orchestrating a monorepo is challenging, and scaling it correctly is more complicated than you’d think.

Running our tests in CI within a monorepo in the optimal amount of time was a complex process that took many iterations to refine.

The old approach

We run the tests only for monorepo workspaces affected by the changes in a branch, so we don’t waste time running tests for code that is not affected at all. Nx is great at this—it identifies the different workspaces of the monorepo affected by the files changed in our PRs.

However, as mentioned earlier, we are in the process of breaking down the monolith into small pieces. We have many small, modern monorepo workspaces (let’s call them modernA, modernB, etc.), and two massive legacy workspaces (we’ll refer to them as legacyX and legacyY).

The runtime difference between modern and legacy tests is massive: the former have only a few tests that are specific to their scope, while the latter have hundreds of tests still covering many areas owned by various squads.

So, this was our approach: we parallelized our test runs in six jobs within GitLab CI, distributing the tests by workspaces. Since legacyX and legacyY are the slowest test runs—and almost always affected by Nx (because they still contain the core and shell of the webapp)—we isolate them in their own jobs. Then, we have four jobs remaining for the rest of the modern workspaces affected by the PR changes.

With this in mind, let’s assume a PR where our changes affected legacyX, legacyY, modernA, modernB, modernC, modernD, modernE, modernF, modernG, and modernH. The tests would be grouped like this in CI jobs:

legacyXlegacyY-

modernA,modernB -

modernC,modernD -

modernE,modernF -

modernG,modernH

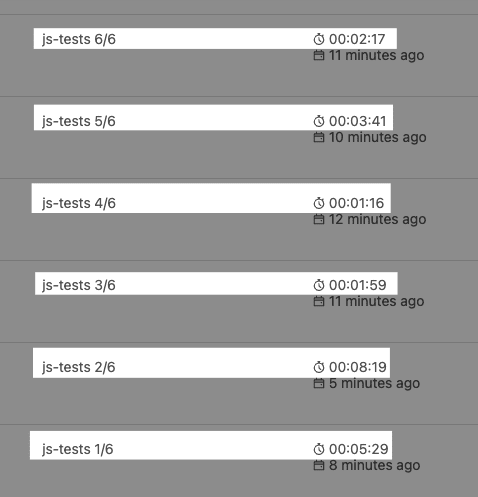

So far, so good. The problem comes when we take a look at the runtimes in CI:

We can see that jobs 3-6 are reasonably fast, but 1 and 2 became a bottleneck! We can’t break them down any further (to do that, we need to keep extracting functionality into its own workspace). Our CI won’t be completed until the slowest test job is finished. So, in this case, our CI will take eight minutes, no matter how fast the remaining workspace tests are.

This is because all our monorepo workspaces contain different numbers of tests–jobs 1 and 2 are running many, many tests for the legacy workspaces, while the others are running relatively small modern workspaces with fewer tests.

The new approach

Our test framework and runner for JS/TS is Jest. In v28, they introduced a new feature for sharding test runs: Jest now includes a new --shard CLI option that allows you to run parts of your test across different machines—it’s one of Jest’s oldest feature requests.

This feature is handy for distributing our test suites in homogeneous chunks across our parallel jobs. We were already running tests split by workspace through Nx, but this didn’t give us granular control over how many tests we could run per job. Some ran hundreds of tests (e.g., the legacy workspaces), taking eight minutes to complete; others executed fewer than 20 tests (e.g., recently-created modern workspaces), taking only a minute to complete.

Although the tests are no longer grouped by monorepo workspaces on each job, we still keep the ability to run the tests only for affected workspaces. Thanks to the Nx print-affected command, we can use the list of projects affected to manually filter workspaces that need to be checked.

With this new approach in mind, and the same PR used as an example above, the tests would be distributed in CI jobs like this:

-

legacyX 1/6,legacyY 1/6,modernA 1/6,modernB 1/6,modernC 1/6,modernD 1/6,modernE 1/6,modernF 1/6,modernG 1/6,modernH 1/6 -

legacyX 2/6,legacyY 2/6,modernA 2/6,modernB 2/6,modernC 2/6,modernD 2/6,modernE 2/6,modernF 2/6,modernG 2/6,modernH 2/6 - ...

- ...

- ...

-

legacyX 6/6,legacyY 6/6,modernA 6/6,modernB 6/6,modernC 6/6,modernD 6/6,modernE 6/6,modernF 6/6,modernG 6/6,modernH 6/6

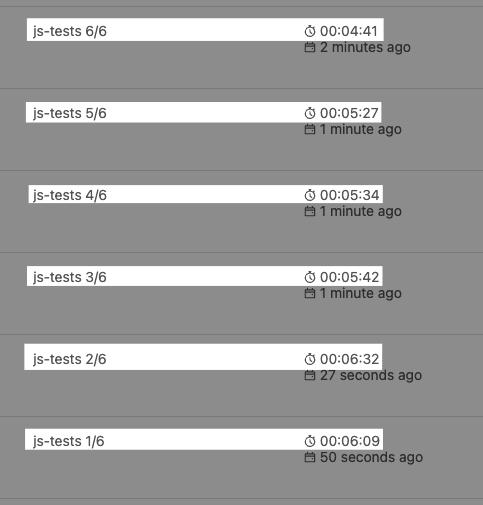

This leads to a pipeline result like the one below:

The runtimes are more consistent simply because we mixed tests from all the monorepo workspaces together! Now, the slowest test job takes just six minutes, saving us a solid two minutes from the CI runtime.

These numbers are not just examples—they’re real runtimes from our CI. This, in particular, was our worst-case scenario: running the tests for all the workspaces in our monorepo. For the average scenario (seven to eight workspaces affected, including the two legacy ones), we can save almost four minutes per pipeline—a potential save of 50%!

The Jest shard feature didn’t just make our tests’ runtime more consistent in CI; it also made it more scalable. Now, it doesn’t matter if our tests grow by the number of tests per workspace, or by new workspaces introduced to the monorepo: they’ll be distributed consistently between available parallel jobs.

This is a huge win, especially compared to the previous approach. In the past, we would always have a job taking eight minutes to complete, and the remaining jobs taking different, haphazard completion times on each PR, according to the number of tests assigned.

This new approach was also surprisingly easy to set up in GitLab CI with a custom script, which looks like this:

// calculate-affected-projects.js (snippet simplified for convenience)

const exec = require('node:child_process').exec;

exec('nx print-affected --target=test', (err, stdout) => {

// do something if `err`

const affectedProjects = JSON.parse(stdout.toString().trim()).projects;

const filterAffectedProjects = affectedProjects.map((projectName) => `--filter ${projectName}`).join(' ');

console.log(filterAffectedProjects);

});

# .gitlab-cy.yml (snippet simplified for convenience)

js-tests:

parallel: 6

script:

- export FILTER_AFFECTED_PROJECTS=$(node calculate-affected-projects.js)

- pnpm --no-bail --if-present $FILTER_AFFECTED_PROJECTS test --ci --shard=$CI_NODE_INDEX/$CI_NODE_TOTAL

Trade-offs

Everything that glitters isn’t gold, though! This new approach with Jest shard has some implications worth mentioning:

Challenging upgrade to Jest v28

This is dependent on your codebase and your current version of Jest. At the time, we were still on Jest v26. Upgrading two majors was hard, not only because of the breaking changes introduced in v27 and v28, but also due to Jest and js-dom working more closely to a real browser on every upgrade. This led to flaky tests, side effects, and unexpected behaviors during the upgrade that were complicated to debug and fix. The result was worth it, but if you’re a few majors behind Jest v28, remember: you may need to put in more effort than you think.

Inability to take advantage of Nx computation cache

Nx uses a computation cache internally, so it never rebuilds or re-runs the same code twice. This can save a lot of time if the affected monorepo workspaces didn’t change, so Nx can directly output the previous test results.

But with the new Jest shard approach, we can’t take advantage of it. This isn’t a huge deal for us because we weren’t using this functionality yet. Even if we did, the two legacies’ monorepo workspaces are constantly changing, invalidating the computation cache. It was easy for us to ditch the feature, but perhaps you rely on it heavily. If so, we suggest measuring the impact of using Nx computation cache vs. Jest shard for running your tests before changing the way you run them on CI.

Lack of coverage threshold

For us, this has been the biggest downside: the Jest shard option is not compatible with the global coverage threshold. Surprisingly, this is not mentioned anywhere in the docs, but there is this issue open in their repo. Hopefully, this feature will become compatible with the shard flag soon. In the meantime, we’ve had to disable the global coverage threshold while we explore other ways to set it.

Longer total computed runtime on CI

If you compare the total time spent by the six jobs in the old approach (~23 minutes) and the new approach (~30 minutes), the former took less computation time from our CI. This is not causing a significant impact for us, but it could in other cases, especially if you have a tightly restricted minutes-per-month plan to run your CI.

That’s it! We hope you found this post interesting—not just because of the new Jest shard feature, but also the learnings around our take on monorepos and the trade-offs that come with different approaches.

Cover photo by Marcin Jozwiak on Unsplash

Oldest comments (0)