If you’re an enterprise architect, you’ve probably heard of and worked with a microservices architecture. And while you might have used REST as your service communications layer in the past, more and more projects are moving to an event-driven architecture. Let’s dive into the pros and cons of this popular architecture, some of the key design choices it entails, and common anti-patterns.

What is Event-Driven Microservice Architecture?

In event-driven architecture, when a service performs some piece of work that other services might be interested in, that service produces an event, which is a record of the performed action. Other services consume those events so that they can perform any of their own tasks needed as a result of the event. Unlike with REST, services that produce events do not need to know the details of the services consuming them.

Here’s a simple example: When an order is placed on an ecommerce site, a single “order placed” event is produced and then consumed by several microservices:

1) the order service which could write an order record to the database

2) the customer service which could create the customer record, and

3) the payment service which could process the payment.

Events can be published in a variety of ways. For example, they can be published to a queue that guarantees delivery of the event to the appropriate consumers, or they can be published to a “pub/sub” model stream that publishes the event and allows access to all interested parties. In either case, the producer publishes the event, and the consumer receives that event, reacting accordingly. Note that in some cases, these two actors can also be called the publisher (the producer) and the subscriber (the consumer).
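To make the producer/consumer relationship concrete, here is a minimal in-memory sketch of the "order placed" example. The `EventBus` class, the event name, and the payload fields are all hypothetical stand-ins for a real broker and schema; the point is only that the producer publishes once and never references the consumers.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Toy in-memory stand-in for a broker (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        # The producer does not know who (if anyone) is listening.
        for handler in self._subscribers[event_type]:
            handler(event)


handled: List[str] = []  # records what each hypothetical service did

bus = EventBus()
bus.subscribe("order_placed", lambda e: handled.append(f"order service: stored order {e['order_id']}"))
bus.subscribe("order_placed", lambda e: handled.append(f"customer service: saved customer {e['customer']}"))
bus.subscribe("order_placed", lambda e: handled.append(f"payment service: charged {e['amount']}"))

# One event is produced; three independent consumers react to it.
bus.publish("order_placed", {"order_id": 42, "customer": "ada", "amount": 19.99})
```

Adding a fourth consumer here would mean adding another `subscribe` call, with no change to the code that publishes the event.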

Why Use Event-Driven Architecture

An event-driven architecture offers several advantages over REST, which include:

Asynchronous – Event-based architectures are asynchronous and non-blocking. This allows resources to move on to the next task once their unit of work is complete, without waiting on what happened before or what will happen next. Events can also be queued or buffered, which prevents slow consumers from blocking producers or putting back pressure on them.

Loose Coupling – services don’t need (and shouldn’t have) knowledge of, or dependencies on other services. When using events, services operate independently, without knowledge of other services, including their implementation details and transport protocol. Services under an event model can be updated, tested, and deployed independently and more easily.

Easy Scaling – Because services are decoupled under an event-driven architecture and typically perform only one task, it becomes easy to trace a bottleneck to a specific service and scale that service (and only that service).

Recovery support – An event-driven architecture with a queue can recover lost work by “replaying” events from the past. This can be valuable to prevent data loss when a consumer needs to recover.
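As a rough illustration of the asynchronous, buffered behavior described in this list, the sketch below uses Python's standard `asyncio.Queue` as a stand-in for a broker's buffer. The function names and the `None` sentinel are assumptions made for the example; a real broker would handle delivery and shutdown differently.

```python
import asyncio
from typing import List


async def producer(buffer: asyncio.Queue, count: int) -> None:
    for event_id in range(count):
        # With an unbounded queue, put() does not wait for the consumer.
        await buffer.put({"event_id": event_id})
    await buffer.put(None)  # hypothetical sentinel meaning "no more events"


async def consumer(buffer: asyncio.Queue, processed: List[int]) -> None:
    while True:
        event = await buffer.get()
        if event is None:
            break
        await asyncio.sleep(0)  # placeholder for real, slower work
        processed.append(event["event_id"])


async def main() -> List[int]:
    buffer: asyncio.Queue = asyncio.Queue()
    processed: List[int] = []
    # Producer and consumer run concurrently and only share the buffer.
    await asyncio.gather(producer(buffer, 5), consumer(buffer, processed))
    return processed


result = asyncio.run(main())
```

The producer finishes its work without ever calling the consumer directly; the buffer absorbs the difference in pace between the two.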

Of course, event-driven architectures have drawbacks as well. They are easy to over-engineer by separating concerns that might be simpler when closely coupled; can require a significant upfront investment; and often result in additional complexity in infrastructure, service contracts or schemas, polyglot build systems, and dependency graphs.

Perhaps the most significant drawback and challenge is data and transaction management. Because of their asynchronous nature, event-driven models must carefully handle inconsistent data between services, incompatible versions, and duplicate events. They also typically do not support ACID transactions, relying instead on eventual consistency, which can be more difficult to track or debug.

Even with these drawbacks, an event-driven architecture is usually the better choice for enterprise-level microservice systems. The pros—scalable, loosely coupled, dev-ops friendly design—outweigh the cons.

When to Use REST

There are, however, times when a REST/web interface may still be preferable:

- You need a synchronous request/reply interface

- You need convenient support for strong transactions

- Your API is available to the public

- Your project is small (REST is much simpler to set up and deploy)

Your Most Important Design Choice – Messaging Framework

Once you’ve decided on an event-driven architecture, it is time to choose your event framework. The way your events are produced and consumed is a key factor in your system. Dozens of proven frameworks and choices exist and choosing the right one takes time and research.

Your basic choice comes down to message processing or stream processing.

Message Processing

In traditional message processing, a component creates a message then sends it to a specific (and typically single) destination. The receiving component, which has been sitting idle and waiting, receives the message and acts accordingly. Typically, when the message arrives, the receiving component performs a single process. Then, the message is deleted.

A typical example of a message processing architecture is a Message Queue. Though most newer projects use stream processing (as described below), architectures using message (or event) queues are still popular. Message queues typically use a “store and forward” system of brokers where events travel from broker to broker until they reach the appropriate consumer. ActiveMQ and RabbitMQ are two popular examples of message queue frameworks. Both of these projects have years of proven use and established communities.
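The message-processing model can be sketched with Python's standard `queue.Queue` playing the role of the broker. This is only an in-process approximation under that assumption (a real deployment would use something like RabbitMQ or ActiveMQ), and the `send` and `receive` helpers are hypothetical names. It shows the two defining traits: each message goes to a single receiver, and it is gone once consumed.

```python
import queue

# Stand-in for a broker-managed work queue.
work_queue = queue.Queue()


def send(order_id: int) -> None:
    work_queue.put({"type": "order_placed", "order_id": order_id})


def receive(worker_name: str) -> str:
    # get_nowait() removes the message; no other worker will see it.
    # It raises queue.Empty if nothing is waiting.
    message = work_queue.get_nowait()
    result = f"{worker_name} handled order {message['order_id']}"
    work_queue.task_done()
    return result


send(1)
send(2)
results = [receive("worker-a"), receive("worker-b")]
```

After both calls the queue is empty, so a worker that starts later has nothing to read. That is the main contrast with the persistent streams described next.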

Stream Processing

On the other hand, in stream processing, components emit events when they reach a certain state. Other interested components listen for these events on the event stream and react accordingly. Events are not targeted to a certain recipient, but rather are available to all interested components.

In stream processing, components can react to multiple events at the same time, and apply complex operations on multiple streams and events. Some streams include persistence where events stay on the stream for as long as necessary.

With stream processing, a system can reproduce a history of events, come online after the event occurred and still react to it, and even perform sliding window computations. For example, it could calculate the average CPU usage per minute from a stream of per-second events.
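The CPU example can be sketched as a sliding-window average over a stream of `(timestamp_seconds, cpu_percent)` events. The function name, the tuple layout, and the assumption that events arrive in timestamp order are all choices made for this illustration; a stream processing framework would provide its own windowing operators.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

Reading = Tuple[int, float]  # (timestamp in seconds, CPU usage in percent)


def sliding_average(events: Iterable[Reading], window_seconds: int = 60) -> Iterator[Reading]:
    """Yield (timestamp, average over the last `window_seconds`) per event.

    Assumes events arrive in non-decreasing timestamp order.
    """
    window: Deque[Reading] = deque()
    total = 0.0
    for ts, cpu in events:
        window.append((ts, cpu))
        total += cpu
        # Evict readings that fell out of the window ending at `ts`.
        while window[0][0] <= ts - window_seconds:
            _, old_cpu = window.popleft()
            total -= old_cpu
        yield ts, total / len(window)


readings = [(0, 10.0), (30, 20.0), (60, 30.0)]
averages = list(sliding_average(readings))
# averages == [(0, 10.0), (30, 15.0), (60, 25.0)]
```

By the time the reading at second 60 arrives, the reading from second 0 has left the 60-second window, so only the last two values are averaged.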

One of the most popular stream processing frameworks is Apache Kafka. Kafka is a mature and stable solution used by many projects. It can be considered a go-to, industrial-strength stream processing solution. Kafka has a large userbase, a helpful community, and an evolved toolset.

Other Choices

There are other frameworks that offer either a combination of stream and message processing or their own unique solution. For example, Pulsar, a newer offering from Apache, is an open-source pub/sub messaging system that supports both streams and event queues, all with extremely high performance. Pulsar is feature-rich—it offers multi-tenancy and geo-replication—and accordingly complex. It’s been said that Kafka aims for high throughput, while Pulsar aims for low latency.

NATS is an alternative pub/sub messaging system with “synthetic” queueing. NATS is designed for sending small, frequent messages. It offers both high performance and low latency. However, NATS considers some level of data loss to be acceptable, prioritizing performance over delivery guarantees.

Other Design Considerations

Once you’ve selected your event framework, here are several other challenges to consider:

Event Sourcing

It is difficult to implement a combination of loosely-coupled services, distinct data stores, and atomic transactions. One pattern that may help is Event Sourcing. In Event Sourcing, updates and deletes are never performed directly on the data; rather, state changes of an entity are saved as a series of events.
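As a minimal sketch of the idea, consider a bank account whose balance is never stored or updated directly. The event classes and function names below are hypothetical; the point is that current state is derived by folding over an append-only list of events.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class Deposited:
    amount: int


@dataclass(frozen=True)
class Withdrawn:
    amount: int


AccountEvent = Union[Deposited, Withdrawn]


def apply_event(balance: int, event: AccountEvent) -> int:
    """Return the new balance after one event; existing events are never mutated."""
    if isinstance(event, Deposited):
        return balance + event.amount
    if isinstance(event, Withdrawn):
        return balance - event.amount
    raise ValueError(f"unknown event: {event!r}")


def current_balance(events: List[AccountEvent]) -> int:
    balance = 0
    for event in events:
        balance = apply_event(balance, event)
    return balance


# The log is append-only: a correction is a new event, not an update.
log: List[AccountEvent] = [Deposited(100), Withdrawn(30), Deposited(5)]
balance = current_balance(log)  # 75
```

Because the full history is kept, the balance at any earlier point can be recomputed by replaying a prefix of the log.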

CQRS

Event sourcing introduces another issue: since state must be rebuilt from a series of events, queries can be slow and complex. Command Query Responsibility Segregation (CQRS) is a design pattern that calls for separate models for write operations (commands) and read operations (queries).
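A small sketch of the separation, with hypothetical names throughout: the command handler validates input and appends to an event log, while queries read from a separate, precomputed view. For brevity the view is updated synchronously here; in a real system the projection would usually run asynchronously as a consumer of the event stream.

```python
from typing import Dict, List, Tuple

AccountEvent = Tuple[str, str, int]  # (event_type, account_id, amount)

event_log: List[AccountEvent] = []   # write model: append-only events
balances: Dict[str, int] = {}        # read model: view optimized for queries


def project(event: AccountEvent) -> None:
    """Update the read model from one event."""
    _, account_id, amount = event
    balances[account_id] = balances.get(account_id, 0) + amount


def handle_deposit(account_id: str, amount: int) -> None:
    """Command side: validate, then record what happened."""
    if amount <= 0:
        raise ValueError("deposit amount must be positive")
    event = ("deposited", account_id, amount)
    event_log.append(event)
    project(event)  # done inline only to keep the sketch short


def get_balance(account_id: str) -> int:
    """Query side: a single lookup, with no event replay needed."""
    return balances.get(account_id, 0)


handle_deposit("acc-1", 50)
handle_deposit("acc-1", 25)
```

Reads never touch `event_log`, so the read model can be reshaped or rebuilt from the log without changing how commands are handled.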

Discovering Event Information

One of the greatest challenges in event-driven architecture is cataloging services and events. Where do you find event descriptions and details? What is the reason for an event? What team created the event? Are they actively working on it?

Dealing with Change

Will an event schema change? How do you change an event schema without breaking other services? How you answer these questions becomes critical as your number of services and events grows.

Being a good event consumer means coding for schemas that change. Being a good event producer means being cognizant of how your schema changes impact other services and creating well-designed events that are documented clearly.
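One common way for a consumer to code for changing schemas is to read only the fields it needs, supply defaults for optional fields, and ignore anything it does not recognize. The sketch below assumes a hypothetical "order placed" payload in which `currency` was added in a later schema version; the field names and the `USD` default are illustrative assumptions only.

```python
from typing import Any, Dict


def read_order_placed(event: Dict[str, Any]) -> Dict[str, Any]:
    """Extract only what this consumer needs from an event payload."""
    return {
        # Required in every (hypothetical) version; a KeyError here is a real contract break.
        "order_id": event["order_id"],
        "amount_cents": event["amount_cents"],
        # Added in a later version; older events fall back to a default.
        "currency": event.get("currency", "USD"),
        # Any other fields in the payload are deliberately ignored.
    }


old_event = {"order_id": 1, "amount_cents": 500}
new_event = {"order_id": 2, "amount_cents": 750, "currency": "EUR", "gift_wrap": True}

old_order = read_order_placed(old_event)
new_order = read_order_placed(new_event)
```

With this approach a producer can add fields without breaking the consumer, while removing or renaming a required field still fails loudly.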

On Premise vs Hosted Deployment

Regardless of your event framework, you’ll also need to decide between deploying the framework yourself on premise (message brokers are not trivial to operate, especially with high availability), or using a hosted service such as Apache Kafka on Heroku.

Anti-Patterns

As with most architectures, an event-driven architecture comes with its own set of anti-patterns. Here are a few to watch out for.

Too much of a good thing

Be careful you don’t get too excited about creating events. Creating too many events will create unnecessary complexity between the services, increase cognitive load for developers, make deployment and testing more difficult, and cause congestion for event consumers. Not every method needs to be an event.

Generic events

Don’t use generic events, either in name or in purpose. You want other teams to understand why your event exists, what it should be used for, and when it should be used. Events should have a specific purpose and be named accordingly. Events with generic names, or generic events with confusing flags, cause issues.

Complex dependency graphs

Watch out for services that depend on one another and create complex dependency graphs or feedback loops. Each network hop adds additional latency to the original request, particularly north/south network traffic that leaves the datacenter.

Depending on guaranteed order, delivery, or side effects

Events are asynchronous; therefore, building in assumptions that events arrive in order or exactly once will not only add complexity but also negate many of the key benefits of event-based architecture. Likewise, if your consumer has side effects that are not idempotent, such as adding to a value in a database, then you may be unable to recover by replaying events, because a replay would apply the side effect a second time.
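One way to reduce this risk is to make the consumer idempotent, so that a duplicate or replayed event has no additional effect. The sketch below assumes every event carries a unique `event_id`, which the article does not specify; the class name and fields are hypothetical, and a real service would keep the set of seen IDs in durable storage alongside the data it protects.

```python
from typing import Any, Dict, Set


class InventoryConsumer:
    """Applies stock adjustments at most once per event ID."""

    def __init__(self) -> None:
        self.stock: Dict[str, int] = {}
        self._seen_event_ids: Set[str] = set()  # in-memory only for this sketch

    def handle(self, event: Dict[str, Any]) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if event["event_id"] in self._seen_event_ids:
            return False
        sku = event["sku"]
        self.stock[sku] = self.stock.get(sku, 0) + event["quantity"]
        self._seen_event_ids.add(event["event_id"])
        return True


consumer = InventoryConsumer()
event = {"event_id": "evt-1", "sku": "coffee-beans", "quantity": 3}

first = consumer.handle(event)   # applied
second = consumer.handle(event)  # duplicate delivery or replay: ignored
```

Without the ID check, the second delivery would raise the stock to 6, and a replay after a failure would corrupt the data rather than recover it.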

Premature optimization

Most products start off small and grow over time. While you may dream of future needs to scale to a large complex organization, if your team is small then the added complexity of event-driven architectures may actually slow you down. Instead, consider designing your system with a simple architecture but include the necessary separation of concerns so that you can swap it out as your needs grow.

Expecting event-driven to fix everything

On a less technical level, don’t expect event-driven architecture to fix all your problems. While this architecture can certainly improve many areas of technical dysfunction, it can’t fix core problems such as a lack of automated testing, poor team communication, or outdated dev-ops practices.

Learn More

Understanding the pros and cons of event-driven architectures, along with their most common design decisions and challenges, is an important part of creating the best design possible.

If you want to learn more, check out this event-driven reference architecture, which allows you to deploy a working project on Heroku with a single click. This reference architecture creates a web store selling fictional coffee products.

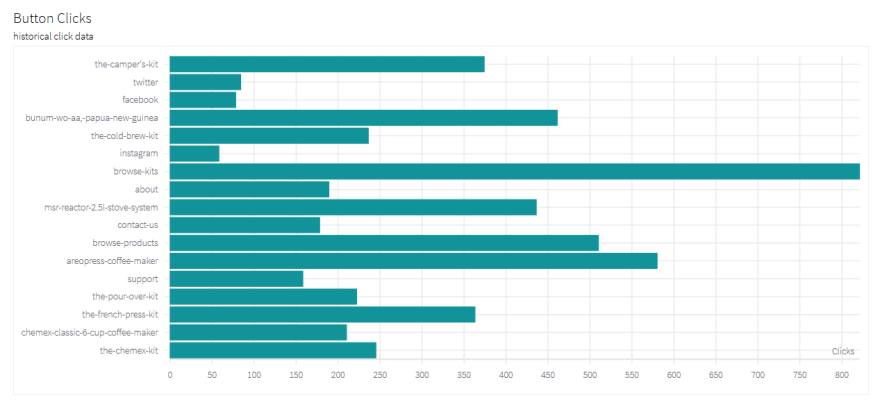

Product clicks are tracked as events and stored in Kafka. Then, they are consumed by a reporting dashboard.

The code is open source so you can modify it according to your needs and run your own experiments.

Top comments (15)

Great Article! We've stumbled upon all of this separately. Cool to see it all in one place. How do you handle "replays" or getting to current state? In a highly decoupled, event based system, microservices don't really know who created the event. So, how would a "new" microservice get up to date with a stream that might be several months or years old? Seems impractical to read back all of the messages of an event stream.

Is a REST endpoint ok to use? But would also introduce some coupling.

thanks!

Great question! I think it depends a little bit on your use case. In my last company we had a limited data retention window so replaying from the beginning of the window was slow but worked. For months or years of data, it sounds like you need a different approach. If the size of the current state is less than the total number of state changes over time (eg. an account balance not an account history), then I have some suggestions. You could load the current state from another canonical source (eg. from a REST endpoint, a database or a replica). Alternatively, you could periodically checkpoint or back up the state to a persistent store, and just replay events from the last checkpoint time to catch up. It's even possible to store checkpoints to another Kafka partition to avoid adding other service dependencies. Hopefully one of those ideas helps :)

Thanks! seems like a combination of snapshots and/or REST endpoints might be the way to go. I was trying to avoid having one service talk directly to another, but "catching up" might be an exception =)

Too funny. Hadn't heard of Pulsar so when I looked it up and saw it was an Apache project I immediately started thinking, "please, nozookeeper nozookeeper nozookeeper nozookeeper, ..., OH G----MN F---KING ZOOKEEPER! Kill it with fire!"

Hi Jason,

Thanks for the article. Where do Amazon SNS and Google Pub/Sub fit in the picture? When would you recommend these services over Apache Kafka?

Those are both great for managed pub/sub messaging. However, they don't guarantee ordering. If order matters to you, such as for time-ordered logs or metrics, then you might want to use Apache Kafka for Heroku or Amazon Kinesis instead. Also consider the delivery guarantees. SNS tries a limited number of times. For more control over expiration, or to reprocess data, then something like Kafka will be better.

What's your view on the idea that microservices are only advantageous for applications with enough complexity?

For low-complexity apps, it suggests that it's better to start monolith (applying loose coupling principles) and only migrate to microservices if really needed in the future.

Yes I definitely agree with this. It's best to avoid premature optimization and unnecessary abstractions. A low complexity app is the ideal design if it fits your business requirements. A microservice architecture adds complexity and latency over method calls, so you need to have a benefit that offsets that.

This is a great article. I recently wrote a similar article explaining event-driven architecture:

dev.to/himoacs/what-is-event-drive...

However, it's unfortunate to see that Solace's PubSub+ event broker is not mentioned. Though, it might be because it's not open source. Nonetheless, anyone who is looking to go the event-driven way should consider a smart enterprise-grade broker that supports all of the features you mentioned in your article (including request/reply).

If nothing else, you will certainly not have to deal with Zookeeper with PubSub+.

And it's available to be used in production for FREE. :)

"REST is much simpler to set up and deploy"

I don't know that I agree with this statement, at least not entirely. If you're setting up all the infrastructure, absolutely.

Pick a tooling like serverless, and it's arguably simpler to set up an event driven app. I can have the skeleton up and running on AWS or Google in very little time.

This!

This is how you make microservices. Anyone that makes SOA should use this. We have so many dependent microservices at work that it's a distributed monolith, painful to work with.

Check this out kalium.alkal.io

There's an easy way to make your stuff reactive using Kalium.

link.medium.com/04k7E87bi0

Thanks for this amazing article!

Great article. Thanks for sharing!