Introduction

Machine learning (ML) is now routinely used for image classification, from identifying objects in the original ImageNet dataset to grading cancerous tumors. Models trained on such datasets, however, are not robust to out-of-distribution (OOD) samples: inputs that differ from the training data, often edge cases, which the models commonly misclassify. There is even an ongoing challenge hosted at the European Conference on Computer Vision (ECCV) that specifically addresses these edge cases. While testing edge cases may seem boring, there is a whole, fast-growing field of ML dedicated to probing the limitations of classification models: adversarial ML.

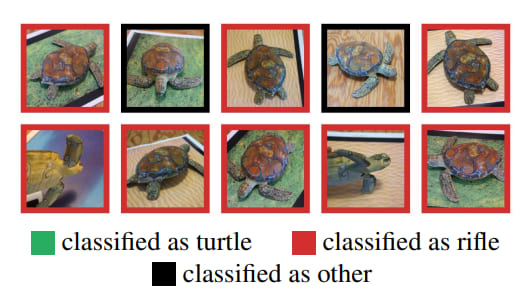

Beyond testing models on their edge cases, adversarial ML is important for keeping such models secure and safe to use. Adversarial attacks are inputs, here image patterns, deliberately crafted to fool a classification model by exploiting knowledge of its weaknesses. There are several strategies for generating such patterns, but one of the easiest to understand starts from the fact that deep learning classifiers are trained with gradient descent, which adjusts the model's weights to minimize a loss function. An attacker can instead hold the weights fixed and ascend the gradient of the loss with respect to the input, maximizing the loss and producing a pattern that, when placed on the object, fools the classifier into thinking the object is something else. This is called an untargeted attack, since any wrong label will do. A targeted attack, on the other hand, fools the classifier into predicting one specific label, such as making a turtle look like a rifle; rather than maximizing the loss for the true label, it minimizes the loss for the chosen target label.
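To make the ascend-versus-descend idea concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the "classifier" is a hypothetical three-class linear softmax model with hand-picked weights (`WEIGHTS`), the input is a four-number stand-in for an image, and the update is a single signed gradient step of size `eps` in the style of the fast gradient sign method. It is not the method of any particular paper, just the simplest version of the principle.

```python
import math

# Hypothetical toy classifier: a fixed linear layer followed by softmax.
# Rows are classes, columns are input features (stand-ins for pixels).
WEIGHTS = [
    [1.0, -1.0, 0.5, 0.0],    # class 0, say "turtle"
    [-1.0, 1.0, 0.0, 0.5],    # class 1, say "rifle"
    [0.0, 0.0, -0.5, -0.5],   # class 2, say "other"
]

def predict_proba(x):
    """Class probabilities for input x (softmax over linear logits)."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in WEIGHTS]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def loss(x, label):
    """Cross-entropy loss of the model on input x for the given label."""
    return -math.log(predict_proba(x)[label])

def loss_gradient(x, label):
    """Gradient of the cross-entropy loss with respect to the INPUT x.

    For a linear softmax model this is W^T (p - onehot(label)).
    """
    p = predict_proba(x)
    err = [p[k] - (1.0 if k == label else 0.0) for k in range(len(p))]
    return [
        sum(err[k] * WEIGHTS[k][j] for k in range(len(WEIGHTS)))
        for j in range(len(x))
    ]

def sign(v):
    return (v > 0) - (v < 0)

def untargeted_step(x, true_label, eps):
    """Ascend the loss for the true label: any wrong answer will do."""
    grad = loss_gradient(x, true_label)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

def targeted_step(x, target_label, eps):
    """Descend the loss for the target label: push toward one specific answer."""
    grad = loss_gradient(x, target_label)
    return [xi - eps * sign(g) for xi, g in zip(x, grad)]

def predicted_label(x):
    probs = predict_proba(x)
    return max(range(len(probs)), key=probs.__getitem__)

x = [1.0, 0.0, 0.5, 0.0]                       # classified as class 0
x_untargeted = untargeted_step(x, true_label=0, eps=1.0)
x_targeted = targeted_step(x, target_label=1, eps=1.0)

print("original:  ", predicted_label(x))
print("untargeted:", predicted_label(x_untargeted))
print("targeted:  ", predicted_label(x_targeted))
```

The only difference between the two attacks is which label the gradient is taken for and which direction the input moves. The step size here is deliberately large so that a single step flips the toy model; real attacks on images typically use much smaller, often iterated, perturbations so the change stays hard for a human to notice.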

Original Paper

The 2017 paper "Synthesizing Robust Adversarial Examples" by Athalye et al. (MIT and LabSix) brought the field of adversarial ML to the public eye when it showed how a specific pattern applied to a 3D-printed turtle's shell fooled a Google image classifier into thinking the turtle was a rifle across a wide range of viewing angles. While it is surprising that such a targeted attack can make a turtle look like a rifle, the more important question on everyone's minds (especially the US Department of Defense) was: can a rifle be made to look like a turtle?

With machine learning finding its way into all aspects of our lives, it is not surprising that the defense industry is leveraging the technology wherever it can. Examples in use today range from classifying vehicles in images for targeting to identifying hidden weapons. If the opposing side knows that such ML models are in use, they can in theory mount adversarial attacks to fool them; this naturally motivates the study of adversarial defenses.

Much like the evolutionary arms race between bacteria and viruses, research on adversarial attacks and adversarial defenses is advancing quickly, with each side racing to keep pace with the other.

Closing Remarks

Personally, I'm excited to see how this field progresses, as it's still in its early stages. One tweet I saw that made me chuckle said that anyone could write a paper on adversarial attacks against, or out-of-distribution samples for, a new state-of-the-art model and get it published easily, which in theory means hundreds of papers every year!
