I've been working on a Call for Code project, called Choirless. I've written a number of posts on here about IBM Cloud Functions, which are used for most of the render pipeline. A few weeks ago we did the "alpha" release of Choirless.

Since then, we've had a number of people contact us about using Choirless. From barbershop choirs to marching bands. At the larger end of the scale, the marching bands have around 100-120 musicians performing at once.

Could Choirless handle that many? Well... we certainly want it to, but out of interest could the 'alpha' version we've put together in the past 8 weeks cope with it? I decided to give it a try!

The rendering pipeline is a series of IBM Cloud Functions, that have triggers on IBM Cloud Object Storage (COS) buckets. So a file gets uploaded from the Choirless front end to the first COS bucket, and then a trigger fires calling a serverless function to process it and put the output in a different bucket. That bucket in turn has triggers on it, and so on. Until we get to the end.

The current pipeline processing stages are:

- Convert the raw video file to standard format, reduce size, normalise audio loudness

- Calculate the alignment with the reference audio track

- Trim the audio/video based on alignment calculation

- Stitch all the videos together to produce final video

- Take snapshot jpg of video

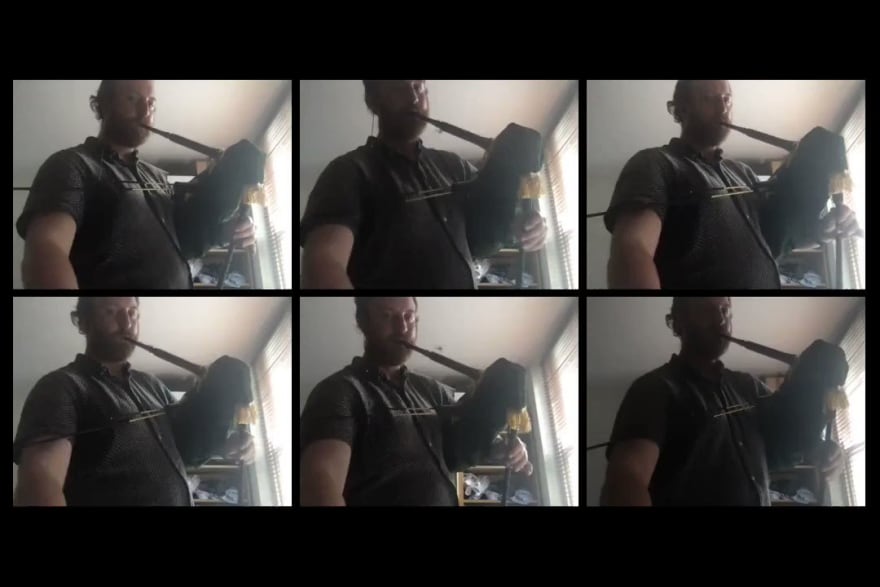

My colleague, Sean, got out his bagpipes and recorded 6 renditions of a particular piece of music. This was how that came out on Choirless to start with:

Decided to test out some of the latest choirless.com alignment technologies with my practice #Bagpipes.

Decided to test out some of the latest choirless.com alignment technologies with my practice #Bagpipes.

This is why my neighbours hate me 😂

(The bits out of sync aren't the algorithms fault, that's just me struggling to play without a drum beat 😅)18:52 PM - 21 Jul 2020

So to stress test the render pipeline I was going to need to replicate Sean's videos multiple times. So I create a new cloud function called "wateraftermidnight" (can you guess the reference?). This serverless function counted how many "gremlin" pieces there are in the COS bucket:

choir_id = args['choir_id']

song_id = args['song_id']

num_gremlins = int(args['num_gremlins'])

bucket = "choirless-videos-converted"

prefix_key = f"{choir_id}+{song_id}"

# list all objects in the COS bucket

contents = cos.list_objects(

Bucket=bucket,

Prefix=prefix_key

)

# Just get the "keys" (filenames)

files = [ x['Key'] for x in contents['Contents'] ]

# Find the gremlins and non-gremlins

gremlins = [ x for x in files if 'gremlin' in x ]

non_gremlins = [ x for x in files if 'gremlin' not in x ]

# if we have more gremlins than we need, abort

if len(gremlins) > num_gremlins:

args["success"] = "true"

return args

# pick a random non_gremlins as the source file

source_key = random.choice(non_gremlins)

So by this point we have checked if we have got enough files, if so we abort.

Later on in the code, we take the source part and apply a random delay to the audio of up to 50ms

i = len(gremlins) + 1

new_part = f"gremlin-{i}"

new_filename = f"{choir_id}+{song_id}+{part_id}-{new_part}{file_path.suffix}"

new_path = file_path.with_name(new_filename)

delay = random.randint(0,50)

stream = ffmpeg.input(str(file_path))

audio = stream.filter_('adelay', delays=[delay, delay]).filter_('asetpts', 'PTS-STARTPTS')

video = stream.video

out = ffmpeg.output(audio, video, str(new_path))

stdout, stderr = out.run()

This is so that we simulate pieces being out a sync slightly so that our sync code in our pipeline has some work to do.

At the end, we call ourselves again, so this function will run over and over again until we have enough "gremlins"

url = "https://eu-gb.functions.appdomain.cloud/api/v1/web/1d0ffa5a-835d-4c40-ac80-77ca4a35f028/choirless/wateraftermidnight.json"

data = {'blocking': False,

'choir_id': args['choir_id'],

'song_id': args['song_id'],

'num_gremlins': args['num_gremlins'],

'endpoint': args['endpoint'],

'auth': args['auth'],

}

headers = {'X-Require-Whisk-Auth': args['auth']}

response = requests.post(url,

headers=headers,

json=data)

How did it perform? Well as the number of parts increased, it certainly took longer to process. Eventually we hit the 10 minute max processing time for an IBM Cloud Function:

2020-07-25 17:48:57 9b844c7035a54743844c7035a5574376 blackbox warm 10m0.1s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:48:46 deb00965e52a4517b00965e52a451702 blackbox warm 8m16.267s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:48:23 803eebed9a094efbbeebed9a094efb8d blackbox cold 10m0.887s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:48:14 9a3f147606e84495bf147606e8b49567 blackbox cold 10m0.738s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:47:56 b51ddd379e0841a49ddd379e08e1a43b blackbox warm 10m0.1s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:47:37 2360edede1bf4c81a0edede1bfcc81f9 blackbox warm 10m0.1s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:47:08 51d0a0c7a58c4a8c90a0c7a58c7a8c97 blackbox cold 10m0.9s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:45:56 87e813b70ed0489ba813b70ed0a89b33 blackbox cold 10m0.654s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:45:49 cd6ecfbfc80b468aaecfbfc80b968a76 blackbox cold 5m48.593s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:45:47 579fe9bf797e4d799fe9bf797ecd7990 blackbox cold 10m0.758s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:45:29 0590fda27cb041a490fda27cb061a489 blackbox cold 5m37.71s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:44:35 8b00060e460d40f480060e460db0f446 blackbox cold 10m0.588s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:42:59 2fdfd1b0f6684d629fd1b0f668bd6255 blackbox cold 5m47.901s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:42:48 397d601be46843eebd601be468f3ee10 blackbox warm 5m39.129s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:42:22 fdaec354afa848faaec354afa828fa6e blackbox warm 7m30.957s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 17:42:13 bdcb0526692047468b052669204746ac blackbox warm 8m22.11s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

Originally step 1. above in our pipeline was reducing our input video files down to a max width of 640px. But if we have 120 videos on a screen, then the final videos will each be tiny. So I wrote a script to reduce them all down further to 160px each. And re-ran the experiment:

% ic fn action invoke choirless/wateraftermidnight --param choir_id 001jr0nG1EKeJr2W13rs3ZIZi42mi3fO --param song_id 001jxwlK1NkxRI0Y7T3S1jUSHW2Ndy5D --param num_gremlins 120

It was still close to the 10m limit, but in managed to get through, with the final run being 7m37s:

2020-07-25 20:33:31 7f8a96c60f544dd28a96c60f540dd217 blackbox cold 7m37.326s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:31 89326734e14d4dacb26734e14d7dacbf blackbox cold 8m46.551s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:30 d779c856e14a4836b9c856e14a083602 blackbox cold 10m0.612s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:24 62f3899173ee4a7db3899173ee6a7de7 blackbox cold 10m0.922s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:12 fc7a69b025614514ba69b02561c5147c blackbox cold 10m0.725s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:06 d5981e250aca4d75981e250aca0d75f3 blackbox cold 10m0.547s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:05 adf6fc4789d746bbb6fc4789d7d6bbd4 blackbox cold 10m0.58s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:04 f16bbd2d6f754109abbd2d6f75410992 blackbox cold 8m27.366s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:04 4481728f79b246e281728f79b206e25f blackbox cold 8m39.499s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:49:01 1e4f7485d9364bfc8f7485d936abfc27 blackbox warm 7m50.131s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:48:54 79a5603cd3744b05a5603cd3742b0599 blackbox warm 9m39.024s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:48:52 1d6c6d0a42ff4661ac6d0a42ffa661b7 blackbox cold 7m32.764s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:48:52 02b087103f444b56b087103f44fb567f blackbox cold 7m24.082s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:48:49 17f4fdea027148f2b4fdea027188f27b blackbox warm 10m0.102s developer error 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:48:48 9258a88f95864ddd98a88f95860dddda blackbox cold 9m54.567s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

2020-07-25 19:48:46 d38d78db20ee48e38d78db20eea8e31e blackbox cold 7m34.876s success 1d0ffa5a-8...a35f028/stitcher:0.0.1

And how did it come out? Pretty amazingly!

I was expecting it to fail due to running out of temp disk space, or file descriptors or memory... but it runs in under 2GB of RAM.

This is very promising. We are already planning a re-write of the rendering pipeline to break it up into stages a bit more. And the part that actually forms the ffmpeg command to run, currently in node.js will be re-written in Python and made more modular so we can add additional functionality in (adjust volume of individual parts, manually tune the panning, etc)

Latest comments (0)