Introduction

As many of you will already know, I am Head of Technology at FirstPort.

A key part of my role is delivering FirstPort’s vision of ‘People First’ technology. To do this, it is imperative that I select the right technology to underpin the delivery of services that help make customers’ lives easier.

Today I want to talk about my selection of SonarQube (and a whole host of other cool tools and services such as GitHub Actions, Terraform, Caddy, Let's Encrypt, Docker and more).

SonarQube is the leading tool for continuously inspecting the code quality and security of your codebases and guiding development teams during code reviews.

Building our Container Image

I did not want to use SonarCloud, nor did I want to host SonarQube on a VM, so I decided on ACI (Azure Container Instances).

However, when trying to use ACI with an external database, I found that any version of SonarQube after 7.7 throws an error:

ERROR: [1] bootstrap checks failed

[1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

I found this was because SonarQube uses an embedded Elasticsearch, so your Docker host configuration must comply with the Elasticsearch production mode requirements.

As those requirements suggest, fixing this means changing the host OS settings to increase max_map_count. On a Linux host, that means adding the following line to the /etc/sysctl.conf file:

vm.max_map_count=262144

The problem with ACI is that there is no access to the host, so how can the latest SonarQube (9.0.1 at the time of writing) be run in ACI if this setting cannot be changed?

In this blog I am going to detail the way we run SonarQube in Azure Container Instances with an external Azure SQL database.

Here at FirstPort, we also use Terraform to build the Azure infrastructure and, of course, GitHub Actions to run our workflows.

Next Steps

The first thing is to address the max_map_count issue. For this we need a sonar.properties file that contains the following setting:

sonar.search.javaAdditionalOpts=-Dnode.store.allow_mmap=false

This setting disables memory mapping in Elasticsearch, which is needed when running SonarQube inside containers where you cannot change the host's vm.max_map_count. (See the Elasticsearch documentation.)

Now that we have our sonar.properties file, we need to create a custom container image that includes it. A small Dockerfile can achieve this:

FROM sonarqube:9.0.1-community
COPY sonar.properties /opt/sonarqube/conf/sonar.properties
RUN chown sonarqube:sonarqube /opt/sonarqube/conf/sonar.properties

This Dockerfile is now ready to be built using Docker and pushed to an ACR (Azure Container Registry).

For more information on how to build a container image and push it to an ACR, have a look at the Docker and Microsoft documentation, which have easy-to-follow instructions.

We first build the ACR using Terraform:

resource "azurerm_container_registry" "acr" {
  name                = join("", [var.product, "acr", var.location, var.environment])
  resource_group_name = azurerm_resource_group.rg.name
  location            = azurerm_resource_group.rg.location
  admin_enabled       = true
  sku                 = "Standard"
  tags                = local.tags
}

We then apply it using our standard workflow for running Terraform in GitHub Actions.
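The Terraform snippets in this post reference var.product, var.location, var.environment, local.tags and azurerm_resource_group.rg without showing them. As a rough sketch of what those supporting definitions could look like (the defaults, tag keys and resource group naming are assumptions for illustration only):

```hcl
# Hypothetical supporting definitions; every value here is a placeholder.
provider "azurerm" {
  features {}
}

variable "product" {
  type        = string
  description = "Short product name used as a prefix in resource names"
  default     = "sonar"
}

variable "location" {
  type        = string
  description = "Azure region short name, for example uksouth"
  default     = "uksouth"
}

variable "environment" {
  type        = string
  description = "Environment suffix, for example prod"
  default     = "prod"
}

locals {
  # Assumed tag keys; use whatever your organisation standardises on.
  tags = {
    product     = var.product
    environment = var.environment
  }
}

resource "azurerm_resource_group" "rg" {
  name     = join("-", [var.product, var.location, var.environment, "rg"])
  location = var.location
  tags     = local.tags
}
```

Because the registry and storage account names are built by joining these values with no separator, keeping them short, lowercase and alphanumeric helps stay within Azure's naming rules for those resources.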

Next we use GitHub Actions to build and push our container image to the ACR set up above. Note the cd container line: our Dockerfile and sonar.properties files live in a folder called container (the sonar folder contains all the Terraform files for the rest of the infrastructure):

name: Build Container Image & Push to ACR

on:
  workflow_dispatch:

jobs:
  build:
    name: Build Container & Push to ACR
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        # Pinned to a release tag rather than a moving branch
        uses: actions/checkout@v4

      - name: ACR login
        uses: azure/docker-login@v1
        with:
          login-server: acrname.azurecr.io
          username: acrusername
          password: ${{ secrets.REGISTRY_PASSWORD }}

      - name: Build and push image
        run: |
          cd container && docker build . -t acrname.azurecr.io/acrrepo:${{ github.sha }}
          docker push acrname.azurecr.io/acrrepo:${{ github.sha }}

Building the SonarQube Infrastructure

So now that we have a container image uploaded to ACR we can look at the rest of the configuration.

There are a number of parts to create:

- File shares

- External Database

- Container Group

- SonarQube

- Reverse Proxy

At FirstPort our default is to use IaC (Infrastructure as Code), so I will show you how I use Terraform to configure the SonarQube infrastructure.

File Shares

The SonarQube documentation mentions setting up volume mounts for data, extensions and logs. For this I use an Azure Storage Account and file shares.

resource "azurerm_storage_account" "storage" {
  name                     = join("", [var.product, "strg", var.location, var.environment])
  location                 = azurerm_resource_group.rg.location
  resource_group_name      = azurerm_resource_group.rg.name
  account_tier             = "Standard"
  account_replication_type = "RAGZRS"
  min_tls_version          = "TLS1_2"
  tags                     = local.tags
}

resource "azurerm_storage_share" "data-share" {
  name                 = "data"
  storage_account_name = azurerm_storage_account.storage.name
  quota                = 50
}

resource "azurerm_storage_share" "extensions-share" {
  name                 = "extensions"
  storage_account_name = azurerm_storage_account.storage.name
  quota                = 50
}

resource "azurerm_storage_share" "logs-share" {
  name                 = "logs"
  storage_account_name = azurerm_storage_account.storage.name
  quota                = 50
}
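The three shares differ only by name, so they could also be declared once with for_each. This is an alternative sketch rather than the configuration shown above, and the resource name sonar_shares is made up for the example:

```hcl
# Alternative sketch: one resource block that creates all three shares.
resource "azurerm_storage_share" "sonar_shares" {
  for_each = toset(["data", "extensions", "logs"])

  name                 = each.key
  storage_account_name = azurerm_storage_account.storage.name
  quota                = 50
}
```

An individual share would then be addressed as azurerm_storage_share.sonar_shares["data"].name.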

External Database

For the external database I am using an Azure SQL Server and a SQL Database, and I set up a firewall rule to allow Azure services to access the database.

Using the random_password resource to generate the SQL password means no secrets are hard-coded in the configuration, and nobody needs to know the password as long as the SonarQube server does.

resource "azurerm_mssql_server" "sql_server" {
  name                         = join("", [var.product, "sql", var.location, var.environment])
  location                     = azurerm_resource_group.rg.location
  resource_group_name          = azurerm_resource_group.rg.name
  version                      = "12.0"
  administrator_login          = "sonaradmin"
  administrator_login_password = random_password.sql_admin_password.result
  minimum_tls_version          = "1.2"

  identity {
    type = "SystemAssigned"
  }

  tags = local.tags
}

resource "azurerm_mssql_server_transparent_data_encryption" "sql_tde" {
  server_id = azurerm_mssql_server.sql_server.id
}

resource "azurerm_sql_firewall_rule" "sql_firewall_azure" {
  name                = "AllowAccessToAzure"
  resource_group_name = azurerm_resource_group.rg.name
  server_name         = azurerm_mssql_server.sql_server.name
  start_ip_address    = "0.0.0.0"
  end_ip_address      = "0.0.0.0"
}

resource "azurerm_mssql_database" "sonar" {
  name      = "sonar"
  server_id = azurerm_mssql_server.sql_server.id
  collation = "SQL_Latin1_General_CP1_CS_AS"
  sku_name  = "S2"
  tags      = local.tags
}

resource "random_password" "sql_admin_password" {
  length           = 32
  special          = true
  override_special = "/@\" "
}
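One caveat if you are on a newer azurerm provider: azurerm_sql_firewall_rule has since been deprecated in favour of azurerm_mssql_firewall_rule, which takes a server_id instead of the server and resource group names. A sketch of the equivalent rule, assuming a provider version that ships the newer resource:

```hcl
# Equivalent rule for newer azurerm providers (assumes azurerm_mssql_firewall_rule is available).
resource "azurerm_mssql_firewall_rule" "sql_firewall_azure" {
  name      = "AllowAccessToAzure"
  server_id = azurerm_mssql_server.sql_server.id

  # The 0.0.0.0 to 0.0.0.0 range is the special value that allows Azure services through.
  start_ip_address = "0.0.0.0"
  end_ip_address   = "0.0.0.0"
}
```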

Container Group

Setting up the container group requires credentials to access the Azure Container Registry so that it can pull the custom SonarQube image. Using a data source allows retrieval of these details without passing them in as variables:

data "azurerm_container_registry" "registry" {
  name                = "acrname"
  resource_group_name = "acr-rg-name"
}

For this setup we are going to have two containers: the custom SonarQube container and a Caddy container. Caddy can be used as a reverse proxy; it is small and lightweight, and it manages certificates automatically with Let’s Encrypt.

The SonarQube container configuration connects the SQL Database and Azure Storage Account Shares configured earlier.

The Caddy container configuration sets up the reverse proxy to the SonarQube instance:

resource "azurerm_container_group" "container" {
  name                = "containergroupname"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  ip_address_type     = "public"
  dns_name_label      = "acrdnslabel"
  os_type             = "Linux"
  restart_policy      = "OnFailure"
  tags                = local.tags

  image_registry_credential {
    server   = data.azurerm_container_registry.registry.login_server
    username = data.azurerm_container_registry.registry.admin_username
    password = data.azurerm_container_registry.registry.admin_password
  }

  container {
    name   = "sonarqube-server"
    image  = "${data.azurerm_container_registry.registry.login_server}/acrrepo:latest"
    cpu    = "2"
    memory = "4"

    environment_variables = {
      WEBSITES_CONTAINER_START_TIME_LIMIT = 400
    }

    secure_environment_variables = {
      SONARQUBE_JDBC_URL      = "jdbc:sqlserver://${azurerm_mssql_server.sql_server.name}.database.windows.net:1433;database=${azurerm_mssql_database.sonar.name};user=${azurerm_mssql_server.sql_server.administrator_login}@${azurerm_mssql_server.sql_server.name};password=${azurerm_mssql_server.sql_server.administrator_login_password};encrypt=true;trustServerCertificate=false;hostNameInCertificate=*.database.windows.net;loginTimeout=30;"
      SONARQUBE_JDBC_USERNAME = azurerm_mssql_server.sql_server.administrator_login
      SONARQUBE_JDBC_PASSWORD = random_password.sql_admin_password.result
    }

    ports {
      port     = 9000
      protocol = "TCP"
    }

    volume {
      name                 = "data"
      mount_path           = "/opt/sonarqube/data"
      share_name           = "data"
      storage_account_name = azurerm_storage_account.storage.name
      storage_account_key  = azurerm_storage_account.storage.primary_access_key
    }

    volume {
      name                 = "extensions"
      mount_path           = "/opt/sonarqube/extensions"
      share_name           = "extensions"
      storage_account_name = azurerm_storage_account.storage.name
      storage_account_key  = azurerm_storage_account.storage.primary_access_key
    }

    volume {
      name                 = "logs"
      mount_path           = "/opt/sonarqube/logs"
      share_name           = "logs"
      storage_account_name = azurerm_storage_account.storage.name
      storage_account_key  = azurerm_storage_account.storage.primary_access_key
    }
  }

  container {
    name     = "caddy-ssl-server"
    image    = "caddy:latest"
    cpu      = "1"
    memory   = "1"
    commands = ["caddy", "reverse-proxy", "--from", "acrrepo.azurecontainer.io", "--to", "localhost:9000"]

    ports {
      port     = 443
      protocol = "TCP"
    }

    ports {
      port     = 80
      protocol = "TCP"
    }
  }
}
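As written, the workflow earlier pushes the image tagged with the commit SHA, while the container group pulls acrrepo:latest, and the Caddy --from host is a placeholder. One way to keep these in step is to pass the tag in as a variable and derive the hostname from the DNS label. This is only a sketch: var.image_tag, local.dns_label, local.sonarqube_image, local.sonarqube_fqdn and the sonarqube_url output are hypothetical names introduced for the example.

```hcl
variable "image_tag" {
  type        = string
  description = "Tag of the custom SonarQube image, for example the commit SHA pushed by the workflow"
}

locals {
  dns_label = "acrdnslabel"

  # Image reference for the sonarqube-server container.
  sonarqube_image = "${data.azurerm_container_registry.registry.login_server}/acrrepo:${var.image_tag}"

  # ACI public FQDNs take the form <label>.<region>.azurecontainer.io.
  sonarqube_fqdn = "${local.dns_label}.${azurerm_resource_group.rg.location}.azurecontainer.io"
}

# Handy for finding the address to open once the deployment finishes.
output "sonarqube_url" {
  value = "https://${azurerm_container_group.container.fqdn}"
}
```

The container group would then set image = local.sonarqube_image and dns_name_label = local.dns_label, and pass local.sonarqube_fqdn as the --from argument to Caddy.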

Final Configuration

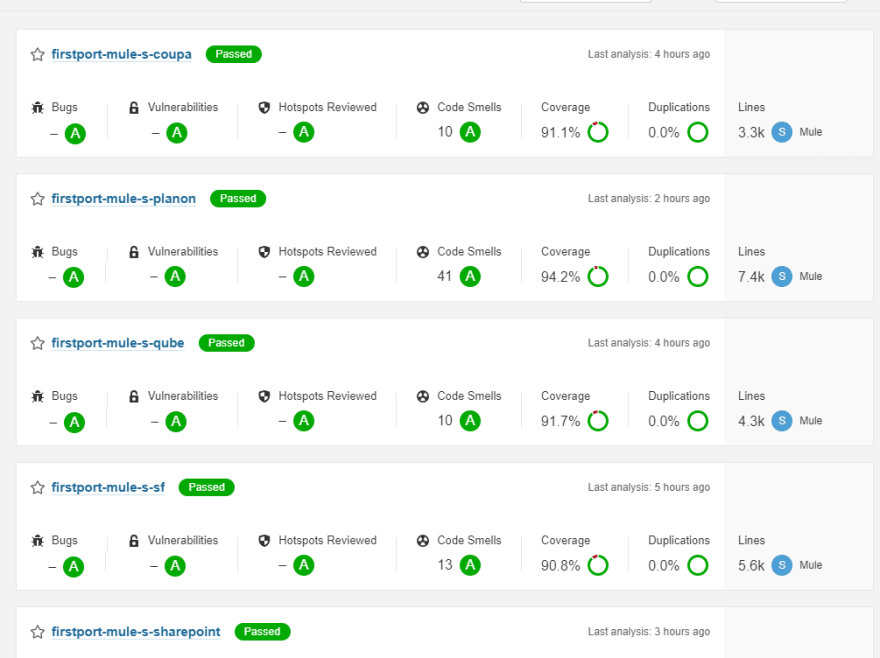

Just follow the SonarQube documentation for your specific source control. Then add the required steps to your application code GitHub Actions workflows, then you will see something like this within the SonarQube dashboard:

Next Steps

Once the container instance is running, you probably do not want it running 24/7, so using an Azure Function or Logic App to stop and start the instance when it's not needed will save money. I plan to run an Azure Logic App to start the container at 08:00 and stop it at 18:00, Monday to Friday (I can feel another blog post coming on!).
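Until that post exists, here is a rough Terraform sketch of just the scheduling half of that idea: two Logic App workflows, each with a weekday recurrence trigger. The local and resource names are hypothetical, the hours are interpreted as UTC unless a time zone is configured, and the actions that actually start and stop the container group are deliberately left out.

```hcl
locals {
  # Hypothetical schedule: operation name => hour of the day to run it.
  sonar_schedule = {
    start = 8
    stop  = 18
  }
}

# One empty workflow per operation; the start/stop action itself is not shown.
resource "azurerm_logic_app_workflow" "sonar_schedule" {
  for_each = local.sonar_schedule

  name                = "sonar-${each.key}"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
  tags                = local.tags
}

# Fires once per weekday at the configured hour.
resource "azurerm_logic_app_trigger_recurrence" "sonar_schedule" {
  for_each = local.sonar_schedule

  name         = "weekdays-${each.key}"
  logic_app_id = azurerm_logic_app_workflow.sonar_schedule[each.key].id
  frequency    = "Week"
  interval     = 1

  schedule {
    on_these_days    = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"]
    at_these_hours   = [each.value]
    at_these_minutes = [0]
  }
}
```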

I hope I could help you learn something new today, and share how we do things here at FirstPort.

Any questions, get in touch on Twitter.

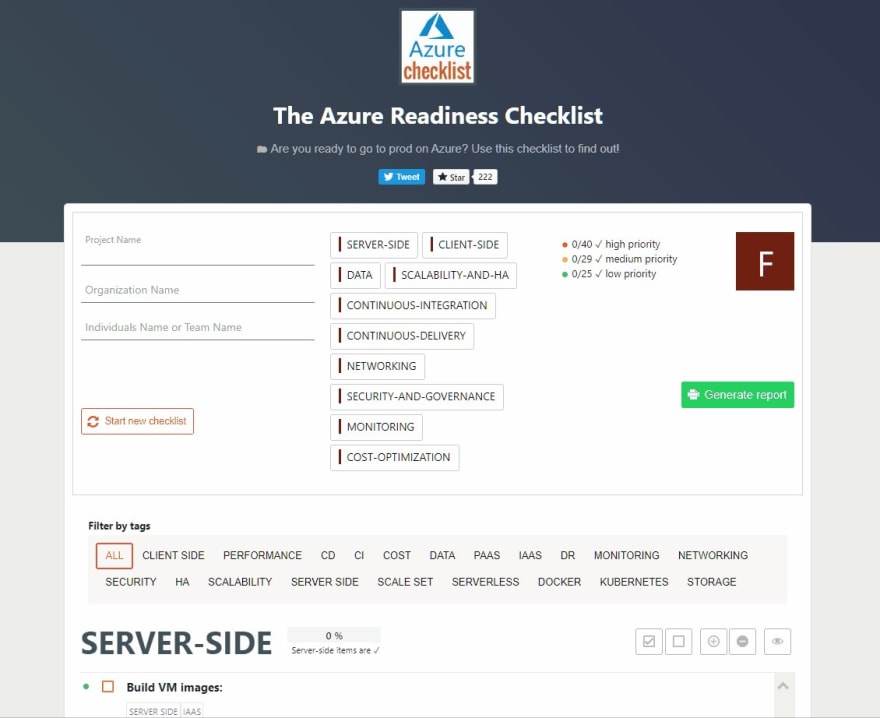

✨Azure Readiness Checklist✨

✨Azure Readiness Checklist✨

Are you ready to go to prod on Azure? Use this checklist to find out: azurechecklist.com

Call for #contributors! - Help make this THE go to list for #Azure

#AzureDevOps #OpenSource - PLS RT ❤️09:24 AM - 01 Nov 2019
