Let's say you want to get all the URLs from the dev.to homepage. How would you do that? Today, we'll use Python to extract every link from the dev.to homepage.

Step 1: Install Pip3

If you have pip3 already installed, feel free to skip this part.

To check whether pip3 is installed, open Command Prompt on Windows, a shell on Linux, or Terminal on macOS, and run the following command.

pip3 -v

If it returns something that looks like this, you are good to go:

Usage:

C:\Python38\python.exe -m pip <command> [options]

Commands:

install Install packages.

download Download packages.

uninstall Uninstall packages.

freeze Output installed packages in requirements format.

list List installed packages.

show Show information about installed packages.

...

Otherwise, read this guide to install pip3:

pip3 installation instructions

Step 2: Install Dependencies

If you already have requests and bs4 installed, feel free to skip this step. Otherwise, open Command Prompt (Terminal on macOS) and run the following commands.

pip3 install bs4

pip3 install requests
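If you are not sure whether the installs went through, here is a small sketch that checks it from Python itself. The helper name missing_modules is made up for this example; it only uses the standard library's importlib.util.find_spec to see whether each module can be found.

```python
import importlib.util


def missing_modules(names):
    """Return the module names from `names` that Python cannot find."""
    # find_spec returns None when a top-level module is not installed
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(["requests", "bs4"])
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("requests and bs4 are both installed.")
```

If anything is listed as missing, run the matching pip3 install command again.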

Step 3: Coding

Open your IDE of choice. I'll be using Visual Studio Code, which you can download below:

Visual Studio Code download

Now create a folder anywhere on your computer, open it in your IDE (File > Open Folder in VS Code), then create a file in the folder and name it scrape.py.

After that, paste the following code into the Python file.

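import requests
from bs4 import BeautifulSoup


def listToString(s):
    # helper (not used below): join the items into one string, one per line
    str1 = ""
    for ele in s:
        str1 += ele + '\n'
    return str1


links = []
url = "https://dev.to"

# download the page and take its HTML as text
website = requests.get(url)
website_text = website.text

# name the parser explicitly so BeautifulSoup does not warn about guessing one
soup = BeautifulSoup(website_text, "html.parser")

# collect the href of every anchor tag (None if a tag has no href)
for link in soup.find_all('a'):
    links.append(link.get('href'))

# print each link, then the total count
for link in links:
    print(link)

print(len(links))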

Basically, you use requests to fetch the HTML document from the URL you provided, then you collect every anchor tag (link) on the page and print out its href.
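To see the "collect every anchor tag" part on its own, here is a minimal sketch that runs without a network connection or extra packages. Note that it swaps BeautifulSoup for the standard library's html.parser, and the names LinkCollector, extract_links, and sample_html are invented for this example, so treat it as an illustration of the idea rather than the script above.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; href may be absent
            self.links.append(dict(attrs).get("href"))


def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


# made-up HTML standing in for a downloaded page
sample_html = """
<html><body>
  <a href="/top">Top posts</a>
  <a href="https://dev.to/about">About</a>
  <a>No href here</a>
</body></html>
"""

print(extract_links(sample_html))
# → ['/top', 'https://dev.to/about', None]
```

Just like link.get('href') in the script, an anchor without an href shows up as None.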

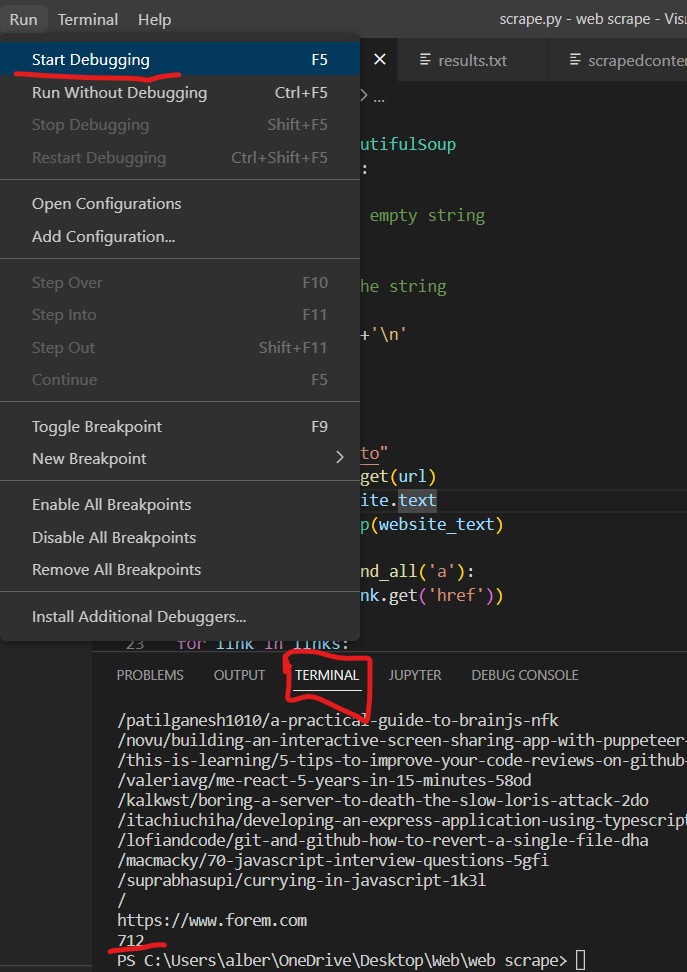

Now if you run the file (Run > Start Debugging in VS Code), you should see quite a few links printed out, followed by the total number of links.
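Some of the printed links will likely be relative (like /top), repeated, or None. If you would rather have a clean list of full URLs, one possible follow-up is sketched below. The function clean_links and the sample list raw are assumptions for illustration; the only library call is urljoin from the standard library.

```python
from urllib.parse import urljoin


def clean_links(links, base_url):
    """Return unique absolute http(s) URLs, keeping first-seen order."""
    seen = set()
    cleaned = []
    for href in links:
        if not href:
            continue  # skip None and empty hrefs
        absolute = urljoin(base_url, href)  # resolves relative links
        if not absolute.startswith(("http://", "https://")):
            continue  # skip mailto:, javascript:, and similar
        if absolute not in seen:
            seen.add(absolute)
            cleaned.append(absolute)
    return cleaned


# made-up sample of what the links list might contain
raw = ["/top", None, "https://dev.to/about", "/top", "mailto:hi@example.com"]

for link in clean_links(raw, "https://dev.to"):
    print(link)
```

In the script you would call clean_links(links, url) after the first loop and print that list instead.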

Conclusion

I hope this worked for you. It took me a bit of trial and error to get this working, so please comment below if it did not work for you and I'll be in touch ASAP.

Anyway, thanks a lot and have a good day.

Top comments (3)

Thanks for your info Wahyu.

Hello!

It's great! Unfortunately, it won't work with dynamic pages. For those, use Selenium.

Thanks JoseXS for your response.