Whether you write a frontend application, a backend application, or some tooling in JavaScript or TypeScript, you'll eventually end up using a bundler to optimize your assets for delivery.

Bundling can not only transpile files (such as going from TypeScript to JavaScript), combine multiple files (concatenating different modules by wrapping them in functions), and remove unused code; it can also remove dependencies from your package by inlining the code you actually use. This is an easy way of reducing the weight of an npm package.

The general working principle of a bundler is shown below.

No matter why you use a bundler, in this article I'll show you yet another trick you can do with the bundler of your choice: using codegen modules to dynamically insert modules at bundling (i.e., build) time. These modules are generated on the fly. Let's start with a simple example.

Example: Inserting Package Information

The situation is simple: you are tasked with adding two static pieces of information to your frontend application, namely

- The name of the application from the package.json, and

- The version of the application from the package.json.

Luckily, almost all bundlers support importing .json files. They are treated like a module with a default export, i.e., an object holding the JSON content.

In code this could look like:

```javascript
import { name, version } from '../package.json';

console.log('The name and version is:', name, version);
```

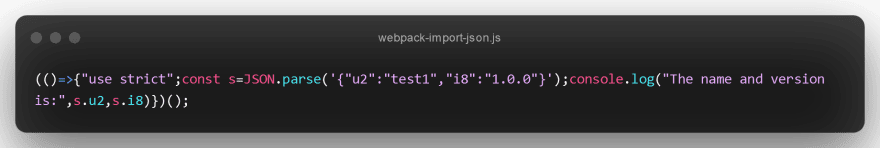

Webpack (v5):

This is already well optimized because we are using an import construct. Most bundlers treat imports as side-effect free, or they determine tree-shakeability from the sideEffects flag in the underlying package.json. Either way, the code above is already good. But what if we had used require instead?

```javascript
const { name, version } = require('../package.json');

console.log('The name and version is:', name, version);
```

Webpack (v5):

In this variant the whole output is not only unnecessarily verbose. It also contains potentially sensitive information, such as the dependencies used and the build commands, because the entire package.json is bundled. Really not ideal.

What if we could just generate a module on the fly that only exports the properties we are interested in (name and version)?

```javascript
const { name, version } = require('./info.codegen');

console.log('The name and version is:', name, version);
```

In this case, info.codegen looks like this:

```javascript
module.exports = function () {
  const { name, version } = require('../package.json');

  return `
export const name = ${JSON.stringify(name)};
export const version = ${JSON.stringify(version)};
`;
};
```

This is just a standard Node.js (CommonJS) module. If we run the exported function, we get back a string with valid JavaScript code. That string is the module Webpack will consider; the imported file is only the definition used to generate it.
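To see what the bundler actually receives, you can call such a factory by hand. The sketch below does not require the real file. Instead, it inlines an assumed package.json object (`pkg`, with made-up values) so that it runs anywhere. Notice that only name and version end up in the generated code, and the dependencies do not:

```javascript
// Assumed stand-in for the project's package.json (made-up values)
const pkg = { name: 'my-app', version: '1.2.3', dependencies: { react: '^18.0.0' } };

// Same shape as info.codegen, but reading from `pkg` instead of ../package.json
function infoCodegen() {
  const { name, version } = pkg;

  return `
export const name = ${JSON.stringify(name)};
export const version = ${JSON.stringify(version)};
`;
}

// This string is what the bundler treats as the content of info.codegen
const generated = infoCodegen();
console.log(generated);
```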

The process is shown below.

Now what does that give us?

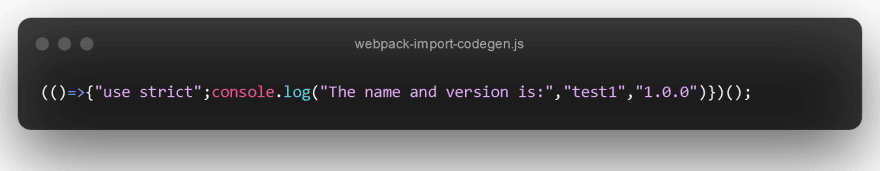

Webpack (v5):

Since we are pulling in another JS module via require, Webpack still adds some overhead for its module definitions. Overall, though, the information is condensed to the format we'd like to see. The output gets even better with import, where this overhead can be removed cleanly:

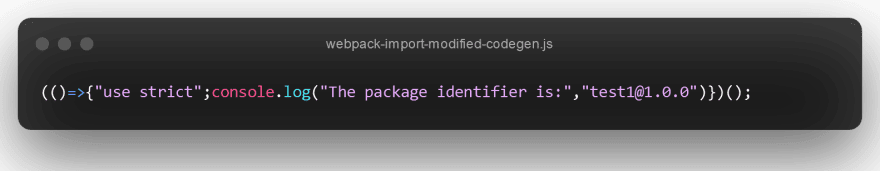

Those few saved bytes are, of course, not the full spectrum of code generation benefits, but they already show an advantage. Now suppose you are not interested in name and version individually, but rather in a package identifier. Originally you'd have written:

```javascript
import { name, version } from '../package.json';

const identifier = `${name}@${version}`;

console.log('The package identifier is:', identifier);
```

Resulting in the following code (Webpack v5):

With codegen, however, you can skip this computation at runtime. You just prepare everything exactly as you want it:

```javascript
// index.js
import { packageId } from './info.codegen';

console.log('The package identifier is:', packageId);

// info.codegen
module.exports = function () {
  const { name, version } = require('../package.json');

  return `
export const packageId = ${JSON.stringify(`${name}@${version}`)};
`;
};
```

With this, the resulting code (Webpack v5) looks like:

The result is not only smaller; it also requires no computation at runtime. Everything that can be pre-computed is pre-computed.

How Does It Work?

The beauty of this approach is that a module transformation has direct access not only to Node.js, but also to the module itself. As such, it can simply require the module or evaluate it in a sandbox. Afterwards, the exported function is run to get back a string. This string is the result of the code transformation.

The whole process works as shown in the following diagram.

In theory, the string does not have to be JavaScript code. It could also be the content of an asset file or something that transpiles to JavaScript (like TypeScript). In practice, however, JavaScript makes the most sense.
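To make this more concrete, here is a minimal sketch of such a transformation step built on Node's built-in vm and module APIs. The helper name `runCodegen` and the `context` object passed as `this` are hypothetical. This is not the code of any plugin listed under Bundler Support, and those plugins may evaluate the file differently (e.g., with a plain require):

```javascript
const vm = require('vm');
const { createRequire } = require('module');
const { resolve, dirname } = require('path');

// Hypothetical helper: evaluates the source of a .codegen file as a CommonJS
// module in a fresh V8 context, runs the exported function, and returns the
// generated module code. Note: vm isolates globals but is no security boundary.
async function runCodegen(source, filename, context = {}) {
  const absolute = resolve(filename);
  const mod = { exports: {} };
  const sandbox = {
    module: mod,
    exports: mod.exports,
    // resolve require() calls relative to the .codegen file, not to this helper
    require: createRequire(absolute),
    __filename: absolute,
    __dirname: dirname(absolute),
    process,
    console,
  };

  vm.runInNewContext(source, sandbox, { filename: absolute });

  const factory = mod.exports;
  if (typeof factory !== 'function') {
    throw new TypeError(`${filename} must export a function`);
  }

  // `await` handles plain strings as well as returned Promises
  const code = await factory.call(context);
  if (typeof code !== 'string') {
    throw new TypeError(`${filename} must produce a string of code`);
  }
  return code;
}

// usage: logs the generated module code
runCodegen("module.exports = () => 'export default 1;';", 'one.codegen').then(console.log);
```

Because the result is awaited, the same helper also covers the asynchronous factories discussed next.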

Since many bundlers allow parallel transformations, we should also make the API as asynchronous as possible. A good step in this direction is to allow the code transformer to return a Promise. We could rewrite our previous example as:

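```javascript
// info.codegen
const { readFile } = require('fs/promises');
const { resolve } = require('path');

module.exports = async function () {
  // fs resolves relative paths against process.cwd(), not against this file;
  // here we assume the bundler runs from the project root
  const content = await readFile(resolve(process.cwd(), 'package.json'), 'utf8');
  const { name, version } = JSON.parse(content);

  return `
export const packageId = ${JSON.stringify(`${name}@${version}`)};
`;
};
```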

This lets you use asynchronous APIs to construct modules dynamically. Let's see an example in action.

Example: Getting Asynchronous Infos

Let's say we have a small static website that is rebuilt every day (periodic CI/CD). At build time an API is called, and the result drives the build.

How would you construct such a thing? One way is to have a shell script do the download. You can then use the downloaded assets directly in your code base (essentially bundling them in). Alternatively, you bring the assets into the desired form up front, e.g., into some React code, so that there is less work to do at runtime later on.

While this is a suitable solution, it is neither the most elegant nor the most robust one. For instance, which language do you use for the script file? If it's bash or PowerShell, the file might not run on other developers' systems. Okay, so you've chosen JavaScript, and that mostly works, as the rest of the tooling uses it too. Even then, running this script and bundling are two separate commands. Okay, so you somehow bring the script into the bundler. But then how do you reference the output? Another .gitignore rule... And on first-time use, TypeScript will show errors...

Using a .codegen file now allows you to bring this into your bundler pipeline - including caching, re-evaluation, and hot reloading.

Here's some example code:

```tsx
// App.tsx
import Pictures from './pictures.codegen';

export default () => (
  <>
    <div>Some content</div>
    <Pictures />
  </>
);
```

```javascript
// pictures.codegen
const { default: axios } = require('axios');

module.exports = async function () {
  const { data } = await axios.get('https://jsonplaceholderapi.com/');
  const urls = data.thumbnailUrls.slice(0, 50);

  // join the elements explicitly; interpolating the array directly would
  // separate them with commas, which would show up as text in the JSX
  const images = urls.map((url) => `<img src={${JSON.stringify(url)}} />`).join('\n');

  return `
export default function Pictures() {
  return (
    <div>
      ${images}
    </div>
  );
}
`;
};
```

The idea here is to generate everything necessary up front, so that no additional work has to be done at runtime.
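Generated strings are easy to get wrong. For example, an array interpolated into a template literal without .join ends up comma-separated. One option is to keep the HTTP request apart from a pure function that only renders the module source. The sketch below is hypothetical: the name `renderPicturesModule` and its `limit` parameter are not part of any plugin API. pictures.codegen could then simply return `renderPicturesModule(data.thumbnailUrls)`:

```javascript
// Hypothetical helper: renders the source of the Pictures module from a list
// of URLs. Keeping it free of network access makes it easy to test.
function renderPicturesModule(urls, limit = 50) {
  const images = urls
    .slice(0, limit)
    // JSON.stringify yields a properly escaped string literal for the JSX expression
    .map((url) => `      <img src={${JSON.stringify(url)}} />`)
    .join('\n');

  return `export default function Pictures() {
  return (
    <div>
${images}
    </div>
  );
}
`;
}

console.log(renderPicturesModule(['https://example.com/a.png', 'https://example.com/b.png']));
```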

Example: Building a Media Library

The previous approach can also be used to make certain images / media assets available in a much simpler form. Usually, your bundler requires you to write something like

```tsx
import MyImage from '../assets/my-image.png';

// ...

<img src={MyImage} />
```

But that breaks down when you want something like

```tsx
const userAvatar = user.avatar;
const image = images[userAvatar];

<img src={image} />
```

Now, of course, you can go the hard way and define images like this

```javascript
import Hulk from '../assets/avatars/hulk.png';
import Ironman from '../assets/avatars/ironman.png';
import Spiderman from '../assets/avatars/spiderman.png';
// ...

const images = {
  hulk: Hulk,
  ironman: Ironman,
  spiderman: Spiderman,
  // ...
};
```

but that is not only very verbose, it is also not very flexible. A new avatar was added? Well, good luck remembering that you need to change the file above, and in two places.

To simplify this, Webpack supports require.context, but it's rather limited and only available in Webpack. A better and more flexible alternative is to use .codegen here.

```javascript
import { images } from './media.codegen';

// media.codegen
const { readdir } = require('fs/promises');
const { resolve } = require('path');

module.exports = async function () {
  const assets = resolve(process.cwd(), 'src', 'assets');
  const avatarsDir = resolve(assets, 'avatars');
  const avatars = (await readdir(avatarsDir)).filter((a) => a.endsWith('.png'));

  // one import per file, using generated identifiers (_0, _1, ...)
  const usedImports = avatars.map(
    (a, i) => `import _${i} from ${JSON.stringify(resolve(avatarsDir, a))};`,
  );
  // quoted keys derived from the file name without the extension
  const images = avatars.map((a, i) => `${JSON.stringify(a.replace(/\.png$/, ''))}: _${i}`);

  return `
${usedImports.join('\n')}

export const images = {
  ${images.join(',\n  ')}
};
`;
};
```

Importantly, the example above generates a set of imports that use identifiers like _0, _1, ..., which pretty much ensures that there are no conflicts. Otherwise, we'd need to generate identifiers from the file names that are not only valid but also non-conflicting. For the actual key names, however, we still use the file name without the extension, which may be problematic. Therefore, instead of generating keys like

```javascript
const images = {
  foo: _0,
  bar: _1,
};
```

we quote each key as a string to avoid issues with the name (e.g., my-asset.png would otherwise produce the invalid key my-asset):

```javascript
const images = {
  "foo": _0,
  "bar": _1,
  "foo-bar": _2,
};
```

Knowing such tricks / concepts is quite handy when generating code reliably.
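Both tricks can be combined while keeping the string building separate from the directory listing, which makes the generated code easy to inspect. Here is a sketch using a hypothetical `makeImagesModule` function that receives the file names as an argument instead of reading them from disk:

```javascript
const { join } = require('path');

// Hypothetical, pure variant of media.codegen: builds the module source from a
// directory path and a list of file names (no file system access).
function makeImagesModule(dir, files) {
  const pngs = files.filter((f) => f.endsWith('.png'));
  // generated identifiers (_0, _1, ...) cannot clash with each other
  const imports = pngs.map((f, i) => `import _${i} from ${JSON.stringify(join(dir, f))};`);
  // quoted keys, so that names like "foo-bar" stay valid object keys
  const entries = pngs.map((f, i) => `  ${JSON.stringify(f.replace(/\.png$/, ''))}: _${i},`);

  return [...imports, '', 'export const images = {', ...entries, '};', ''].join('\n');
}

console.log(makeImagesModule('/app/src/assets/avatars', ['foo.png', 'bar.png', 'foo-bar.png', 'notes.txt']));
```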

Example: Automatically Generating Routing Information

One feature that many web frameworks / static-site generators (e.g., Next.js, Remix, Astro, ...) share is file system-based routing: each file in a dedicated directory automatically becomes a route. Suppose you have a file system structure like

```text
root
+ pages
  + blogs
    - index.tsx
    - first.tsx
    - second.tsx
  + internal
    - first.tsx
    - other.tsx
  - index.tsx
  - about.tsx
```

With .codegen you can make this work in any codebase. Let's see what this could look like:

```tsx
// App.tsx
import { BrowserRouter } from 'react-router-dom';
import Routes from './routes.codegen';

export default () => (
  <BrowserRouter>
    <Routes />
  </BrowserRouter>
);

// example: pages/blogs/index.tsx
export default function Page() {
  // content
}
```

```javascript
// routes.codegen
const { readdir, stat } = require('fs/promises');
const { resolve, parse } = require('path');

// Turns a file or directory name into a URL-friendly route segment
function makeSegment(name, sep = '-') {
  return name
    .normalize('NFD')
    .replace(/[\u0300-\u036f]/g, '') // strip diacritics
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9 ]/g, '')
    .replace(/\s+/g, sep);
}

// Recursively collects { route, path } pairs for all .tsx files below baseDir
async function collectPages(baseDir, baseRoute) {
  const pages = [];
  const names = await readdir(baseDir);

  for (const name of names) {
    const path = resolve(baseDir, name);
    const info = await stat(path);

    if (info.isDirectory()) {
      pages.push(...(await collectPages(path, `${baseRoute}/${makeSegment(name)}`)));
    } else if (name === 'index.tsx') {
      pages.push({ route: `${baseRoute}/`, path });
    } else if (name.endsWith('.tsx')) {
      pages.push({ route: `${baseRoute}/${makeSegment(parse(path).name)}`, path });
    }
  }

  return pages;
}

module.exports = async function () {
  const pages = await collectPages(resolve(process.cwd(), 'pages'), '');

  // declare each lazy component once at module level; creating it inside
  // render would produce a new component type (and reload it) on every render
  const components = pages.map(
    ({ path }, i) => `const Page${i} = React.lazy(() => import(${JSON.stringify(path)}));`,
  );
  const routes = pages.map(
    ({ route }, i) => `<Route path=${JSON.stringify(route)} element={<Page${i} />} />`,
  );

  // lazy components need a Suspense boundary above them
  return `
import * as React from 'react';
import { Route, Routes } from 'react-router-dom';

${components.join('\n')}

export default () => (
  <React.Suspense fallback={null}>
    <Routes>
      ${routes.join('\n')}
    </Routes>
  </React.Suspense>
);
`;
};
```

There is quite a bit going on, but most of the logic just reads the directories and the files they contain, turns them into routes, and converts everything into a proper React representation. Every route is lazy loaded to keep the initial bundle small.
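The path-to-route mapping can also be isolated into a small pure function. This is handy for checking the generated paths without touching the file system. In the sketch below, `toRoute` is a hypothetical name, and it expects paths relative to pages/ with / as the separator:

```javascript
const { posix } = require('path');

// Same normalization as makeSegment in routes.codegen
function makeSegment(name, sep = '-') {
  return name
    .normalize('NFD')
    .replace(/[\u0300-\u036f]/g, '') // strip diacritics
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9 ]/g, '')
    .replace(/\s+/g, sep);
}

// Hypothetical helper: maps a page file (relative to pages/) to its route
function toRoute(relativeFile) {
  const { dir, name } = posix.parse(relativeFile);
  const base = dir
    .split('/')
    .filter(Boolean)
    .map((segment) => `/${makeSegment(segment)}`)
    .join('');
  // index files map to the directory itself, e.g. blogs/index.tsx -> /blogs/
  return name === 'index' ? `${base}/` : `${base}/${makeSegment(name)}`;
}

console.log(['index.tsx', 'about.tsx', 'blogs/index.tsx', 'blogs/first.tsx'].map(toRoute));
```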

(Yes, you might even want to make this non-static / allow dynamic routing with parameters, e.g., a file index[id].tsx would be a route to /:id - but I left that out for simplicity.)

These examples are only meant to give you an impression. There is much more you can do with codegen, and presumably in much better and more elaborate ways than what I've shown here.

Bundler Support

Right now, support for codegen exists in the following bundlers.

- Parcel v1 (parcel-plugin-codegen)

- Parcel v2 (parcel-transformer-codegen)

- esbuild (esbuild-codegen-plugin)

- Webpack (v4 and v5) (parcel-codegen-loader)

- Vite (vite-plugin-codegen)

- Rollup (rollup-plugin-codegen)

Examples for each bundler are included in their package descriptions. The .codegen content does not change - the API remains the same across the different bundlers.

For instance, to integrate support for .codegen files in Webpack (v5), you first need to add the dependency.
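The configuration itself is not shown here, so the following is only a hedged sketch. It assumes parcel-codegen-loader is installed (e.g., `npm install parcel-codegen-loader --save-dev`) and that it is registered like any other Webpack loader without options. Please check the package README for the exact setup:

```javascript
// webpack.config.js (sketch; assumes the loader needs no options)
const config = {
  module: {
    rules: [
      {
        // hand every *.codegen file to the codegen loader
        test: /\.codegen$/,
        use: 'parcel-codegen-loader',
      },
    ],
  },
};

module.exports = config;
```

Some of the examples above generate JSX (the pictures and routes modules). In that case the generated code presumably also needs to pass through a JSX-capable loader. Since Webpack applies the loaders in a `use` array from right to left, something like `use: ['babel-loader', 'parcel-codegen-loader']` would run the codegen step first. Whether this chaining works depends on your setup.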

Unit Testing

Now that you have .codegen imports in your project, can you still use standard unit testing tools such as Jest to run your tests? Yes, definitely. There are multiple ways.

- Just mock the import. As with the dedicated .d.ts files (see the recipes below), you can introduce a mock explicitly (e.g., using jest.mock) or implicitly (e.g., with a satellite .js file that the unit test runner would prefer).

- Use a dedicated transformer.

Unfortunately, at the time of writing, Jest supported either synchronous transformers only, or asynchronous transformers when everything was available / running in ESM format.

A synchronous transformer in Jest looks like:

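```ts
import { transformSync } from '@babel/core';
import { SyncTransformer } from '@jest/transform';

const codegen: SyncTransformer = {
  process(_: string, filename: string) {
    // drop a cached copy so that changes to the .codegen file are picked up
    delete require.cache[require.resolve(filename)];
    const factory = require(filename);
    const result = factory.call({
      outDir: global.process.cwd(),
      rootDir: global.process.cwd(),
    });

    // a synchronous transformer cannot wait for an async factory
    if (typeof result !== 'string') {
      throw new Error(`${filename}: the codegen function must return a string synchronously for Jest`);
    }

    // transpile the generated ES module code to CommonJS for Jest
    const output = transformSync(result, {
      presets: [['@babel/preset-env', { modules: 'commonjs' }]],
    });

    return { code: output?.code ?? '' };
  },

  getCacheKey(_: string, filename: string) {
    // never cache: a random key forces a fresh transformation every time;
    // you can choose a different strategy, e.g., hashing the file content
    const rnd = Math.random().toString();
    return `${filename}?_=${rnd}`;
  },
};

export default codegen;
```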

Now you can register the transformer (here assumed to be compiled to codegen.js) in the transform section of your Jest configuration:

```javascript
// jest.config.js
const { resolve } = require('path');

const config = {
  // ...
  transform: {
    '^.+\\.codegen$': resolve(__dirname, 'codegen.js'),
  },
};

module.exports = config;
```

IDE Support / VS Code

Editor support is actually quite simple: just set the language mode to JavaScript (js) and you are good to go. Some editors let you configure a mapping, e.g., mapping .codegen to js or making .codegen an alias of .js. In VS Code, for example, the files.associations setting can map "*.codegen" to "javascript". In any case, that's all you get for the moment.

In the future I think there might be dedicated support - even to the point where the generated code could be inspected upfront and potential syntax errors (or general issues) could be detected before the bundler runs.

Recipes

TypeScript

If you want TypeScript support, all you need is a .d.ts file next to your .codegen file. For instance, in the initial example we came up with info.codegen. You would add an info.codegen.d.ts next to it with the following content:

```ts
export const packageId: string;
```

would be all that's needed for supporting TypeScript. Now, TypeScript knows that we can import the .codegen file and actually "knows" what the content will be.

Variable Output

Right now a codegen file is still just a single module. Importing './my.codegen' from two different modules therefore has no additional effect: it's only evaluated once. Is there a way to reuse one codegen module to generate different modules depending on the context? In Webpack there could be, but in general there is not, so the codegen bundler plugins do not support this directly. This is, however, not the end of the story.

What you can do is generate additional (physical) modules and reference those. As an example, let's say you have two modules (a.ts and b.ts), and both need the rendered content of a markdown document (a.md and b.md, respectively). Having two .codegen files (a.codegen and b.codegen) would not be very nice, since both would contain pretty much the same instructions. Instead, we'd like to have only a single one (md.codegen). But we cannot parameterize it, right?

So we cannot do

```ts
// a.ts
import content from './md.codegen?file=a.md';
// ...
```

and

```ts
// b.ts
import content from './md.codegen?file=b.md';
// ...
```

(Sure, as mentioned we can make this work in Webpack, but generalizing it to other bundlers is difficult.)

Instead, you could maybe do something like

```ts
// a.ts
import { aContent } from './md.codegen';
// ...
```

and

```ts
// b.ts
import { bContent } from './md.codegen';
// ...
```

The trick is to generate the exports dynamically, e.g.:

```javascript
// md.codegen
const { readdir } = require('fs/promises');
const { resolve } = require('path');
// your own helper (not shown); assumed to resolve to { name, content }
const convertMarkdown = require('./mdConverter');

module.exports = async function () {
  const dir = resolve(process.cwd(), 'docs');
  const docsFiles = await readdir(dir);
  const mdFiles = docsFiles.filter((m) => m.endsWith('.md')).map((f) => resolve(dir, f));
  const result = await Promise.all(mdFiles.map((file) => convertMarkdown(file)));

  // returns something like:
  // export const aContent = "<div>my markdown content</div>";
  // export const bContent = "<div>your markdown content</div>";
  return result
    .map(({ name, content }) => `export const ${name}Content = ${JSON.stringify(content)};`)
    .join('\n');
};
```

Since the generated module is side-effect free, you get tree shaking, which removes unused exports. Such a parameterization might therefore be more expensive at build time, but it does not add to the runtime cost.
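The generated export names are only valid if the document names happen to be valid identifiers. A file like getting-started.md would produce the invalid name getting-startedContent. Below is a sketch of one way to guard against this, leaving out the file system part. `toIdentifier` and `makeContentModule` are hypothetical helpers, and ASCII file names are assumed:

```javascript
// Hypothetical helper: turns a name such as "getting-started.md" into an
// identifier prefix such as "gettingStarted" (assumes ASCII names)
function toIdentifier(name) {
  const words = name
    .replace(/\.md$/, '')
    .split(/[^A-Za-z0-9]+/)
    .filter(Boolean);
  const id = words
    .map((w, i) => (i === 0 ? w[0].toLowerCase() : w[0].toUpperCase()) + w.slice(1))
    .join('');
  // identifiers must not start with a digit
  return /^[0-9]/.test(id) ? `_${id}` : id;
}

// Builds the module source from already converted documents ({ name, content })
function makeContentModule(docs) {
  return docs
    .map(({ name, content }) => `export const ${toIdentifier(name)}Content = ${JSON.stringify(content)};`)
    .join('\n');
}

console.log(makeContentModule([{ name: 'getting-started.md', content: '<h1>Hi</h1>' }]));
```

The fixed Content suffix also keeps names like class.md from turning into a reserved word.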

Lazy Loading

Changing the previous problem statement to a question regarding runtime behavior we might end up with a demand for lazy loading. That is, we actually would want to write something like

```ts
// a.ts
import('./md.codegen?file=a.md').then(({ default: content }) => {
  // ...
});
```

As mentioned before, we cannot parameterize such calls. Also, tree shaking works a bit differently for lazy imports, so we need a different strategy here. The idea is to have the following:

```ts
// a.ts
import { loadA } from './md.codegen';

loadA().then(({ default: content }) => {
  // ...
});
```

Now we only need to generate the lazy loading in the .codegen file. The idea is to have:

```javascript
// md.codegen
const { readdir } = require('fs/promises');
const { resolve } = require('path');
const { upperFirst, camelCase } = require('lodash');
// your own helper (not shown); assumed to resolve to { name, path }
const convertMarkdown = require('./mdConverter');

module.exports = async function () {
  const dir = resolve(process.cwd(), 'docs');
  const docsFiles = await readdir(dir);
  const mdFiles = docsFiles.filter((m) => m.endsWith('.md')).map((f) => resolve(dir, f));
  const result = await Promise.all(mdFiles.map((file) => convertMarkdown(file)));

  // returns something like:
  // export const loadA = () => import("../temp/md_a.ts");
  // export const loadB = () => import("../temp/md_b.ts");
  return result
    .map(({ name, path }) => `export const load${upperFirst(camelCase(name))} = () => import(${JSON.stringify(path)});`)
    .join('\n');
};
```

In the code above, we assume that convertMarkdown no longer (or not only) gives us the content. Instead, it also writes the content to a temporary file with the content as its default export, and gives us the path to that file. This file can then be referenced from our generated module, making it fully lazy-loadable from the bundler's perspective.
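The same identifier concerns apply to the lazy variant. The sketch below builds the loader exports without lodash. `makeLoaderModule` is a hypothetical name, and each path is assumed to point to a generated module that default-exports the content:

```javascript
// Hypothetical helper: builds one lazy loader export per converted document
function makeLoaderModule(entries) {
  return entries
    .map(({ name, path }) => {
      // "getting-started" -> "gettingStarted"
      const id = name.replace(/[^A-Za-z0-9]+(.)?/g, (_, c) => (c ? c.toUpperCase() : ''));
      const fn = `load${id.charAt(0).toUpperCase()}${id.slice(1)}`;
      // JSON.stringify keeps absolute paths and Windows backslashes valid
      return `export const ${fn} = () => import(${JSON.stringify(path)});`;
    })
    .join('\n');
}

console.log(makeLoaderModule([{ name: 'a', path: '../temp/md_a.ts' }, { name: 'b', path: '../temp/md_b.ts' }]));
```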

Conclusion

In this post I've gone over a quite simple, yet powerful plugin that is available for most bundlers (and could presumably be added easily to the missing ones): Codegen. It allows you to create a (virtual) module on the fly, using the full Node.js ecosystem.

Codegen gives you the bundler-agnostic superpowers that are needed to build great applications today and in the future. The only question is: What will you build?
