GitHub Actions makes CI very convenient for projects hosted on GitHub, but the default GitHub-hosted runner (2C7G) is underpowered, and compiling large projects on it is very slow. This article describes how DeepFlow uses cheap, high-spec public cloud Spot instances to accelerate GitHub Actions. After a series of explorations, we finally found a solution that meets all of our requirements for performance, cost, ARM support, and more. We hope it will be useful to you.

0x0: Problems with GitHub Actions

Ever since the DeepFlow source code was pushed to GitHub, our GitHub Actions compile jobs have taken too long because the hosted Runner is under-provisioned. Before that, our internal GitLab CI ran all of its jobs in a few minutes on Alibaba Cloud 32C ECI Spot instances (the specific method will be introduced in a separate article later). Having seen what Spot instances did for our GitLab CI, we started looking, from the first day DeepFlow's GitHub Actions went live, for a solution that assigns an independent Runner to each Job and supports both X86 and ARM64 architectures. The process was not smooth: it took five iterations before we finally found an ideal solution.

Problems DeepFlow encountered in the early days of using GitHub Actions:

- Poor performance: GitHub-hosted machines are under-provisioned, so the compilation phase takes too long

- Poor flexibility: a fixed self-hosted Runner cannot scale dynamically, so jobs often queue while the machine also sits idle for long periods, which makes an annual or monthly subscription poor value

- High cost: ARM64 compilation requires a separate machine, Alibaba Cloud has no ARM64 instances in overseas regions, and AWS's ARM64 machines on annual or monthly subscription are relatively expensive (about $500 per month for 32C64G)

- Network instability: GitHub-hosted Runners often time out when pushing images to the Alibaba Cloud image registry in China

Our needs:

- Support auto scaling, assign at least one Runner with at least 32 cores to each Job

- Works in much the same way as a GitHub-hosted Runner

- Supports both X86 and ARM64 architectures

- Low maintenance cost, preferably no maintenance

- Fast, stable image pushes to the Alibaba Cloud image registry in China

0x1: Exploring ways to accelerate GitHub Actions

Based on our needs and the GitHub Actions community documentation, we found several candidate solutions:

- K8s Controller: Kubernetes controller for GitHub Actions self-hosted runner

- Terraform: Autoscale AWS EC2 as GitHub Runner with Terraform and AWS Lambda

- GitHub: the paid Larger Runners service, currently open only to GitHub Team and Enterprise organizations

- Cirun: Automatically scale VMs of cloud platforms such as AWS/GCP/AZURE/OpenStack as GitHub Runner

| | K8s Controller | Terraform | GitHub Larger Runners | Cirun |
|---|---|---|---|---|
| Runner | Container | Linux, Windows | Linux, Windows, Mac | Linux, Windows, Mac |
| Supported cloud platforms | Kubernetes | AWS | - | AWS, GCP, Azure, OpenStack |
| ARM64 support | Supported | Supported | Not supported | Supported |
| Spot support | Not supported | Supported | - | Supported |
| Deployment and maintenance cost | Medium | High | None | None |

The first solution we tried was K8s Controller. After trying it out, we found the following defects:

- AWS EKS Fargate does not support Privileged Containers

- AWS EKS Fargate does not support ARM64 architecture

- AWS EKS Fargate does not support generic ephemeral volumes

If Fargate cannot be used, dedicated nodes must be provisioned, and then there is no way to scale the nodes dynamically or to use pay-as-you-go and Spot instances.

Next we tried the Terraform solution, but also encountered some setbacks:

- Installation and deployment failed multiple times

- Not friendly to Terraform newcomers

- There is a maintenance cost

The GitHub solution does not support ARM64 instances, so we ruled it out immediately.

In the end we chose Cirun:

Cirun: Supports customization of arbitrary machine specifications, architectures and images. It is free for open source projects, does not require deployment and maintenance, does not require additional resources, and is very simple to operate:

Step 1: Install the App

Install the Cirun App from GitHub Marketplace

Step 2: Add Repo

Add the required repo in the Cirun console

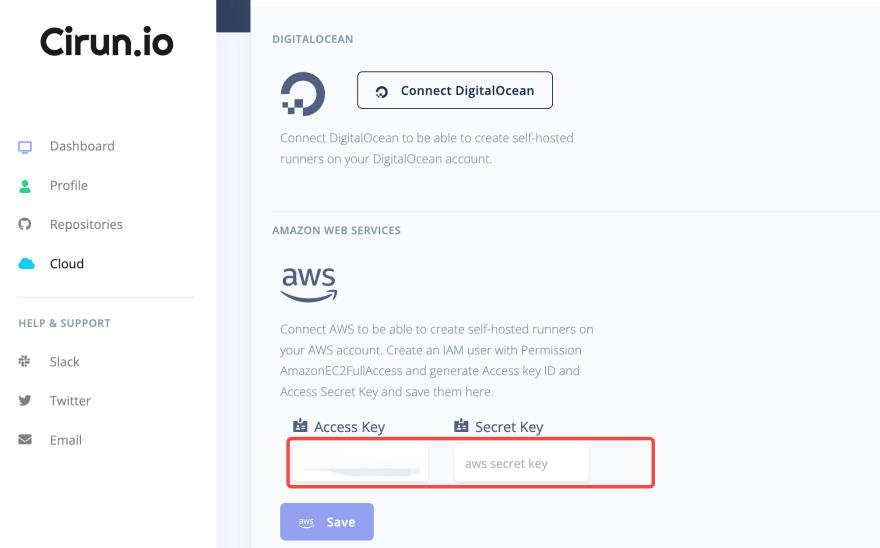

Step 3: Configure AK/SK

Configure the AWS Access Key and Secret Key in the Cirun console

Step 4: Configure Machine Specifications

Machine specifications and Runner labels are defined in the GitHub repo

    runners:
      - name: "aws-amd64-32c"
        cloud: "aws"
        instance_type: "c6id.8xlarge"
        machine_image: "ami-097a2df4ac947655f"
        preemptible: true
        labels:
          - "aws-amd64-32c"
      - name: "aws-arm64-32c"
        cloud: "aws"
        instance_type: "c6g.8xlarge"
        machine_image: "ami-0a9790c5a531163ee"
        preemptible: true
        labels:
          - "aws-arm64-32c"

Step 5: Getting Started

Switch the runs-on field of the GitHub Job to the Cirun Runner label

    jobs:
      build_agent:
        name: build agent
        runs-on: "cirun-aws-amd64-32c--${{ github.run_id }}"
        steps:
          - name: Checkout
            uses: actions/checkout@v3
            with:
              submodules: recursive
              fetch-depth: 0
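To build for both architectures from one job definition, the same `runs-on` pattern can be combined with a GitHub Actions matrix. The following is a minimal sketch, not DeepFlow's actual workflow: it assumes the two Runner labels from Step 4 (`aws-amd64-32c` and `aws-arm64-32c`), and the `arch` matrix key and the final `Show architecture` step are hypothetical names added for illustration.

```yaml
jobs:
  build_agent:
    name: build agent (${{ matrix.arch }})
    strategy:
      matrix:
        # Hypothetical matrix key; each value selects one of the labels from Step 4
        arch: [amd64, arm64]
    # Expands to cirun-aws-amd64-32c--<run_id> or cirun-aws-arm64-32c--<run_id>
    runs-on: "cirun-aws-${{ matrix.arch }}-32c--${{ github.run_id }}"
    steps:
      - name: Checkout
        uses: actions/checkout@v3
        with:
          submodules: recursive
          fetch-depth: 0
      # Illustrative step: prints x86_64 or aarch64 to confirm which Runner was assigned
      - name: Show architecture
        run: uname -m
```

With this layout, each matrix entry gets its own Runner, so the X86 and ARM64 builds run in parallel rather than one after the other.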

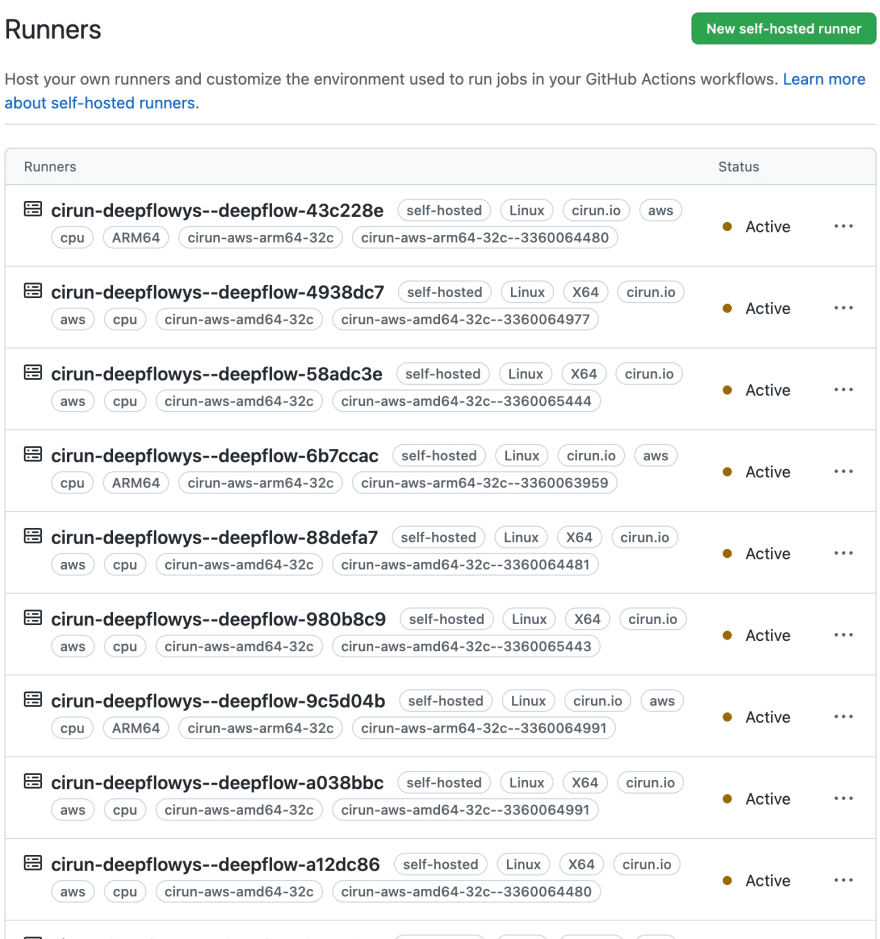

Final effect:

0x2: Changes after DeepFlow uses Cirun

Currently DeepFlow runs CI in parallel on AWS 32C64G Spot instances, at an average cost of about $300 per month. With a monthly subscription, the same budget would only cover two 16C32G X86/ARM64 instances, and parallel jobs would have to wait in a long queue.

DeepFlow's main CI jobs have been switched to Cirun Runners, meeting all of our earlier expectations:

- Performance: deepflow-agent compilation time is reduced by 40%, and CI Jobs no longer queue

- Cost: our GitHub Actions switched from two fixed 16C32G machines to one 32C64G AWS Spot EC2 instance per Job, at almost the same cost

- Flexibility: switch between ARM64 and X86 instances seamlessly

- Maintenance cost: no maintenance cost and no additional resource cost

- Network: thanks to AWS's excellent network, pushes to the Alibaba Cloud image registry in China fail far less often than with GitHub-hosted Runners

0x3: Future Outlook

We also ran into some problems while using Cirun, all of which were well supported by its author; see Issues for details.

There is also some work in progress:

- Optimize CI duration and fix abnormally slow steps

- Spot requests for AWS ARM64 machines often fail because of insufficient capacity. For now, the ARM64 Runner does not use Spot Instances; we will switch to Spot Instances once the Cirun author fixes the problem

- Shorten the waiting time for jobs using Cirun Runners from the current 90 seconds to less than 30 seconds

- Add Cirun to the GitHub Actions documentation
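As an illustration of the ARM64 item above, the interim setup can be sketched by turning off Spot for that Runner only. This reuses the keys and values from Step 4 and assumes that `preemptible: false` makes Cirun request an On-Demand instance; that reading of the key is an assumption, not something confirmed in this article.

```yaml
runners:
  - name: "aws-arm64-32c"
    cloud: "aws"
    instance_type: "c6g.8xlarge"
    machine_image: "ami-0a9790c5a531163ee"
    # Assumption: false requests an On-Demand instance instead of Spot,
    # avoiding Spot request failures caused by insufficient ARM64 capacity
    preemptible: false
    labels:
      - "aws-arm64-32c"
```

Because the label stays the same, workflows that reference `aws-arm64-32c` would not need to change when the Runner is later switched back to Spot.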

0x4: What are Spot Instances

Quoting the introduction on the AWS official website:

- The only difference between On-Demand Instances and Spot Instances is that when EC2 needs more capacity, it interrupts Spot Instances with a two-minute notice. You can use EC2 Spot for a variety of fault-tolerant and flexible applications, such as test and development environments, stateless web servers, image rendering, video transcoding, to run analytics, machine learning, and high-performance computing (HPC) workloads. EC2 Spot also tightly integrates with other AWS products, including EMR, Auto Scaling, Elastic Container Service (ECS), CloudFormation, and more, giving you flexibility in how to launch and maintain applications running on Spot Instances.

- Spot Instances are a new way to buy and use Amazon EC2 instances. The spot price of Spot Instances changes periodically based on supply and demand. You can start Spot Instances directly, in much the same way as purchasing On-Demand Instances, and the price will be determined by supply and demand (not exceeding the On-Demand Instance price); users can also set a maximum price, and the instances will run for as long as that maximum price is higher than the current spot price. Spot Instances complement On-Demand and Reserved Instances and provide another option for obtaining compute capacity.

0x5: What is Cirun

Cirun is a way for developers and teams to run their CI/CD pipelines on their secure cloud infrastructure through GitHub Actions. This project aims to provide the freedom to choose cloud machines with any configuration, save money by using low-cost instances, and save time by enabling unlimited concurrency and high-performance machines, all through a simple developer-friendly yaml file implementation. It currently supports all major clouds including GCP, AWS, Oracle, DigitalOcean, Azure and OpenStack. Cirun is completely free for open source projects without any restrictions.

0x6: What is DeepFlow

DeepFlow is an open source, highly automated observability platform and a full-link, high-performance data engine. DeepFlow uses new technologies such as eBPF, WASM, and OpenTelemetry, and innovatively implements core mechanisms such as AutoTracing, AutoMetrics, AutoTagging, and SmartEncoding, helping developers raise the level of automation of code instrumentation and reduce the O&M complexity of the observability platform. Using DeepFlow's programmability and open interfaces, developers can quickly integrate it into their observability stack.

GitHub address: https://github.com/deepflowys/deepflow

Visit DeepFlow Demo to experience a new era of highly automated observability.
