Having worked with a few distributed systems over the years, such as Apache Kafka and Ethereum nodes, I was wondering what it would take to build a minimal one. In this article we’ll build a micro “Kafka-like” distributed logging app with super simple features, using Go and HashiCorp Consul. You can follow along using this GitHub repository.

What is Consul?

Looking at the definition in the intro section of the official website:

Consul is a service mesh solution providing a full featured control plane with service discovery, configuration, and segmentation functionality

That might sound a bit scary, but don’t leave just yet. The main Consul feature we’ll use in this article is the Key/Value store, which will support our simple Leader Election system.

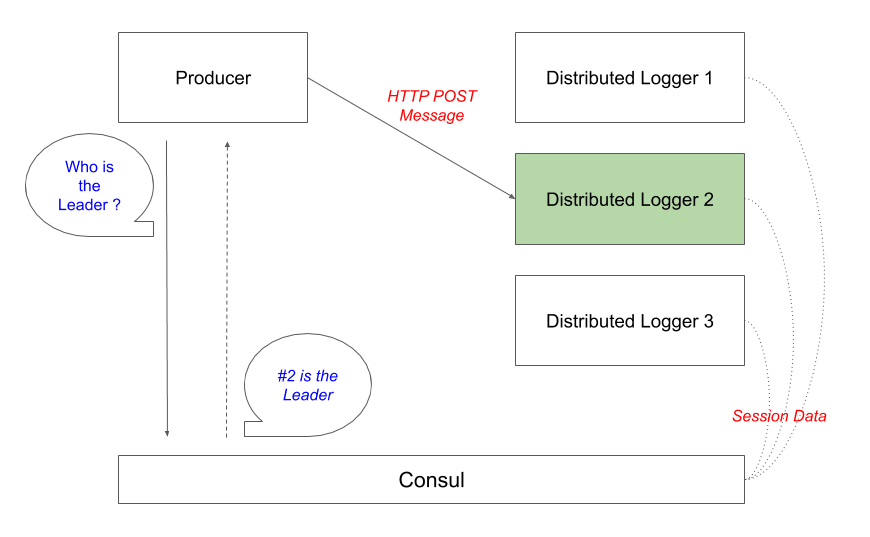

System Architecture

The diagram below shows the system’s architecture:

The system is composed of 3 parts:

- consul: a Consul instance that provides support for leader election and service discovery.

- distributed-logger: the Distributed Logger nodes expose a REST API that logs received messages to Stdout. Only the cluster leader accepts messages at any given time. If the leader node fails, another node takes over.

- producer: the producer queries Consul periodically to determine the distributed-logger leader and sends it a numbered message.
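To make the “only the leader accepts messages” rule concrete, here is a minimal sketch of what a distributed-logger endpoint could look like. This is not the code from the repo: the `Node` type, the `/log` path, port `3000` and the choice of a 503 response for followers are all assumptions made for illustration. It needs Go 1.19+ for `atomic.Bool`.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"sync/atomic"
)

// Node is a hypothetical distributed-logger instance.
type Node struct {
	isLeader atomic.Bool // flipped by the election loop (not shown here)
}

// accept handles one message and returns the HTTP status to reply with.
func (n *Node) accept(msg string) int {
	if !n.isLeader.Load() {
		// Followers refuse messages; 503 is an arbitrary choice for this sketch.
		return http.StatusServiceUnavailable
	}
	fmt.Println(msg) // "logging" simply means printing to Stdout
	return http.StatusOK
}

// handleLog is the REST endpoint that receives messages via POST.
func (n *Node) handleLog(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		w.WriteHeader(http.StatusMethodNotAllowed)
		return
	}
	body, err := io.ReadAll(r.Body)
	if err != nil {
		w.WriteHeader(http.StatusBadRequest)
		return
	}
	w.WriteHeader(n.accept(string(body)))
}

func main() {
	n := &Node{}
	http.HandleFunc("/log", n.handleLog) // "/log" is an assumed path
	log.Fatal(http.ListenAndServe(":3000", nil))
}
```

Keeping the decision in `accept` separates the leadership check from the HTTP plumbing, so the election loop only has to flip `isLeader` when the node gains or loses the lock.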

Demo

You can run the demo using docker-compose by pulling the repo and running:

$ docker-compose up -d --scale distributed-logger=3

What happens then is:

- The Consul instance is started.

- 3 distributed-logger instances are started, and each one registers a Session with Consul.

- A distributed-logger wins the election (in this case just because it’s faster in acquiring the lock from Consul) and becomes the Leader.

- The producer comes online, then polls Consul for the leader every 5 seconds and sends it a message.

- The leader receives each message and logs it to Stdout.

- If the leader dies or is killed via SIGTERM or SIGINT, a new distributed-logger node takes over as the leader and starts receiving messages from the producer.
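The election steps above map onto three calls of Consul’s HTTP API: create a Session, try to acquire a key with it, and destroy the Session on shutdown. The sketch below shows one way to wire them together using only the standard library. It is an illustration rather than the repo’s code: the key name, the node address, the 2-second retry interval and the `putFunc` indirection are assumptions.

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"fmt"
	"io"
	"log"
	"net/http"
	"os/signal"
	"syscall"
	"time"
)

// putFunc sends one HTTP PUT and returns the response body.
// It is a parameter so the helpers can be exercised without a live Consul.
type putFunc func(url string, body []byte) ([]byte, error)

// httpPut is the real transport, built on net/http.
func httpPut(url string, body []byte) ([]byte, error) {
	req, err := http.NewRequest(http.MethodPut, url, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("PUT %s: %s", url, resp.Status)
	}
	return io.ReadAll(resp.Body)
}

// createSession registers a Session with Consul and returns its ID.
func createSession(put putFunc, consul, name string) (string, error) {
	body, err := json.Marshal(map[string]string{"Name": name})
	if err != nil {
		return "", err
	}
	out, err := put(consul+"/v1/session/create", body)
	if err != nil {
		return "", err
	}
	var s struct{ ID string }
	if err := json.Unmarshal(out, &s); err != nil {
		return "", err
	}
	return s.ID, nil
}

// tryAcquire tries to lock key for session, storing value in it.
// Consul answers with a bare "true" or "false".
func tryAcquire(put putFunc, consul, key, session, value string) (bool, error) {
	out, err := put(consul+"/v1/kv/"+key+"?acquire="+session, []byte(value))
	if err != nil {
		return false, err
	}
	return string(bytes.TrimSpace(out)) == "true", nil
}

// destroySession invalidates the Session, which releases any lock it holds.
func destroySession(put putFunc, consul, session string) error {
	_, err := put(consul+"/v1/session/destroy/"+session, nil)
	return err
}

func main() {
	const (
		consul = "http://localhost:8500"             // assumed Consul address
		key    = "service/distributed-logger/leader" // hypothetical key name
		self   = "http://node-1:3000"                // hypothetical address of this node
	)
	// ctx is cancelled on SIGINT or SIGTERM.
	ctx, stop := signal.NotifyContext(context.Background(), syscall.SIGINT, syscall.SIGTERM)
	defer stop()

	session, err := createSession(httpPut, consul, "distributed-logger")
	if err != nil {
		log.Fatal(err)
	}
	defer destroySession(httpPut, consul, session) // give up leadership on exit

	ticker := time.NewTicker(2 * time.Second)
	defer ticker.Stop()
	for {
		leader, err := tryAcquire(httpPut, consul, key, session, self)
		log.Printf("leader=%v err=%v", leader, err)
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
		}
	}
}
```

Storing the node’s own address as the key’s value is what would let a producer find the leader later. Note that this sketch only covers graceful shutdown; a Session created with a TTL and renewed periodically would be one way to also handle a node that crashes without sending a signal.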

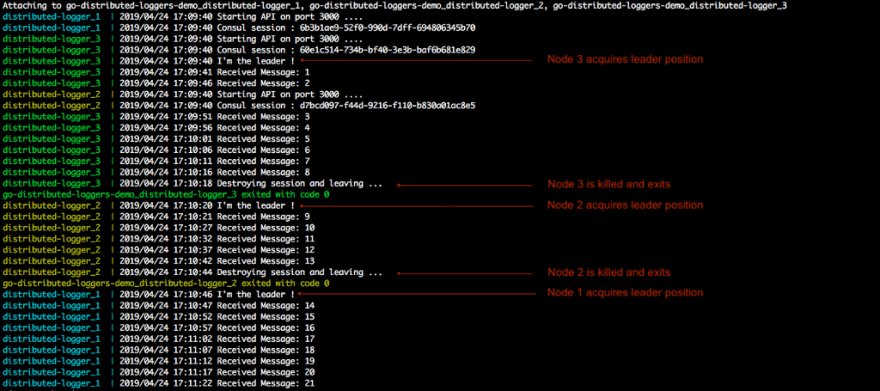

You can see an example below where Node 3 is the leader, then Node 2, then Node 1:

Show me the code

The full code is available in the repo, but let’s look at a couple of interesting aspects of the app:

- To acquire leader status, each distributed-logger node runs a goroutine that periodically tries to acquire a lock in Consul.

- When signaled with SIGINT or SIGTERM, a distributed-logger node destroys its Consul Session, which releases the lock and gives up Leader status if the node was the Leader.

- The producer runs a loop to discover the Leader node and send it messages.
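As an illustration of that last point, here is a rough sketch of a producer loop built on the standard library. It assumes the leader stores its own address as the value of the lock key and exposes a `/log` endpoint; the key name, the Consul address and the endpoint path are hypothetical and not taken from the repo. Consul’s KV read endpoint returns a JSON array whose entries carry a base64-encoded `Value` and, while the key is locked, the `Session` that holds it.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"log"
	"net/http"
	"strings"
	"time"
)

const (
	consulAddr = "http://localhost:8500"             // assumed Consul address
	leaderKey  = "service/distributed-logger/leader" // hypothetical key name
)

// parseLeader extracts the leader address from the body of GET /v1/kv/<key>.
// It returns "" when no Session currently holds the lock.
func parseLeader(kvJSON []byte) (string, error) {
	var entries []struct {
		Value   []byte // encoding/json decodes the base64 payload for us
		Session string // empty when nobody holds the lock
	}
	if err := json.Unmarshal(kvJSON, &entries); err != nil {
		return "", err
	}
	if len(entries) == 0 || entries[0].Session == "" {
		return "", nil
	}
	return string(entries[0].Value), nil
}

// findLeader asks Consul who currently holds the lock.
func findLeader() (string, error) {
	resp, err := http.Get(consulAddr + "/v1/kv/" + leaderKey)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode == http.StatusNotFound {
		return "", nil // the key has never been written
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return parseLeader(body)
}

func main() {
	n := 1 // number of the next message to send
	for {
		leader, err := findLeader()
		switch {
		case err != nil:
			log.Printf("consul lookup failed: %v", err)
		case leader == "":
			log.Print("no leader elected yet")
		default:
			msg := fmt.Sprintf("message %d", n)
			resp, err := http.Post(leader+"/log", "text/plain", strings.NewReader(msg))
			if err != nil {
				log.Printf("send failed: %v", err)
			} else {
				resp.Body.Close()
				n++
			}
		}
		time.Sleep(5 * time.Second) // polling interval from the demo
	}
}
```

Checking the `Session` field matters because, with Consul’s default session behavior, the key can outlive the Session that locked it, so a stale address may still be stored after the old leader is gone.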

Conclusion

Our little proof of concept is very far from being production-ready. Distributed systems is a vast field, and we are missing many aspects, such as replication, high availability and solid fault tolerance.

That being said, I hope that you have enjoyed this example and that it will help you start diving into this complex topic and, hopefully, start building your own distributed systems! Let me know if you have any questions or remarks.
