Introduction

I like "Awesome XYZ" lists on GitHub. They often provide a fantastic summary of the most important libraries and topics in a dev ecosystem. Often enough, though, the linked repositories are orphaned and have seen no activity in years. I therefore wrote a little browser extension for Firefox and Chrome that shows how many days have passed since a repository was last changed. It's free and open source - give it a try!

In this article, I'd like to walk through the source code of my first web extension and share my lessons learned.

Mozilla MDN is great

The Mozilla MDN documentation is great. I'm a mediocre JavaScript developer with zero experience in browser extension development. However, the documentation contains almost everything you need to get up and running quickly.

File Organization

The plugin consists of the following relevant files that I will discuss in this article:

- manifest.json

- addindicators.js

Extension Manifest

manifest.json lives in the root folder of the extension and contains important meta-data:

First I describe my extension and give it a nice icon. For the version field it's recommended to use semantic versioning.

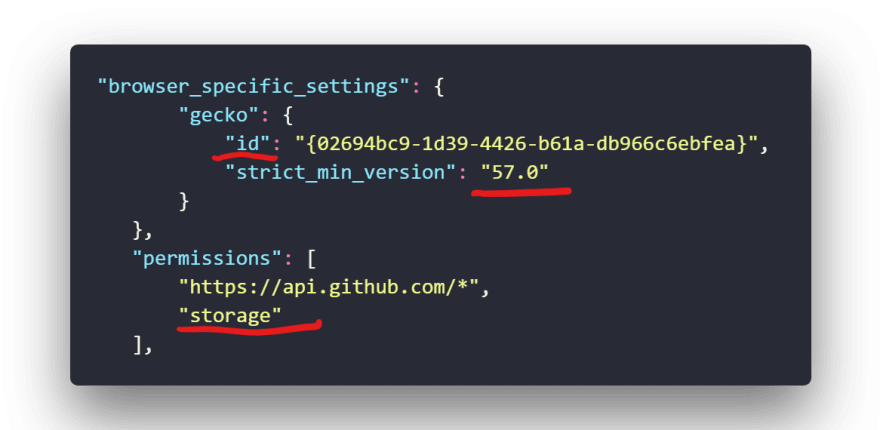

For Firefox some additional settings are required:

- Set id to a GUID. Even though the documentation lists it as optional, you should set it. Otherwise you will run into issues later on (e.g. you can't access local storage).

- Set strict_min_version to something fairly recent. Otherwise you will get warnings when you try to access APIs that are not available in earlier versions.

Note that Chrome will complain that it does not know about this section. I just ignored this warning.

In the permissions section you have to specify the permissions that the plugin requires. Plugins run with high privileges, so only ask for permissions you really need:

- I request access to all pages in the GitHub API subdomain. You can then just use fetch, and CORS does not get in your way.

- I also want to access local storage, so that I can save the settings of the plugin.

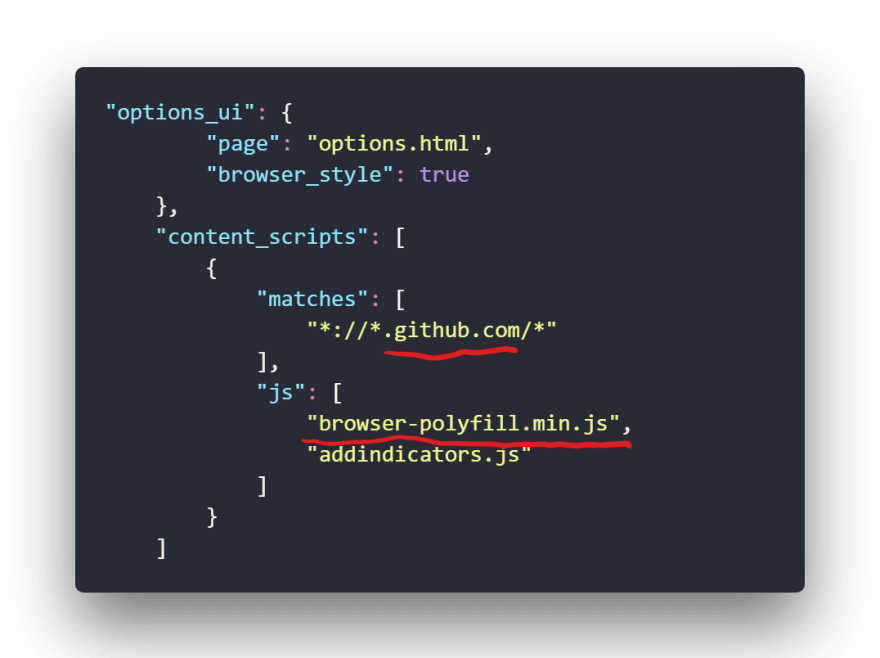

Within options_ui you define the HTML page that will be rendered as an iframe in the settings page of the plugin. In my plugin it allows you to set a custom GitHub API access token.

content_scripts allow you to inject custom JavaScript into arbitrary pages:

- matches looks for URL patterns. My plugin should only be activated on github.com.

- js lists the JavaScript files that are injected into these pages. addindicators.js is the interesting one. I've included a browser polyfill so that the web extension works on both Chrome and Firefox.

Lesson learned: The order of the scripts matters. At first I listed the polyfill after my script, and it took me a while to figure out why it wasn't working.
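To make the pieces of this section concrete, here is a rough sketch of what such a manifest could contain. It is written as a JavaScript object so that it can be run and inspected; the real manifest.json would hold the same keys as plain JSON. The name, description, icon path, GUID, minimum version, options page name, and polyfill file name are placeholders, not the values from the actual extension, and the sketch assumes a Manifest V2 layout with the Firefox settings under browser_specific_settings.

```javascript
// Sketch only: placeholder values, not the extension's real manifest.
const manifest = {
  manifest_version: 2, // assumption: Manifest V2 layout
  name: "Repository activity indicator", // hypothetical name
  version: "1.0.0", // semantic versioning
  description: "Shows how long ago a linked repository was last changed.",
  icons: { 48: "icons/icon-48.png" }, // hypothetical path

  // Firefox-only section; Chrome warns about it but still loads the extension.
  browser_specific_settings: {
    gecko: {
      id: "{00000000-0000-0000-0000-000000000000}", // placeholder GUID
      strict_min_version: "60.0", // assumed value
    },
  },

  // Only what the extension needs: the GitHub API host and storage.
  permissions: ["https://api.github.com/*", "storage"],

  options_ui: { page: "options.html" }, // hypothetical file name

  content_scripts: [
    {
      matches: ["https://github.com/*"],
      // Order matters: the polyfill has to be loaded before the script using it.
      js: ["browser-polyfill.js", "addindicators.js"],
    },
  ],
};

// Serialize to the JSON text that would go into manifest.json.
const manifestJson = JSON.stringify(manifest, null, 2);
console.log(manifestJson);
```

The js array is where the script-order lesson shows up: the files are injected in the order listed.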

Implementation

addindicators.js contains the implementation of the plugin. The general flow is very simple:

- Enable or disable debug logs

- Get the API key from the settings or use the default.

- Find all links to GitHub repositories

- Assemble a GraphQL query and query GitHub

- Add an indicator to each repository link

Let's look at some code:

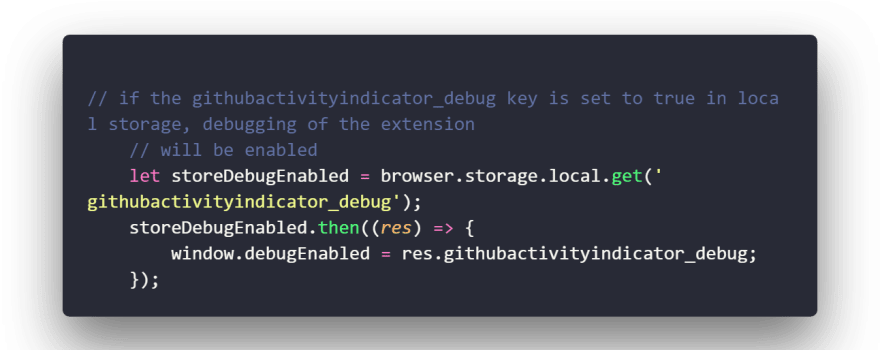

Debug Flags

I check if the debug flag is set in the browser local storage and set a global variable accordingly. Every dlog call you see later just checks the debugEnabled flag and either prints the message to the console or stays silent.

Lesson learned: The browser namespace will only work in Firefox unless you use the polyfill.

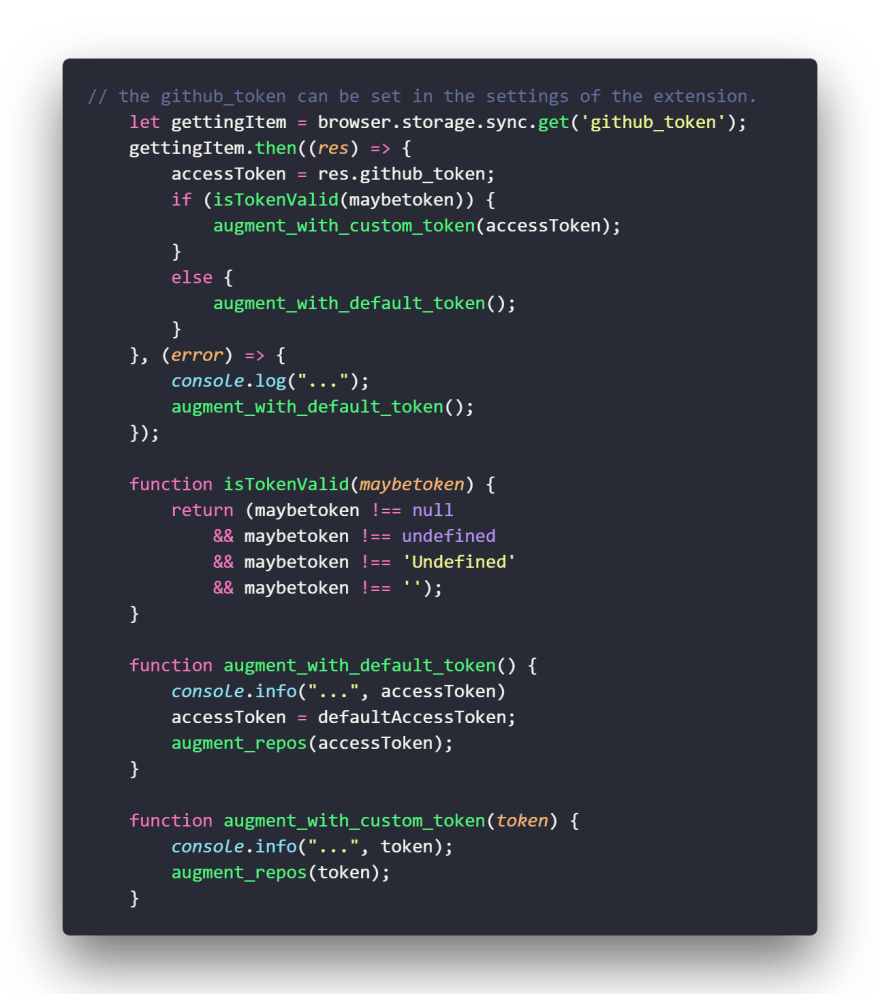
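A minimal sketch of such a debug switch could look like the following. It is not the extension's actual code: it assumes the flag is stored under a key named "debug" in a storage area such as browser.storage.local, and the function names makeDlog and loadDebugFlag are made up for illustration.

```javascript
// Builds a logger that only prints when the flag is set.
// The sink parameter exists so the logger can be tried without a console.
function makeDlog(debugEnabled, sink = console.log) {
  return (...args) => {
    if (debugEnabled) {
      sink("[indicator]", ...args);
    }
  };
}

// Reads the flag from a storage area such as browser.storage.local.
// Assumption: the flag lives under the key "debug" and is a boolean.
async function loadDebugFlag(storageArea) {
  const stored = await storageArea.get("debug");
  return stored.debug === true;
}

// Possible usage inside the content script (needs the polyfill in Chrome):
//   const dlog = makeDlog(await loadDebugFlag(browser.storage.local));
//   dlog("found links", links.length);
```

Passing the storage area in as a parameter keeps the helper independent of the browser global, which also makes it easy to try outside an extension.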

Getting the API token

It took me a surprising amount of code to either get the token from local storage (set via the settings dialog), or use the default token. Improvement suggestions are very welcome!

A few things to note:

- I use browser.storage.sync instead of browser.storage.local. This ensures that the token is synced across user devices (if enabled).

- In the isTokenValid function I also check for the string 'Undefined'. I need to do this because I was lazy in my settings dialog implementation...

- SECURITY REMARK: I created an unprivileged GitHub token for my account that I use as default token. In general this is a horrible security sin, so please don't do this at home. I just couldn't quickly find a more convenient way without doing the lovely OAuth dance.

- My function naming is neither consistent nor idiomatic throughout the whole codebase. Sorry for that.
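The token lookup described above can be sketched in a few lines. This is an illustration under assumptions, not the code from the extension: the storage key "token", the DEFAULT_TOKEN placeholder, and the getToken name are hypothetical, and only isTokenValid with its 'Undefined' check is taken from the description.

```javascript
// Placeholder only; a real default token should not be hard-coded like this.
const DEFAULT_TOKEN = "<default-token-placeholder>";

// A token counts as valid if it is a non-empty string and not the literal
// 'Undefined' that a careless settings dialog might have stored.
function isTokenValid(token) {
  return (
    typeof token === "string" &&
    token.trim() !== "" &&
    token !== "Undefined"
  );
}

// Reads the token from a storage area such as browser.storage.sync and
// falls back to the default. Assumption: the key is named "token".
async function getToken(storageArea) {
  const stored = await storageArea.get("token");
  return isTokenValid(stored.token) ? stored.token : DEFAULT_TOKEN;
}

// Possible usage in the content script:
//   const token = await getToken(browser.storage.sync);
```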

Find links to GitHub repositories

First I get all links on the page via document.getElementsByTagName and then filter out all links that are not links to GitHub repositories.

Lesson learned: At first glance, document.getElementsByTagName seems to return an array. You can even iterate over it with a for...of loop like this:

for(const x of document.getElementsByTagName('a')){

// do something

}

However, it's not a real array, which is why this fails:

// this will fail: the returned HTMLCollection has no map method

document.getElementsByTagName('a').map(x => x.href)

You have to use Array.from to change it into an array.

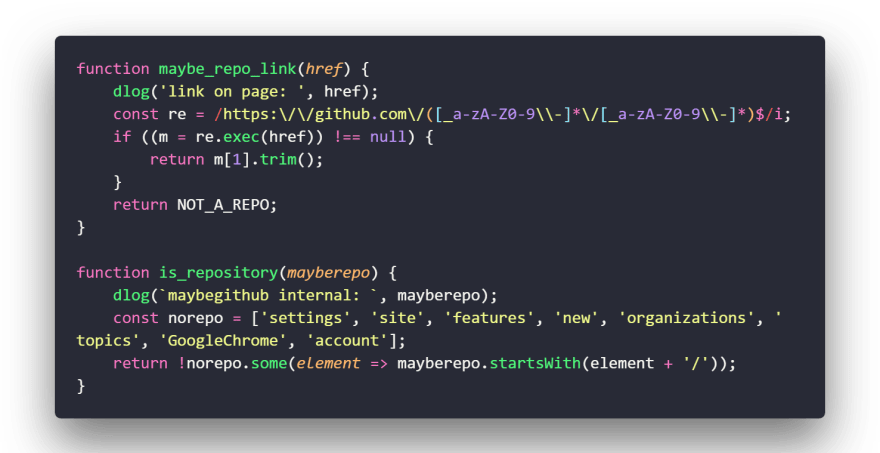
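As a small illustration of the Array.from conversion, the sketch below collects the href of every element in an array-like collection. The helper name hrefsOf and the fake collection are made up; the fake object only stands in for an HTMLCollection so that the example also runs outside a browser.

```javascript
// Works with any array-like or iterable collection, for example the
// HTMLCollection returned by document.getElementsByTagName('a').
// The second argument of Array.from maps each element while converting.
function hrefsOf(collection) {
  return Array.from(collection, (link) => link.href);
}

// Stand-in for an HTMLCollection: indexed entries plus a length property.
const fakeCollection = {
  length: 2,
  0: { href: "https://github.com/derhackler/catexcel" },
  1: { href: "https://example.com/" },
};

console.log(hrefsOf(fakeCollection));

// In the browser the call would be:
//   hrefsOf(document.getElementsByTagName('a'))
```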

In maybe_repo_link I keep only the GitHub URLs that match the owner/repository pattern.

Many GitHub internal links match the same pattern, so I wrote is_repository to catch at least a few more and avoid errors later on.
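One way to test for the owner/repository pattern is to parse the link with the URL class and look at the path segments. The function below is a hypothetical stand-in for maybe_repo_link, not its actual implementation; the name parseRepoLink and the returned object shape are assumptions.

```javascript
// Returns { owner, name } if the link looks like
// https://github.com/<owner>/<repository>, otherwise null.
function parseRepoLink(href) {
  let url;
  try {
    url = new URL(href);
  } catch {
    return null; // not an absolute URL
  }
  if (url.hostname !== "github.com") {
    return null;
  }
  // "/derhackler/catexcel/" -> ["derhackler", "catexcel"]
  const segments = url.pathname.split("/").filter(Boolean);
  if (segments.length !== 2) {
    return null;
  }
  return { owner: segments[0], name: segments[1] };
}

console.log(parseRepoLink("https://github.com/derhackler/catexcel"));
console.log(parseRepoLink("https://github.com/derhackler/catexcel/issues"));
```

Internal pages whose path also has exactly two segments still pass this check, which is why a second filter along the lines of is_repository is needed.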

Lesson learned: At first I tried to filter the internal URLs like this and was annoyed that it didn't work:

// this does not work!

norepo.forEach(x => {

if(mayberepo.startsWith(x)){

return false;

}

});

return true;

As very clearly described in the MDN documentation, forEach is useful if you're interested in triggering side effects. However, you can't break out of the loop. My return statement only returned from the callback, not from the enclosing function, so the function always returned true.

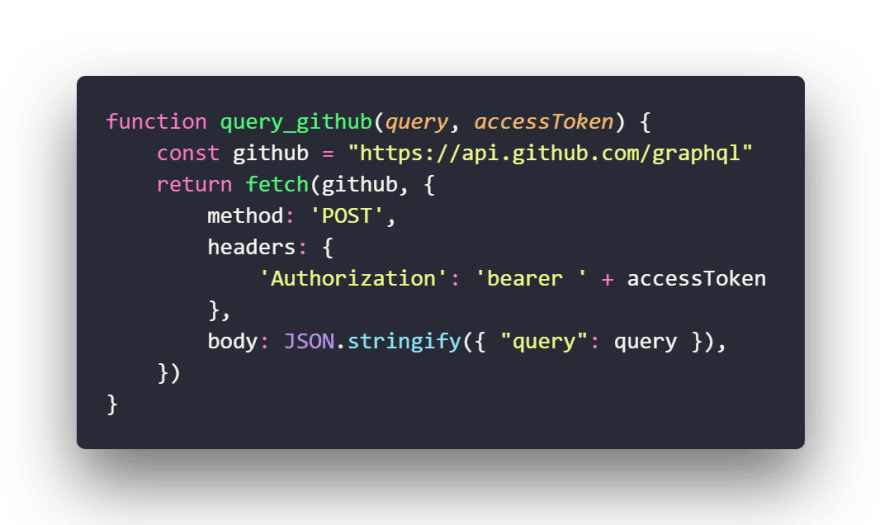
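The difference is easy to reproduce in isolation. The sketch below puts the broken forEach variant next to one possible fix based on Array.prototype.some, which stops at the first match and returns a boolean. The prefix list and the function names are hypothetical and not taken from the extension.

```javascript
// Hypothetical path prefixes that belong to GitHub pages, not repositories.
const NO_REPO_PREFIXES = ["orgs/", "settings/", "marketplace/"];

// Broken: the return inside the callback only ends that one callback call.
function isRepositoryBroken(maybeRepo) {
  NO_REPO_PREFIXES.forEach((prefix) => {
    if (maybeRepo.startsWith(prefix)) {
      return false; // discarded by forEach
    }
  });
  return true;
}

// Working: some() reports whether any prefix matched.
function isRepository(maybeRepo) {
  return !NO_REPO_PREFIXES.some((prefix) => maybeRepo.startsWith(prefix));
}

console.log(isRepositoryBroken("orgs/github")); // true, which is wrong
console.log(isRepository("orgs/github")); // false
console.log(isRepository("derhackler/catexcel")); // true
```

A plain for...of loop with an early return would work just as well; some is simply the shortest way to express "does any element match".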

Querying GitHub via GraphQL

GraphQL is a language for accessing your APIs. Compared to REST, you have more flexibility in defining what you want to query in a single call and which data should be included in the response.

I make use of the GitHub GraphQL API because it only takes a single HTTP call to query the information for all repositories on a page:

First I assemble the query, then I query Github, and finally I extract the information from the response.

I use a single GraphQL query to fetch the last repository push date of all repositories at once.

In graphql_fragment_for I assemble the query for a single repository. In order to have multiple repository clauses in one query, I need to prefix each of them with an arbitrary unique name (an alias).

Note the ...fields section: this states that at this position the fragment with the name "fields" should be inserted. Fragments are a little bit like macros and avoid repetition.

The return value of the function looks like this:

r0: repository(owner:"derhackler",name:"catexcel"){

...fields

},

SECURITY_ALERT: I use string concatenation to pass in owner and name. THIS IS DANGEROUS and in general a very bad idea. Think of SQL injection, but for your API. GraphQL supports the concept of variables, which should be used instead. I couldn't figure out how to use them in my scenario, though...

In graphql_for_repos, I assemble the subqueries for the repositories. In addition I ask for how many API calls I have left (for debugging purposes).
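For comparison, here is one way the combined query could be assembled with GraphQL variables instead of string concatenation. This is a sketch of an alternative, not the code of graphql_fragment_for or graphql_for_repos: the function name buildQuery and the variable naming scheme ($owner0, $name0, ...) are assumptions. It relies on the pushedAt field of Repository and on the rateLimit field of the GitHub GraphQL API.

```javascript
// Builds one query with an aliased repository clause per entry.
// Owner and name travel as variables, so they never become query text.
function buildQuery(repos) {
  if (repos.length === 0) {
    return null; // nothing to ask for; an empty variable list would be invalid
  }
  const variables = {};
  const declarations = [];
  const selections = [];
  repos.forEach((repo, i) => {
    variables[`owner${i}`] = repo.owner;
    variables[`name${i}`] = repo.name;
    declarations.push(`$owner${i}: String!, $name${i}: String!`);
    selections.push(
      `r${i}: repository(owner: $owner${i}, name: $name${i}) { ...fields }`
    );
  });
  const query = `query(${declarations.join(", ")}) {
  ${selections.join("\n  ")}
  rateLimit { remaining }
}
fragment fields on Repository { pushedAt }`;
  return { query, variables };
}

const built = buildQuery([{ owner: "derhackler", name: "catexcel" }]);
console.log(built.query);
console.log(built.variables);
```

The query and variables are then sent together in the request body, and the server substitutes the values itself.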

The actual query is an HTTP POST to the GraphQL endpoint of GitHub. Unlike REST APIs, GraphQL APIs are exposed via a single URL.
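A minimal sketch of that POST with fetch might look as follows. The helper names buildRequest and queryGithub are hypothetical, and the optional fetchImpl parameter exists only so that the function can be exercised with a fake fetch; the endpoint URL and the bearer authorization header follow the GitHub GraphQL API.

```javascript
const GITHUB_GRAPHQL_URL = "https://api.github.com/graphql";

// Assembles the fetch options: the query (and optional variables)
// go into a JSON body, the token into the Authorization header.
function buildRequest(token, query, variables = {}) {
  return {
    method: "POST",
    headers: {
      Authorization: `bearer ${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ query, variables }),
  };
}

// Sends the query and returns the "data" part of the response.
// Per-repository errors (such as a repository that does not exist) show up
// as null entries in data and are left for the caller to handle.
async function queryGithub(token, query, variables, fetchImpl = fetch) {
  const response = await fetchImpl(
    GITHUB_GRAPHQL_URL,
    buildRequest(token, query, variables)
  );
  if (!response.ok) {
    throw new Error(`GitHub API responded with HTTP ${response.status}`);
  }
  const payload = await response.json();
  return payload.data;
}
```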

In extract_from_graph I filter out all responses where no repository data could be found (and just ignore them) and convert the timestamp to the number of days until now.

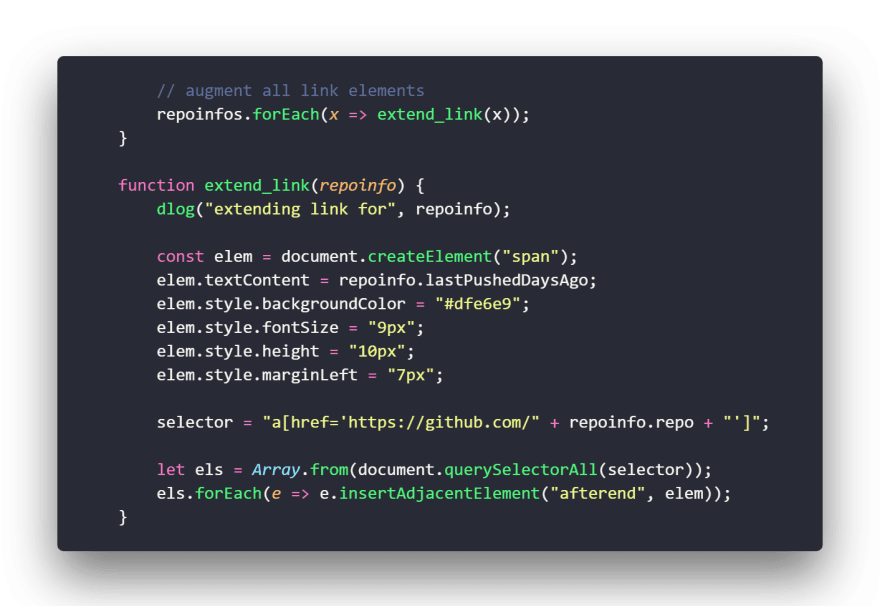
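The extraction step can be sketched like this. It is an illustration, not the code of extract_from_graph: it assumes the response data uses aliases of the form r0, r1, ... and that each repository entry carries a pushedAt timestamp. The function name and the now parameter are made up.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Maps each alias (r0, r1, ...) to the number of full days since the
// last push. Entries that are null (repository not found) and unrelated
// keys such as rateLimit are skipped.
function extractDaysSincePush(data, now = new Date()) {
  const result = {};
  for (const [alias, repo] of Object.entries(data)) {
    if (!/^r\d+$/.test(alias) || repo === null) {
      continue;
    }
    const pushedAt = new Date(repo.pushedAt);
    result[alias] = Math.floor((now - pushedAt) / MS_PER_DAY);
  }
  return result;
}

const sampleData = {
  r0: { pushedAt: "2020-01-01T00:00:00Z" },
  r1: null, // repository could not be found
  rateLimit: { remaining: 4999 },
};
console.log(extractDaysSincePush(sampleData, new Date("2020-01-11T12:00:00Z")));
// prints { r0: 10 }
```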

Augmenting the Repository Links

In the last step, I add a little indicator to each repository link. I'm not using the DOM elements that I queried originally, but query for each link individually again. I think this is cleaner, as the original element may already be gone.
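A rough sketch of this last step is shown below. It is not the extension's code: the function names, the badge text, and the shape of the input (a map from the href attribute value, exactly as it appears in the page, to the number of days) are assumptions. The document is passed in as a parameter so the function does not depend on the global one.

```javascript
// Escapes backslashes and double quotes so that the href can be placed
// inside a quoted attribute selector.
function hrefSelector(href) {
  const escaped = href.replace(/["\\]/g, "\\$&");
  return `a[href="${escaped}"]`;
}

// Looks up every link again by its href and appends a small badge.
// Returns the number of badges that were added.
function addIndicators(doc, daysByHref) {
  let added = 0;
  for (const [href, days] of Object.entries(daysByHref)) {
    for (const link of doc.querySelectorAll(hrefSelector(href))) {
      const badge = doc.createElement("span");
      badge.textContent = ` (${days}d)`;
      badge.title = `Last push ${days} days ago`;
      link.append(badge);
      added += 1;
    }
  }
  return added;
}

// Possible usage in the content script:
//   addIndicators(document, { "https://github.com/derhackler/catexcel": 42 });
```

Because the links are looked up at the moment the badge is added, elements that have disappeared from the page in the meantime are simply not found.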

Final Verdict

- Writing browser extensions is fun and surprisingly painless

- Writing blogposts reviewing my own code takes me much longer than writing the code
