Web scraping, or crawling, is the practice of fetching data from a third-party website by downloading and parsing its HTML to extract the data you want.

But you should use an API for this!

Not every website offers an API, and APIs don't always expose every piece of information you need. So scraping is often the only way to extract data from a website.

There are many use cases for web scraping:

- E-commerce price monitoring

- News aggregation

- Lead generation

- SEO (Search engine result page monitoring)

- Bank account aggregation (Mint in the US, Bankin' in Europe)

- Individuals and researchers who need to build a dataset that is not otherwise available

So, what is the problem?

The main problem is that most websites do not want to be scraped. They only want to serve content to real users using real web browsers (except for Google: they all want to be scraped by Google).

So, when you scrape, you have to be careful not to be recognized as a robot, which basically means doing two things: using human tools and behaving like a human. This post will guide you through the things you can do to cover yourself and the tools websites use to block you.

Emulate human tools, i.e. Headless Chrome

Why use headless browsing?

When you open your browser and go to a webpage, it almost always means that you are asking an HTTP server for some content. One of the easiest ways to pull content from an HTTP server is to use a classic command-line tool such as cURL.

The thing is, if you just run curl www.google.com, Google has many ways to know that you are not a human, for example just by looking at the headers. Headers are small pieces of information that go with every HTTP request that hits the server. One of them describes the client making the request: the "User-Agent" header. Just by looking at it, Google knows that you are using cURL. If you want to learn more about headers, the Wikipedia page is great, and to experiment, just go over here: it's a webpage that simply displays the header information of your request.
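cURL is not the only client that introduces itself this way. As a small illustration, here is how you can look at the User-Agent that Python's standard urllib library sends when you don't set one yourself. The function name default_user_agent is mine, not part of any library.

```python
import urllib.request


def default_user_agent() -> str:
    """Return the User-Agent header urllib sends when none is set."""
    opener = urllib.request.build_opener()
    # opener.addheaders is a list of (name, value) pairs that are
    # attached to every request made through this opener.
    headers = {name.lower(): value for name, value in opener.addheaders}
    return headers["user-agent"]


if __name__ == "__main__":
    # The value names the library and its version, so a server can
    # tell at a glance that the request does not come from a browser.
    print(default_user_agent())
```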

Headers are really easy to alter with cURL, and copying the User-Agent header of a legitimate browser could do the trick. In the real world you'd need to set more than one header, but in general it is not very difficult to craft an HTTP request, with cURL or any library, that looks exactly like a request made by a browser. Everybody knows that, so to find out whether you are using a real browser, websites check one thing that cURL and HTTP libraries cannot do: JS execution.
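To make this concrete, here is a minimal sketch of a request dressed up with browser-like headers, using only Python's standard library. The header values are illustrative assumptions: in practice you would copy the ones your own browser sends, and a real browser sends more headers than these three.

```python
import urllib.request

# Illustrative values only (an assumption): copy the real ones from
# your browser's developer tools.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url: str) -> urllib.request.Request:
    """Build a GET request that carries browser-like headers."""
    return urllib.request.Request(url, headers=BROWSER_HEADERS)


def fetch(url: str, timeout: float = 10.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling fetch("https://www.example.com/") would send the request with these headers instead of the library's default User-Agent.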

Do you speak JS?

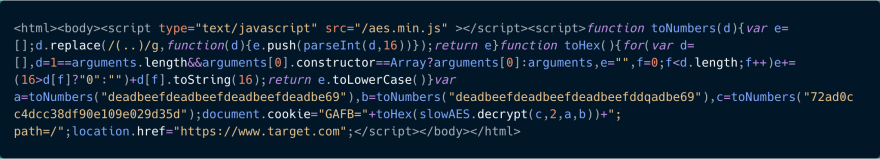

The concept is very simple: the website embeds a little snippet of JS in its webpage that, once executed, will "unlock" the webpage. If you are using a real browser, you won't notice the difference. If you're not, all you'll receive is an HTML page with some obscure JS in it.

But once again, this solution is not completely bulletproof, mainly because Node.js has made it very easy to execute JS outside of a browser. So the web evolved again, and there are now other tricks to determine whether you are using a real browser.

Headless Browsing

Trying to execute JS snippets on the side with Node is really difficult and not robust at all. More importantly, as soon as the website has a more complicated check system or is a big single-page application, cURL and pseudo-JS execution with Node become useless. So the best way to look like a real browser is to actually use one.

Headless browsers behave "exactly" like a real browser, except that you can easily drive them programmatically. The most widely used is Headless Chrome, a Chrome option that has the behavior of Chrome without all the UI wrapping it.

The easiest way to use Headless Chrome is to call a driver that wraps all its functionality in an easy API. Selenium and Puppeteer are the two most famous solutions.
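If you just want to see what Headless Chrome gives you before picking a driver, you can also call the Chrome binary directly. The sketch below does that from Python with the --headless and --dump-dom flags, which print the page's DOM after JS has run. It is not a replacement for Selenium or Puppeteer, and the binary name "google-chrome" is an assumption that depends on your system.

```python
import subprocess
from typing import List


def headless_dump_command(url: str, chrome_binary: str = "google-chrome") -> List[str]:
    """Build the command line that renders a page in Headless Chrome."""
    # --headless runs Chrome without any UI.
    # --dump-dom prints the DOM, serialized after scripts have executed.
    return [chrome_binary, "--headless", "--dump-dom", url]


def render_page(url: str, chrome_binary: str = "google-chrome", timeout: float = 30.0) -> str:
    """Run Headless Chrome and return the rendered HTML."""
    result = subprocess.run(
        headless_dump_command(url, chrome_binary),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise if Chrome exits with an error
    )
    return result.stdout
```

Unlike a plain HTTP request, the HTML you get back here includes whatever the page's JS added to it.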

However, this will not be enough, as websites now have tools that allow them to detect a headless browser. This arms race has been going on for a long time.

Fingerprinting

Everyone, and front-end developers most of all, knows that every browser behaves differently. Sometimes the difference is in CSS rendering, sometimes in JS, sometimes just in internal properties. Most of those differences are well known, and it is now possible to detect whether a browser is actually what it pretends to be. In other words, the website asks itself: "Do all the browser's properties and behaviors match what I know about the User-Agent it sent?"
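Here is a toy version of that question, written from the website's point of view. It assumes some JS on the page has collected a few browser properties and sent them back as a dictionary. The keys "webdriver" and "has_window_chrome" and the three rules are hypothetical and only meant to show the idea of cross-checking. Real fingerprinting looks at far more signals.

```python
from typing import Dict, List


def automation_signals(user_agent: str, reported: Dict[str, bool]) -> List[str]:
    """Return the reasons a client looks automated (empty list if none)."""
    reasons = []
    # Headless Chrome has historically announced itself in its User-Agent.
    if "HeadlessChrome" in user_agent:
        reasons.append("user agent advertises headless Chrome")
    # navigator.webdriver is true when a browser is driven by automation.
    if reported.get("webdriver") is True:
        reasons.append("navigator.webdriver is true")
    # Consistency check: the User-Agent claims Chrome, but a property
    # that regular Chrome exposes was not found by the page's script.
    if "Chrome/" in user_agent and not reported.get("has_window_chrome", False):
        reasons.append("claims Chrome but window.chrome is missing")
    return reasons
```

An empty list means none of the three rules fired, not that the client is definitely human.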

This is why there is an everlasting arms race between scrapers who want to pass themselves off as a real browser and websites that want to distinguish headless browsers from the rest.

However, in this arms race, web scrapers tend to have a big advantage and here is why.

Most of the time, when JavaScript code tries to detect whether it is being run in headless mode, it is malware trying to evade behavioral fingerprinting: the JS behaves nicely inside a scanning environment and badly inside real browsers. This is why the team behind Chrome's headless mode is trying to make it indistinguishable from a real user's web browser, to stop malware from doing that. And this is why web scrapers can profit from this effort in the arms race.

One other thing to know: whereas running 20 cURL requests in parallel is trivial, Headless Chrome, while relatively easy to use for small use cases, can be tricky to run at scale. It uses lots of RAM, so managing more than 20 instances of it is a challenge.

If you want to learn more about browser fingerprinting, I suggest you take a look at Antoine Vastel's blog, which is entirely dedicated to this subject.

That's about all you need to know to understand how to pretend that you are using a real browser. Let's now take a look at how to behave like a real human.

Emulate human behaviour, i.e. proxies, CAPTCHA solving and request patterns

Proxy yourself

A human using a real browser will rarely request 20 pages per second from the same website. So if you want to request a lot of pages from the same website, you have to trick it into thinking that all those requests come from different places in the world, i.e. from different IP addresses. In other words, you need to use proxies.

Proxies are not very expensive these days: ~$1 per IP. However, if you need to make more than ~10k requests per day on the same website, costs can go up quickly, with hundreds of addresses needed. One thing to consider is that proxy IPs need to be constantly monitored so that you can discard the ones that stop working and replace them.
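The monitoring part can start very small. Below is a sketch of a proxy pool that picks a proxy at random and drops one after a few consecutive failures. The class name ProxyPool, the max_failures threshold and the proxy addresses in the usage note are all made up for the example. A real setup would also re-test dropped proxies and buy replacements.

```python
import random
from typing import Dict, Iterable


class ProxyPool:
    """Keep a set of proxies and discard the ones that keep failing."""

    def __init__(self, proxies: Iterable[str], max_failures: int = 3) -> None:
        # Map each proxy URL to its number of consecutive failures.
        self._failures: Dict[str, int] = {proxy: 0 for proxy in proxies}
        self._max_failures = max_failures

    def pick(self) -> str:
        """Return a random proxy that is still considered healthy."""
        if not self._failures:
            raise RuntimeError("no working proxies left")
        return random.choice(list(self._failures))

    def report_success(self, proxy: str) -> None:
        """Reset the failure counter after a successful request."""
        if proxy in self._failures:
            self._failures[proxy] = 0

    def report_failure(self, proxy: str) -> None:
        """Count a failure and discard the proxy once it hits the limit."""
        if proxy not in self._failures:
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            del self._failures[proxy]

    def add(self, proxy: str) -> None:
        """Add a replacement proxy to the pool."""
        self._failures.setdefault(proxy, 0)

    def __len__(self) -> int:
        return len(self._failures)
```

You would call pick() before each request, route the request through that proxy (with urllib, for instance, through urllib.request.ProxyHandler), and then report the outcome so the pool stays clean.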

There are several proxy solutions on the market. Here are the most used: Luminati Network, Blazing SEO and SmartProxy.

There are also a lot of free proxy lists, and I don't recommend using them: they are often slow and unreliable, and the websites offering these lists are not always transparent about where the proxies are located. Free proxy lists are usually public, so their IPs will be automatically banned by most websites. Proxy quality is very important: anti-crawling services are known to maintain internal lists of proxy IPs, so any traffic coming from those IPs will also be blocked. Be careful to choose a proxy provider with a good reputation. This is why I recommend using a paid proxy network or building your own.

To build your own, you could take a look at Scrapoxy, a great open-source project that lets you build a proxy API on top of different cloud providers. Scrapoxy creates a proxy pool by starting instances on various cloud providers (AWS, OVH, Digital Ocean). You then configure your client to use the Scrapoxy URL as its main proxy, and Scrapoxy automatically assigns a proxy from the pool. Scrapoxy is easily customizable to fit your needs (rate limit, blacklist ...) but can be a little tedious to set up.

You could also use the TOR network, aka The Onion Router. It is a worldwide computer network designed to route traffic through many different servers to hide its origin. TOR makes network surveillance and traffic analysis very difficult. There are a lot of use cases for TOR, such as privacy, freedom of speech, journalists working under dictatorial regimes, and, of course, illegal activities. In the context of web scraping, TOR can hide your IP address and change your bot's IP address every 10 minutes. However, the IP addresses of TOR exit nodes are public, and some websites block TOR traffic using a simple rule: if the server receives a request from one of the public TOR exit nodes, it blocks it. That's why, in many cases, TOR won't help you compared to classic proxies. It's worth noting that traffic through TOR is also inherently much slower, because it is routed through multiple servers.
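To show how cheap that blocking rule is for a website, here is a sketch of it. It assumes the site has a copy of the public exit node list as plain text with one IP address per line. That format, the function names and the sample addresses are assumptions for the example.

```python
from typing import Set


def parse_exit_list(text: str) -> Set[str]:
    """Parse a plain-text list of exit node IPs, one per line."""
    nodes = set()
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and comment lines.
        if line and not line.startswith("#"):
            nodes.add(line)
    return nodes


def is_tor_exit(client_ip: str, exit_nodes: Set[str]) -> bool:
    """Return True when the request comes from a known exit node."""
    return client_ip in exit_nodes


if __name__ == "__main__":
    sample = "# sample list\n192.0.2.10\n198.51.100.7\n"
    nodes = parse_exit_list(sample)
    print(is_tor_exit("192.0.2.10", nodes))
```

A set lookup like this costs the website almost nothing per request, which is why the rule is so common.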

Captchas

But sometimes proxies will not be enough: some websites systematically ask you to confirm that you are a human with so-called CAPTCHAs. Most of the time CAPTCHAs are only displayed to suspicious IPs, so switching proxies will work in those cases. For the other cases, you'll need to use a CAPTCHA-solving service (2Captchas and DeathByCaptchas come to mind).

You should know that while some CAPTCHAs can be solved automatically with optical character recognition (OCR), the most recent ones have to be solved by hand.

This means that if you use the aforementioned services, on the other side of the API call you'll have hundreds of people solving CAPTCHAs for as little as 20 cents an hour.

But then again, even if you solve CAPTCHAs or switch proxies as soon as you see one, websites can still detect your little scraping job.

Request Pattern

One last advanced tool websites use to detect scraping is pattern recognition. So if you plan to scrape every ID from 1 to 10 000 for the URL www.example.com/product/, try not to do it sequentially and at a constant request rate. You could, for example, maintain a set of integers from 1 to 10 000, randomly pick one integer from this set, scrape the corresponding product, and repeat.
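In code, the simplest way to do this is to shuffle the whole list of IDs once and then walk through it. The sketch below does just that. The https:// prefix and the function name are my additions, and the seed parameter is only there to make runs reproducible while you test.

```python
import random
from typing import List, Optional


def shuffled_product_urls(
    first_id: int = 1,
    last_id: int = 10_000,
    base_url: str = "https://www.example.com/product/",
    seed: Optional[int] = None,
) -> List[str]:
    """Return every product URL exactly once, in random order."""
    ids = list(range(first_id, last_id + 1))
    # Shuffling once visits each ID a single time without having to
    # track which ones were already picked.
    random.Random(seed).shuffle(ids)
    return [f"{base_url}{product_id}" for product_id in ids]
```

Every product still gets scraped once, but the order no longer looks like a counter going up.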

This is one simple example. Some websites also compute statistics on browser fingerprints per endpoint, which means that if you don't change some parameters in your headless browser and target a single endpoint, they might block you anyway.

Websites also tend to monitor the origin of traffic, so if you want to scrape a website in Brazil, try not to do it with proxies in Vietnam, for example.

But from experience, I can tell you that rate is the most important factor in "Request Pattern Recognition", so the slower you scrape, the less chance you have of being discovered.
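Slowing down is also easy to sketch. The loop below waits a random amount of time between two requests, so the rate is both low and irregular. The 5-second base delay and the 50% jitter are arbitrary numbers, not recommendations, and fetch stands for whatever function you use to download a page.

```python
import random
import time
from typing import Callable, Iterable, List


def jittered_delay(base_seconds: float = 5.0, jitter: float = 0.5) -> float:
    """Return a random delay within +/- jitter (a fraction) of the base."""
    low = base_seconds * (1 - jitter)
    high = base_seconds * (1 + jitter)
    return random.uniform(low, high)


def crawl_slowly(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    base_seconds: float = 5.0,
    jitter: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch each URL in turn, pausing a random time between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one after that.
            sleep(jittered_delay(base_seconds, jitter))
        results.append(fetch(url))
    return results
```

The sleep parameter is only there so the pause can be swapped out in tests. In normal use you leave it alone.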

Conclusion

I hope this overview helps you understand web scraping better and that you learned something from reading this post.

Everything I talked about in this post is something I used to build ScrapingBee, the simplest web scraping API out there. Do not hesitate to test our solution if you don't want to lose too much time setting everything up. The first 1k API calls are on us :).

Do not hesitate to tell me in the comments what you'd like to know about scraping. I'll talk about it in my next post.

Happy scraping 😎

Top comments (25)

I am going to come at this from a different angle, working for an API platform: PLEASE USE THE API (much more specifically, where there IS an API)

Yes, I get that you can (arguably...) work around limits by doing headless scraping, but this is often against the platform terms of service: you will get far less, less useful, metadata; your IP address may be blocked; and the UI typically has no contract with you as a developer that can help to ensure that data access is maintained when a site layout changes, leading to more work for you.

Be cool. Work with us, as API providers. We want you to use our APIs and not have you run around us to grab data in unnecessary ways.

Most of all though, enjoy coding!

I remember when I started scraping, I used to search for free proxies and tried to save money on this important step. But I quickly realized that you cannot trust free proxies because they are so unreliable and unstable. I totally agree with you that people should not use free proxies. All of the proxy providers you listed are really solid names at an affordable price. Personally, I prefer Smartproxy for its balance of price and quality. All in all, a really solid article, Pierre!

ScrapingNinja looks really cool! Just curious ― are there any legal issues with providing a service like that?

Thank you very much.

As long as we ensure that people don't use our service for DDoS purposes, we've been told we should be fine 🤞

Hmm, interesting. Many sites list scraping, crawling, and / or non-human access as violations of their Terms of Service.

For example, see section 8.2(b) of LinkedIn's User Agreement (I list LinkedIn because I know they're a common target for scraping).

Yes, you are right, LinkedIn is well known for this.

Well, I am not a lawyer, so I'd rather say nothing than talk nonsense.

We plan to do a blog post about this, well-sourced and more detailed than my answers :)

Smart :)

Cool, looking forward to reading that!

What about complying with terms of service for the websites and API platforms your service may scrape?

This blog is your go-to guide for web scraping essentials. It breaks down why scraping is important and how to avoid detection by websites, offering tips like using Headless Chrome, proxies, and CAPTCHA solving. Plus, it mentions ScrapingBee, a super user-friendly option for hassle-free scraping tasks.

Do explore and check Crawlbase as well.

Great article and nice proxy recommendation. I even have a review about one proxy provider you mentioned.

I guess I'm here too early? I saw this and immediately came to the comments because there's no way that image isn't going to shock the arachnophobes among us 🤣

Haha!! I expected much more of an uproar as well... I mean that image is absolutely terrifying.

Hi Pierre, I really like your post, great job!

I am actually working on web scraper using Python with requests library

I am getting information about job titles from my country and find out a pattern

Proxy rotation is very useful in this and many other tasks, especially for automation, I think. As for proxy services, it's weird that you didn't mention Oxylabs or Geosurf. They seem to be some of the more web-scraping-centered proxy providers.

One of the best articles on this topic that I've ever read! Very great!

And I must add that proxy services are very important and necessary in this case, as well as to choose the right proxy provider that could meet all your needs and would help to mask your scraping tool from detection.

This was an awesome read! Seems like the link was broken where it said, "just go over here, it's a webpage that simply displays"? Maybe I'm wrong, but I was very interested and wanted to take a look 👀

Oopsie, thanks for the catch, it is now fixed !

Thanks!

Do you have any opinion on the Scrapy API? I've gotten some good results with them:

scrapy.org/

Scrapy is AWESOME!

It allows you to do so much with so few lines of code.

I think of Scrapy as the requests package on big steroids.

The fact that you can handle parallelization, throttling, data filtering, and data loading in one place is very good. I am especially fond of the autothrottle feature.

However, Scrapy needs some extensions to work well with proxies and headless browsers.

Awesome piece

awesome article thank you. also, I have found another site scraper service. Maybe it will help someone too. e-scraper.com/useful-articles/down...

scraperapi is a tool that takes some of the headache out of this, you only pay for successes. scraperapi.com/?fp_ref=albert-ko83
