My name is Zhenya Kosmak. I now work as a product manager, but I have 3+ years of serious commercial experience with Django, and even now I try to code at least 10 hours a week. I firmly believe the best way to master any framework, Django included, is to build a truly big pet project. So here are a few notes from my recent pet project.

The pet project is called Palianytsia. It's a tool for enriching your Ukrainian vocabulary and, more generally, for studying Ukrainian. Now, 2 months after the public launch, we have 200+ DAU (daily active users) across all clients (the web app plus iOS and Android apps) and a sizable audience (10K+ followers on Instagram). The back end of the product runs entirely on Django.

It must be noted that a great developer in the modern world can't be imagined as a solo player. Many tough challenges only show up in teamwork. So you will get a much better experience if you start your pet project with a team that includes competent people in roles such as product owner, designer, and project manager.

Your pet project should cover a good number of the most standard back-end tasks. The tasks have to be real, not synthetic. Here are examples of how you can set them up.

Main feature

In our case, it was a search mechanism on top of ElasticSearch. We have a database of sentences from different sources, and the task of this API endpoint is to return the most relevant sentences for each query. There are extra requirements, too. The sentences must show different contexts in which the user's input is used. Also, the search results should come from various sources so that they cover fundamentally different ways of using the search phrase.

This task teaches you how to work with ElasticSearch and customize its ordering. You also practice using DRF to build quality API endpoints. If you want excellent documentation for such endpoints, you can use drf-spectacular or a similar tool.
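To make the "results from various sources" requirement concrete, here is a minimal sketch of one possible post-processing step: interleaving already-scored hits round-robin by source. It is an assumption for illustration, not how Palianytsia actually orders results; the `Hit` shape and the `diversify_by_source` name are hypothetical, and in a real setup the same effect might be achieved inside the ElasticSearch query itself.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, TypedDict


class Hit(TypedDict):
    # Hypothetical shape of a search hit; the real index fields may differ.
    source: str
    sentence: str
    score: float


def diversify_by_source(hits: List[Hit], limit: int) -> List[Hit]:
    """Interleave hits round-robin by source, best-scored first within each source."""
    queues: Dict[str, Deque[Hit]] = defaultdict(deque)
    # Sorting first means each per-source queue is ordered by score, and
    # sources are visited in the order of their best hit.
    for hit in sorted(hits, key=lambda h: h["score"], reverse=True):
        queues[hit["source"]].append(hit)

    result: List[Hit] = []
    while queues and len(result) < limit:
        for source in list(queues):
            if len(result) >= limit:
                break
            result.append(queues[source].popleft())
            if not queues[source]:
                del queues[source]  # this source is exhausted
    return result
```

With three hits from one source and one from another, the second source's hit moves up to second place instead of being buried under the first source's results.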

Data collection

A Django developer must be a Python professional as well. Not every everyday task is covered by a Django library, but it still has to be solved. HTTP scraping is one of the most common ones.

We needed to collect articles from 40+ websites to give users comprehensive usage examples for each word or phrase. So we built some abstract parser classes for the most routine needs:

- BaseParser. We define general parsing logic here.

- BaseFeedPaginationParser. We use it to collect article URLs when the website's feeds are paginated, i.e., the URLs of the feed look like https://example.com/feed/<page>.

- BaseFeedCrawlerParser. On some sites, there were no paginated URLs; they had only infinite scroll or "Next page" URLs on each page. This parser works with such websites. Also, we use it for Wikipedia.

- BaseArticleParser. This guy is used for collecting the content of each article.

Such a class hierarchy was a definitely productive solution. Once these classes were finished, developing a parser was mostly like writing a config file. For example:

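    import datetime
    from typing import List

    from lxml.html import HtmlElement

    # BaseFeedParser, BaseArticleParser, date_range, today, cssselect_one and
    # split_br_into_paragraphs_lxml come from the project's own code base.


    class DetectorMediaBaseFeedParser(BaseFeedParser):
        start_date: datetime.date

        def feed_urls(self) -> List[str]:
            # One archive page per day, from start_date up to today
            return [
                f"{self.base_url}archive/{d.strftime('%Y-%m-%d')}/"
                for d in date_range(self.start_date, today())
            ]


    class DetectorMediaBaseArticleParser(BaseArticleParser):
        paragraphs_selector = "#artelem > p"

        def extract_paragraphs(self, dom: HtmlElement) -> List[str]:
            # Current markup
            paragraphs = super().extract_paragraphs(dom)
            if paragraphs:
                return paragraphs

            # Old br-only markup
            content = cssselect_one(dom, "#artelem")
            # Compare with None: an lxml element without children is falsy
            return split_br_into_paragraphs_lxml(content) if content is not None else []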

You can only guess what a mess we would have gotten had we developed each parser separately. It's also excellent practice with requests, asyncio, or whatever else you prefer for fetching data from websites.
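To illustrate why a concrete parser ends up looking like a config file, here is a minimal sketch of the pattern for the paginated-feed case. The class names, the `page_path` attribute, and the page range are all hypothetical; the real base classes surely do more (fetching, retries, deduplication).

```python
from abc import ABC, abstractmethod
from typing import List


class SketchBaseFeedParser(ABC):
    """Shared logic lives in the base classes; subclasses only describe the site."""

    base_url: str

    @abstractmethod
    def feed_urls(self) -> List[str]:
        """Return every feed page URL that should be visited."""


class SketchPaginatedFeedParser(SketchBaseFeedParser):
    """Feeds whose pages look like <base_url>feed/<page>."""

    first_page: int = 1
    last_page: int = 1
    page_path: str = "feed/{page}"

    def feed_urls(self) -> List[str]:
        return [
            self.base_url + self.page_path.format(page=page)
            for page in range(self.first_page, self.last_page + 1)
        ]


class ExampleSiteFeedParser(SketchPaginatedFeedParser):
    # A concrete parser is just configuration.
    base_url = "https://example.com/"
    last_page = 3


print(ExampleSiteFeedParser().feed_urls())
```

All behavior stays in the two base classes, so supporting a new paginated site takes only a few attribute overrides.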

This task also teaches you how to orchestrate those parsers. By our estimates, we had to gather millions of articles, and we wanted to be able to collect all the data from all sites within 24 hours. So we had to use a task queue to keep the solution efficient and scalable. The most common approach on the Django stack is Celery, but we prefer dramatiq as a more lightweight and still productive option. Bringing such a solution to a stable and scalable level is also a very effective way to improve your coding skills.

Last but not least, we need state here. We have to save each gathered URL and each article's content after scraping so that we don't lose the results if our server crashes. The content itself is stored in Wasabi, an S3-compatible cloud storage. Here is your practice with PostgreSQL or any other RDBMS you like, and with S3 as well.
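A minimal sketch of what such crash-safe state could look like. To keep it self-contained, it uses the standard-library sqlite3 module as a stand-in for PostgreSQL; the table layout, the status values, and the function names are assumptions for illustration only.

```python
import sqlite3
from typing import Iterable, List


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS article (
            url TEXT PRIMARY KEY,
            status TEXT NOT NULL DEFAULT 'pending',
            storage_key TEXT  -- where the scraped content lives in object storage
        )
        """
    )


def remember_urls(conn: sqlite3.Connection, urls: Iterable[str]) -> None:
    """Insert newly discovered URLs; already-known ones are left untouched."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO article (url) VALUES (?)",
            [(url,) for url in urls],
        )


def mark_scraped(conn: sqlite3.Connection, url: str, storage_key: str) -> None:
    with conn:
        conn.execute(
            "UPDATE article SET status = 'scraped', storage_key = ? WHERE url = ?",
            (storage_key, url),
        )


def pending_urls(conn: sqlite3.Connection) -> List[str]:
    rows = conn.execute(
        "SELECT url FROM article WHERE status = 'pending' ORDER BY url"
    )
    return [row[0] for row in rows]
```

Because discovered URLs are persisted before scraping starts and each article is marked once its content is stored, a restarted worker can simply continue from `pending_urls()`.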

Data processing

Okay, we have articles; what's next? We need a database of Ukrainian sentences that are split properly, cleaned of artifacts (such as BB-code fragments or broken HTML, which occur on most websites), and normalized (we don't need repeated spaces and the like). As input, we have a PostgreSQL table of articles; each article is stored in S3 and split into paragraphs. So we get the following tasks:

- Split a paragraph into a list of sentences. The job looks easier than it is. It's nothing like paragraph.split('.'); it's way harder. For some direction, you can look at this StackOverflow question.

- Skip all paragraphs with mostly non-Ukrainian content.

- Filter out trash content.
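The tasks above can be sketched roughly like this. It is a deliberately naive illustration, not the project's actual pipeline: the abbreviation list is tiny and hypothetical, the splitter is regex-based, and the letter ratio only separates Ukrainian-alphabet text from other scripts (telling Ukrainian apart from other Cyrillic languages needs a proper language detector).

```python
import re
from typing import List

UKRAINIAN_LETTERS = set("абвгґдеєжзиіїйклмнопрстуфхцчшщьюя")
# Tiny illustrative list; a real one would be much longer.
ABBREVIATIONS = {"м.", "вул.", "р.", "ст.", "див."}


def normalize_spaces(text: str) -> str:
    """Collapse any run of whitespace into a single space."""
    return re.sub(r"\s+", " ", text).strip()


def ukrainian_ratio(text: str) -> float:
    """Share of letters that belong to the Ukrainian alphabet."""
    letters = [ch for ch in text.lower() if ch.isalpha()]
    if not letters:
        return 0.0
    return sum(ch in UKRAINIAN_LETTERS for ch in letters) / len(letters)


def split_sentences(paragraph: str) -> List[str]:
    """Split on sentence-final punctuation followed by an uppercase letter,
    then glue back pieces that were cut right after a known abbreviation."""
    parts = re.split(
        r'(?<=[.!?…])\s+(?=[A-ZА-ЯІЇЄҐ«"])', normalize_spaces(paragraph)
    )
    sentences: List[str] = []
    for part in parts:
        if sentences and sentences[-1].split()[-1].lower() in ABBREVIATIONS:
            sentences[-1] += " " + part
        else:
            sentences.append(part)
    return sentences
```

A paragraph would then be kept only if `ukrainian_ratio` exceeds some threshold (the threshold itself is something to tune on real data).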

Let's add some 3rd-party tools

Sometimes when you want to say something in one language, you can only recall the word or phrase in another. So let's add Google Translate so that our users can enter queries in any language, and we will search our database for the translation.

It's a nice opportunity to practice using dependencies (google-cloud-translate), storing credentials, and even building a caching mechanism. The cache helps reduce the number of requests sent to the API, so we spend less. By the way, if you send fewer than 500K characters a month, Google Cloud won't charge you at all, because usage under that limit is free.
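A minimal sketch of such a cache, assuming the actual API call is hidden behind a plain callable. The `CachedTranslator` class and its `translate` argument are hypothetical; in the real project the callable would wrap google-cloud-translate, and the cache would more likely live in Redis or the database than in process memory.

```python
from typing import Callable, Dict, Tuple


class CachedTranslator:
    """Wrap a translation function and call it at most once per normalized query."""

    def __init__(self, translate: Callable[[str, str], str]) -> None:
        self._translate = translate
        self._cache: Dict[Tuple[str, str], str] = {}
        self.api_calls = 0  # how many requests actually reached the API

    def __call__(self, text: str, target: str = "uk") -> str:
        # Normalizing case and whitespace is an assumption that increases
        # cache hits; drop the lowercasing if case matters for translation.
        key = (" ".join(text.lower().split()), target)
        if key not in self._cache:
            self.api_calls += 1
            self._cache[key] = self._translate(key[0], target)
        return self._cache[key]


def fake_translate(text: str, target: str) -> str:
    # Stand-in for the paid API call
    return f"[{target}] {text}"


translator = CachedTranslator(fake_translate)
translator("Hello  World")
translator("hello world")
print(translator.api_calls)
```

Both calls normalize to the same key, so only the first one reaches the translation function.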

And one more tricky task. The user enters a query. Should you translate it or not? There are several ways to solve this. We went this way: we stem and normalize all the words in the query and then look them up in our dictionary of Ukrainian words. If we find all of them, we definitely shouldn't translate. Otherwise, we'd better do it.
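The decision rule above could be sketched as follows. The function name, the word regex, and the default "stemmer" (plain lowercasing) are assumptions for illustration; a real implementation would plug in a proper Ukrainian stemmer or lemmatizer and a full dictionary.

```python
import re
from typing import Callable, Set

# A word is a run of letters, optionally joined by apostrophes or hyphens.
WORD_RE = re.compile(r"[^\W\d_]+(?:['’ʼ-][^\W\d_]+)*")


def needs_translation(
    query: str,
    dictionary: Set[str],
    stem: Callable[[str], str] = str.lower,
) -> bool:
    """Return True unless every word of the query is a known Ukrainian word."""
    words = WORD_RE.findall(query)
    if not words:
        return False  # nothing to translate
    return not all(stem(word) in dictionary for word in words)


dictionary = {"свіжий", "хліб"}
print(needs_translation("Свіжий хліб", dictionary))
print(needs_translation("fresh хліб", dictionary))
```

The first query consists only of dictionary words, so it is searched as is; the second contains an unknown word and would be sent for translation.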

User-related mechanics

We need authentication, authorization, registration, password recovery: all that boring stuff.

It's a good chance to practice django-allauth, dj-rest-auth, and djangorestframework-simplejwt. Keep these things in mind:

- Users shouldn't be logged out for no reason, so cookies shouldn't expire in one hour,

- Their credentials must be safe, so use HTTP-only cookies and keep the data secure on the server side,

- All errors must be handled intuitively and logically, so the front-end part is easy to develop.
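As a rough illustration of the first two points, here is a settings fragment. It assumes a dj-rest-auth release that reads its options from a `REST_AUTH` dict (older releases used flat setting names) together with djangorestframework-simplejwt; the lifetimes and cookie names are arbitrary examples, so check the documentation of the versions you actually install.

```python
# settings.py (fragment)
from datetime import timedelta

SIMPLE_JWT = {
    # A short-lived access token limits the damage if it leaks...
    "ACCESS_TOKEN_LIFETIME": timedelta(minutes=15),
    # ...while a long-lived refresh token keeps users logged in.
    "REFRESH_TOKEN_LIFETIME": timedelta(days=30),
    "ROTATE_REFRESH_TOKENS": True,
}

REST_AUTH = {
    "USE_JWT": True,
    "JWT_AUTH_COOKIE": "access",           # example cookie name
    "JWT_AUTH_REFRESH_COOKIE": "refresh",  # example cookie name
    "JWT_AUTH_HTTPONLY": True,   # cookies are not readable from JavaScript
    "JWT_AUTH_SECURE": True,     # cookies are sent over HTTPS only
    "JWT_AUTH_SAMESITE": "Lax",
}
```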

Implementing third-party authentication is also a good source of new technical experience. Adding Google or Facebook as sign-up methods might be a more intriguing task than you'd imagine.

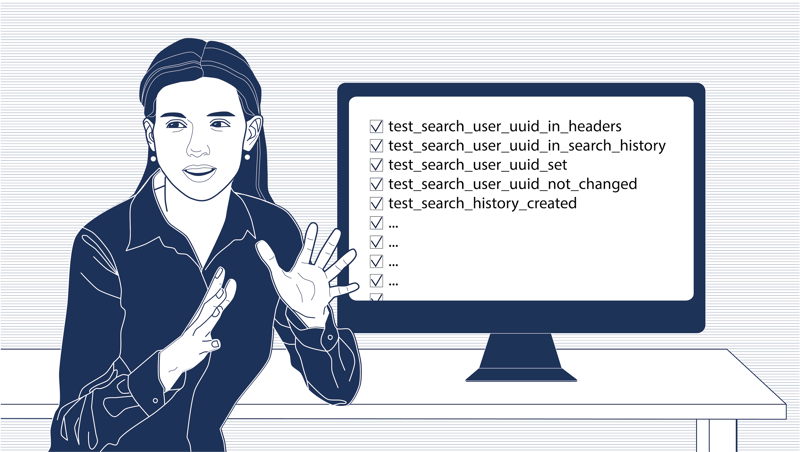

Should we do some automated tests?

Of course, we should! But please don't do it like a university home assignment. Pick the parts of the project most vulnerable to future developer mistakes and cover them. In our case, we made the following:

- ElasticSearch test for disk usage, RAM, and response speed. Actually, we did this before anything else in this project, as part of the proof-of-concept stage. We filled the ElasticSearch DB with dummy data and used ApacheBench and some write-only Python scripts to test performance on a production-level server.

- Performance tests on the primary endpoint to ensure we'll endure the load. These tests were added to the stable code base so that we could rerun them when needed.

- Unit tests for parsers, text processing mechanics, and string utilities, so we can be sure that our technical solutions change only deliberately.
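For the last item, a unit test for a string utility can be as small as the sketch below. The `strip_bbcode` helper is hypothetical (written here only so the test has something to check) and handles just simple tags; the point is that each test pins down one behavior a future change could silently break.

```python
import re
import unittest


def strip_bbcode(text: str) -> str:
    """Remove simple BB-code tags such as [b]...[/b] or [url=...]...[/url]."""
    return re.sub(r"\[/?[a-zA-Z*]+(?:=[^\]]*)?\]", "", text)


class StripBBCodeTests(unittest.TestCase):
    def test_removes_paired_tags(self):
        self.assertEqual(strip_bbcode("[b]Привіт[/b], світе"), "Привіт, світе")

    def test_removes_tags_with_arguments(self):
        self.assertEqual(strip_bbcode("[url=https://example.com]тут[/url]"), "тут")

    def test_keeps_ordinary_brackets(self):
        # Numbers in brackets are not BB-code and must survive
        self.assertEqual(strip_bbcode("рік [2022] минув"), "рік [2022] минув")
```

Such tests can be run with `python -m unittest` or picked up by pytest without changes.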

Bottom line

Let's summarize. On such a pet project, you can effectively learn:

- General business logic development. When you have a real-world task, the coding experience becomes more fluent.

- Working with the most widely used databases. Any project relies on them, so if your task is challenging enough, you can learn them well.

- Task queues. They become vital as soon as you encounter any IO-bound, CPU-bound, or high-load task. Set tough constraints, and you'll learn them well for sure.

- Third-party services. They are an integral part of any product. The more of them you learn, the better prepared you are for the next ones.

- Network libraries. Maybe not vital, but a ubiquitous piece of knowledge you'll definitely need. Any pet project will draw your attention to this point.

- Tests. Not homework, but real salvation from making the same mistake twice.

It's almost the same as spending a year on a commercial project. But you can do it yourself, and if you are patient and stubborn enough, you can master it. So think about it :)

And the last thing. If you are thinking about something bigger than a pet project, you can talk with me directly or check out our cases on Daiquiri Team.
