In the previous part of this guide, we deployed the Django web application on AWS ECS.

In this part, we'll create a PostgreSQL RDS instance on AWS, connect it to the Django ECS task, and enable access to Django Admin. We chose PostgreSQL because it supports complex SQL queries and MVCC, performs well under concurrent queries, and has a large community. As AWS says:

PostgreSQL has become the preferred open source relational database for many enterprise developers and start-ups, powering leading business and mobile applications. Amazon RDS makes it easy to set up, operate, and scale PostgreSQL deployments in the cloud.

Add PostgreSQL to Django project

Go to the django-aws-backend folder and activate the virtual environment: cd ../django-aws-backend && . ./venv/bin/activate.

First, let's set up PostgreSQL via Docker for local development. Create docker-compose.yml with the following content:

    version: "3.1"
    services:
      postgres:
        image: "postgres:14"
        ports:
          - "5433:5432"
        environment:
          POSTGRES_PASSWORD: "postgres"
          POSTGRES_DB: "django_aws"

Then run docker-compose up -d to start the container. Now, let's connect Django to PostgreSQL.

We use the non-standard port 5433 on the host to avoid a potential conflict with a PostgreSQL server already running on your machine.
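Before moving on, it can help to confirm that the container is accepting connections on the published port. The following is a minimal sketch using only the Python standard library; the helper name `is_port_open` is hypothetical and not part of the project.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    # 5433 is the host port published in docker-compose.yml
    print("PostgreSQL reachable:", is_port_open("127.0.0.1", 5433))
```

A successful TCP connection only shows that something is listening on the port; it does not verify the database credentials.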

Next, we need to add pip packages: psycopg2-binary to work with PostgreSQL and django-environ to read the PostgreSQL connection string from the environment. We'll also add the WhiteNoise package to serve static files for Django Admin.

Add these packages to the requirements.txt file and run pip install -r requirements.txt:

    django-environ==0.8.1
    psycopg2-binary==2.9.3
    whitenoise==6.1.0

Now, change settings.py. First, let's load environment variables. We read the .env file in the project root if it exists.

    from pathlib import Path

    import environ

    BASE_DIR = Path(__file__).resolve().parent.parent

    # Load variables from the .env file in the project root, if it exists
    env = environ.Env()
    env_path = BASE_DIR / ".env"
    if env_path.is_file():
        environ.Env.read_env(env_file=str(env_path))

Now we can use environment variables in settings.py. Let's provide DATABASES from env:

    DATABASES = {
        'default': env.db(default="postgresql://postgres:postgres@127.0.0.1:5433/django_aws")
    }

We've set a default value for the DATABASE_URL to allow running the Django project locally without specifying this variable in the .env file.
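To make the connection string less opaque, here is a simplified sketch of how a postgresql:// URL maps onto Django's DATABASES keys. The function `parse_database_url` is a hypothetical stand-in written with the standard library only; it is not django-environ's implementation, which supports more schemes and options.

```python
from urllib.parse import unquote, urlparse


def parse_database_url(url: str) -> dict:
    """Split a postgresql:// URL into Django-style database settings.

    Hypothetical, simplified illustration of the kind of dict env.db() returns.
    """
    parts = urlparse(url)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    return {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": unquote(parts.path.lstrip("/")),
        "USER": unquote(parts.username or ""),
        "PASSWORD": unquote(parts.password or ""),
        "HOST": parts.hostname or "",
        "PORT": parts.port or "",
    }


# The default URL from settings.py: user, password, host, port, database name
config = parse_database_url("postgresql://postgres:postgres@127.0.0.1:5433/django_aws")
print(config["HOST"], config["PORT"], config["NAME"])
```

In the project itself, env.db() does this work; the sketch only shows which part of the URL ends up in which setting.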

Next, add the WhiteNoiseMiddleware to serve static files and specify the STATIC_ROOT variable:

    MIDDLEWARE = [
        'django.middleware.security.SecurityMiddleware',
        "whitenoise.middleware.WhiteNoiseMiddleware",
        ...
    ]
    ...
    STATIC_URL = '/static/'
    STATIC_ROOT = BASE_DIR / "static"

Also, add the RUN ./manage.py collectstatic --noinput line to the bottom of Dockerfile to collect static files in the static folder.

Apply migrations, create a superuser and start a web server:

    (venv) $ python manage.py migrate
    Operations to perform:
      Apply all migrations: admin, auth, contenttypes, sessions
    ...
    (venv) $ python manage.py createsuperuser
    Username: admin
    Email address:
    Password:
    Password (again):
    Superuser created
    (venv) $ python manage.py runserver
    ...
    Starting development server at http://127.0.0.1:8000/

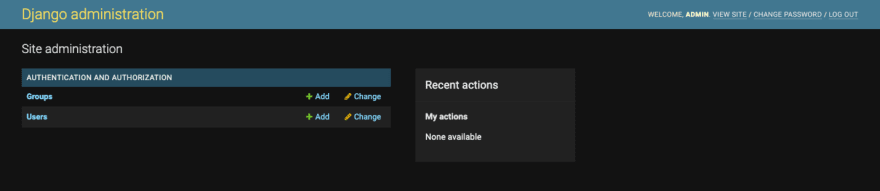

Go to http://127.0.0.1:8000/admin/ and sign in with your user.

Finally, let's commit the changes, then build and push the Docker image.

    $ git add .
    $ git commit -m "add postgresql, environ and static files serving"
    $ docker build . -t 947134793474.dkr.ecr.us-east-2.amazonaws.com/django-aws-backend:latest
    $ docker push 947134793474.dkr.ecr.us-east-2.amazonaws.com/django-aws-backend:latest

We are done with the Django part. Next, we create a PostgreSQL instance on AWS and connect it to the ECS task.

Creating RDS

AWS provides the Relational Database Service (RDS) to run PostgreSQL. We'll describe the RDS setup with Terraform. Go to the Terraform folder ../django-aws-infrastructure and add an rds.tf file:

    resource "aws_db_subnet_group" "prod" {
      name       = "prod"
      subnet_ids = [aws_subnet.prod_private_1.id, aws_subnet.prod_private_2.id]
    }

    resource "aws_db_instance" "prod" {
      identifier              = "prod"
      db_name                 = var.prod_rds_db_name
      username                = var.prod_rds_username
      password                = var.prod_rds_password
      port                    = "5432"
      engine                  = "postgres"
      engine_version          = "14.2"
      instance_class          = var.prod_rds_instance_class
      allocated_storage       = "20"
      storage_encrypted       = false
      vpc_security_group_ids  = [aws_security_group.rds_prod.id]
      db_subnet_group_name    = aws_db_subnet_group.prod.name
      multi_az                = false
      storage_type            = "gp2"
      publicly_accessible     = false
      backup_retention_period = 5
      skip_final_snapshot     = true
    }

    # RDS Security Group (traffic ECS -> RDS)
    resource "aws_security_group" "rds_prod" {
      name   = "rds-prod"
      vpc_id = aws_vpc.prod.id

      ingress {
        protocol        = "tcp"
        from_port       = "5432"
        to_port         = "5432"
        security_groups = [aws_security_group.prod_ecs_backend.id]
      }

      egress {
        protocol    = "-1"
        from_port   = 0
        to_port     = 0
        cidr_blocks = ["0.0.0.0/0"]
      }
    }

Here we create an RDS instance, a DB subnet group, and a security group that allows incoming traffic on port 5432 only from the ECS backend security group. We'll specify db_name, username, password, and instance_class in variables.tf:

    # rds
    variable "prod_rds_db_name" {
      description = "RDS database name"
      default     = "django_aws"
    }

    variable "prod_rds_username" {
      description = "RDS database username"
      default     = "django_aws"
    }

    variable "prod_rds_password" {
      description = "postgres password for production DB"
    }

    variable "prod_rds_instance_class" {
      description = "RDS instance type"
      default     = "db.t4g.micro"
    }

Notice that we haven't provided a default value for the prod_rds_password variable to prevent committing the database password to the repository. Terraform will ask you for a variable value if you try to apply these changes.

    $ terraform apply
    var.prod_rds_password
      postgres password for production DB

      Enter a value:

Typing a password on every run is inconvenient and error-prone. Fortunately, Terraform can read variable values from the environment: an environment variable named TF_VAR_<name> sets the Terraform variable <name>. Create a .env file containing TF_VAR_prod_rds_password=YOUR_PASSWORD and keep this file out of version control. Interrupt the password prompt, run export $(cat .env | xargs) to load the .env variables into your shell, and rerun terraform apply. Terraform now picks up the password from the environment and creates the RDS PostgreSQL instance. Check it in the AWS RDS console.
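The TF_VAR_ naming convention can be illustrated with a short sketch. It parses simple KEY=VALUE lines and reports which Terraform variables they would set. The helper names `parse_env_lines` and `terraform_variables` are hypothetical, and the parser assumes a plain .env file without quoting or multi-line values.

```python
from pathlib import Path

TF_VAR_PREFIX = "TF_VAR_"


def parse_env_lines(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def terraform_variables(env: dict) -> dict:
    """Map TF_VAR_<name> entries to the Terraform variable <name> they set."""
    return {
        key[len(TF_VAR_PREFIX):]: value
        for key, value in env.items()
        if key.startswith(TF_VAR_PREFIX)
    }


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.is_file():
        names = terraform_variables(parse_env_lines(env_file.read_text()))
        # Print the variable names only, never the secret values
        print("Terraform variables set from .env:", sorted(names))
```

Running it in the infrastructure folder lists the variable names found in .env, which is a quick way to spot a misspelled TF_VAR_ key before running terraform apply.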

Connecting to ECS

Let's connect the RDS instance to ECS tasks.

Add the DATABASE_URL environment variable to backend_container.json.tpl:

    [
      {
        ...
        "environment": [
          {
            "name": "DATABASE_URL",
            "value": "postgresql://${rds_username}:${rds_password}@${rds_hostname}:5432/${rds_db_name}"
          }
        ],
        ...
      }
    ]
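One caveat with this template: the password is interpolated into the URL as-is. If it contains characters such as @, / or :, URL parsers generally expect them to be percent-encoded. The sketch below shows the idea in Python with hypothetical values; `build_database_url` is an illustrative helper, not part of the project. In Terraform, the built-in urlencode function could play the same role.

```python
from urllib.parse import quote, unquote, urlparse


def build_database_url(username, password, hostname, db_name, port=5432):
    """Build a postgresql:// URL, percent-encoding the credentials."""
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"postgresql://{user}:{secret}@{hostname}:{port}/{db_name}"


# Hypothetical values for illustration only
url = build_database_url("django_aws", "p@ss/word", "example-host", "django_aws")
print(url)

# Parsing the URL and decoding the password recovers the original value
assert unquote(urlparse(url).password) == "p@ss/word"
```

Choosing a password made only of letters and digits avoids the issue altogether.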

Add RDS variables to the prod_backend_web task definition in the ecs.tf file:

    resource "aws_ecs_task_definition" "prod_backend_web" {
      ...
      container_definitions = templatefile(
        "templates/backend_container.json.tpl",
        {
          ...
          rds_db_name  = var.prod_rds_db_name
          rds_username = var.prod_rds_username
          rds_password = var.prod_rds_password
          rds_hostname = aws_db_instance.prod.address
        },
      )
      ...
    }

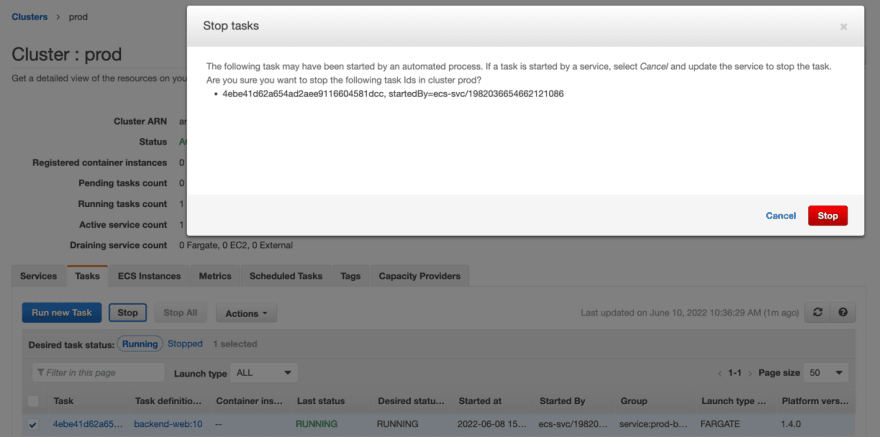

Let's apply the changes and update the ECS service with the new task definition. Run terraform apply, stop the current task via the web console, and wait for the new task to start.

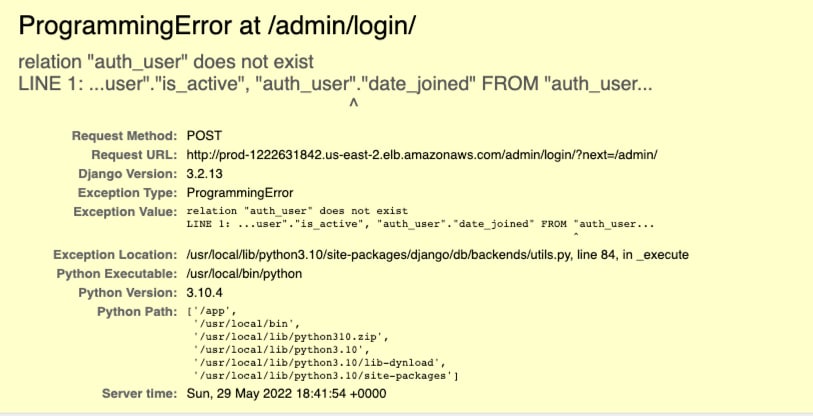

Now, go to the admin URL on the load balancer hostname and try to log in with random credentials. You should get the relation "auth_user" does not exist error. This error means that the Django application successfully connected to PostgreSQL, but no migrations have been run yet.

Running migrations

Now, we need to run migrations and create a superuser. But how can we do it? Our infrastructure has no EC2 instances that we could connect to via SSH to run these commands. The solution is ECS Exec.

With Amazon ECS Exec, you can directly interact with containers without needing to first interact with the host container operating system, open inbound ports, or manage SSH keys.

First, we need to install the Session Manager plugin. On macOS, you can use brew install session-manager-plugin. For other platforms, see the Session Manager plugin installation instructions in the AWS documentation.

Next, we need to provide an IAM policy to the prod_backend_task role and enable execute_command for the ECS service. Add this code to the ecs.tf and apply changes:

    resource "aws_ecs_service" "prod_backend_web" {
      ...
      enable_execute_command = true
      ...
    }

    resource "aws_iam_role" "prod_backend_task" {
      ...
      inline_policy {
        name = "prod-backend-task-ssmmessages"
        policy = jsonencode({
          Version = "2012-10-17"
          Statement = [
            {
              Action = [
                "ssmmessages:CreateControlChannel",
                "ssmmessages:CreateDataChannel",
                "ssmmessages:OpenControlChannel",
                "ssmmessages:OpenDataChannel",
              ]
              Effect   = "Allow"
              Resource = "*"
            },
          ]
        })
      }
    }

Stop the old ECS task in the web console and wait for the new task to start.

Then let's retrieve the new task ID via the CLI and run aws ecs execute-command. Create a new executable file with touch backend_web_shell.sh && chmod +x backend_web_shell.sh and add the following content:

    #!/bin/bash
    # Look up the ID of the first task of the prod-backend-web service
    TASK_ID=$(aws ecs list-tasks --cluster prod --service-name prod-backend-web --query 'taskArns[0]' --output text | awk '{split($0,a,"/"); print a[3]}')
    # Open an interactive bash session inside the task's container
    aws ecs execute-command --task "$TASK_ID" --command "bash" --interactive --cluster prod --region us-east-2

Run ./backend_web_shell.sh to open a shell in the task. If you run into trouble, check the Amazon ECS Exec Checker. This script verifies that your CLI environment and ECS cluster/task are ready for ECS Exec.
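The awk expression in the script takes the third slash-separated segment of the task ARN, which assumes the arn:aws:ecs:<region>:<account>:task/<cluster>/<task-id> format. As a sketch of the same extraction in Python, the hypothetical helper below takes the last segment instead, so it does not depend on the number of segments; the ARN shown is made up for illustration.

```python
def task_id_from_arn(task_arn: str) -> str:
    """Return the final path segment of an ECS task ARN (the task ID)."""
    return task_arn.rsplit("/", 1)[-1]


# Hypothetical ARN in the format arn:aws:ecs:<region>:<account>:task/<cluster>/<task-id>
example_arn = "arn:aws:ecs:us-east-2:123456789012:task/prod/0123456789abcdef0123456789abcdef"
print(task_id_from_arn(example_arn))
```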

Run migrations and create a superuser from the task's console:

    $ ./manage.py migrate
    $ ./manage.py createsuperuser
    Username: admin
    Email address:
    Password:
    Password (again):
    Superuser created

After this, open your deployment's admin URL and sign in with the superuser credentials you just created.

Congratulations! We've successfully connected PostgreSQL to the ECS service. Now you can commit changes in the Terraform project and move to the next part.

In the next part we'll set up CI/CD with GitLab.

You can find the source code of backend and infrastructure projects here and here.

If you need technical consulting on your project, check out our website or connect with me directly on LinkedIn.
