Caching is a technique in the developer's toolbox that can work some "magic" on scalability challenges.

Caching: storing frequently accessed or computationally expensive data in a temporary storage area, often closer (in network distance or access time) to the requester.

A software or hardware component that does this is called a cache, and caching can be done locally or remotely.


Potential app benefits from caching:

- better performance,

- better reliability,

- fewer resources required,

- fewer resources, which may reduce cost and complexity,

- decreasing latency and user-perceived lag,

- increasing resilience/uptime,

- extending existing capabilities,

- improving usability.

This article is a high-level introduction.

In future articles we will explore implementation in detail, from pure JavaScript code to React, CDNs, microservices, APIs, and databases.

Why is caching important?

What are some major caching patterns we should know about?

🛠 Implementation

Implementing caching is appealing for a few reasons:

Ubiquitous.

- There are many places across the application ecosystem to implement it.

- Performance optimizations can stack across layers, from the client to the CDN, servers, and database. Opportunities are abundant.

Fast results. Easy wins that can extend existing capabilities at low cost.

- Basic caching can be achieved without much difficulty.

- A simple approach can yield quick results and save a lot of money!

Reliability.

- Caching can potentially keep users from noticing a service or website outage.

- We can mitigate the impact of a service outage by serving cached content.

Overcome geographic and offline obstacles.

- Caching can help with reliability and usability across geographic distances and in low- or no-connectivity conditions.

Deepen our performance knowledge of the app.

- Exploring caching techniques helps us better understand the app's limitations and areas for optimization throughout the entire system.

Sound great?

Almost... because caching isn't a good fit for every case.

❌ Risks

Possible downsides:

- Complexity. Caching may increase app complexity, especially when used extensively.

- Debugging. A lot of caching can lead to a lot of difficult debugging! (Been there, done that!)

- Consistency. Data consistency and usability may suffer if caching is implemented incorrectly; users may be served stale or incorrect data and images.

- Security. Storing data remotely, for example, can create security risks in some cases, such as with PII (Personally Identifiable Information).

- Unnecessary? In some cases, caching may simply not be needed.

💡 Considerations

Questions to ask before adding caching:

Is your app making expensive or complex calculations, or stalling on compute bottlenecks?

- If so, caching the results may reduce repeated computation, reads/writes, and CPU cycles, usually at the cost of some extra memory.

What is the response time of your application?

- If the response time is slow, caching may help improve performance.

Is it serving the same content to many users?

- If so, caching can help reduce the number of requests to the server and improve response times.

Do you have a large amount of static content on your website or app?

- Caching can reduce how much data is sent over the network, improving performance.

Are there limited or unstable network resources?

- Caching can help reduce the amount of data that needs to be sent over the network, conserving bandwidth and reducing costs.

Do you have a high volume of concurrent users accessing your application?

- Caching can help improve performance and reduce server load.

Is your application frequently requesting data from external sources?

- Caching can help reduce the load on external servers and improve response times.

Caching is used nearly universally and is a critical part of popular apps, despite its potential drawbacks.

Caching Strategies

Basic caching patterns are implemented across different services, technologies, and languages. We look at them in this introduction because they apply throughout the series.

Choosing the right caching strategy largely determines how effective caching is for your use case.

Locality

Local cache

- Cache is stored with the app, such as in memory in the client browser or on the app server.

Remote cache

- Cache is stored in a separate, independent service, such as a CDN.

Note:

- Some of the information below can get a bit tricky, and even some sources use slightly different terminology!

- The main thing is that you need to understand the trade-offs and the potential impact on your app.

For example, Write-Through better ensures data consistency but has higher write latency (slightly slower). Write-Back has lower latency (faster) but is more prone to data inconsistency.

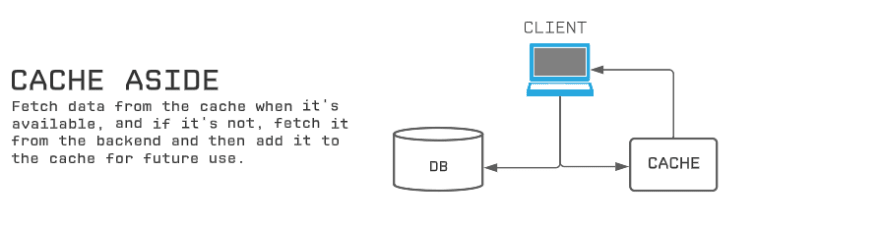

Cache-Aside

- Among the most common caching strategies, aka "lazy loading".

- Fetch data from the cache when it's available, and if it's not, fetch it from the backend.

- The application then adds it to the cache for future use.

- Cache and database are independent and don't communicate with each other.

- Cache hit: Read from the cache. Cache miss: read from the backend store.

- Best for read-heavy workloads (low write-to-read ratios).

Cache-Aside with Read-Through

- Similar to the above, with one difference: the cache (or a third-party library) communicates with the backend store to fetch data when it's not available in the cache. The client does not do this.

- Cache hits and misses work as above; on a miss, the fetched data is written to the cache.

- Good for high read-to-write ratios.
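For contrast, a read-through sketch moves the miss handling into the cache itself. The `loader` callable is an assumption standing in for whatever backend query the cache (or library) would run:

```python
from typing import Callable, Dict, Generic, Hashable, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class ReadThroughCache(Generic[K, V]):
    """The cache calls the loader on a miss; clients never touch the backend."""

    def __init__(self, loader: Callable[[K], V]) -> None:
        self._loader = loader          # hypothetical function that reads the backend store
        self._data: Dict[K, V] = {}

    def get(self, key: K) -> V:
        if key not in self._data:      # miss: the cache loads and stores the value itself
            self._data[key] = self._loader(key)
        return self._data[key]         # hit, or the freshly loaded value
```

The client code only ever calls `get()`, which is the key difference from the cache-aside sketch.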

Write-Through (SYNCHRONOUS Write)

- Writes data to the cache and to the backend store SYNCHRONOUSLY.

- Also, the backend store can load new data (independently of the cache) and then the cache can be updated with the new data.

- The main point here is that the cache is populated only AFTER the new write data is in the backend store.

- Used when consistency between the cache and the backend matters, at the cost of higher write latency.
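A write-through sketch following the ordering described above: the write reaches the backend store first and the cache second, and the call returns only after both. A dictionary standing in for the backend store is an assumption for illustration:

```python
from typing import Dict


class WriteThroughCache:
    """Writes go to the backend store, then the cache, before returning."""

    def __init__(self, store: Dict[str, str]) -> None:
        self.store = store                 # stand-in for a database
        self.cache: Dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self.store[key] = value            # 1. persist to the backend store (synchronous)
        self.cache[key] = value            # 2. only then populate the cache

    def read(self, key: str) -> str:
        if key in self.cache:              # hit
            return self.cache[key]
        value = self.store[key]            # miss: load from the backend store
        self.cache[key] = value
        return value
```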

Write-Back (Write Behind, ASYNCHRONOUS Write)

- Write data to the cache first and ASYNCHRONOUSLY write to the backend store.

- The main difference from Write-Through is that the cache is updated first and a response is returned right away; the backend store is then updated separately in the background.

- Faster due to lower latency, but more risk of data inconsistency.
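A write-back sketch, assuming a dictionary backend and a hypothetical explicit `flush()` method in place of a real background worker. Note the window between `write()` and `flush()` where the cache and the backend disagree:

```python
from typing import Dict, Set


class WriteBackCache:
    """Writes hit the cache immediately; the backend store is updated later by flush()."""

    def __init__(self, store: Dict[str, str]) -> None:
        self.store = store                 # stand-in for a database
        self.cache: Dict[str, str] = {}
        self.dirty: Set[str] = set()       # keys written to the cache but not yet persisted

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value            # fast path: respond right after updating the cache
        self.dirty.add(key)

    def flush(self) -> None:
        """In a real system this would run asynchronously (timer, queue, worker)."""
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()
```

If the process crashed before `flush()` ran, the dirty writes would be lost, which is the inconsistency risk described above.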

Cache-Aside with Write-Back

- Write data to the cache first and then asynchronously write it to the backend.

- Suited to workloads with high write-to-read ratios that still require the cache and the backend to stay consistent (eventually).

Write allocate

- On a write miss, the data at the missed-write location is loaded into the cache, followed by a write-hit operation.

Write around (No-Write allocate)

- Data at the missed-write location is not loaded to cache, and is written directly to the backing store. Data is loaded into the cache on read misses only.
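The two write-miss policies differ only in whether a missed write lands in the cache. In this sketch, the hypothetical `write_allocate` flag switches between them, and every write also goes straight to the backing store (a write-through pairing, chosen for simplicity):

```python
from typing import Dict


class WritePolicyCache:
    """Contrasts write-allocate and write-around (no-write-allocate) on a write miss."""

    def __init__(self, store: Dict[str, str], write_allocate: bool) -> None:
        self.store = store                 # stand-in for the backing store
        self.cache: Dict[str, str] = {}
        self.write_allocate = write_allocate

    def write(self, key: str, value: str) -> None:
        if key in self.cache or self.write_allocate:
            # Write hit, or write miss under write-allocate: the entry ends up in the cache.
            self.cache[key] = value
        # Under write-around, a write miss skips the cache entirely.
        self.store[key] = value            # the backing store is always written in this sketch

    def read(self, key: str) -> str:
        if key not in self.cache:          # read misses load into the cache under both policies
            self.cache[key] = self.store[key]
        return self.cache[key]
```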

Refresh Cache

- The cache is periodically refreshed ("invalidated") with data from the backing store to ensure that the data in the cache is up-to-date.

- Good if data in the backing store changes frequently, but it can also introduce some latency in read operations.
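A periodic-refresh sketch, assuming a dictionary as the backing store and a hypothetical `refresh()` method that some scheduler (a cron job or timer, not shown) would call. Between refreshes, reads can return data up to one interval old:

```python
from typing import Dict


class PeriodicRefreshCache:
    """Reloads every cached key from the backing store when refresh() is called."""

    def __init__(self, store: Dict[str, str]) -> None:
        self.store = store                          # stand-in for the backing store
        self.cache: Dict[str, str] = dict(store)    # warm the cache up front

    def get(self, key: str) -> str:
        return self.cache[key]                      # reads are served only from the cache

    def refresh(self) -> None:
        # Intended to run on a schedule; it replaces the whole cache with a fresh copy.
        self.cache = dict(self.store)
```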

Cache-Aside with Refresh-Ahead

- The Cache-Aside with Refresh-Ahead pattern is a caching strategy that pre-fetches data into the cache before it's requested.

- Good for applications that have predictable access patterns.
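One common way to implement refresh-ahead is to reload an entry once it has used up most of its TTL, before it actually expires. This sketch does so synchronously for readability; `refresh_factor` and the injectable `clock` are assumptions for illustration:

```python
import time
from typing import Callable, Dict, Tuple


class RefreshAheadCache:
    """Reloads an entry before it expires, once it has lived past refresh_factor * ttl."""

    def __init__(self, loader: Callable[[str], str], ttl: float,
                 refresh_factor: float = 0.75,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.loader = loader                              # hypothetical backend read
        self.ttl = ttl
        self.refresh_after = ttl * refresh_factor
        self.clock = clock
        self.entries: Dict[str, Tuple[str, float]] = {}   # key -> (value, loaded_at)

    def _load(self, key: str) -> str:
        value = self.loader(key)
        self.entries[key] = (value, self.clock())
        return value

    def get(self, key: str) -> str:
        now = self.clock()
        entry = self.entries.get(key)
        if entry is None or now - entry[1] >= self.ttl:
            return self._load(key)                        # missing or expired: blocking load
        value, loaded_at = entry
        if now - loaded_at >= self.refresh_after:
            # Nearing expiry: refresh ahead of time. A production version would do
            # this in the background; here we reload now and return the current value.
            self._load(key)
        return value
```

With predictable access patterns, hot keys keep getting refreshed before they expire, so readers rarely pay for a blocking load.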

Caching with Replicas

- Replicating the cache store across multiple nodes for increased availability.

- Common with caching systems like Redis, which supports replication.

Cache-pinning

- Keeping selected, frequently accessed data in the cache even when it hasn't been used recently, by exempting it from eviction.

- Used to reduce the frequency of cache misses and improve cache hit rates.

- Good for applications that have a small number of frequently accessed data items.
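A cache-pinning sketch on top of a small LRU cache built with `collections.OrderedDict`: pinned keys are skipped during eviction. The class and parameter names are hypothetical:

```python
from collections import OrderedDict
from typing import Optional, Set


class PinnedLRUCache:
    """LRU cache where pinned keys are never evicted."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.data: "OrderedDict[str, str]" = OrderedDict()
        self.pinned: Set[str] = set()

    def put(self, key: str, value: str, pin: bool = False) -> None:
        self.data[key] = value
        self.data.move_to_end(key)         # mark as most recently used
        if pin:
            self.pinned.add(key)
        self._evict()

    def get(self, key: str) -> Optional[str]:
        if key not in self.data:
            return None                    # miss
        self.data.move_to_end(key)
        return self.data[key]

    def _evict(self) -> None:
        # Walk from least to most recently used, skipping pinned entries.
        # If everything is pinned, the cache is allowed to exceed its capacity.
        for key in list(self.data):
            if len(self.data) <= self.capacity:
                break
            if key not in self.pinned:
                del self.data[key]
```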

Time-to-live (TTL)

- Setting an expiration time for cached data, after which the data is invalidated and must be retrieved from the backend store.

- Helps ensure that cached data does not become stale, and frees up space in the cache.

- Useful for applications that have data with a short lifespan, such as news or weather data.
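A minimal TTL sketch: each entry stores its expiry time, and expired entries are dropped on read. The `clock` parameter is an assumption that makes expiry easy to test; by default it uses `time.monotonic`:

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Each entry expires ttl seconds after it was written."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock                                # injectable for testing
        self.entries: Dict[str, Tuple[str, float]] = {}   # key -> (value, expires_at)

    def set(self, key: str, value: str) -> None:
        self.entries[key] = (value, self.clock() + self.ttl)

    def get(self, key: str) -> Optional[str]:
        entry = self.entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.entries[key]          # expired: drop it to free space
            return None                    # the caller must fetch from the backend store
        return value
```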

Invalidating the Cache

- Invalidate the cache whenever the data in the underlying data source changes. For example, if you're caching data from a database, you could invalidate the relevant entries whenever a record is updated or deleted. This keeps the cache from serving outdated data.
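An invalidation sketch, assuming a hypothetical `ProductRepository` that owns both the data and its cache. Every update or delete evicts the matching cache entry, so the next read reloads from the source:

```python
from typing import Dict, Optional


class ProductRepository:
    """Hypothetical data-access layer that invalidates cached records on every change."""

    def __init__(self) -> None:
        self.db: Dict[int, str] = {}       # stand-in for a database table
        self.cache: Dict[int, str] = {}

    def get(self, product_id: int) -> Optional[str]:
        if product_id in self.cache:       # hit
            return self.cache[product_id]
        value = self.db.get(product_id)    # miss: read from the source
        if value is not None:
            self.cache[product_id] = value
        return value

    def update(self, product_id: int, name: str) -> None:
        self.db[product_id] = name
        self.cache.pop(product_id, None)   # invalidate: the next read reloads fresh data

    def delete(self, product_id: int) -> None:
        self.db.pop(product_id, None)
        self.cache.pop(product_id, None)   # invalidate so the deleted record isn't served
```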

These are the primary strategies, although there are others that may be used in special cases.

Different caching services and software may also implement additional performance enhancements; we'll check those out later.

We've looked at why caching is useful, some of its downsides, and some considerations for deciding what to cache and how to implement it.

We also examined some of the main caching patterns, which can be applied across all types of services.

In the next article, we'll start examining caching from the application/client and trace it through the cloud and into large-scale distributed systems.

If you liked this article, check out my previous article:

150+ Solutions Architect Metrics

