If you happen to be working on a large Angular application, you might encounter issues with the initial load time of your app.

If you use performance tools such as the new Performance Insights panel in Chrome DevTools or the Google Lighthouse extension, you might see that your TTI (Time to Interactive) is slow. Performance Insights is a very nice tool for exploring your network requests, layout shifts, rendering, TTI, FCP (First Contentful Paint), etc., and it gives you insights from Google on how to fix some of your main issues. A slow TTI is bad for users: if your application doesn't load fast, a lot of them lose interest.

There are a lot of solutions for these issues, and most of them are not even related to the front-end application. This article only covers Angular-specific performance tips for front-end engineers.

1. Lazy loading is your friend

If you are working on a large codebase, chances are that you have a lot of modules and your main bundle is very big. A big bundle means more JavaScript for the browser to download, parse and execute before the page can respond, so the page becomes interactive later.

The easiest way to fix this in Angular is to lazy load most (or all) of your routes. This way, when a user loads a page, the chunk served to them contains only what that route needs, not modules that aren't needed yet. Thanks to Ivy, Angular's tree shaking is great at removing the parts of the framework you don't use; your job is to also keep the parts of your own app that aren't needed for the initial load out of it.
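A minimal sketch of lazy-loaded routes, assuming NgModule-based features. The paths `./admin/admin.module` and `./reports/reports.module` and the classes `AdminModule` and `ReportsModule` are hypothetical placeholders for your own feature modules:

```typescript
import { Routes } from '@angular/router';

// Each `loadChildren` target is split into its own chunk. Nothing from these
// features is downloaded until the user navigates to the matching path.
export const routes: Routes = [
  {
    path: 'admin',
    // Hypothetical feature module
    loadChildren: () => import('./admin/admin.module').then((m) => m.AdminModule),
  },
  {
    path: 'reports',
    // Hypothetical feature module
    loadChildren: () => import('./reports/reports.module').then((m) => m.ReportsModule),
  },
];
```

Make sure these feature modules are not also imported eagerly in `AppModule`; otherwise their code ends up in the main bundle anyway.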

You can write a custom preloading strategy to start fetching the rest of the modules in the background (or only the commonly used ones, if your telemetry tells you which routes are accessed most), so they are already loaded when the user navigates away from the current page.
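Here is one way such a strategy could look. It is a sketch that preloads only the routes you flag with a custom `data: { preload: true }` entry and skips everything else; the `preload` key and the class name `SelectivePreloadingStrategy` are my own choices, not Angular built-ins. You would set the flag on the routes your telemetry shows are visited most.

```typescript
import { Injectable } from '@angular/core';
import { PreloadingStrategy, Route } from '@angular/router';
import { Observable, of } from 'rxjs';

// Preloads lazy routes that opt in with `data: { preload: true }`;
// all other lazy routes are fetched only when the user navigates to them.
@Injectable({ providedIn: 'root' })
export class SelectivePreloadingStrategy implements PreloadingStrategy {
  preload(route: Route, load: () => Observable<unknown>): Observable<unknown> {
    // Calling `load()` starts fetching the chunk; `of(null)` means "don't preload".
    return route.data?.['preload'] ? load() : of(null);
  }
}
```

You can register it with `RouterModule.forRoot(routes, { preloadingStrategy: SelectivePreloadingStrategy })`. The router only starts preloading after a navigation ends, so it doesn't compete with the first page load.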

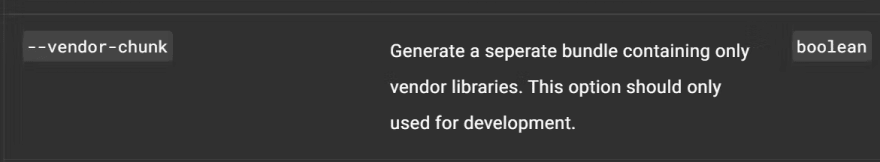

2. Split vendor chunk

The Angular team doesn't really recommend this for production in the official docs.

But hear me out: if a CDN serves your chunks too (for example, your deployment pipeline publishes each build to a CDN), the third-party libraries usually don't change between two deploys, so the vendor chunk can still be served from the browser cache as long as its hashed file name stays the same, which is a bit faster. Your main bundle will then contain only your application logic, so it will be smaller.

It might not be for you; here is a great discussion on the subject that I found on Stack Overflow.

3. APP_INITIALIZER should not be very heavy

If you use APP_INITIALIZER, you know that Angular waits for every initializer registered with this token to finish (including any Promise it returns) during the application bootstrap phase.

This might tempt you to put a lot of async requests in there, possibly depending on each other, because they are required for the app's global state. You should avoid this: Angular can't finish bootstrapping until all of them complete, so time to interactive gets worse for your users.

If your application already relies on this injection token and it's very hard to refactor, you might want to use a caching mechanism: make the request only when there is nothing in the cache; otherwise, serve the cached data and start a new request in the background to update the cache without blocking Angular. Depending on your use case, this may be easier than refactoring. The only catch is that if you initialize app state with this data, you also need to patch that state after the background request completes.
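A minimal sketch of that caching idea, assuming the startup data is JSON-serializable and that something like `localStorage` is acceptable as the cache. `loadStartupConfig`, `KeyValueStore` and the `onRefresh` callback are hypothetical names, not Angular APIs; `onRefresh` is where you would patch your app state once the background request finishes.

```typescript
// Anything with getItem/setItem works; window.localStorage fits this shape.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Cache-first loader for startup data.
// - Cache hit: resolve immediately with the cached copy, refresh in the background.
// - Cache miss (e.g. first visit): wait for the request, then cache the result.
async function loadStartupConfig<T>(
  store: KeyValueStore,
  key: string,
  fetcher: () => Promise<T>,
  onRefresh: (fresh: T) => void = () => {},
): Promise<T> {
  const cached = store.getItem(key);
  if (cached !== null) {
    // Deliberately not awaited, so bootstrap does not wait for the network.
    fetcher()
      .then((fresh) => {
        store.setItem(key, JSON.stringify(fresh));
        onRefresh(fresh); // patch app state with the fresh data
      })
      .catch(() => {
        // Keep the cached copy if the refresh (or the state patch) fails.
      });
    return JSON.parse(cached) as T;
  }
  const fresh = await fetcher();
  store.setItem(key, JSON.stringify(fresh));
  return fresh;
}

// Possible wiring in an NgModule (HttpClient, rxjs firstValueFrom and the
// '/api/config' endpoint are assumptions about your setup):
// providers: [{
//   provide: APP_INITIALIZER,
//   multi: true,
//   deps: [HttpClient],
//   useFactory: (http: HttpClient) => () =>
//     loadStartupConfig(localStorage, 'app-config', () => firstValueFrom(http.get('/api/config'))),
// }]
```

Only the very first visit pays for the network round trip during bootstrap; later visits start from the cached copy.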

4. Parallelize and cache startup API requests

A simple way to spot problems is to check the Network tab while your page initially loads. Look at the API requests: maybe one request is only triggered after another one finishes, and so on. Check whether any of them can run in parallel to shorten the waterfall (if those requests are blocking the content).
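To illustrate, here is a sketch with three hypothetical startup calls. The user and the feature flags are independent, so they can start together, while the settings request really needs the user id and has to wait. `loadStartupData` and its three fetcher parameters are made-up names; with Angular's HttpClient observables, RxJS `forkJoin` plays the same role as `Promise.all`.

```typescript
interface User {
  id: string;
}

// Hypothetical startup requests, passed in as functions so the example
// doesn't depend on a particular HTTP client.
async function loadStartupData<S, F>(
  getUser: () => Promise<User>,
  getFlags: () => Promise<F>, // independent of the user
  getSettings: (userId: string) => Promise<S>, // needs the user id
): Promise<{ user: User; flags: F; settings: S }> {
  // A waterfall would be user -> flags -> settings (three round trips in a row).
  // Starting the two independent requests together makes it two steps deep.
  const [user, flags] = await Promise.all([getUser(), getFlags()]);
  const settings = await getSettings(user.id);
  return { user, flags, settings };
}
```

The total wait drops from the sum of three latencies to roughly the slower of user/flags plus settings.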

You might want to delegate serving static assets to a service worker. And if you have large API responses that rarely change, you can configure the service worker to cache those as well (this article might help you get started).

5. Analyze your webpack bundle

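There is a nice npm package for this, webpack-bundle-analyzer. Build your Angular application in production mode with the stats-json option (for example `ng build --configuration production --stats-json`), then pass the location of the generated stats.json file to the package (for example `npx webpack-bundle-analyzer dist/<your-project>/stats.json`; the exact path depends on your project's output path).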

After you run it, a browser tab opens with a visualization of your webpack bundle. You can see how much of it is in main.js, how much is in vendor.js (if you split the vendor chunk), and how much is lazy loaded, which lets you track your progress in reducing the bundle size. You can also spot large pieces of code in a chunk and decide to import them on demand, so they are no longer part of the main chunks.
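One way to load a heavy dependency on demand is a dynamic `import()`, which webpack (and therefore the Angular CLI) splits into a separate chunk. The sketch below wraps it so the chunk is requested only once, on first use, and can be retried if the request fails. `lazyModule` is a hypothetical helper name, and `chart.js` in the comment is only an example of a large library.

```typescript
// Returns a getter that calls `loader` on first use and reuses the same
// promise afterwards. If loading fails, the cached promise is cleared so the
// next call retries instead of failing forever.
function lazyModule<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = loader().catch((err: unknown) => {
        pending = undefined;
        throw err;
      });
    }
    return pending;
  };
}

// Example (library chosen for illustration): instead of a static
// `import { Chart } from 'chart.js'`, which lands in the main chunk:
// const loadCharts = lazyModule(() => import('chart.js'));
// ...later, e.g. when the user opens the charts panel:
// const { Chart } = await loadCharts();
```

Re-run the analyzer afterwards to confirm the library moved out of main.js into its own chunk.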

6. Use telemetry to get actual prod performance

On your local machine, Lighthouse or Performance Insights might give you fantastic results. The actual experience of your users in production can be very different from what you see, for many reasons: network speed, differences in device performance, and so on.

That's why you might want to add performance telemetry to your application. A good telemetry solution is Azure Application Insights; here's a great article on integrating it with your Angular application.

Once your configuration is ready, you can track events in Application Insights. The only thing left is to actually measure performance, which you can do with the browser's Performance API. PerformancePaintTiming (first paint and first contentful paint) may be enough for your needs. I'll work on an article with a concrete example of how to track performance in a simple Angular app and query Application Insights to see the actual metrics (and link it here). In the meantime, here is another good article that shows how to track page load times for all of your routes and how to query them.
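A minimal sketch of the PerformancePaintTiming approach: a `PerformanceObserver` picks up the browser's `first-paint` and `first-contentful-paint` entries and forwards them to whatever telemetry client you use. `MetricSink`, `reportPaintEntries` and `observePaintTimings` are hypothetical names, and the sink is kept generic so it doesn't assume a particular SDK.

```typescript
// Where metrics go. With the Application Insights JavaScript SDK this could be
// (name, value) => appInsights.trackMetric({ name, average: value }),
// assuming an already initialized `appInsights` instance.
type MetricSink = (name: string, valueMs: number) => void;

// The only fields we read; PerformancePaintTiming entries have both.
interface TimingEntry {
  name: string;
  startTime: number;
}

// Forwards paint entries (milliseconds since navigation start) to the sink.
function reportPaintEntries(entries: readonly TimingEntry[], sink: MetricSink): void {
  for (const entry of entries) {
    if (entry.name === 'first-paint' || entry.name === 'first-contentful-paint') {
      sink(entry.name, Math.round(entry.startTime));
    }
  }
}

// Browser wiring: `buffered: true` also delivers paint entries that were
// recorded before the observer was created.
function observePaintTimings(sink: MetricSink): void {
  if (typeof PerformanceObserver === 'undefined') return; // e.g. server-side rendering
  const observer = new PerformanceObserver((list) => reportPaintEntries(list.getEntries(), sink));
  observer.observe({ type: 'paint', buffered: true });
}
```

Calling `observePaintTimings(...)` once in main.ts is enough; keeping the reporting logic separate from the observer also makes it easy to unit test.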

In conclusion, there are many reasons why your app might be slow for some of your users. A lot of these issues are not really the responsibility of front-end developers (slow API requests, bad server configuration, poor scalability, etc.), but in large enterprise applications there is often plenty that can be improved in the front-end application itself to ensure good load times.

If you have any suggestions or corrections for any of the steps, please let me know. I think it's important to learn from each other.
