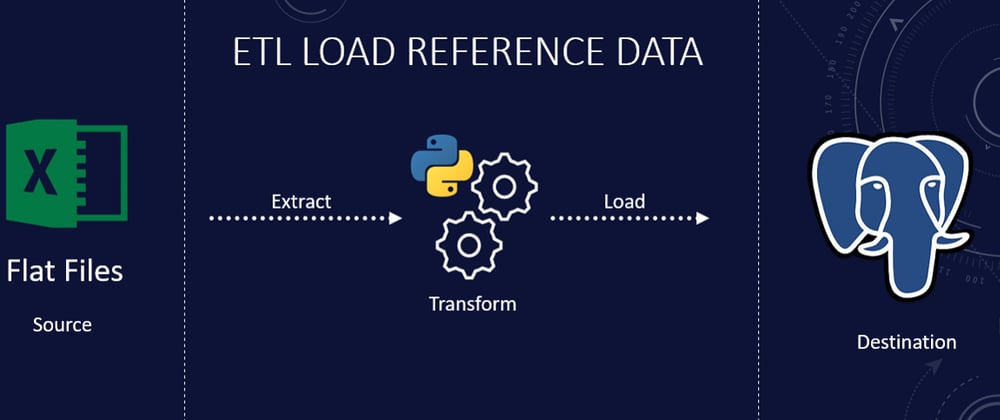

Are you looking to take your ETL (extract, transform, load) pipeline to the next level? If so, you've come to the right place. In this article, I'll show you how to optimize your ETL pipeline for maximum efficiency. We'll cover a variety of topics, including data quality, data transformation, and data loading. By the end of this article, you'll have a practical checklist for optimizing your ETL pipeline and getting the most out of your data.

But of course, ETL pipelines are COMPLEX: the way they are built changes massively from use case to use case, so I'll stick to a "high level" approach here. If you would like me to give examples for specific technologies, let me know in the comments :D

1. The first step to optimizing your ETL pipeline is understanding your data.

Once you have a good understanding of your data, you can start to optimize your ETL pipeline. There are a few key areas to focus on:

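- Extracting data from sources: This is the first step in your ETL pipeline, and it can become a bottleneck if not done efficiently. Extract only the data you need: select only the columns you actually use and, where the source allows it, pull only the rows that are new or changed since the last run (incremental extraction) instead of re-reading everything each time.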

- Transforming data: This is where you convert the data into the format your downstream applications need. Again, efficiency is key: only do the transformations that are actually necessary, and filter out unneeded rows as early as possible so that later steps process less data.

- Loading data into the destination: The final step in your pipeline is loading the data into its destination. This step can also become a bottleneck if not done efficiently. Batch your writes appropriately (rather than inserting one row at a time) and use a parallel loading approach if possible.
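To make the extraction and transformation advice more concrete, here is a minimal Python sketch of incremental extraction using a "watermark", i.e. the latest `updated_at` value seen by the previous run. It assumes a hypothetical source table `orders` whose `updated_at` column holds ISO-8601 text, and it uses an in-memory SQLite database as a stand-in for a real source; `extract_incremental` and `transform` are illustrative names, not part of any library.

```python
import sqlite3
from collections.abc import Iterable, Iterator


def extract_incremental(conn: sqlite3.Connection, last_watermark: str) -> tuple[list[tuple], str]:
    """Fetch only rows changed since the previous run, and only the needed columns.

    Assumes a hypothetical `orders` table whose `updated_at` column holds
    ISO-8601 text, so string comparison follows chronological order.
    """
    rows = conn.execute(
        "SELECT id, amount, status, updated_at FROM orders "  # skip unused columns like `notes`
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The new watermark is the latest updated_at seen; unchanged if nothing new arrived.
    new_watermark = rows[-1][3] if rows else last_watermark
    return rows, new_watermark


def transform(rows: Iterable[tuple]) -> Iterator[tuple[int, int]]:
    """Drop cancelled orders first, then convert amounts to integer cents."""
    for order_id, amount, status, _updated_at in rows:
        if status == "cancelled":
            continue  # filter early so later steps handle less data
        yield order_id, round(amount * 100)


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute(
        "CREATE TABLE orders (id INTEGER, amount REAL, status TEXT, updated_at TEXT, notes TEXT)"
    )
    src.executemany(
        "INSERT INTO orders VALUES (?, ?, ?, ?, ?)",
        [
            (1, 10.50, "paid", "2024-01-01T09:00:00", "long free-text note"),
            (2, 99.99, "cancelled", "2024-01-02T09:00:00", "long free-text note"),
            (3, 5.25, "paid", "2024-01-03T09:00:00", "long free-text note"),
        ],
    )
    rows, watermark = extract_incremental(src, "2024-01-01T12:00:00")
    print(list(transform(rows)), watermark)  # [(3, 525)] 2024-01-03T09:00:00
```

In a real pipeline you would persist the returned watermark (for example in a small state table) and pass it into the next run. The strict `>` comparison assumes rows are never written later with a timestamp equal to the stored watermark; many real sources need a tie-breaker column to be fully safe.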

2. The second step is to choose the right tools for the job.

When choosing data transformation tools, there are a few things to keep in mind. First, you'll need to decide whether you want a graphical or a code-based tool. Code-based tools offer more flexibility and are often more powerful, but they can be harder to use. Graphical tools are usually easier to use, but they may not offer all the features you need. Second, you'll need to decide whether you want a tool that supports multiple data formats or one that specializes in a particular format. Support for multiple formats is useful if you work with different types of data, but it can also make the tool more complicated to use. Third, consider whether you need a tool that supports real-time data transformation or one that batch-processes data. Real-time transformation is useful for projects that require up-to-date data, but it can also be more resource-intensive.
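One way to keep the batch vs. real-time decision open is to write each transformation as a function of a single record: the same logic can then run over a whole extract in a batch job, or over records one at a time as they arrive. Here is a minimal Python sketch of that idea; the field names (`email`, `country`) and function names are hypothetical.

```python
from collections.abc import Iterable, Iterator


def clean_record(record: dict) -> dict:
    """Per-record transformation: normalize the email, default a missing country."""
    return {
        "email": record["email"].strip().lower(),
        "country": record.get("country") or "unknown",
    }


def transform_batch(records: list[dict]) -> list[dict]:
    """Batch mode: transform a complete extract in one go (e.g. a nightly job)."""
    return [clean_record(r) for r in records]


def transform_stream(records: Iterable[dict]) -> Iterator[dict]:
    """Streaming mode: emit each cleaned record as soon as it arrives."""
    for record in records:
        yield clean_record(record)


if __name__ == "__main__":
    extract = [{"email": " Ann@Example.com ", "country": "DE"}, {"email": "bob@example.com"}]
    print(transform_batch(extract))
    # The streaming version produces the same records, just one at a time.
    print(list(transform_stream(iter(extract))) == transform_batch(extract))  # True
```

Because `clean_record` holds all the business logic, switching between a batch tool and a streaming tool mostly means changing the thin wrapper around it.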

When choosing a data loading tool, the first thing to consider is the type of target system you're using. If you're loading data into a relational database, you'll need a tool that supports SQL. If you're loading data into a non-relational (NoSQL) database, you'll need a tool that supports that database's query language or API. Second, consider the performance requirements of your project: if you need to load large volumes quickly, look for a tool that supports bulk or batched loading rather than row-by-row inserts. Third, consider the scalability requirements of your project: if your data volumes are expected to grow over time, you'll need a tool that can scale accordingly.
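On the loading side, much of the performance difference comes from how rows are written. Below is a minimal Python sketch of batched loading: rows are grouped into fixed-size chunks, and each chunk is written with `executemany` inside a single transaction. SQLite stands in for the target system, and the `fact_orders` table and the `batch_size` default are assumptions for illustration. Many databases also offer dedicated bulk-load commands (such as PostgreSQL's `COPY`) that are usually faster still.

```python
import sqlite3
from collections.abc import Iterable, Iterator
from itertools import islice


def chunked(rows: Iterable[tuple], size: int) -> Iterator[list[tuple]]:
    """Yield lists of at most `size` rows so memory use stays bounded."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def load_batched(conn: sqlite3.Connection, rows: Iterable[tuple], batch_size: int = 1000) -> int:
    """Insert rows chunk by chunk, one transaction per chunk, instead of row by row."""
    loaded = 0
    for chunk in chunked(rows, batch_size):
        with conn:  # commits the chunk on success, rolls it back on error
            conn.executemany(
                "INSERT INTO fact_orders (id, amount_cents) VALUES (?, ?)", chunk
            )
        loaded += len(chunk)
    return loaded


if __name__ == "__main__":
    dest = sqlite3.connect(":memory:")
    dest.execute("CREATE TABLE fact_orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
    rows = ((i, i * 100) for i in range(2500))  # a generator: never fully in memory
    print(load_batched(dest, rows, batch_size=1000))  # 2500
```

Because the input is consumed chunk by chunk, memory use depends on `batch_size` rather than on the total data volume, which is what lets this pattern scale. The right batch size depends on the target system and is worth measuring rather than guessing.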

Choosing the right tools for your ETL pipeline is an important step in optimizing your workflow. The right tools will depend on the specific needs of your project, but there are a few general considerations that you should keep in mind. First, consider the type of data source you're working with and choose a tool accordingly. Second, think about the type of transformation you need to perform and choose a tool that offers the features you need. Third, consider the performance and scalability requirements of your project and choose a tool that can meet those needs. By taking the time to choose the right tools for your specific needs, you can optimize your ETL pipeline and improve your workflow.

3. The third step is to design an efficient workflow that meets your needs.

When designing your workflow, there are a few key factors to keep in mind. First, you need to understand what data you need to extract. This includes understanding the source data format and the target data format. Additionally, you need to understand how often you need to extract the data. This will help you determine the frequency of your ETL process. Finally, you need to consider what tools and processes you will use to load and transform the data. By understanding these factors, you can design a workflow that is optimized for your specific needs.
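It can help to write these design decisions down explicitly instead of leaving them implicit in scripts. The sketch below records them in a small Python dataclass and uses the run frequency to decide whether a run is due. `PipelineSpec` and `is_due` are hypothetical names; in practice, a scheduler such as cron or a workflow orchestrator usually owns the scheduling logic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class PipelineSpec:
    """Hypothetical record of the workflow design decisions for one pipeline."""
    name: str
    source: str           # where the data is extracted from
    source_format: str    # e.g. "csv", "json", "database table"
    target: str           # where the data is loaded to
    target_format: str    # e.g. "parquet", "warehouse table"
    frequency: timedelta  # how often the pipeline should run

    def is_due(self, last_run: Optional[datetime], now: datetime) -> bool:
        """Due if it has never run, or if `frequency` has elapsed since the last run."""
        return last_run is None or now - last_run >= self.frequency


if __name__ == "__main__":
    orders = PipelineSpec(
        name="daily_orders",
        source="orders API",
        source_format="json",
        target="analytics.orders",
        target_format="warehouse table",
        frequency=timedelta(days=1),
    )
    now = datetime(2024, 1, 2, 6, 0)
    print(orders.is_due(datetime(2024, 1, 1, 6, 0), now))   # True
    print(orders.is_due(datetime(2024, 1, 1, 12, 0), now))  # False
```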

Once you have designed your workflow, the next step is to implement it. This includes setting up the necessary tools and processes, as well as testing it to ensure that it is running correctly. By taking the time to design and implement an efficient workflow, you can ensure that your ETL pipeline is running at peak efficiency.
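For the testing part, a cheap starting point is to unit-test each transformation step on small, hand-written inputs before running it against real data. Here is a minimal sketch using Python's built-in `unittest`; `normalize_order` is a hypothetical transform, not something from a specific tool.

```python
import unittest


def normalize_order(raw: dict) -> dict:
    """Hypothetical transform step: parse id and amount, standardize the status."""
    return {
        "id": int(raw["id"]),
        # round() absorbs floating-point error from the multiplication
        "amount_cents": round(float(raw["amount"]) * 100),
        "status": raw["status"].strip().lower(),
    }


class NormalizeOrderTest(unittest.TestCase):
    def test_parses_amount_and_status(self):
        out = normalize_order({"id": "7", "amount": "12.30", "status": " PAID "})
        self.assertEqual(out, {"id": 7, "amount_cents": 1230, "status": "paid"})

    def test_missing_field_fails_loudly(self):
        # A missing required field should raise, not silently load bad data.
        with self.assertRaises(KeyError):
            normalize_order({"id": "8", "status": "paid"})
```

If you save this as, say, `test_transform.py` (a hypothetical file name), you can run it with `python -m unittest test_transform`.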

4. Once you have an efficient workflow, the fourth step is to monitor and optimize it regularly.

There are a few key metrics that you should keep track of when monitoring your pipeline:

- ETL run time: This is the amount of time it takes for your pipeline to complete one full run. Ideally, you want this number to be as low as possible.

- Data quality: This is a measure of how accurate and complete your data is. You want to make sure that your data is of high quality so that it can be used effectively for decision-making.

- Resource usage: This refers to the amount of resources (such as CPU and memory) that your pipeline uses. You want to keep an eye on this metric to make sure that your pipeline is not using more resources than necessary.

By monitoring these metrics, you can get a good sense of how your pipeline is performing and identify areas where improvements can be made. For example, if you notice that your ETL run time is increasing, you may need to investigate why and make changes to improve efficiency. Similarly, if you see a decline in data quality, you may need to revisit your data cleansing process.

Regular monitoring and optimization of your ETL pipeline is essential for keeping it running smoothly and efficiently. By paying attention to key metrics and making adjustments as needed, you can ensure that your pipeline continues to perform at its best.
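The three metrics above (run time, data quality, resource usage) can be collected with nothing more than Python's standard library. This sketch times each stage with `time.perf_counter`, computes a row count and a null rate as simple data quality checks, and records peak memory with `tracemalloc`. The stage bodies, the field names (`email`, `status`), and `run_pipeline` itself are placeholders. Note that `tracemalloc` only sees memory allocated by Python objects, so process-level CPU and memory figures usually come from your OS or orchestration tooling.

```python
import time
import tracemalloc
from contextlib import contextmanager


@contextmanager
def timed(metrics: dict, stage: str):
    """Store the wall-clock seconds spent inside the block under '<stage>_seconds'."""
    start = time.perf_counter()
    try:
        yield
    finally:
        metrics[f"{stage}_seconds"] = time.perf_counter() - start


def null_rate(rows: list[dict], field: str) -> float:
    """Fraction of rows where `field` is missing or None (a simple completeness check)."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(field) is None) / len(rows)


def run_pipeline(source_rows: list[dict]) -> tuple[list[dict], dict]:
    """Run placeholder extract/transform stages and return (rows, metrics)."""
    metrics: dict = {}
    tracemalloc.start()
    try:
        with timed(metrics, "extract"):
            rows = list(source_rows)  # placeholder for a real extract step
        with timed(metrics, "transform"):
            rows = [r for r in rows if r.get("status") != "cancelled"]
        # Data quality: volume and completeness of a required field.
        metrics["row_count"] = len(rows)
        metrics["email_null_rate"] = null_rate(rows, "email")
        # Resource usage: peak memory allocated by Python while tracing was on.
        _, peak_bytes = tracemalloc.get_traced_memory()
        metrics["peak_memory_mb"] = peak_bytes / 1_000_000
    finally:
        tracemalloc.stop()
    return rows, metrics


if __name__ == "__main__":
    rows, m = run_pipeline([
        {"email": "a@example.com", "status": "paid"},
        {"email": None, "status": "paid"},
        {"email": "c@example.com", "status": "cancelled"},
    ])
    print(m["row_count"], m["email_null_rate"])  # 2 0.5
```

Storing each run's `metrics` dictionary (in a table, a log, or a monitoring system) is what makes trends visible, such as run time creeping up or the null rate rising from one run to the next.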

There you have it! You now have a high-level framework for optimizing your ETL pipeline for efficiency. Congratulations on taking the first step toward becoming an efficiency expert!

Star our GitHub repo and join the discussion in our Discord channel!

Test your API for free now at BLST!
