CI jobs typically execute in a freshly spun-up container, with no leftover state from previous runs. This helps ensure that builds are repeatable. However, it can also make them much slower than they need to be. Each run has to repeat a lot of time-consuming setup work, such as downloading and installing tools, and cannot reuse work done by previous runs the way you can when running build commands iteratively on your desktop.

Therefore, most CI/CD providers offer a caching facility that speeds up runs by selectively restoring state from previous runs. But because the details of your build workflows are opaque to the CI provider, it can only cache at a very coarse-grained level, and this limits how much benefit you can get from it. Read on to see why!

How CI Caches Work

These built-in caching facilities all tend to work the same way:

- You manually associate a directory in the workspace with a cache key that you construct. Typically the cache key is computed from the inputs to the process that populated the directory.

- When a CI job completes successfully, it uploads the contents of the directory to the cache under that key.

- A subsequent CI run can provide the same cache key, and the contents of the corresponding directory will be restored.
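To make the save/restore cycle concrete, here is a minimal Python sketch of the mechanism described above. It assumes a plain in-memory dict as a stand-in for the provider's cache storage, and the names save_directory and restore_directory are hypothetical; real CI systems expose this through their own config syntax rather than a Python API.

```python
import io
import tarfile
from pathlib import Path

# Hypothetical stand-in for the CI provider's cache storage:
# maps a cache key to a gzipped tarball of a directory's contents.
CacheStore = dict[str, bytes]


def save_directory(store: CacheStore, key: str, directory: Path) -> None:
    """Archive `directory` and store it under `key` (done after a successful job)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for child in sorted(directory.iterdir()):
            # Store paths relative to the directory root; tar.add recurses into subdirectories.
            tar.add(str(child), arcname=child.name)
    store[key] = buf.getvalue()


def restore_directory(store: CacheStore, key: str, directory: Path) -> bool:
    """Unpack the archive stored under `key` into `directory`; return False on a miss."""
    data = store.get(key)
    if data is None:
        return False
    directory.mkdir(parents=True, exist_ok=True)
    with tarfile.open(fileobj=io.BytesIO(data), mode="r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions support extraction filters; "data" rejects unsafe paths.
            tar.extractall(directory, filter="data")
        else:
            tar.extractall(directory)
    return True
```

Note that the whole directory is archived and transferred as one unit, regardless of how much of it a given run actually needs.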

For example, say you want to cache a directory containing downloaded Python wheels[1], so that subsequent runs don’t have to download them again. To do so, you could construct a cache key based on a hash of your requirements.txt file[2], say, wheel-downloads-a53be93cc8, and cache the wheel directory against that key. When the wheel downloading process completes successfully, the CI system uploads the contents of the wheel directory and references them with that key. On the next CI run, if requirements.txt hasn’t changed, the same cache key, wheel-downloads-a53be93cc8, will be computed from it, and so that version of the wheel directory will be restored.
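A cache key like the one above could be computed along these lines. This is a sketch: the text doesn't say which hash function is used, so SHA-256 truncated to 10 hex characters is an assumption, and wheel_cache_key is a hypothetical helper name. In a real pipeline you would typically use your CI provider's own construct instead, such as the hashFiles() expression in GitHub Actions or the {{ checksum "requirements.txt" }} template in CircleCI.

```python
import hashlib
from pathlib import Path


def wheel_cache_key(requirements: Path, prefix: str = "wheel-downloads-", length: int = 10) -> str:
    """Derive a key like 'wheel-downloads-<hex>' from the bytes of requirements.txt."""
    digest = hashlib.sha256(requirements.read_bytes()).hexdigest()
    # Truncate for readability, mirroring the 10-character hashes in the example.
    return prefix + digest[:length]
```

Because the key depends only on the file's bytes, any edit to requirements.txt, even a reordered line or an added comment, produces a new key and therefore a cache miss.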

Partial cache restore

But what happens if requirements.txt does change, even in a minor way? Do we have to download all the wheels again because some small edit caused a cache miss?

To deal with this situation, some CI providers, including GitHub Actions and CircleCI, support so-called “partial cache restore”: a CI run can provide a list of key prefixes in a restore request. The system looks for cache entries whose keys start with the first prefix and restores the most recent one. If there are none, it tries the second prefix, and so on. This lets you fall back to restoring an earlier version of the state, which may be better than nothing.

In our example, let’s say that after the change to requirements.txt the new cache key is computed as wheel-downloads-80b5cfa6cb. When restoring the wheel directory, you can provide these prefixes: [wheel-downloads-80b5cfa6cb, wheel-downloads-]. If there is an exact match on the first key, great. If not, the system restores the most recent content with the wheel-downloads- prefix: in this case, our previous wheel-downloads-a53be93cc8, which was created from a slightly earlier version of requirements.txt. In typical cases this is likely to contain many of the wheels you need, even if it’s not the full set, so you get partial benefit.
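The prefix-fallback lookup can be modeled in a few lines. In this sketch, CacheEntry and restore_with_prefixes are hypothetical names, each entry is assumed to record its upload time, and the exact matching rules of any particular provider (for example, how an exact key match is prioritized) may differ.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CacheEntry:
    key: str
    created_at: float  # assumed: a timestamp recorded when the entry was uploaded
    content: bytes


def restore_with_prefixes(entries: list[CacheEntry], prefixes: list[str]) -> CacheEntry | None:
    """Try each prefix in order; return the most recent entry whose key starts with it."""
    for prefix in prefixes:
        matches = [e for e in entries if e.key.startswith(prefix)]
        if matches:
            # Among all matches for this prefix, prefer the newest upload.
            return max(matches, key=lambda e: e.created_at)
    return None  # no prefix matched: a full cache miss
```

With the scenario above, asking for [wheel-downloads-80b5cfa6cb, wheel-downloads-] when only older wheel-downloads- entries exist returns the most recently uploaded of them.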

The limitations of CI caching

CI directory caching can help with the “cold start” problem, but unfortunately it suffers from some major limitations.

- Uploading and downloading entire directories takes time. These directories tend to grow without bound: e.g., new wheel files get added, but old ones don’t get cleaned up. So at some point, caching and restoring the wheel directory becomes more time-consuming than re-downloading the wheels from scratch! Trimming the cache to prevent this adds even more complexity.

- You have to manually generate a cache key. This can be complicated because you have to reason about which inputs could affect the content of the directory, and then fingerprint those inputs efficiently, using only the config constructs the CI system makes available.

- Not all build state is neatly sequestered into a small number of separable, cacheable directories. For example, a Java compiler might be configured to write .class files into a large number of local output directories, rather than one central one.
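The second limitation is easier to appreciate with an example. Suppose, hypothetically, that the wheel directory's contents depend not only on requirements.txt but also on a constraints file and on the Python version and OS of the runner. The key then has to fingerprint all of these, and forgetting any one of them risks restoring wheels built for a different interpreter or platform. The helper names below (composite_cache_key, runner_environment) and the choice of inputs are assumptions for illustration.

```python
import hashlib
import platform
import sys
from pathlib import Path


def runner_environment() -> dict[str, str]:
    """Environment facts that (by assumption) affect which wheels get downloaded."""
    return {"python": platform.python_version(), "platform": sys.platform}


def composite_cache_key(prefix: str, input_files: list[Path], extra: dict[str, str]) -> str:
    """Fingerprint several input files plus extra facts into one cache key."""
    h = hashlib.sha256()
    # Sort so the key doesn't depend on the order the inputs were listed in.
    for path in sorted(input_files):
        h.update(path.as_posix().encode())
        h.update(b"\0")
        # Hash each file separately so file contents can't blur into the next name.
        h.update(hashlib.sha256(path.read_bytes()).digest())
    for name in sorted(extra):
        h.update(f"{name}={extra[name]}\0".encode())
    return prefix + h.hexdigest()[:10]
```

Every extra input you add makes the key more correct but also causes more misses, which is exactly the trade-off you have to reason about by hand.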

Modern build systems to the rescue

A more powerful solution to the CI performance problem is to use a modern build system that supports remote caching.

A build system is a program that orchestrates the execution of underlying tools such as compilers, code generators, test runners, linters and so on. Examples of build systems include the venerable Make, the JVM-centric Ant, Maven and Gradle, and newer systems such as Pants and Bazel (full disclosure: I am one of the maintainers of Pants).

A system such as Pants takes simple command-line arguments like ./pants test path/to/some/tests and figures out the entire workflow that needs to occur to satisfy this request. This includes: breaking the work down into individual processes (such as “resolve 3rdparty dependencies”, “generate code”, “compile each source file”, “execute the test runner on each test file”), downloading, installing and configuring all the underlying tools (such as the dependency resolver, code generator, compiler, test runner), and executing them in the right order, feeding outputs into inputs as needed. And because the system understands the build workflow in a very fine-grained way, it can utilize caching and concurrency effectively to speed up builds.
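The “executing them in the right order” part amounts to scheduling a dependency graph of processes. As a rough illustration only (this is not how Pants is implemented), Python's standard-library graphlib module (3.9+) can group a hypothetical process graph into batches whose members don't depend on each other and so could run concurrently. The process names below are made up for the example.

```python
from graphlib import TopologicalSorter

# Hypothetical process graph: each process maps to the set of processes it depends on.
PROCESS_GRAPH: dict[str, set[str]] = {
    "resolve third-party deps": set(),
    "generate code": set(),
    "compile src_a": {"generate code", "resolve third-party deps"},
    "compile src_b": {"resolve third-party deps"},
    "run test_a": {"compile src_a"},
    "run test_b": {"compile src_b"},
}


def schedule_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group processes into batches; everything in a batch could run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # also raises CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies of these are done
        batches.append(ready)
        sorter.done(*ready)  # mark them finished, unlocking their dependents
    return batches
```

Here the first batch contains the two processes with no dependencies, the second the two compiles, and the third the two test runs.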

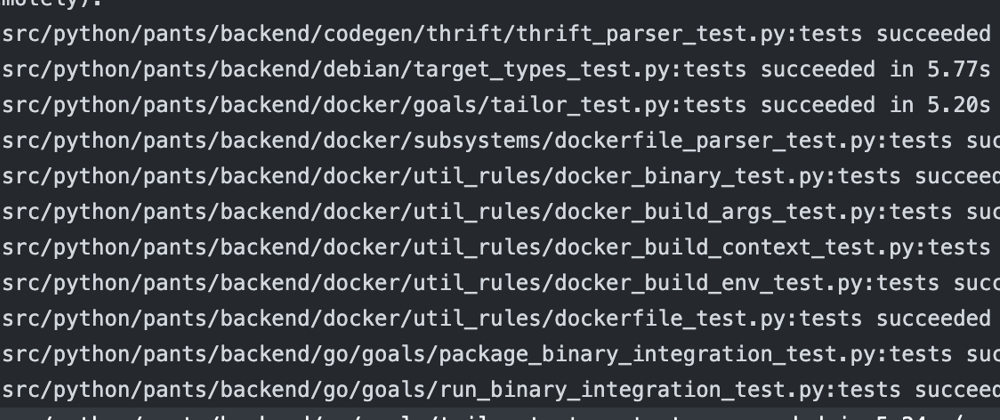

In the CI case in particular, a new-generation build system like Pants can be configured to query a remote cache for fine-grained units of work, typically the result of a single process. So instead of laboriously uploading and downloading huge directories, the system makes a large number of small cache requests as it works, fetching only the results it actually needs.
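Fine-grained caching can be sketched by keying each process on everything that determines its output: its command line, the content hashes of its inputs, and its environment. In this sketch the Process class, run_cached, and the dict standing in for the remote cache are all hypothetical; Pants and Bazel each define their own, more thorough key formats and remote cache protocols.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Process:
    """A single unit of work, e.g. running the test runner on one test file."""
    argv: tuple[str, ...]
    # (path, content hash) pairs for every input file the process reads.
    input_digests: tuple[tuple[str, str], ...]
    env: tuple[tuple[str, str], ...] = ()

    def cache_key(self) -> str:
        # Canonical JSON so the same process always yields the same key.
        payload = json.dumps(
            {"argv": list(self.argv), "inputs": sorted(self.input_digests), "env": sorted(self.env)},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


def run_cached(
    process: Process,
    remote_cache: dict[str, bytes],
    execute: Callable[[Process], bytes],
) -> tuple[bytes, bool]:
    """Return (output, cache_hit). Only executes the process on a cache miss."""
    key = process.cache_key()
    if key in remote_cache:
        return remote_cache[key], True
    output = execute(process)
    remote_cache[key] = output  # upload the result so later runs can reuse it
    return output, False
```

If two test processes run once and then one test file's content hash changes, a second run executes only the changed process; the other is served from the cache.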

In practice, we see huge speedups with this kind of remote caching. For example, a test job that takes 40 minutes with no cache might only take 1-2 minutes if a small change has affected only a handful of tests.

To summarize, naive, coarse-grained CI caching is a good start to reducing build times, but to get truly great performance in CI you need a build system designed for remote caching.

PS Feel free to reach out on Slack if you want to learn more about Pants and its support for Python, Go, JVM, Shell and more.

[1] A wheel is a binary distribution format for Python code.

[2] `requirements.txt` is a file specifying a set of third-party Python packages that our code depends on.
