There are many database services in AWS: the purpose-built ones with massive scale-out possibilities (DynamoDB being the most popular), the RDS ones with active-passive streaming replication but all SQL features, and Aurora, with its distributed storage, to increase availability and scale out the read workloads. But the power of the cloud is also to let you run what you want on EC2/EBS, where you can install any database.

A distributed SQL database can provide higher availability and elasticity by sharding the data and distributing both reads and writes across as many nodes as you add. YugabyteDB is open source and, being PostgreSQL compatible, can run the same applications that you run on self-managed community PostgreSQL on EC2, on the managed RDS PostgreSQL, or on Aurora with PostgreSQL compatibility.

For a managed service, you can provision it from the YugabyteDB cloud, managed by Yugabyte, the main contributor to YugabyteDB, and deployed on AWS or other cloud providers, in the region(s) you select, to be close to your application and users. You may prefer to manage the database yourself, on a managed Kubernetes. This is a good fit because the distributed database adapts to the elasticity of Kubernetes by re-balancing load and data across the pods. There is no need for complex operators dealing with pod roles and switchover risks, because all pods are active. Amazon EKS is then the right service to deploy YugabyteDB yourself in a cloud-native environment.

Here is an example, installing a lab on EKS to play with YugabyteDB on Kubernetes.

Client tooling

I install everything I need in ~/cloud/aws-cli:

mkdir -p ~/cloud/aws-cli

AWS CLI:

type ~/cloud/aws-cli/aws 2>/dev/null || ( mkdir -p ~/cloud/aws-cli && cd /var/tmp && rm -rf /var/tmp/aws && wget -qc https://awscli.amazonaws.com/awscli-exe-linux-$(uname -m).zip && unzip -qo awscli-exe-linux-$(uname -m).zip && ./aws/install --update --install-dir ~/cloud/aws-cli --bin-dir ~/cloud/aws-cli )

KUBECTL:

type ~/cloud/aws-cli/kubectl 2>/dev/null || curl -Lso ~/cloud/aws-cli/kubectl https://amazon-eks.s3.us-west-2.amazonaws.com/1.21.2/2021-07-05/bin/linux/$(uname -m | sed -e s/x86_64/amd64/ -e s/aarch64/arm64/)/kubectl ; chmod a+x ~/cloud/aws-cli/kubectl

HELM:

type helm 2>/dev/null || curl -s https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

EKSCTL:

type ~/cloud/aws-cli/eksctl 2>/dev/null || curl -sL "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_$(uname -m | sed -e s/x86_64/amd64/ -e s/aarch64/arm64/).tar.gz" | tar -zxvf - -C ~/cloud/aws-cli
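The kubectl and eksctl download URLs expect amd64 or arm64, while uname -m reports x86_64 or aarch64, which is why both commands above pipe it through sed. Here is a minimal sketch of that mapping as a function. The name k8s_arch is my own, for illustration only, and is not part of any of these tools.

```shell
# Hypothetical helper (not part of kubectl or eksctl): translate a
# `uname -m` value into the architecture suffix used in the download URLs.
# With no argument, it uses the architecture of the current machine.
k8s_arch() {
  echo "${1:-$(uname -m)}" | sed -e s/x86_64/amd64/ -e s/aarch64/arm64/
}

k8s_arch x86_64    # prints amd64
k8s_arch aarch64   # prints arm64
```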

Environment:

export PATH="$HOME/cloud/aws-cli:$PATH"

(I put that in my .bash_profile)

Credentials

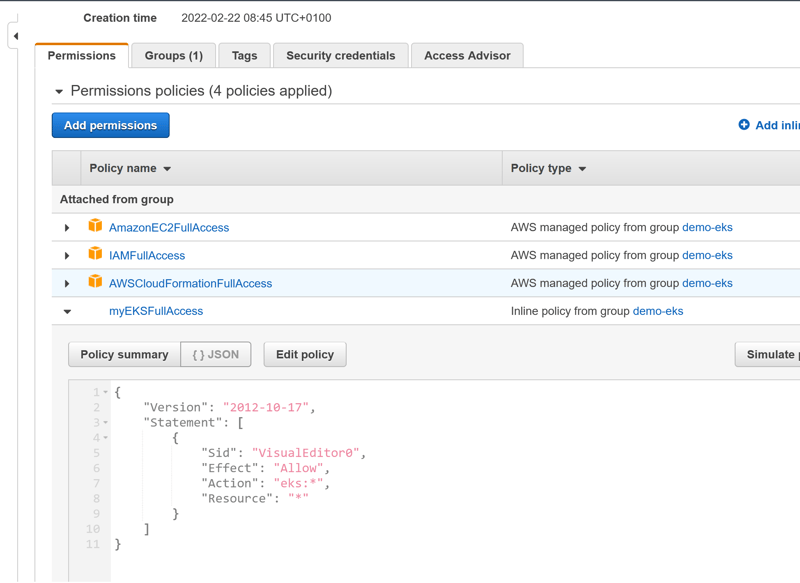

I have a "demo" user in a group with the following permissions:

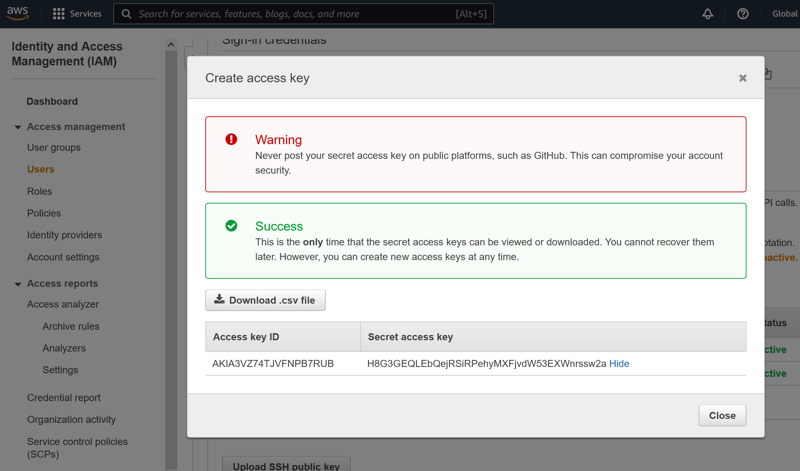

I create credentials for this user:

I configure it.

[opc@aba ~]$ aws configure

AWS Access Key ID [None]: AKIA3VZ74TJVFNPB7RUB

AWS Secret Access Key [None]: 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

Default region name [None]: eu-west-1

Default output format [None]: json

My configuration is in /home/opc/.aws/{config,credentials}.

I can check that the right user is used:

[opc@aba ~]$ aws sts get-caller-identity

444444444444 arn:aws:iam::444444444444:user/xxxx XXXXXXXXXXXX

444444444444 is my account id and xxxx the user I've generated credentials for.
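If you script this check, the account id can be read from the ARN itself, as it is the fifth colon-separated field. This is only a sketch: arn_account_id is a name I made up, and the ARN is the masked one shown above.

```shell
# Hypothetical helper: extract the account id (5th colon-separated field)
# from an IAM ARN like the one returned by `aws sts get-caller-identity`.
arn_account_id() {
  echo "$1" | cut -d: -f5
}

arn_account_id "arn:aws:iam::444444444444:user/xxxx"   # prints 444444444444
```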

EKS cluster creation

The following creates a yb-demo cluster in Ireland (eu-west-1) and a nodegroup across the 3 availability zones:

eksctl create cluster --name yb-demo --version 1.21 --region eu-west-1 --zones eu-west-1a,eu-west-1b,eu-west-1c --nodegroup-name standard-workers --node-type c5.xlarge --nodes 6 --nodes-min 1 --nodes-max 12 --managed

Here is the provisioning log:

2022-02-22 19:33:09 [ℹ] eksctl version 0.84.0

2022-02-22 19:33:09 [ℹ] using region eu-west-1

2022-02-22 19:33:09 [ℹ] subnets for eu-west-1a - public:192.168.0.0/19 private:192.168.96.0/19

2022-02-22 19:33:09 [ℹ] subnets for eu-west-1b - public:192.168.32.0/19 private:192.168.128.0/19

2022-02-22 19:33:09 [ℹ] subnets for eu-west-1c - public:192.168.64.0/19 private:192.168.160.0/19

2022-02-22 19:33:09 [ℹ] nodegroup "standard-workers" will use "" [AmazonLinux2/1.21]

2022-02-22 19:33:09 [ℹ] using Kubernetes version 1.21

2022-02-22 19:33:09 [ℹ] creating EKS cluster "yb-demo" in "eu-west-1" region with managed nodes

2022-02-22 19:33:09 [ℹ] will create 2 separate CloudFormation stacks for cluster itself and the initial managed nodegroup

2022-02-22 19:33:09 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=eu-west-1 --cluster=yb-demo'

2022-02-22 19:33:09 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "yb-demo" in "eu-west-1"

2022-02-22 19:33:09 [ℹ] CloudWatch logging will not be enabled for cluster "yb-demo" in "eu-west-1"

2022-02-22 19:33:09 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=eu-west-1 --cluster=yb-demo'

2022-02-22 19:33:09 [ℹ]

2 sequential tasks: { create cluster control plane "yb-demo",

2 sequential sub-tasks: {

wait for control plane to become ready,

create managed nodegroup "standard-workers",

}

}

2022-02-22 19:33:09 [ℹ] building cluster stack "eksctl-yb-demo-cluster"

2022-02-22 19:33:10 [ℹ] deploying stack "eksctl-yb-demo-cluster"

2022-02-22 19:33:40 [ℹ] waiting for CloudFormation stack "eksctl-yb-demo-cluster"

2022-02-22 19:34:10 [ℹ] waiting for CloudFormation stack "eksctl-yb-demo-cluster"

...

2022-02-22 19:46:11 [ℹ] waiting for CloudFormation stack "eksctl-yb-demo-cluster"

2022-02-22 19:48:12 [ℹ] building managed nodegroup stack "eksctl-yb-demo-nodegroup-standard-workers"

2022-02-22 19:48:12 [ℹ] deploying stack "eksctl-yb-demo-nodegroup-standard-workers"

2022-02-22 19:48:12 [ℹ] waiting for CloudFormation stack "eksctl-yb-demo-nodegroup-standard-workers"

...

2022-02-22 19:51:47 [✔] saved kubeconfig as "/home/opc/.kube/config"

2022-02-22 19:51:47 [ℹ] no tasks

2022-02-22 19:51:47 [✔] all EKS cluster resources for "yb-demo" have been created

2022-02-22 19:51:47 [ℹ] nodegroup "standard-workers" has 6 node(s)

2022-02-22 19:51:47 [ℹ] node "ip-192-168-11-1.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-45-193.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-53-80.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-64-141.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-7-172.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-87-15.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] waiting for at least 1 node(s) to become ready in "standard-workers"

2022-02-22 19:51:47 [ℹ] nodegroup "standard-workers" has 6 node(s)

2022-02-22 19:51:47 [ℹ] node "ip-192-168-11-1.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-45-193.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-53-80.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-64-141.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-7-172.eu-west-1.compute.internal" is ready

2022-02-22 19:51:47 [ℹ] node "ip-192-168-87-15.eu-west-1.compute.internal" is ready

2022-02-22 19:51:50 [ℹ] kubectl command should work with "/home/opc/.kube/config", try 'kubectl get nodes'

2022-02-22 19:51:50 [✔] EKS cluster "yb-demo" in "eu-west-1" region is ready

This creates two CloudFormation stacks, one for the cluster and one for the nodegroup. If the creation fails, you will have to remove them: aws cloudformation delete-stack --stack-name eksctl-yb-demo-cluster ; aws cloudformation delete-stack --stack-name eksctl-yb-demo-nodegroup-standard-workers ; eksctl delete cluster --region=eu-west-1 --name=yb-demo
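The options passed to eksctl create cluster can also be kept in a config file, which is easier to version and review. The sketch below prints what I believe is the equivalent ClusterConfig for the same settings. I did not use it in this lab, so treat the field mapping as an assumption to check against the eksctl schema. The function name and the file name are mine.

```shell
# Sketch: print an eksctl ClusterConfig mirroring the command-line options
# used for this lab (name, version, region, zones, managed nodegroup sizes).
# cluster_config is a hypothetical helper name, not an eksctl command.
cluster_config() {
cat <<'YAML'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: yb-demo
  region: eu-west-1
  version: "1.21"
availabilityZones: ["eu-west-1a", "eu-west-1b", "eu-west-1c"]
managedNodeGroups:
  - name: standard-workers
    instanceType: c5.xlarge
    desiredCapacity: 6
    minSize: 1
    maxSize: 12
YAML
}

cluster_config
```

You would save the output with cluster_config > yb-demo-cluster.yaml and then run eksctl create cluster -f yb-demo-cluster.yaml.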

KUBECONFIG

Here is how to get the KUBECONFIG for this cluster:

aws eks update-kubeconfig --region eu-west-1 --name yb-demo

sed -e "/command: aws/s?aws?$(which aws)?" -i ~/.kube/config

Note that I changed the command to the full path of the AWS CLI so that kubectl works even when the PATH does not include it. Otherwise, it fails with "Unable to connect to the server: getting credentials: exec: executable aws not found", followed by a message about a client-go credential plugin.
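To see what this sed expression does without touching the real ~/.kube/config, here is a small sketch that applies the same substitution to a few sample lines on stdin. The path in aws_path is the install location I used in this lab, the sample lines are a simplified excerpt, and pin_aws_path is a hypothetical name.

```shell
# Assumed install location of the AWS CLI in this lab; adjust to `which aws`.
aws_path=/home/opc/cloud/aws-cli/aws

# Same substitution as above, but reading stdin instead of editing in place:
# only lines containing "command: aws" are touched, and only the first "aws"
# on those lines is replaced.
pin_aws_path() {
  sed -e "/command: aws/s?aws?${aws_path}?"
}

printf '%s\n' '    exec:' '      command: aws' '      args:' '      - eks' | pin_aws_path
```

Only the command: line is rewritten. Running it a second time changes nothing, because the line then reads "command: /home/..." and no longer matches "command: aws".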

Storage class

I check that all works by querying the default StorageClass:

[opc@aba ~]$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 19m

gp2 is fine for a lab, but its bursting makes performance less predictable. gp3 would be preferred, but it requires installing the aws-ebs-csi-driver and using the ebs.csi.aws.com provisioner.
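For reference, a gp3 StorageClass could look like the sketch below. I have not used it in this lab: it assumes the aws-ebs-csi-driver is already installed in the cluster, and the class name gp3 and the function name are my own choices.

```shell
# Sketch only (not applied in this lab): print a StorageClass manifest that
# uses the EBS CSI provisioner with gp3 volumes. Assumes the
# aws-ebs-csi-driver is installed. gp3_storageclass is a hypothetical name.
gp3_storageclass() {
cat <<'YAML'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp3
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
YAML
}

gp3_storageclass
```

The output can be piped to kubectl apply -f - once the driver is in place.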

Helm Chart

I'll install the database with the Helm Chart provided by Yugabyte

helm repo add yugabytedb https://charts.yugabyte.com

helm repo update

helm search repo yugabytedb/yugabyte

Check the YugabyteDB distributed SQL database version:

NAME CHART VERSION APP VERSION DESCRIPTION

yugabytedb/yugabyte 2.11.2 2.11.2.0-b89 YugabyteDB is the high-performance distributed ...

This is the latest development release, perfect for a lab.

Multi-AZ install

For a multi-AZ install, the Helm Chart needs some customization. The idea is to set a namespace for each zone, ensure that the storage is in the same zone, and that the containers are aware of their location.

The following script generates a .storageclass.yaml and a .overrides.yaml for each AZ, and a yb-demo-install.sh to create the StorageClass, the namespaces, and install the StatefulSets. It also creates a yb-demo-deinstall.sh to remove the installation and the PersistentVolumes.

region=eu-west-1
# comma-separated list of the yb-master addresses (one per AZ) and of the placement blocks
masters=$(for az in {a..c} ; do echo "yb-master-0.yb-masters.yb-demo-${region}${az}.svc.cluster.local:7100" ; done | paste -sd,)
placement=$(for az in {a..c} ; do echo "aws.${region}.${region}${az}" ; done | paste -sd,)
{
for az in {a..c} ; do
# StorageClass pinned to the AZ
{ cat <<CAT
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: standard-${region}${az}
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
  zone: ${region}${az}
CAT
} > ${region}${az}.storageclass.yaml
echo "kubectl apply -f ${region}${az}.storageclass.yaml"
# Helm overrides for the AZ
{ cat <<CAT
isMultiAz: True
AZ: ${region}${az}
masterAddresses: "$masters"
storage:
  master:
    storageClass: "standard-${region}${az}"
  tserver:
    storageClass: "standard-${region}${az}"
replicas:
  master: 1
  tserver: 2
  totalMasters: 3
resource:
  master:
    requests:
      cpu: 0.5
      memory: 1Gi
  tserver:
    requests:
      cpu: 1
      memory: 2Gi
gflags:
  master:
    placement_cloud: "aws"
    placement_region: "${region}"
    placement_zone: "${region}${az}"
  tserver:
    placement_cloud: "aws"
    placement_region: "${region}"
    placement_zone: "${region}${az}"
CAT
} > ${region}${az}.overrides.yaml
echo "kubectl create namespace yb-demo-${region}${az}"
echo "helm install yb-demo yugabytedb/yugabyte --namespace yb-demo-${region}${az} -f ${region}${az}.overrides.yaml --wait \$* ; sleep 5"
done
# set the replication factor and the placement once all zones are installed
echo "kubectl exec -it -n yb-demo-${region}a yb-master-0 -- yb-admin --master_addresses $masters modify_placement_info $placement 3"
} | tee yb-demo-install.sh
# generate the deinstall script
for az in {a..c} ; do
echo "helm uninstall yb-demo --namespace yb-demo-${region}${az}"
echo "kubectl delete pvc --namespace yb-demo-${region}${az} -l app=yb-master"
echo "kubectl delete pvc --namespace yb-demo-${region}${az} -l app=yb-tserver"
echo "kubectl delete storageclass standard-${region}${az}"
done | tee yb-demo-deinstall.sh

Note that I have set low CPU and RAM resource requests in order to be able to play with scaling on the c5.xlarge workers. You would run on larger machines in production.
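To make the generated files easier to read, here is what the two comma-separated lists built at the top of the script expand to. This sketch uses the same expressions, with the zone letters spelled out and an explicit "-" operand for paste so that it also runs in shells and systems other than bash on Linux.

```shell
region=eu-west-1

# One yb-master address per AZ, joined with commas (used for masterAddresses
# in the overrides and for --master_addresses in the yb-admin call).
masters=$(for az in a b c ; do echo "yb-master-0.yb-masters.yb-demo-${region}${az}.svc.cluster.local:7100" ; done | paste -sd, -)

# One cloud.region.zone placement block per AZ, joined with commas
# (the argument given to modify_placement_info).
placement=$(for az in a b c ; do echo "aws.${region}.${region}${az}" ; done | paste -sd, -)

echo "$masters"
echo "$placement"
```

The second line printed is aws.eu-west-1.eu-west-1a,aws.eu-west-1.eu-west-1b,aws.eu-west-1.eu-west-1c, which, followed by the replication factor 3, is what the last line of the install script passes to yb-admin.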

Calling the install script:

. yb-demo-install.sh

Showing the pods:

kubectl get pods -A --field-selector metadata.namespace!=kube-system

NAMESPACE NAME READY STATUS RESTARTS AGE

yb-demo-eu-west-1a yb-master-0 2/2 Running 0 28m

yb-demo-eu-west-1a yb-tserver-0 2/2 Running 0 28m

yb-demo-eu-west-1a yb-tserver-1 2/2 Running 0 28m

yb-demo-eu-west-1b yb-master-0 2/2 Running 0 23m

yb-demo-eu-west-1b yb-tserver-0 2/2 Running 0 23m

yb-demo-eu-west-1b yb-tserver-1 2/2 Running 0 23m

yb-demo-eu-west-1c yb-master-0 2/2 Running 0 18m

yb-demo-eu-west-1c yb-tserver-0 2/2 Running 0 18m

yb-demo-eu-west-1c yb-tserver-1 2/2 Running 0 18m

You may wonder what this "2/2" is. There are actually two containers running in each pod: one is the yb-tserver or yb-master, and the other runs the log_cleanup.sh script.

Listing the services:

kubectl get services -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 163m

kube-system kube-dns ClusterIP 10.100.0.10 <none> 53/UDP,53/TCP 163m

yb-demo-eu-west-1a yb-master-ui LoadBalancer 10.100.30.71 a114c8b83ff774aa5bee1b356370af25-1757278967.eu-west-1.elb.amazonaws.com 7000:30631/TCP 29m

yb-demo-eu-west-1a yb-masters ClusterIP None <none> 7000/TCP,7100/TCP 29m

yb-demo-eu-west-1a yb-tserver-service LoadBalancer 10.100.183.118 a825762f72f014377972f2c1e53e9d68-1227348119.eu-west-1.elb.amazonaws.com 6379:32052/TCP,9042:31883/TCP,5433:30538/TCP 29m

yb-demo-eu-west-1a yb-tservers ClusterIP None <none> 9000/TCP,12000/TCP,11000/TCP,13000/TCP,9100/TCP,6379/TCP,9042/TCP,5433/TCP 29m

yb-demo-eu-west-1b yb-master-ui LoadBalancer 10.100.111.89 aa1253c7392fd4ff9bdab6e7951ba3f0-101293562.eu-west-1.elb.amazonaws.com 7000:30523/TCP 24m

yb-demo-eu-west-1b yb-masters ClusterIP None <none> 7000/TCP,7100/TCP 24m

yb-demo-eu-west-1b yb-tserver-service LoadBalancer 10.100.109.67 a10cb25e4cfe941bc80b8416910203f7-2086545979.eu-west-1.elb.amazonaws.com 6379:30339/TCP,9042:32300/TCP,5433:31181/TCP 24m

yb-demo-eu-west-1b yb-tservers ClusterIP None <none> 9000/TCP,12000/TCP,11000/TCP,13000/TCP,9100/TCP,6379/TCP,9042/TCP,5433/TCP 24m

yb-demo-eu-west-1c yb-master-ui LoadBalancer 10.100.227.0 a493d9c28f8684c87bc6b0d399c48730-1851207237.eu-west-1.elb.amazonaws.com 7000:31392/TCP 19m

yb-demo-eu-west-1c yb-masters ClusterIP None <none> 7000/TCP,7100/TCP 19m

yb-demo-eu-west-1c yb-tserver-service LoadBalancer 10.100.224.161 a600828f847f842ba8d822ff5f2ab19e-303543099.eu-west-1.elb.amazonaws.com 6379:31754/TCP,9042:31992/TCP,5433:31823/TCP 19m

yb-demo-eu-west-1c yb-tservers ClusterIP None <none> 9000/TCP,12000/TCP,11000/TCP,13000/TCP,9100/TCP,6379/TCP,9042/TCP,5433/TCP 19m

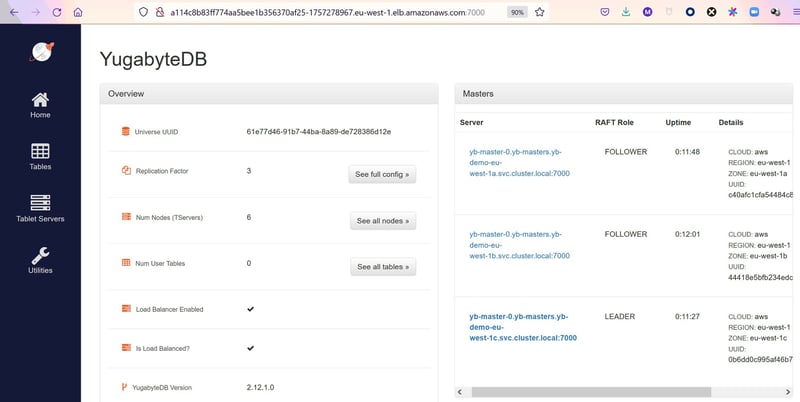

The servers are also visible from the YugabyteDB Web GUI from any yb-master on port 7000, exposed with the LoadBalancer service yb-master-ui port http-ui. Let's get the URL from the EXTERNAL-IP and PORT(S):

kubectl get services --all-namespaces --field-selector "metadata.name=yb-master-ui" -o jsonpath='http://{.items[0].status.loadBalancer.ingress[0].hostname}:{.items[0].spec.ports[?(@.name=="http-ui")].port}'

http://ae609ac9f128442229d5756ab959b796-833107203.eu-west-1.elb.amazonaws.com:7000

It is important to verify that the yb-admin modify_placement_info of the install script has correctly set the replication factor RF=3 with one replica in each AZ. This ensures application continuity even if an AZ fails.

curl -s "$(kubectl get svc -A --field-selector "metadata.name=yb-master-ui" -o jsonpath='http://{.items[0].status.loadBalancer.ingress[0].hostname}:{.items[0].spec.ports[?(@.name=="http-ui")].port}/cluster-config?raw')"

<h1>Current Cluster Config</h1>

<div class="alert alert-success">Successfully got cluster config!</div><pre class="prettyprint">version: 1

replication_info {

live_replicas {

num_replicas: 3

placement_blocks {

cloud_info {

placement_cloud: "aws"

placement_region: "eu-west-1"

placement_zone: "eu-west-1c"

}

min_num_replicas: 1

}

placement_blocks {

cloud_info {

placement_cloud: "aws"

placement_region: "eu-west-1"

placement_zone: "eu-west-1b"

}

min_num_replicas: 1

}

placement_blocks {

cloud_info {

placement_cloud: "aws"

placement_region: "eu-west-1"

placement_zone: "eu-west-1a"

}

min_num_replicas: 1

}

}

}

cluster_uuid: "61e77d46-91b7-44ba-8a89-de728386d12e"
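Rather than reading this output by eye, the check can be scripted. The sketch below reads a cluster config like the one above on stdin, extracts num_replicas and the distinct placement zones, and succeeds only when both equal 3. The function name check_placement is mine, and the parsing assumes the text layout shown above (one field per line).

```shell
# Hypothetical helper: read the /cluster-config?raw text on stdin and verify
# RF=3 with three distinct placement zones. Returns non-zero otherwise.
check_placement() {
  config=$(cat)
  # "num_replicas: N" at the start of a line (ignores min_num_replicas)
  rf=$(echo "$config" | sed -n 's/^ *num_replicas: *\([0-9][0-9]*\).*/\1/p' | head -n 1)
  # number of distinct values of placement_zone: "..."
  zones=$(echo "$config" | sed -n 's/.*placement_zone: *"\(.*\)".*/\1/p' | sort -u | wc -l | tr -d ' ')
  echo "RF=${rf} zones=${zones}"
  [ "$rf" = "3" ] && [ "$zones" = "3" ]
}

# Abbreviated sample taken from the output above
check_placement <<'SAMPLE'
    num_replicas: 3
        placement_zone: "eu-west-1c"
      min_num_replicas: 1
        placement_zone: "eu-west-1b"
      min_num_replicas: 1
        placement_zone: "eu-west-1a"
      min_num_replicas: 1
SAMPLE
```

In the lab, you would pipe the curl output shown above into check_placement instead of the sample.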

SQL

The PostgreSQL endpoint is exposed with the LoadBalancer service yb-tserver-service port tcp-ysql-port (there is also a Cassandra-like endpoint on tcp-yql-port).

Here is how I connect with psql to the load balancer in eu-west-1a:

psql -U yugabyte -d $(kubectl get services -n yb-demo-eu-west-1a --field-selector "metadata.name=yb-tserver-service" -o jsonpath='postgresql://{.items[0].status.loadBalancer.ingress[0].hostname}:{.items[0].spec.ports[?(@.name=="tcp-ysql-port")].port}')/yugabyte
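The connection string built by the jsonpath expression above is a standard PostgreSQL URI. If you already know the load balancer hostname, a small helper can assemble it. This is only a sketch: ysql_uri is a hypothetical name, the defaults are the port 5433 and the database yugabyte used in this lab, and the hostname in the example is a placeholder.

```shell
# Hypothetical helper: build a PostgreSQL connection URI for a YSQL endpoint.
# $1 = hostname, $2 = port (default 5433), $3 = database (default yugabyte)
ysql_uri() {
  echo "postgresql://$1:${2:-5433}/${3:-yugabyte}"
}

ysql_uri my-load-balancer.example.com   # prints postgresql://my-load-balancer.example.com:5433/yugabyte
```

It can then be used as psql -U yugabyte -d "$(ysql_uri <hostname>)".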

You will usually deploy the application in each AZ, and it makes sense that each instance connects to the database nodes in its local AZ: if the AZ is down, both the application and its local database endpoint are down together. The ELB for the application endpoint then ensures high availability. From a performance point of view, any database node can accept transactions that read and write across the whole database, and the latency within a region is usually low enough for that. For multi-region deployments, however, there are other placement options to allow high performance with consistent transactions.

You can check the configuration of the load balancers, but just to be sure I've run the following to verify that connections go evenly to the two servers I have in one AZ:

for i in {1..100}

do

psql -U yugabyte -d $(kubectl get services -n yb-demo-eu-west-1a --field-selector "metadata.name=yb-tserver-service" -o jsonpath='postgresql://{.items[0].status.loadBalancer.ingress[0].hostname}:{.items[0].spec.ports[?(@.name=="tcp-ysql-port")].port}')/yugabyte \

-c "create table if not exists franck (hostname text) ; copy franck from program 'hostname' ; select count(*),hostname from franck group by hostname"

done

...

CREATE TABLE

COPY 1

count | hostname

-------+--------------

54 | yb-tserver-0

49 | yb-tserver-1

(2 rows)

I list the 3 load balancers, one in front of the tservers of each AZ:

kubectl get services -A --field-selector "metadata.name=yb-tserver-service"

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

yb-demo-eu-west-1a yb-tserver-service LoadBalancer 10.100.183.118 a825762f72f014377972f2c1e53e9d68-1227348119.eu-west-1.elb.amazonaws.com 6379:32052/TCP,9042:31883/TCP,5433:30538/TCP 80m

yb-demo-eu-west-1b yb-tserver-service LoadBalancer 10.100.109.67 a10cb25e4cfe941bc80b8416910203f7-2086545979.eu-west-1.elb.amazonaws.com 6379:30339/TCP,9042:32300/TCP,5433:31181/TCP 74m

yb-demo-eu-west-1c yb-tserver-service LoadBalancer 10.100.224.161 a600828f847f842ba8d822ff5f2ab19e-303543099.eu-west-1.elb.amazonaws.com 6379:31754/TCP,9042:31992/TCP,5433:31823/TCP 69m

kubectl get services -A --field-selector "metadata.name=yb-tserver-service" -o jsonpath='{.items[*].status.loadBalancer.ingress[0].hostname}'

a825762f72f014377972f2c1e53e9d68-1227348119.eu-west-1.elb.amazonaws.com

a10cb25e4cfe941bc80b8416910203f7-2086545979.eu-west-1.elb.amazonaws.com

a600828f847f842ba8d822ff5f2ab19e-303543099.eu-west-1.elb.amazonaws.com

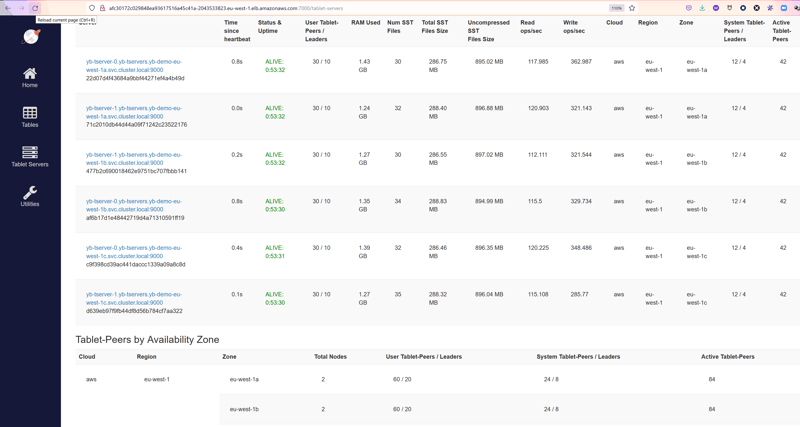

When the application is running, the Web GUI displays the nodes with their placement information (cloud provider, region, zone), their data volume, and their operation throughput:

Those are the 6 yb-tserver pods (2 replicas per AZ).

It is easy to scale:

for az in eu-west-1{a..c}

do

kubectl scale statefulsets -n yb-demo-$az yb-tserver --replicas=3

done

or test AZ failure:

kubectl delete pods --all -n yb-demo-eu-west-1c --force=true

No manual operation is needed when this happens: load balancing and resilience are built into the database.
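In the same spirit as the install script, which prints the commands before running them, the scaling loop above can be turned into a generator so that the commands can be reviewed first. This is a sketch under my own naming (scale_commands is hypothetical). It only prints the kubectl commands and does not contact the cluster.

```shell
region=eu-west-1

# Hypothetical helper: print (do not run) the kubectl commands that scale
# the yb-tserver StatefulSet to $1 replicas in each AZ namespace.
scale_commands() {
  for az in a b c ; do
    echo "kubectl scale statefulsets -n yb-demo-${region}${az} yb-tserver --replicas=$1"
  done
}

scale_commands 3
```

Once reviewed, the output can be piped to sh, for example scale_commands 2 | sh to go back to two tservers per AZ.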

Cleanup

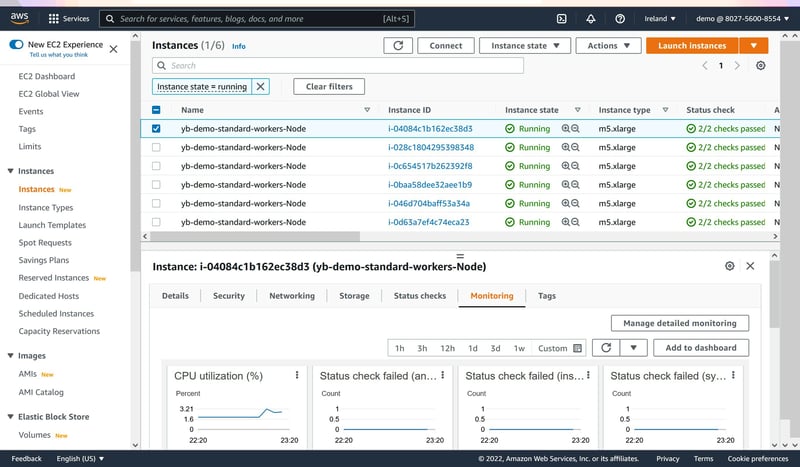

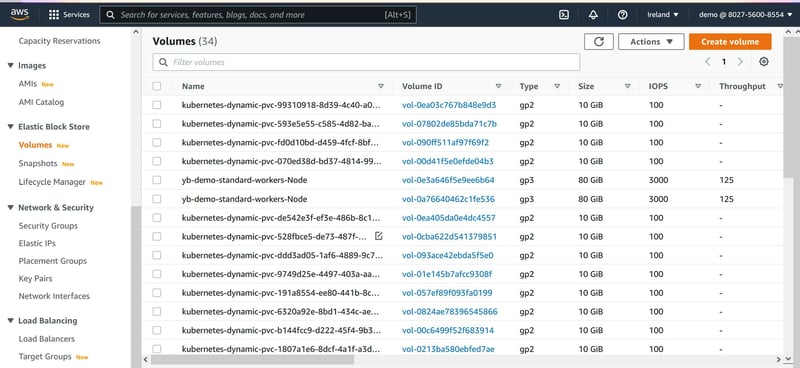

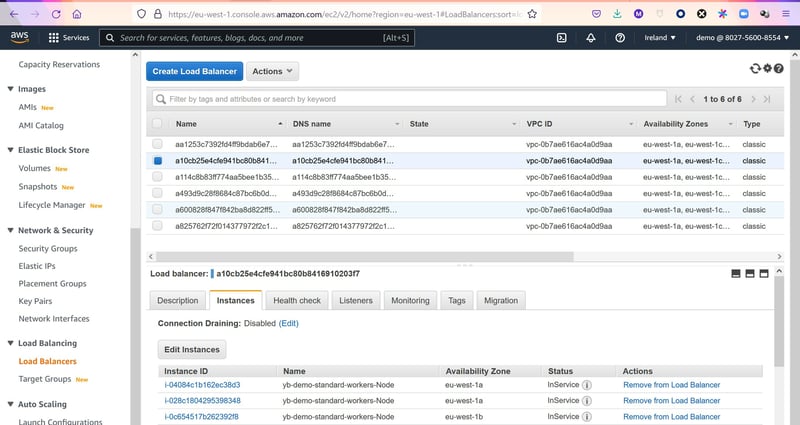

You probably want to stop or terminate your lab when not using it because it creates the following resources:

EC2 instances for the worker nodes

EBS volumes for the PersistentVolume

ELB load balancer for the services

To deprovision everything, run the deinstall script and then delete the cluster:

. yb-demo-deinstall.sh

eksctl delete cluster --region=eu-west-1 --name=yb-demo
