Communicating between a public and a private subnet in AWS is sometimes difficult.

We will see how we can easily do that with the help of API Gateway and AWS Lambda.

Use case example

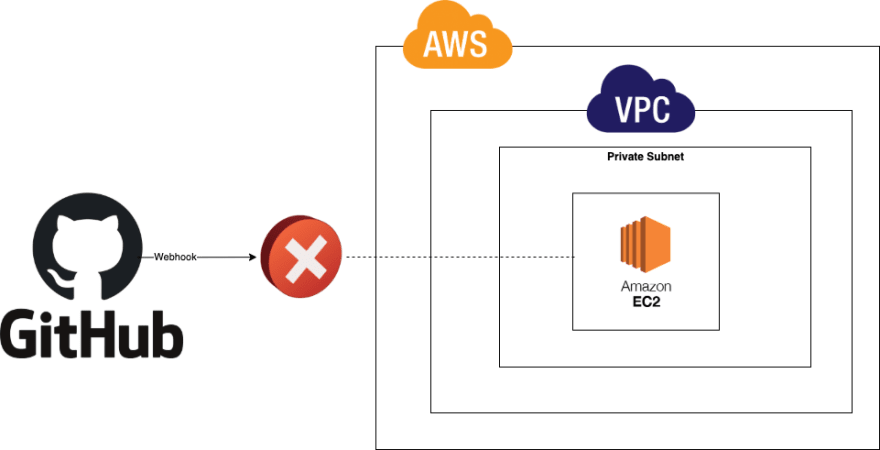

Example 1

It's sometimes necessary to have a GitHub repository communicate with a private EC2 instance.

But when the instance is private, this is hard to do without weakening the security of the instance.

Solution

The GitHub webhook calls a public API Gateway, which triggers a Lambda function attached to the VPC. The role of this Lambda function is to forward the content of the GitHub webhook to the EC2 instance.

Remark: A Lambda function attached to a VPC isn't deployed inside the VPC. Instead, an Elastic Network Interface (ENI) is created to link the Lambda function to the different subnets inside the VPC.

Example 2

As another example, we may need a public instance in a public subnet to communicate with a private instance in a private subnet of the same VPC.

For security reasons, direct communication between the public subnet and the private subnet is not allowed here. To work around this, the serverless proxy solution can be used.

Solution

In the same way as in Example 1, a public and a private instance can communicate through a serverless proxy built with AWS API Gateway and AWS Lambda.

This solution is easy to implement, highly scalable, secure and inexpensive.

Implementation

AWS Lambda

The Lambda function is quite simple. Its only goal is to forward the information received from API Gateway to the destination inside the VPC.

Here we use Python, and the Lambda function is as simple as this:

    import json
    import os

    import urllib3


    def main(event, context):
        url = os.environ['URL']

        # The HTTP method sits in a different place depending on the event format:
        # REST API proxy events expose 'httpMethod', while HTTP API events
        # (payload format 2.0) expose it under requestContext.http.method.
        if event.get('httpMethod'):
            http_method = event['httpMethod']
        else:
            http_method = event['requestContext']['http']['method']

        # Copy the incoming headers (an empty dict when there are none).
        headers = dict(event.get('headers') or {})
        # Important: remove the Host header before forwarding the request
        headers.pop('Host', None)
        headers.pop('host', None)

        body = event.get('body') or ''

        try:
            http = urllib3.PoolManager()
            resp = http.request(method=http_method, url=url, headers=headers,
                                body=body)
            body = {
                "result": resp.data.decode('utf-8')
            }
            response = {
                "statusCode": resp.status,
                "body": json.dumps(body)
            }
        except urllib3.exceptions.HTTPError:
            # HTTPError is the base class of urllib3 errors. With the default
            # retries, a failed connection surfaces as MaxRetryError rather
            # than NewConnectionError, so the base class is caught here.
            print('Connection failed.')
            response = {
                "statusCode": 500,
                "body": 'Connection failed.'
            }

        return response

To forward requests I use the Python library urllib3. For more information, or if you want to adapt the code, check the official documentation.

To make deployment easier, the backend URL is passed in an environment variable.

This Lambda function needs to be attached to a VPC.
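To make the two event formats handled by the function more concrete, here is a minimal sketch. The sample events are trimmed, assumed payloads reduced to the fields the handler reads, and `extract_method` and `forwardable_headers` are illustrative helper names that are not part of the original code.

```python
def extract_method(event):
    """Return the HTTP method from a REST API or an HTTP API (v2) event."""
    if event.get("httpMethod"):
        return event["httpMethod"]
    return event["requestContext"]["http"]["method"]


def forwardable_headers(event):
    """Copy the incoming headers, dropping Host whatever its casing."""
    headers = event.get("headers") or {}
    return {k: v for k, v in headers.items() if k.lower() != "host"}


# Trimmed sample events (assumed shapes, reduced to the fields used here).
rest_event = {
    "httpMethod": "POST",
    "headers": {"Host": "abc.execute-api.eu-west-1.amazonaws.com",
                "Content-Type": "application/json"},
    "body": "{}",
}
http_event = {
    "requestContext": {"http": {"method": "GET"}},
    "headers": {"host": "abc.execute-api.eu-west-1.amazonaws.com",
                "accept": "*/*"},
}

print(extract_method(rest_event), forwardable_headers(rest_event))
print(extract_method(http_event), forwardable_headers(http_event))
```

Keeping this logic in small pure functions makes it possible to check the forwarding rules locally, without a VPC or a backend.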

Two tools exist to optimize a Lambda function for cost and/or performance:

- the well-known AWS Lambda Power Tuning

- AWS Compute Optimizer

Running the forwarder Lambda with 50 parallel executions in AWS Lambda Power Tuning gave the following result:

We can see that 512 MB of memory is the best setting for this Lambda function, with an average execution time of 9 ms.

AWS API Gateway

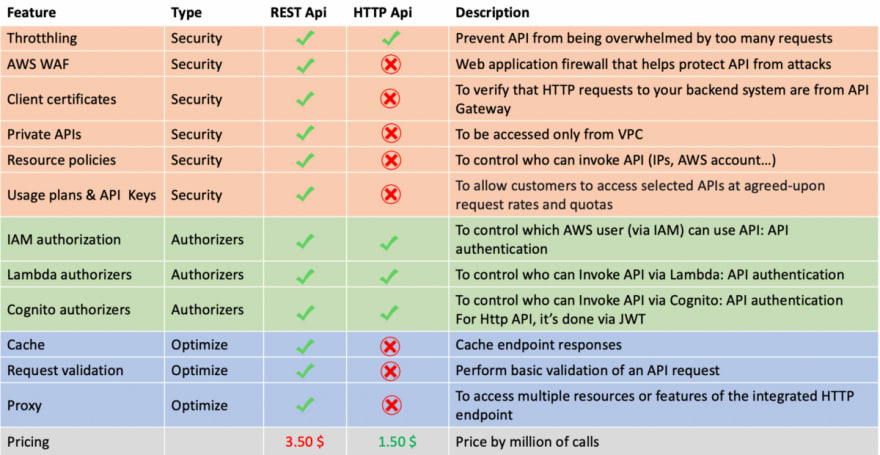

Here, API Gateway needs to be public and to have an AWS Lambda integration. Two types of API can be used:

- REST API

- HTTP API

Let's see how to choose between these two types.

We can see that REST API has many more features than HTTP API, especially concerning security and optimization.

Concerning pricing, it's also important to know that:

- Requests are not charged for authorization and authentication failures.

- Calls to methods that require API keys are not charged when API keys are missing or invalid.

- API Gateway-throttled requests are not charged when the request rate or burst rate exceeds the preconfigured limits.

- Usage plan-throttled requests are not charged when rate limits or quota exceed the preconfigured limits.

More information here.

Another important point before choosing between REST API and HTTP API is security, particularly the most common attacks in the cloud:

- Denial-of-service (DoS) and distributed denial-of-service (DDoS) attacks

- Denial-of-wallet (DoW) attacks

Denial-of-Wallet (DoW) exploits are similar to traditional denial-of-service (DoS) attacks in the sense that both are carried out with the intent to cause disruption.

However, while DoS assaults aim to force a targeted service offline, DoW seeks to cause the victim financial loss.

DoW, and more generally runaway cloud costs, concern everybody and not only big companies: multiple examples exist, for example this one or, more recently, this one.
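To give an order of magnitude for a Denial-of-Wallet scenario, here is a rough sketch that estimates the bill caused by a sustained, unthrottled flood of requests. The prices are illustrative assumptions (they vary by region and change over time, so check the AWS pricing pages), and `flood_cost` is a hypothetical helper. The 512 MB and 9 ms defaults come from the Power Tuning result above.

```python
# Illustrative prices in USD: assumptions, not authoritative values.
PRICE_PER_MILLION_REQUESTS = {"REST": 3.50, "HTTP": 1.00}
LAMBDA_PRICE_PER_MILLION_INVOCATIONS = 0.20
LAMBDA_PRICE_PER_GB_SECOND = 0.0000166667


def flood_cost(api_type, requests_per_second, hours,
               memory_mb=512, duration_ms=9):
    """Estimate the cost of a sustained, unthrottled request flood."""
    requests = requests_per_second * 3600 * hours
    millions = requests / 1_000_000
    gateway = millions * PRICE_PER_MILLION_REQUESTS[api_type]
    invocations = millions * LAMBDA_PRICE_PER_MILLION_INVOCATIONS
    gb_seconds = requests * (memory_mb / 1024) * (duration_ms / 1000)
    compute = gb_seconds * LAMBDA_PRICE_PER_GB_SECOND
    return round(gateway + invocations + compute, 2)


for api_type in ("REST", "HTTP"):
    print(api_type, flood_cost(api_type, requests_per_second=1000, hours=24))
```

Under these assumptions most of the cost comes from API Gateway requests rather than from Lambda, which is why rejecting requests before they are billed (throttling, API keys, authentication) matters.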

Recommendation

Given all the information above, my recommendation is to use REST API when API calls can't be authenticated.

If API calls can be authenticated and pricing is an important consideration, HTTP API can be used.

So in example 1, REST API should be used because it's not possible to authenticate calls from GitHub. In example 2, HTTP API can be used if front-end API calls are authenticated; otherwise REST API should be used.

Remark: HTTP API is quite new compared to REST API. Some features are available but complicated to deploy. For example, throttling isn't yet supported with Serverless Framework.

Deployment

To deploy the stack, I've decided to use Serverless Framework.

If you don't know Serverless Framework, have a look at its documentation.

My serverless.yml looks like this:

    service: serverless-proxy

    plugins:
      - serverless-python-requirements
      - serverless-prune-plugin

    custom:
      url: http://myurl:80 #to be replaced by backend url
      vpcId: vpc-123456 #to be replaced by vpc id
      subnetIds: [ subnet-123, subnet-456, subnet-789 ] #to be replaced by subnet ids
      pythonRequirements: #serverless-python-requirements configuration
        dockerizePip: true
      prune: #serverless-prune-plugin configuration: 3 versions are kept
        automatic: true
        number: 3

    #Create security group
    resources:
      Resources:
        SecurityGroup:
          Type: AWS::EC2::SecurityGroup
          Properties:
            GroupDescription: SG for Serverless Proxy forwarder
            VpcId: ${self:custom.vpcId}
            SecurityGroupEgress:
              - IpProtocol: tcp
                FromPort: 80
                ToPort: 80
                CidrIp: 0.0.0.0/0
              - IpProtocol: tcp
                FromPort: 443
                ToPort: 443
                CidrIp: 0.0.0.0/0
            Tags:
              - Key: Name
                Value: serverless-proxy-sg

    provider:
      name: aws
      runtime: python3.8
      region: eu-west-1 #to be changed to your AWS region

    functions:
      serverless-proxy:
        handler: handler.main
        name: serverless-proxy
        description: Lambda Forwarder function
        memorySize: 512
        environment:
          URL: ${self:custom.url}
        vpc:
          securityGroupIds:
            - Ref: SecurityGroup
          subnetIds: ${self:custom.subnetIds}
        events:
          - http:
              path: forward
              method: get
          - http:
              path: forward
              method: head
          - http:
              path: forward
              method: post
          - http:
              path: forward
              method: put

Two Serverless plugins are used:

- serverless-python-requirements: to automatically bundle Python dependencies from requirements.txt

- serverless-prune-plugin: to purge previous versions of the Lambda function automatically

"requirements.txt" looks like this:

    urllib3==1.25.8

Three parameters need to be updated before deploying the stack:

- url: the URL of your backend service

- vpcId: the ID of the VPC where your backend service is deployed

- subnetIds: the IDs of the subnets where your backend service is deployed

The "resources" part is used to create a new security group; we will see this in detail in the security part.

Concerning the "functions" part:

- memorySize is 512 MB, according to AWS Lambda Power Tuning

- the URL is passed as an environment variable in the "environment" part

- the Lambda function is attached to the VPC in the "vpc" part

In "events", we have a REST API with 4 routes on /forward: GET, HEAD, POST and PUT. If you want another HTTP verb, simply add a new route here.

To replace the REST API with an HTTP API, you just have to replace:

    events:
      - http:
          path: forward
          method: get
      - http:
          path: forward
          method: head
      - http:
          path: forward
          method: post
      - http:
          path: forward
          method: put

with:

      - httpApi:
          path: /forward
          method: get
      - httpApi:
          path: /forward
          method: head
      - httpApi:
          path: /forward
          method: post
      - httpApi:
          path: /forward
          method: put

Once everything is ready, simply run the following command to deploy the stack:

    sls deploy

Security

Lambda

To protect Lambda against attacks like Denial-of-Service or Denial-of-Wallet, it's important to:

- restrict the security group

- set an appropriate timeout for the function

Security Group

For the security group, only the egress port of your backend route needs to be open, and only towards the specific IP (or CIDR block) of the backend. No ingress rule needs to be opened.

Example: if your backend route is on port 80 and IP 172.0.0.10, the security group needs to be as follows:

In "serverless.yml", this gives:

    resources:
      Resources:
        SecurityGroup:
          Type: AWS::EC2::SecurityGroup
          Properties:
            GroupDescription: SG for Serverless Proxy forwarder
            VpcId: ${self:custom.vpcId}
            SecurityGroupEgress:
              - IpProtocol: tcp
                FromPort: 80
                ToPort: 80
                CidrIp: 172.0.0.10/32
            Tags:
              - Key: Name
                Value: serverless-proxy-sg

Timeout

It's important to set an appropriate timeout to keep costs under control. As a reminder, a Lambda function is billed according to its configured memory and its execution time. The minimum timeout is 1 second and the maximum is 900 seconds.

My suggestion is to set the timeout to 3 times the average execution time. The average execution time here is 9 ms, so the timeout is set to the minimum of 1 second.
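The rule of thumb above can be sketched as a small calculation. This is only an illustration of the reasoning: `suggested_timeout` and `worst_case_gb_seconds` are hypothetical helper names, and the factor of 3 is the suggestion made in this article, not an AWS recommendation.

```python
import math

MIN_TIMEOUT_S = 1    # Lambda minimum timeout, in seconds
MAX_TIMEOUT_S = 900  # Lambda maximum timeout, in seconds


def suggested_timeout(avg_duration_ms, factor=3):
    """Timeout in whole seconds: factor x average, clamped to Lambda limits."""
    seconds = math.ceil(avg_duration_ms * factor / 1000)
    return max(MIN_TIMEOUT_S, min(MAX_TIMEOUT_S, seconds))


def worst_case_gb_seconds(memory_mb, timeout_s):
    """Upper bound on billed GB-seconds for one invocation hitting the timeout."""
    return memory_mb / 1024 * timeout_s


print(suggested_timeout(9))           # 9 ms average -> 1
print(worst_case_gb_seconds(512, 1))  # 0.5
```

A short timeout caps the worst-case billed duration of each invocation, which limits what a stuck backend or a flood of slow requests can cost.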

In "serverless.yml", this gives:

    memorySize: 512
    timeout: 1

API Gateway

In the same way as Lambda, API Gateway needs to be protected against attacks. It's even more important because API Gateway is public here.

So we need to ensure that:

- throttling is enabled and set to a suitable value for each endpoint

- authentication is enabled when possible

- a usage plan and an API key are enabled

- a resource policy is defined

Throttling

To understand more precisely how throttling works, check this documentation.

To enable throttling on a REST API with Serverless Framework, we need to install a new plugin:

    plugins:
      - serverless-api-gateway-throttling

After this, there are two options. Either you set throttling at the stage level, and it is applied to all endpoints:

    custom:
      apiGatewayThrottling:
        maxRequestsPerSecond: 100
        maxConcurrentRequests: 50

Or you set throttling directly at the endpoint level:

    events:
      - http:
          path: forward
          method: get
          throttling:
            maxRequestsPerSecond: 100
            maxConcurrentRequests: 50

For HTTP API, throttling is possible, but it can't be configured with Serverless Framework.

Remark: It's important to set throttling values that fit your use case.
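To get an intuition for how a rate limit and a burst limit interact, here is a simplified simulation of a token bucket, the algorithm API Gateway uses for throttling. It is a sketch, not API Gateway's actual implementation: the `TokenBucket` class is hypothetical, time is passed in explicitly, and the values 100 and 50 mirror the configuration above.

```python
class TokenBucket:
    """Simplified token bucket: `rate` tokens refill per second, up to `burst`."""

    def __init__(self, rate, burst):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = 0.0

    def allow(self, now):
        """Return True if a request arriving at time `now` (seconds) passes."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # API Gateway would answer 429 Too Many Requests


bucket = TokenBucket(rate=100, burst=50)

# 80 requests arriving at the same instant: only the burst capacity passes.
accepted = sum(bucket.allow(now=0.0) for _ in range(80))
print(accepted)

# Half a second later the bucket has refilled up to its capacity again.
accepted_later = sum(bucket.allow(now=0.5) for _ in range(80))
print(accepted_later)
```

The burst value bounds how many requests can pass at once, while the rate bounds the sustained throughput, so both need to match the traffic the backend is expected to receive.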

Authentication

Authentication is a good way to secure an HTTP API or a REST API, and it can be done in different ways. I won't detail how to manage authentication in this article, but it could be a good topic for a later one.

Usage plan and API Key

A good way to secure an API is to require an API key.

To do this, an API key needs to be created for the API, and each call then needs to carry it in a header named "x-api-key" (more information here). Once that's done, it's possible to specify a usage plan to limit the number of calls allowed.

With Serverless Framework, this is possible with the help of the serverless-add-api-key plugin:

    plugins:
      - serverless-add-api-key

To declare a key, let AWS generate its value, and specify a usage plan at the stage level:

    custom:
      apiKeys:
        - name: secret
          usagePlan:
            name: "global-plan"
            quota:
              limit: 1000
              period: DAY
            throttle:
              burstLimit: 100
              rateLimit: 50

Finally, it's important to require the API key on all the endpoints in the following way:

    events:
      - http:
          path: forward
          method: get
          private: true
      - http:
          path: forward
          method: head
          private: true
      - http:
          path: forward
          method: post
          private: true
      - http:
          path: forward
          method: put
          private: true

Once that's done, the API is called like this:

    curl -X GET https://ep.execute-api.eu-west-1.amazonaws.com/stage/forward -H "x-api-key: my-generated-key"

Remark: This feature is available only with REST API, not with HTTP API. It's not easy to apply to example 1, because a GitHub webhook can't add an "x-api-key" header. Something is possible with a Lambda authorizer, but it's much more complicated.
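For callers written in Python rather than curl, the same call can be sketched with the standard library. The endpoint and the key are the placeholders of the curl command, and `build_request` is a hypothetical helper. The request is only prepared here, not sent, because the endpoint is not a real one.

```python
import urllib.request

# Placeholder endpoint and key, mirroring the curl command.
ENDPOINT = "https://ep.execute-api.eu-west-1.amazonaws.com/stage/forward"
API_KEY = "my-generated-key"


def build_request(url, api_key, method="GET", body=None):
    """Prepare a request that carries the API key in the x-api-key header."""
    return urllib.request.Request(
        url,
        data=body,
        method=method,
        headers={"x-api-key": api_key},
    )


request = build_request(ENDPOINT, API_KEY)
print(request.get_method(), request.full_url)

# Sending is left commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(request, timeout=5) as response:
#     print(response.status, response.read().decode("utf-8"))
```

A call without the header, or with an invalid key, is rejected by API Gateway before it reaches the Lambda function.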

Resource Policy

A resource policy is a good way to secure a REST API, for example by limiting invocation to specific IPs or CIDR blocks.

In example 1, with the GitHub webhook, it's a good way to limit invocation to the GitHub hooks CIDR blocks only. These CIDR blocks are publicly available here. With the following policy, only GitHub IPs are able to call my REST API.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Deny",
          "Principal": "*",
          "Action": "execute-api:Invoke",
          "Resource": "execute-api:/*",
          "Condition": {
            "NotIpAddress": {
              "aws:SourceIp": [
                "192.30.252.0/22",
                "185.199.108.0/22",
                "140.82.112.0/20"
              ]
            }
          }
        },
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "execute-api:Invoke",
          "Resource": "execute-api:/*",
          "Condition": {
            "IpAddress": {
              "aws:SourceIp": [
                "192.30.252.0/22",
                "185.199.108.0/22",
                "140.82.112.0/20"
              ]
            }
          }
        }
      ]
    }

The same policy can be declared with Serverless Framework:

    provider:
      name: aws
      runtime: python3.8
      region: eu-west-1
      resourcePolicy:
        - Effect: Deny
          Principal: "*"
          Action: execute-api:Invoke
          Resource:
            - execute-api:/*/*/forward
          Condition:
            NotIpAddress:
              aws:SourceIp:
                - 192.30.252.0/22
                - 185.199.108.0/22
                - 140.82.112.0/20
        - Effect: Allow
          Principal: "*"
          Action: execute-api:Invoke
          Resource:
            - execute-api:/*/*/forward
          Condition:
            IpAddress:
              aws:SourceIp:
                - 192.30.252.0/22
                - 185.199.108.0/22
                - 140.82.112.0/20

Remark: This feature is available only with REST API, not with HTTP API.
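To check which source IPs such a policy lets through, the IpAddress / NotIpAddress conditions can be mimicked locally with Python's standard `ipaddress` module. This is only a sketch of the matching logic, not how API Gateway evaluates policies internally. `is_allowed` is a hypothetical helper, and the CIDR blocks are the ones used in the policy above, which GitHub may change over time.

```python
import ipaddress

# CIDR blocks copied from the resource policy above.
ALLOWED_BLOCKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.30.252.0/22", "185.199.108.0/22", "140.82.112.0/20")
]


def is_allowed(source_ip):
    """Return True when the source IP falls inside one of the allowed blocks."""
    address = ipaddress.ip_address(source_ip)
    return any(address in block for block in ALLOWED_BLOCKS)


print(is_allowed("140.82.115.10"))  # inside 140.82.112.0/20
print(is_allowed("203.0.113.7"))    # documentation range, outside every block
```

A check like this can be handy in a test suite to verify that an updated list of CIDR blocks still covers the addresses you expect.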

With all the security protections above, serverless.yml looks like this:

    service: serverless-proxy

    plugins:
      - serverless-python-requirements
      - serverless-prune-plugin
      - serverless-api-gateway-throttling
      - serverless-add-api-key

    custom:
      url: http://myurl:80 #to be replaced by backend url
      vpcId: vpc-123456 #to be replaced by vpc id
      subnetIds: [ subnet-123, subnet-456, subnet-789 ] #to be replaced by subnet ids
      pythonRequirements: #serverless-python-requirements configuration
        dockerizePip: true
      prune: #serverless-prune-plugin configuration: 3 versions are kept
        automatic: true
        number: 3
      apiGatewayThrottling:
        maxRequestsPerSecond: 100
        maxConcurrentRequests: 50
      apiKeys:
        - name: secret
          usagePlan:
            name: "global-plan"
            quota:
              limit: 1000
              period: DAY
            throttle:
              burstLimit: 100
              rateLimit: 50

    resources:
      Resources:
        SecurityGroup:
          Type: AWS::EC2::SecurityGroup
          Properties:
            GroupDescription: SG for Serverless Proxy forwarder
            VpcId: ${self:custom.vpcId}
            SecurityGroupEgress:
              - IpProtocol: tcp
                FromPort: 80
                ToPort: 80
                CidrIp: 0.0.0.0/0
              - IpProtocol: tcp
                FromPort: 443
                ToPort: 443
                CidrIp: 0.0.0.0/0
            Tags:
              - Key: Name
                Value: serverless-proxy-sg

    provider:
      name: aws
      runtime: python3.8
      region: eu-west-1
      resourcePolicy:
        - Effect: Deny
          Principal: "*"
          Action: execute-api:Invoke
          Resource:
            - execute-api:/*/*/forward
          Condition:
            NotIpAddress:
              aws:SourceIp:
                - 192.30.252.0/22
                - 185.199.108.0/22
                - 140.82.112.0/20
        - Effect: Allow
          Principal: "*"
          Action: execute-api:Invoke
          Resource:
            - execute-api:/*/*/forward
          Condition:
            IpAddress:
              aws:SourceIp:
                - 192.30.252.0/22
                - 185.199.108.0/22
                - 140.82.112.0/20

    functions:
      serverless-proxy:
        handler: handler.main
        name: serverless-proxy
        description: Lambda Forwarder function
        memorySize: 512
        timeout: 1
        environment:
          URL: ${self:custom.url}
        vpc:
          securityGroupIds:
            - Ref: SecurityGroup
          subnetIds: ${self:custom.subnetIds}
        events:
          - http:
              path: forward
              method: get
              private: true
          - http:
              path: forward
              method: head
              private: true
          - http:
              path: forward
              method: post
              private: true
          - http:
              path: forward
              method: put
              private: true
          #- httpApi:
          #    path: /forward
          #    method: get
          #- httpApi:
          #    path: /forward
          #    method: head
          #- httpApi:
          #    path: /forward
          #    method: post
          #- httpApi:
          #    path: /forward
          #    method: put

To go further

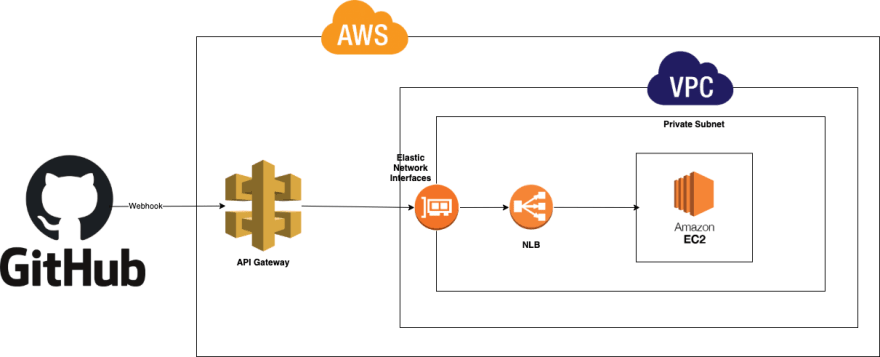

If you have a Network Load Balancer (NLB) or an Application Load Balancer (ALB) in front of your EC2 instance, it's possible to integrate it without Lambda.

It's possible because:

- HTTP API can be integrated with a private API through an NLB or an ALB

- REST API can be integrated with a private API through an NLB only

For example, with an NLB in front of the EC2 instance, Example 1 becomes:

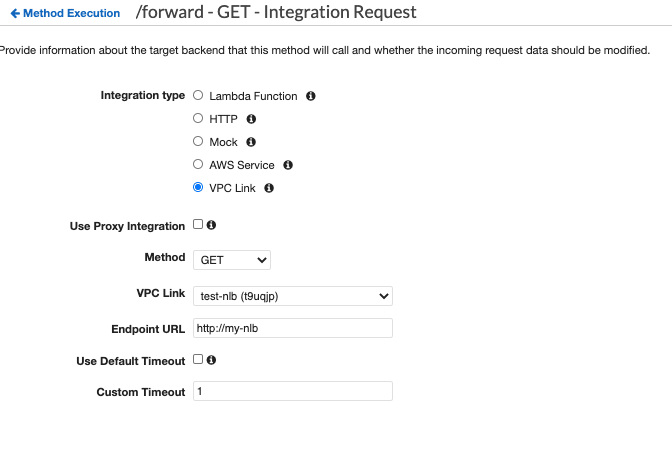

To do that you should:

- Create a VPC link in API Gateway

- Change method execution from Lambda to VPC Link

Remarks:

- I have the same recommendation as above concerning the choice between REST API and HTTP API

- All the security measures listed above also apply to API Gateway here

- This solution is simpler but also less customizable

Conclusion

This solution is relatively easy to implement, highly scalable, secure and inexpensive. It can be deployed whenever communication between a public and a private zone is an issue.

You can find the implementation of the solution in my GitHub account.
