Amazon S3 supports event notifications: you can configure a bucket to publish a notification whenever specific events occur on it. This lets us build application flows that react to changes in the bucket.

Overview of Amazon S3 Event Notifications

Currently, Amazon S3 can publish notifications for the following events:

- Object created events

- Object removal events

- Restore object events

- Reduced Redundancy Storage (RRS) object lost events

- Replication events

- S3 Lifecycle expiration events

- S3 Lifecycle transition events

- S3 Intelligent-Tiering automatic archival events

- Object tagging events

- Object ACL PUT events

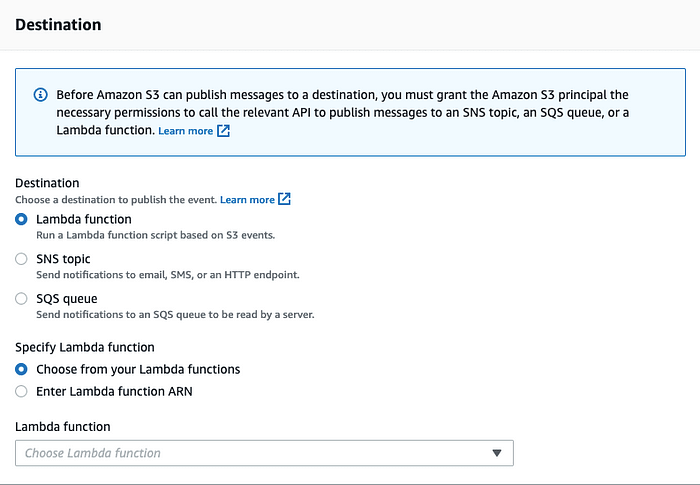

In addition, Amazon S3 can send event notifications to the following destinations:

- Amazon Simple Notification Service (Amazon SNS) topics

- Amazon Simple Queue Service (Amazon SQS) queues

- AWS Lambda functions
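Whichever destination you pick, it receives a JSON message with a `Records` array. The sketch below shows a trimmed record for an upload and a small helper that pulls out the fields a consumer usually needs. The bucket name, key, and the `summarizeRecords` helper are made up for illustration. Note that `eventName` inside the record does not carry the `s3:` prefix used when configuring event types.

```javascript
// Trimmed example of the message S3 delivers for an upload.
// Field names follow the S3 event message structure; the values are invented.
const sampleEvent = {
  Records: [
    {
      eventSource: 'aws:s3',
      eventName: 'ObjectCreated:Put', // no "s3:" prefix inside the record
      s3: {
        bucket: { name: 'easyphoto-example-bucket' },
        object: { key: 'uploads/holiday.jpg', size: 1048576 }
      }
    }
  ]
};

// Hypothetical helper: reduce each record to the fields a handler usually needs.
function summarizeRecords(event) {
  return event.Records.map((record) => ({
    category: record.eventName.split(':')[0], // e.g. "ObjectCreated"
    bucket: record.s3.bucket.name,
    key: record.s3.object.key
  }));
}

console.log(summarizeRecords(sampleEvent));
```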

Practical Implementation

We now have a theoretical understanding of how Amazon S3 event notifications work. Let’s see how to use them in a practical scenario.

Requirement

The EasyPhoto application lets its users upload images through its web application, and those images are stored in an S3 bucket. The images can vary in size and may be high resolution. We therefore need a Lambda function that processes each upload and creates a thumbnail that EasyPhoto can use in the web application, so that its users consume less data.

Suggested Solution

Here we can set up an event notification that triggers a Lambda function whenever a file is uploaded to the uploads folder in the bucket. The Lambda function then does the post-processing using the sharp npm module, and we store the final output in the same bucket under a different folder named processed.
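The folder convention can be sketched as a small pure function that maps an upload key to the key of its thumbnail. This is only an illustration: the name `toProcessedKey` and the decision to reject keys outside uploads are assumptions, and nested folders are flattened to the bare file name.

```javascript
// Folder names taken from the solution described above.
const UPLOAD_PREFIX = 'uploads/';
const PROCESSED_PREFIX = 'processed/';

// Hypothetical helper: derive the thumbnail key for an uploaded object.
function toProcessedKey(uploadKey) {
  if (!uploadKey.startsWith(UPLOAD_PREFIX)) {
    throw new Error(`Expected a key under ${UPLOAD_PREFIX}, got: ${uploadKey}`);
  }
  // Keep only the file name, so nested upload folders are flattened.
  const fileName = uploadKey.split('/').pop();
  return `${PROCESSED_PREFIX}${fileName}`;
}

console.log(toProcessedKey('uploads/cat.jpg'));
```

Because nested folders are flattened, two uploads with the same file name in different subfolders would overwrite each other's thumbnail. Keeping the relative path instead is an equally valid choice.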

Lambda Function

Let’s start by setting up the AWS Lambda function that processes the image files.

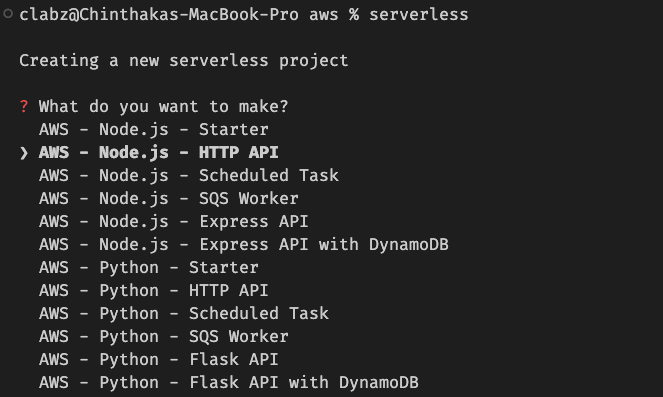

Here I’ve used the Serverless Framework CLI to build this AWS Lambda function. If you don’t have the CLI installed, install and configure it first.

After that, create a serverless application using the following command:

$ serverless

When prompted for a template, choose the Node.js HTTP API option. The generated handler can then be adapted to process the incoming event notifications from Amazon S3.

Project structure,

Install the necessary npm modules in the application:

- aws-sdk

- sharp: If you develop on macOS, install sharp with binaries built for Linux x64 rather than the normal way (i.e. npm i sharp --save). The normal install automatically adds binaries for macOS, but AWS Lambda functions run on Amazon Linux 2 machines with x64 processors, so the macOS binaries cause the function to fail at runtime.

Then we can build the function that processes the images. Copy the following code snippet into handler.js.

```javascript
"use strict";

const AWS = require('aws-sdk');
const sharp = require('sharp');

// AWS S3 client
const s3 = new AWS.S3();

module.exports.processFile = async (event, context) => {
  const s3MetaData = event.Records[0].s3;
  const bucketName = s3MetaData.bucket.name;
  const processed = 'processed';
  // Object keys arrive URL-encoded in the event (spaces become "+").
  const fileKey = decodeURIComponent(s3MetaData.object.key.replace(/\+/g, ' '));

  console.log(
    `S3 Metadata bucketname : ${bucketName} and uploaded file key : ${fileKey}`
  );

  // Download the uploaded image.
  let origimage;
  try {
    const params = {
      Bucket: bucketName,
      Key: fileKey
    };
    console.log(`Original params ${JSON.stringify(params)}`);
    origimage = await s3.getObject(params).promise();
  } catch (error) {
    console.log(error);
    return;
  }

  // Set thumbnail width. Resize will set the height automatically to maintain aspect ratio.
  const width = 200;

  // Use the sharp module to resize the image and save it in a buffer.
  let buffer;
  try {
    buffer = await sharp(origimage.Body).resize(width).toBuffer();
  } catch (error) {
    console.log(error);
    return;
  }

  // Upload the thumbnail to the processed folder of the same bucket.
  // putResult is declared outside the try block so the final log can read it.
  let putResult;
  try {
    const destparams = {
      Bucket: bucketName,
      Key: `${processed}/${fileKey.split("/").pop()}`,
      Body: buffer,
      // "image" is not a valid MIME type; sharp usually keeps the input
      // format, so reuse the content type of the source object.
      ContentType: origimage.ContentType
    };
    // Log only the bucket and key; serializing Body would dump the whole image.
    console.log(`Destination params ${JSON.stringify({ Bucket: destparams.Bucket, Key: destparams.Key })}`);
    putResult = await s3.putObject(destparams).promise();
  } catch (error) {
    console.log(error);
    return;
  }

  console.log(`File conversion done ${bucketName} / ${fileKey} ${JSON.stringify(putResult)}`);

  return {
    statusCode: 200
  };
};
```
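One detail of the event payload is easy to miss: the object key in the notification is URL-encoded, so a file named `my photo.jpg` arrives as `my+photo.jpg`. Passing the raw value to `getObject` fails with a missing-key error for such files. A minimal sketch of the decoding step follows; the helper name `decodeS3Key` is an assumption.

```javascript
// Hypothetical helper: turn the key from an S3 event record back into the
// real object key. "+" stands for a space; everything else is percent-encoded.
function decodeS3Key(rawKey) {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

console.log(decodeS3Key('uploads/my+photo.jpg'));
console.log(decodeS3Key('uploads/caf%C3%A9.png'));
```

The `+` replacement has to happen before `decodeURIComponent`, otherwise a literal plus sign encoded as `%2B` would be turned into a space as well.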

Here I’ve changed the default function name to processFile, so you need to change it in serverless.yml as well:

```yaml
functions:
  processFile:
    handler: handler.processFile
    events:
      - httpApi:
          path: /
          method: get
```

The function code is now complete, but there is one more thing to configure for this Lambda function.

As you can see in the code sample, we read from the S3 bucket and write the processed images back to it. The Lambda function therefore needs permission to call getObject and putObject on that bucket.

We can grant these permissions in the same serverless.yml with the following snippet:

```yaml
provider:
  name: aws
  runtime: nodejs14.x
  timeout: 10
  stage: dev
  iam:
    role:
      statements:
        - Effect: "Allow"
          Action:
            - "s3:GetObject"
            - "s3:PutObject"
          Resource: "arn:aws:s3:::<S3_BUCKET_NAME>/*"
```
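The statement above allows both actions on every key in the bucket. If you prefer something tighter, one option is to allow reads only under uploads and writes only under processed. The sketch below expresses that as plain JavaScript objects that mirror the YAML structure. The function name `buildS3Statements` and the example bucket name are illustrative, not part of the original project.

```javascript
// Hypothetical helper: least-privilege statements for the thumbnail function.
// Reads are limited to uploads/, writes to processed/.
function buildS3Statements(bucketName) {
  const bucketArn = `arn:aws:s3:::${bucketName}`;
  return [
    {
      Effect: 'Allow',
      Action: ['s3:GetObject'],
      Resource: `${bucketArn}/uploads/*`
    },
    {
      Effect: 'Allow',
      Action: ['s3:PutObject'],
      Resource: `${bucketArn}/processed/*`
    }
  ];
}

console.log(JSON.stringify(buildS3Statements('easyphoto-example-bucket'), null, 2));
```

The same two entries can be written directly under `statements:` in serverless.yml in place of the single combined statement.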

Finally, serverless.yml should look like this:

```yaml
service: image-processing-lambda

frameworkVersion: '3'

provider:
  name: aws
  runtime: nodejs14.x
  timeout: 10
  stage: dev
  iam:
    role:
      statements:
        - Effect: "Allow"
          Action:
            - "s3:GetObject"
            - "s3:PutObject"
          Resource: "arn:aws:s3:::<S3_BUCKET_NAME>/*"

functions:
  processFile:
    handler: handler.processFile
    events:
      - httpApi:
          path: /
          method: get
```

With that done, we can deploy the function to AWS Lambda using the following command:

$ serverless deploy

Our Lambda function is now ready, so we can focus on the S3 bucket configuration.

S3 Bucket

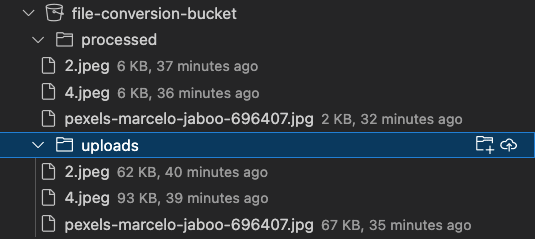

As mentioned earlier, we need two folders under the same bucket: one for the uploads and one for the processed files.

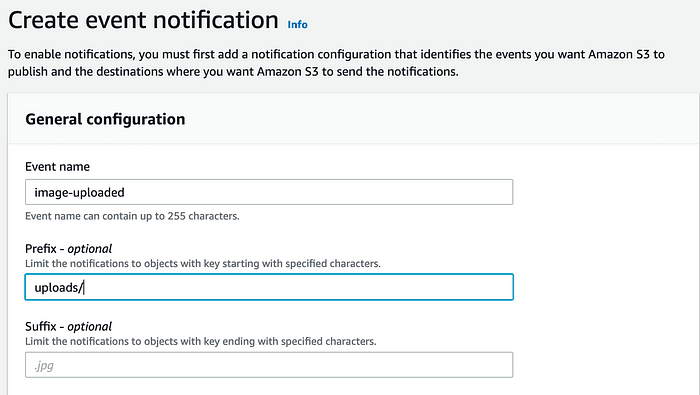

Then we can set up an event notification that sends object creation events to the Lambda function we deployed.

Go to Properties -> Event notifications -> Create event notification.

Choose All object create events from the event types and set the prefix to uploads/, so that thumbnails written to the processed folder do not trigger the function again. Then choose the deployed Lambda function as the destination.
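If you would rather script this step than click through the console, the same settings can be expressed as the parameter object that the AWS SDK for JavaScript (v2) method `s3.putBucketNotificationConfiguration` accepts. The sketch below only builds that object. The helper name `buildNotificationParams`, the bucket name, and the function ARN are placeholders, and the `uploads/` prefix filter is an assumption that keeps thumbnails written under processed from re-triggering the function.

```javascript
// Hypothetical helper: parameters for s3.putBucketNotificationConfiguration.
function buildNotificationParams(bucketName, lambdaArn) {
  return {
    Bucket: bucketName,
    NotificationConfiguration: {
      LambdaFunctionConfigurations: [
        {
          LambdaFunctionArn: lambdaArn,
          // Same as "All object create events" in the console.
          Events: ['s3:ObjectCreated:*'],
          // Only react to objects whose key starts with uploads/.
          Filter: {
            Key: { FilterRules: [{ Name: 'prefix', Value: 'uploads/' }] }
          }
        }
      ]
    }
  };
}

const params = buildNotificationParams(
  'easyphoto-example-bucket',
  'arn:aws:lambda:us-east-1:123456789012:function:example-processFile'
);
console.log(JSON.stringify(params, null, 2));
```

With the v2 SDK you would pass this to `s3.putBucketNotificationConfiguration(params).promise()`. Keep in mind that this call replaces the bucket's existing notification configuration, and that S3 also needs permission to invoke the function. The console typically adds that permission for you, while a script has to grant it separately.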

All done. We have now configured the S3 bucket to send upload event notifications to a serverless application for post-processing.

You can test the application from the S3 dashboard: upload any image file to the uploads folder, and the Lambda function will do the post-processing and store the result in the processed folder of the same bucket.
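While testing, it can help to have the function ignore objects it should not touch, such as non-image files or anything outside the uploads folder. Below is a minimal sketch of such a guard. The name `shouldProcess` and the extension list are assumptions, so adjust the list to the formats you actually expect.

```javascript
// Assumed list of extensions to treat as images; extend as needed.
const SUPPORTED_EXTENSIONS = ['.jpg', '.jpeg', '.png', '.webp'];

// Hypothetical guard: true only for image files under uploads/.
function shouldProcess(key) {
  // S3 keys are case-sensitive, so the prefix is compared as-is.
  const inUploads = key.startsWith('uploads/');
  // File extensions are compared case-insensitively.
  const lowerKey = key.toLowerCase();
  const isImage = SUPPORTED_EXTENSIONS.some((ext) => lowerKey.endsWith(ext));
  return inUploads && isImage;
}

console.log(shouldProcess('uploads/cat.JPG'));
console.log(shouldProcess('processed/cat.jpg'));
console.log(shouldProcess('uploads/notes.txt'));
```

Calling a guard like this at the top of the handler and returning early is also a cheap second line of defence against the function re-triggering itself on its own output.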

Wrapping Up

Thanks for reading this article on how to configure Amazon S3 event notifications and post-process images automatically using a serverless AWS Lambda application.

You can find all the source code on GitHub.

Feel free to write down your views. 👐
