Before talking about the Ingress controller, let's first understand what an Ingress is.

What is Ingress?

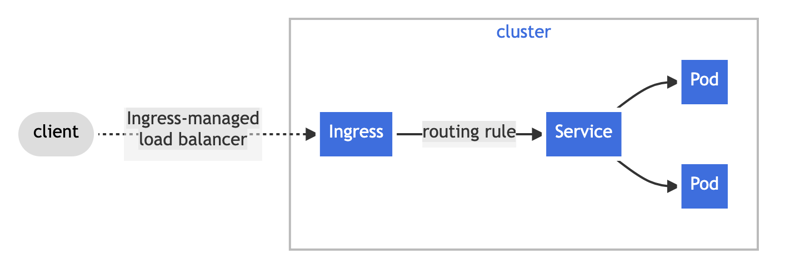

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

Ingress is not actually a type of service. Instead, it is an entry point that sits in front of multiple services in the cluster. It can be defined as a collection of routing rules that govern how external users access services running inside a Kubernetes cluster.

The image above shows a simple example in which an Ingress sends all of its traffic to one Service.
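In Terraform terms, the single-Service case could look roughly like the sketch below. It uses the Kubernetes provider's `kubernetes_ingress_v1` resource (the same resource used later in this article) and assumes a hypothetical Service named `my-service` listening on port 80. With only a `default_backend` and no rules, every request that reaches the Ingress is sent to that one Service.

```hcl
# Hypothetical example: an Ingress with no rules, only a default backend,
# so all incoming traffic goes to a single Service.
resource "kubernetes_ingress_v1" "single_service" {
  metadata {
    name = "single-service-ingress" # hypothetical name
  }

  spec {
    ingress_class_name = "nginx"

    default_backend {
      service {
        name = "my-service" # hypothetical Service
        port {
          number = 80
        }
      }
    }
  }
}
```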

What is an Ingress controller?

An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional frontends to help handle the traffic.

In order for the Ingress resource to work, the cluster must have an ingress controller running.

Creating an Ingress resource on its own has no effect.

Unlike other types of controllers which run as part of the kube-controller-manager binary, Ingress controllers are not started automatically with a cluster.

Kubernetes as a project supports and maintains AWS, GCE, and nginx ingress controllers.

In this article, I will create an NGINX Ingress controller and then build a full example that shows the traffic flow.

I've implemented a Terraform module that creates an NGINX Ingress controller, together with the full example described here, in my GitHub repo simple-nginx-ingress-controller.

Please see the Getting started section in its README to learn how to use it.

Prerequisites:

1- Terraform: an infrastructure-as-code (IaC) tool; use version >= 0.13.1.

2- An existing EKS cluster. If you don't know how to create one, have a look at my previous article, where I explained how to create a simple EKS cluster using Terraform.

3- AWS CLI: a command-line tool for working with AWS services, including Amazon EKS.

4- kubectl: a command-line tool for working with Kubernetes clusters.
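The Terraform version requirement above can also be pinned in code. This is a minimal sketch that assumes the providers come from the `hashicorp` namespace. The Helm constraint reflects my understanding that the `set { ... }` block syntax used later in this article belongs to the 2.x releases of the Helm provider, while 3.x switched to a list attribute.

```hcl
terraform {
  # Matches the Terraform prerequisite above.
  required_version = ">= 0.13.1"

  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.0" # assumption: keeps the `set { ... }` block syntax
    }
    kubernetes = {
      source = "hashicorp/kubernetes"
    }
  }
}
```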

Steps:

1- Create an EKS cluster.

2- Create an Ingress NGINX controller.

3- Create Pods for app1 and app2.

4- Create Services for app1 and app2.

5- Create an Ingress resource.

6- Run the code.

7- Try to connect to your Pods using the load balancer URL.

8- Clean up.

1- Create an EKS cluster

I'll use the Terraform module I created previously.

That module creates a VPC containing two public subnets with their public route tables, an internet gateway attached to the VPC, and an EKS cluster inside it with two worker nodes.

Please see the Getting started section in its README to learn how to use it.

```hcl
module "public_eks_cluster" {
  source = "git::https://github.com/Noura98Houssien/simple-EKS-cluster.git?ref=v0.0.1"

  vpc_name     = "my-VPC1"
  cluster_name = "my-EKS1"

  desired_size   = 2
  max_size       = 2
  min_size       = 1
  instance_types = ["t3.medium"]
}
```
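The `kubernetes` and `helm` providers used in the next steps also need credentials for this cluster. The repository may wire this up differently; the sketch below shows one common pattern. It assumes the cluster name `my-EKS1` from the module call, an `aws` provider already configured for the cluster's region, and Helm provider 2.x block syntax. The `depends_on` asks Terraform to read the cluster only after the module has been applied. Configuring providers from a cluster created in the same apply is known to be fragile, so applying the cluster first is a common workaround.

```hcl
# Assumption: the name matches cluster_name in the module call above.
data "aws_eks_cluster" "this" {
  name       = "my-EKS1"
  depends_on = [module.public_eks_cluster]
}

data "aws_eks_cluster_auth" "this" {
  name       = "my-EKS1"
  depends_on = [module.public_eks_cluster]
}

# Used by the kubernetes_*_v1 resources in the following steps.
provider "kubernetes" {
  host                   = data.aws_eks_cluster.this.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.this.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.this.token
}

# Used by helm_release; the nested `kubernetes` block is Helm provider 2.x syntax.
provider "helm" {
  kubernetes {
    host                   = data.aws_eks_cluster.this.endpoint
    cluster_ca_certificate = base64decode(data.aws_eks_cluster.this.certificate_authority[0].data)
    token                  = data.aws_eks_cluster_auth.this.token
  }
}
```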

2- Create an Ingress NGINX controller

In the nginx-ingress-controller.tf file, I installed the NGINX controller with the nginx-ingress-controller Helm chart from the https://charts.bitnami.com/bitnami repository, using Terraform's helm_release resource.

Helm is a package manager for Kubernetes that helps you define, install, and upgrade applications running on Kubernetes.

```hcl
resource "helm_release" "nginx-ingress-controller" {
  name       = "nginx-ingress-controller"
  repository = "https://charts.bitnami.com/bitnami"
  chart      = "nginx-ingress-controller"

  set {
    name  = "service.type"
    value = "LoadBalancer"
  }
}
```

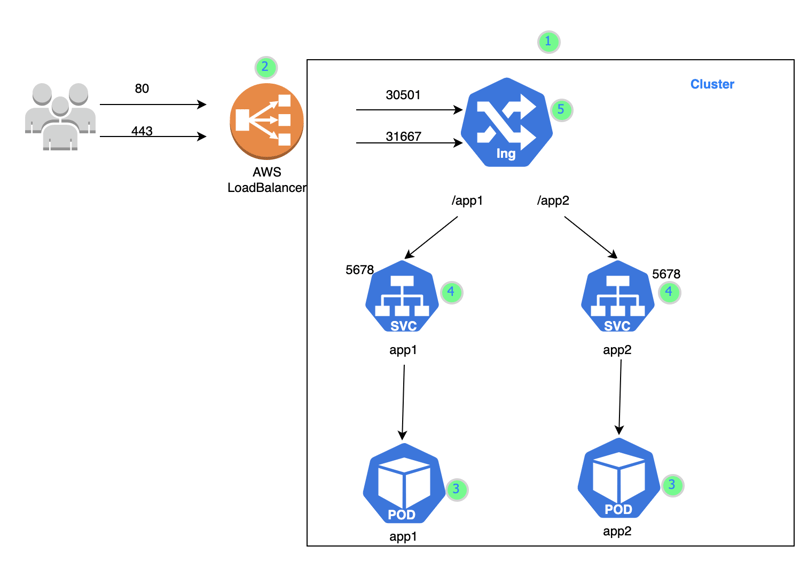

In the default namespace, the Helm chart creates two Deployments (the NGINX ingress controller and its default backend), two ReplicaSets, two Pods, and two Services. We will use the nginx-ingress-controller Service, whose type is LoadBalancer; that load balancer listens on ports 80 and 443 to expose our applications to the internet.
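If you would rather keep the controller out of the default namespace, the same release could be installed into its own namespace. This is a sketch assuming a hypothetical namespace called `ingress-nginx`; `namespace` and `create_namespace` are standard `helm_release` arguments. With this variant, the `kubectl get svc` command used later would need `-n ingress-nginx`.

```hcl
resource "helm_release" "nginx-ingress-controller" {
  name       = "nginx-ingress-controller"
  repository = "https://charts.bitnami.com/bitnami"
  chart      = "nginx-ingress-controller"

  # Hypothetical namespace; Helm creates it if it does not already exist.
  namespace        = "ingress-nginx"
  create_namespace = true

  # Expose the controller through a cloud load balancer, as in the original.
  set {
    name  = "service.type"
    value = "LoadBalancer"
  }
}
```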

3- Create Pods for app1 and app2

In the k8s-pods.tf file, I created two Pods, one for app1 and another for app2.

Here is the Pod for app1; the one for app2 is the same apart from its values.

In the metadata section, we define the Pod name my-app1 and give it the label app with the value app1.

We will use that label to select the Pod when creating a Service.

In the spec section, we define the required container parameters: the container name "my-app1" and the image "hashicorp/http-echo". This image serves the text passed in the args parameter, here "Hello from my app 1".

```hcl
resource "kubernetes_pod_v1" "app1" {
  metadata {
    name = "my-app1"
    labels = {
      "app" = "app1"
    }
  }

  spec {
    container {
      image = "hashicorp/http-echo"
      name  = "my-app1"
      args  = ["-text=Hello from my app 1"]
    }
  }
}
```
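Following the same pattern, the app2 Pod might look like the sketch below. The resource name `app2`, the Pod name, the label, and the message are inferred from the Service and Ingress steps and from the "Hello from my app 2" message shown at the end of the article, so the repository's exact file may differ.

```hcl
resource "kubernetes_pod_v1" "app2" {
  metadata {
    name = "my-app2"
    # The Service for app2 selects Pods by this label.
    labels = {
      "app" = "app2"
    }
  }

  spec {
    container {
      image = "hashicorp/http-echo"
      name  = "my-app2"
      # http-echo serves this text on every request.
      args = ["-text=Hello from my app 2"]
    }
  }
}
```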

4- Create Services for app1 and app2

In the k8s-services.tf file, I created two Services, one for the my-app1 Pod and another for the my-app2 Pod

that we created in the previous step.

Here is the Service for the "my-app1" Pod; the one for the "my-app2" Pod is the same apart from its values.

In the metadata section, we define the Service name "my-app1-service", and in the spec section we select the Pod that should sit behind that Service, using the same label we set on the Pod resource.

The expression kubernetes_pod_v1.app1.metadata.0.labels.app gives us the value of the app label on the "my-app1" Pod, which is "app1". We also define the port the Service listens on.

5678 is the default port of the hashicorp/http-echo image.

```hcl
resource "kubernetes_service_v1" "app1_service" {
  metadata {
    name = "my-app1-service"
  }

  spec {
    selector = {
      app = kubernetes_pod_v1.app1.metadata.0.labels.app
    }

    port {
      port = 5678
    }
  }
}
```
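The app2 Service could be written the same way. This sketch assumes the app2 Pod resource in k8s-pods.tf is named `kubernetes_pod_v1.app2`. It also spells out `target_port`, which would otherwise default to the value of `port`, to make it visible that traffic is forwarded to http-echo's port 5678 inside the container.

```hcl
resource "kubernetes_service_v1" "app2_service" {
  metadata {
    # This name must match the backend used by the /app2 Ingress path.
    name = "my-app2-service"
  }

  spec {
    # Select the Pod labeled app = "app2".
    selector = {
      app = kubernetes_pod_v1.app2.metadata.0.labels.app
    }

    port {
      port        = 5678 # port the Service listens on
      target_port = 5678 # container port used by http-echo
    }
  }
}
```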

5- Create an Ingress resource

In the k8s-ingress.tf file, I created an Ingress resource that defines the routing rules governing how external users reach the Services running inside the Kubernetes cluster.

```hcl
resource "kubernetes_ingress_v1" "ingress" {
  wait_for_load_balancer = true

  metadata {
    name = "simple-fanout-ingress"
  }

  spec {
    ingress_class_name = "nginx"

    rule {
      http {
        path {
          backend {
            service {
              name = "my-app1-service"
              port {
                number = 5678
              }
            }
          }
          path = "/app1"
        }

        path {
          backend {
            service {
              name = "my-app2-service"
              port {
                number = 5678
              }
            }
          }
          path = "/app2"
        }
      }
    }
  }
}
```

Here we have two paths. For the /app1 path, the backend is "my-app1-service", which means that if the user appends "/app1" to the load balancer URL, they should see the hello message from app1.

For the /app2 path, the backend is "my-app2-service".

That means that if the user appends "/app2" to the load balancer URL, they should see the hello message from app2.
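The paths above do not set a path type. If I read the provider documentation correctly, `path_type` then defaults to `ImplementationSpecific`, which leaves the matching behavior up to the controller. To make the rule explicit, each path can declare its type. Below is a fragment for the /app1 path, assuming prefix matching is what you want. `Prefix` matches /app1 and /app1/... but not /app1foo, while `Exact` would match /app1 only.

```hcl
# Fragment for spec.rule.http: the /app1 path with an explicit path type.
path {
  path      = "/app1"
  path_type = "Prefix" # matches /app1 and /app1/...; "Exact" matches /app1 only

  backend {
    service {
      name = "my-app1-service"
      port {
        number = 5678
      }
    }
  }
}
```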

Let's run the code to get the load balancer URL (which is created along with the ingress controller) and test those paths.

6- Run the code

```shell
git clone https://github.com/Noura98Houssien/simple-nginx-ingress-controller.git
cd simple-nginx-ingress-controller/examples
terraform init
terraform plan
terraform apply
```

```
Plan: 23 to add, 0 to change, 0 to destroy.

Outputs:

k8s_service_ingress_elb = "aa593a2f34eb34e7daf843d81ba15480-956643453.us-east-1.elb.amazonaws.com"
```
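One way to produce an output like `k8s_service_ingress_elb` is to read the load balancer hostname from the Ingress status. This is a sketch, and the repository's actual output may obtain the value differently. Because `wait_for_load_balancer = true` is set on the Ingress, Terraform waits until the controller has published the address before the value is read.

```hcl
# Possible definition; the repository may read the hostname from elsewhere,
# for example from the controller's LoadBalancer Service.
output "k8s_service_ingress_elb" {
  value = kubernetes_ingress_v1.ingress.status.0.load_balancer.0.ingress.0.hostname
}
```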

7- Try to connect to your Pods using the load balancer URL

After applying the code, you get the k8s_service_ingress_elb output,

or you can get the URL from the Services running in the cluster.

To see the Services, you first need to connect to your cluster. Replace region-code and my-cluster with your region and cluster name (my-EKS1 in this example):

```shell
aws eks update-kubeconfig --region region-code --name my-cluster
```

That command updates your kubectl configuration (kubeconfig) file.

Then run the following command to see the Services created in the default namespace:

```shell
kubectl get svc
```

```
NAME                                       TYPE           CLUSTER-IP      EXTERNAL-IP                                                              PORT(S)                      AGE
nginx-ingress-controller                   LoadBalancer   172.20.184.7    aa593a2f34eb34e7daf843d81ba15480-956643453.us-east-1.elb.amazonaws.com   80:30047/TCP,443:31165/TCP   13m
nginx-ingress-controller-default-backend   ClusterIP      172.20.231.41   <none>                                                                   80/TCP                       13m
```

Copy and paste that URL into your browser and add /app1 to the end of it.

You should see a page with the message "Hello from my app 1".


Do the same for /app2, and you should see a page with the message "Hello from my app 2".

8- Clean Up

```shell
terraform destroy
```

```
Plan: 0 to add, 0 to change, 23 to destroy.

Changes to Outputs:
  - k8s_service_ingress_elb = "aa593a2f34eb34e7daf843d81ba15480-956643453.us-east-1.elb.amazonaws.com" -> null
```

Summary:

In this article, I explained what Ingress and Ingress controllers are and how to use them. I built a simple example without diving into complex details, to keep it as simple as possible. I hope this has added something new to your knowledge.
