by author Craig Buckler

The average web page takes seven seconds to load on a desktop device and 20 seconds on mobile. It makes 70 HTTP requests and downloads more than 2MB of data including:

- 20KB of HTML

- 60KB of CSS over seven files

- 430KB of JavaScript in 20 files

- 26 images totalling 900KB

Source: httparchive.org

Slow sites are bad for:

- Users

  - Downloading a single page on a mobile phone costs $0.29 in Canada, $0.19 in Japan, and $0.13 in the US. Those browsing in Vanuatu, Mauritania, or Madagascar pay more than 1% of their daily income.

- Business

  - Slow sites rank lower in search engines, incur higher hosting costs, are more difficult to maintain, and reduce conversion rates.

- The environment

  - The average page emits 1.76g of CO2 per view. A site with 10,000 monthly page views spews 211kg of CO2 per year.

There is a simple solution to web performance: transmit less data and do less processing. The practicalities are more challenging.

The following tips may be unconventional, controversial, or counter-intuitive, and some reverse previous advice. As always, the best result will depend on what you're trying to achieve. Testing is imperative: tools such as Lighthouse and Asayer.io can help evaluate the impact of your changes.

1. Set image width and height attributes

At the dawn of the web, developers added width and height attributes to every <img> element to reserve space on the page. The attributes became redundant when Responsive Web Design techniques arrived in 2010 since the values were overridden by CSS which sized an image to the width of its container:

```css
img {
  width: 100%;
  height: auto;
}
```

or to the width of its container but never wider than its own pixel width:

```css
img {
  max-width: 100%;
  height: auto;
}
```

Vertical space could only be allocated once an image started to download and its dimensions were determined. Page content would then reflow and cause a content jump that was especially noticeable on smaller or slower devices.

To solve this issue, the width and height attributes are back! Add them to your images and modern browsers will calculate the layout space according to the aspect ratio:

```html
<!-- set a 400:300 (4:3) aspect ratio -->
<img src="image.webp" width="400" height="300" alt="an image" />
```

The CSS width and height: auto rules shown above are still required. If this image is placed into a 200px container, the browser reserves an area of 200 x 150px and page reflows do not occur.
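The reserved height is simple proportion. As a rough sketch of the arithmetic (not browser source code), the hypothetical reservedHeight helper below scales the ratio of the width and height attributes to the width that CSS gives the image:

```javascript
// Sketch of the space a browser can reserve before an image downloads.
// attrWidth/attrHeight come from the <img> attributes; renderedWidth is the
// width CSS gives the image (for example, its container width with width: 100%).
function reservedHeight(attrWidth, attrHeight, renderedWidth) {
  if (attrWidth <= 0) {
    throw new RangeError('width attribute must be positive');
  }
  // height: auto preserves the attribute aspect ratio (height / width)
  return renderedWidth * (attrHeight / attrWidth);
}

// The example above: a 400 x 300 image placed in a 200px container
console.log(reservedHeight(400, 300, 200)); // 150
```

Because only the ratio matters, the attributes can be any pair with the correct proportions; the rendered size still comes from CSS.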

An alternative option is the new CSS [aspect-ratio](https://developer.mozilla.org/docs/Web/CSS/aspect-ratio) property but this has less browser support:

```css
img.ratio4-3 {
  width: 100%;
  height: auto;
  aspect-ratio: 4 / 3;
}
```

2. Compression can result in slower responses

Algorithms such as gzip and Brotli are commonly used to reduce HTTP payloads by compressing data on the server before it is sent to the client. The process is worthwhile when the combined time for server compression, network delivery, and browser decompression is less than the time taken to send the identical uncompressed asset.

Higher compression results in smaller files but requires more processing time. This rarely matters for static assets such as CSS, JavaScript, and SVG files, which can be compressed once and delivered to everyone.

However, compression can be an issue for server-rendered content which changes according to the user request (social media messages, shopping baskets, etc). A faster, less effective algorithm -- or even no compression -- can result in a faster response.
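The break-even rule described above can be written as a rough model. This is a simplification under stated assumptions: it ignores latency, round trips, and TCP slow start, and every input (the hypothetical compressionPaysOff parameters) is a measurement you would have to take for your own server and audience.

```javascript
// Rough model: compression pays off only when
//   compress time + transfer of the smaller payload + decompress time
// is less than the time to transfer the raw payload.
// bytesPerMs is an assumed effective network throughput.
function compressionPaysOff({ rawBytes, compressedBytes, compressMs, decompressMs, bytesPerMs }) {
  const uncompressedMs = rawBytes / bytesPerMs;
  const compressedMs = compressMs + compressedBytes / bytesPerMs + decompressMs;
  return compressedMs < uncompressedMs;
}

// Static asset compressed ahead of time (compressMs = 0) on a slower network
console.log(compressionPaysOff({
  rawBytes: 100000, compressedBytes: 25000, compressMs: 0, decompressMs: 1, bytesPerMs: 1000,
})); // true

// Dynamic response compressed at a slow, high setting on a fast network
console.log(compressionPaysOff({
  rawBytes: 20000, compressedBytes: 15000, compressMs: 20, decompressMs: 1, bytesPerMs: 10000,
})); // false
```

The second case shows why a faster, lighter setting (or none at all) can win for per-request content: the saved bytes are worth less than the extra processing time.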

3. File concatenation can make a site slower

Developers have long been advised to concatenate CSS and JavaScript into single files to reduce the number of HTTP requests. Image sprites, which combine multiple icons in one file, are a similar technique.

This was good advice for HTTP/1.1 connections but most hosts now offer HTTP/2 or above. HTTP/2 is multiplexed: multiple request and response messages can occur on a single connection at the same time. Making numerous requests is less expensive.

Consider a complex web application with 5,000KB of JavaScript split over 50 files of 100KB each. If they remain separate, changing a single .js file results in a 100KB update for existing users. When the files are concatenated, the whole 5,000KB payload must be invalidated and downloaded again.
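The cache arithmetic in that example can be sketched as a small function. It assumes each file is cached independently and that any change invalidates the entire file containing it; the module names are hypothetical.

```javascript
// Kilobytes existing users must re-download after a release.
function redownloadKB(files, changedNames, bundled) {
  const changed = new Set(changedNames);
  const changedFiles = files.filter((f) => changed.has(f.name));
  if (bundled) {
    // One concatenated file: a single change invalidates the entire bundle
    return changedFiles.length > 0 ? files.reduce((sum, f) => sum + f.size, 0) : 0;
  }
  // Separate files: only the edited files are fetched again
  return changedFiles.reduce((sum, f) => sum + f.size, 0);
}

// The scenario above: 50 hypothetical modules of 100KB each, one of them edited
const files = Array.from({ length: 50 }, (_, i) => ({ name: `module${i}.js`, size: 100 }));
console.log(redownloadKB(files, ['module7.js'], false)); // 100
console.log(redownloadKB(files, ['module7.js'], true)); // 5000
```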

4. Multiple domains may not improve performance

Moving assets such as images, fonts, or scripts to a different domain -- perhaps on a Content Delivery Network (CDN) -- permits the browser to open additional connections. On HTTP/1.1, two domains double the number of concurrent requests.

As mentioned, HTTP/2 permits any number of requests on a single connection. Separate domains can have a detrimental effect on performance since each incurs an additional DNS look-up and TCP connection.
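A quick way to audit this is to count how many distinct origins a page's assets come from, since each new origin needs its own DNS lookup and connection (plus a TLS handshake on HTTPS). This sketch uses the standard URL class; the page and asset URLs are hypothetical.

```javascript
// Return the distinct origins a list of asset URLs resolves to.
// Relative URLs resolve against pageUrl, so they count as the page's own origin.
function distinctOrigins(assetUrls, pageUrl) {
  const origins = new Set();
  for (const url of assetUrls) {
    origins.add(new URL(url, pageUrl).origin);
  }
  return [...origins];
}

// Hypothetical assets: two local files, one CDN image, one third-party font
const assets = [
  '/css/main.css',
  '/js/app.js',
  'https://cdn.example.com/img/logo.webp',
  'https://fonts.example.net/font.woff2',
];
console.log(distinctOrigins(assets, 'https://www.example.com/').length); // 3
```

Every origin beyond the first is a candidate for consolidation onto the primary domain when the site is served over HTTP/2.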

5. Use font repositories effectively

Font repositories such as Google Fonts make management easy. However, self-hosting fonts on your own domain can give a performance boost:

- there is no additional DNS lookup

- a smaller set of minified font files can be created

- no additional CSS is appended to the payload

If you continue to use a repository, always load the font using an HTML <link> element toward the top of the <head>, e.g.

```html
<link href="https://fonts.googleapis.com/css?family=Open+Sans" rel="stylesheet" />
```

This downloads the font in parallel with other fonts and stylesheets.

A CSS @import rule seems cleaner but it blocks the download and parsing of its stylesheet until the font CSS has been processed.

6. Avoid base64 encoding

Images, fonts, and other assets can be base64-encoded within HTML, CSS, and JavaScript files. It results in an indecipherable string of data but reduces the number of HTTP requests.

```css
/* fake and cropped base64 example */
.myimg {
  background-image: url('data:image/webp;base64,0123456789ABCDEF+etc+etc');
}
```

This can have a negative effect on performance:

- Base64-encoded data is around 33% larger than its binary equivalent (every three bytes become four characters).

- The browser takes longer to parse and process base64 strings.

- The resulting file with encoded data is considerably larger.

- Altering a small asset invalidates the whole of its (cached) container file.

- Multiple requests are less expensive on HTTP/2 connections.

Only consider base64 if an asset is unlikely to change and very small -- perhaps no longer than its equivalent URL.
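Both the size overhead and the "no longer than its URL" rule of thumb can be checked with a little arithmetic. This is a sketch; worthInlining is a hypothetical helper and the example URLs are made up. It measures raw string length only, before gzip or Brotli is applied.

```javascript
// Base64 turns every 3 bytes into 4 ASCII characters (padding the last group),
// so encoded data is about 33% larger than the original binary.
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3);
}

// Rule of thumb from the text: inline only if the data URI is no longer
// than the URL it would replace.
function worthInlining(byteLength, mimeType, assetUrl) {
  const dataUriLength = `data:${mimeType};base64,`.length + base64Length(byteLength);
  return dataUriLength <= assetUrl.length;
}

console.log(base64Length(900)); // 1200
console.log(worthInlining(2000, 'image/webp', '/img/photo.webp')); // false
console.log(worthInlining(3, 'image/gif', 'https://cdn.example.com/images/icons/dot.gif')); // true
```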

7. Load ES6 code in the HTML <head>

Modern frameworks often introduce build processes such as Babel to transpile ES6-level JavaScript to older ES5 scripts. The resulting code works in more browsers but:

- Modern browsers provide good ES6 support. The primary reason for serving ES5 is Internet Explorer: a decade-old application with a tiny market share.

- ES5 bundles can be considerably larger than ES6. Why serve less efficient scripts to the majority of users?

Consider one of these options if you must support IE:

- Use progressive enhancement techniques.

  - All browsers can load the site, but functionality is limited to whatever can be achieved in HTML and CSS alone.

- Build both ES6 and ES5 scripts and serve to browsers according to support.

  - This has become easier because browsers which support ES6 modules have good ES6 support.

Developers often place <script> tags just before the closing HTML <body> tag. This ensures the content can be viewed while a script loads even in very old browsers.

However, ES6 modules defer execution by default. Module scripts and their imports are fetched in parallel with HTML parsing and executed in order once the document has been parsed. Similarly, ES5 <script> tags can use a defer attribute, which is supported in IE10 and IE11.

It is more efficient to reference scripts toward the top of the HTML <head> so they download as soon as possible. The following code loads whichever script the browser supports and runs it once the page content is ready:

```html
<script type="module" src="es6-script.js"></script>
<script nomodule defer src="es5-script.js"></script>
```

8. Check the efficiency of ES5 transpilation

If you're not willing to jump to an ES6-only world just yet, always check the efficiency of your transpiled scripts. Consider this ES6 code:

```javascript
const myArray = ['a', 'b', 'c'];

for (const element of myArray) {
  console.log(element);
}
```

Babel outputs more than 600 characters of ES5 code for every for...of loop it encounters:

```javascript
'use strict';

var myArray = ['a', 'b', 'c'];
var _iteratorNormalCompletion = true;
var _didIteratorError = false;
var _iteratorError = undefined;

try {
  for (var _iterator = myArray[Symbol.iterator](), _step; !(_iteratorNormalCompletion = (_step = _iterator.next()).done); _iteratorNormalCompletion = true) {
    var element = _step.value;
    console.log(element);
  }
} catch (err) {
  _didIteratorError = true;
  _iteratorError = err;
} finally {
  try {
    if (!_iteratorNormalCompletion && _iterator.return) {
      _iterator.return();
    }
  } finally {
    if (_didIteratorError) {
      throw _iteratorError;
    }
  }
}
```

The script also fails in IE11 because it uses a Symbol, which partly defeats the point of transpilation! A standard ES5-compatible for loop will solve this issue, but you may encounter problems with other code structures.
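For the simple array case above, a plain indexed loop needs no Symbol or iterator protocol, so it runs in IE11 without helper code. The visited array is only there so the result can be checked.

```javascript
// ES5-compatible alternative to the for...of loop: index the array directly.
var myArray = ['a', 'b', 'c'];
var visited = []; // collects elements so the output can be verified

for (var i = 0; i < myArray.length; i++) {
  var element = myArray[i];
  visited.push(element);
  console.log(element);
}
```

This only works for arrays and array-like objects. Iterating a Map, Set, or generator genuinely depends on the iterator protocol, which is where the "other code structures" problems tend to appear.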

9. Caching CDN assets can be dangerous!

This tip is obscure and will mostly affect Progressive Web Apps (PWAs) which attempt to cache assets from other domains -- such as an image loaded from a CDN.

A PWA service worker script can intercept network requests. On the first request, it can fetch an asset and store it using the Cache API. Subsequent requests are returned from this cache so the PWA can operate offline.

Requests to other domains (untrusted origins) that are made without CORS return an opaque response with a status code of zero rather than 200. The browser cannot verify or examine this asset, so it makes several presumptions...

Chrome presumes every opaque file is 7MB!

A PWA may be allocated around 100MB of cache storage, so a dozen tiny untrusted-origin assets can fill this space and cause offline functionality to fail.

There are several ways to address this problem. The first is to ignore any asset which returns a status code other than 200. It will not be cached so the PWA cannot fully operate offline.
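The first option might look like the following cache-first service worker sketch. The cache name and the cache-first strategy are assumptions made for illustration; the part that matters is the hypothetical shouldCache check, which refuses opaque and non-200 responses. The registration is guarded so the file can also be loaded outside a service worker.

```javascript
const CACHE_NAME = 'pwa-cache-v1'; // hypothetical cache name

// Only store responses the browser can inspect: opaque responses report
// status 0, so they fail the status check as well as the type check.
function shouldCache(response) {
  return response.type !== 'opaque' && response.status === 200;
}

// Cache-first: serve from the Cache API, otherwise fetch and maybe store.
async function cacheFirst(request) {
  const cached = await caches.match(request);
  if (cached) return cached;

  const response = await fetch(request);
  if (shouldCache(response)) {
    const cache = await caches.open(CACHE_NAME);
    await cache.put(request, response.clone()); // clone: a body can only be read once
  }
  return response;
}

// Register the handler only when running inside a service worker
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('fetch', (event) => {
    if (event.request.method !== 'GET') return; // the Cache API only stores GET requests
    event.respondWith(cacheFirst(event.request));
  });
}
```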

Alternatively, you could move all your assets to the PWA's primary domain. This can have performance benefits but may be impractical if you are using services such as Cloudinary to host and manipulate images.

Adding a [crossorigin="anonymous"](https://developer.mozilla.org/docs/Web/HTML/Attributes/crossorigin) attribute to every HTML element that loads an untrusted-origin asset makes the browser send a Cross-Origin Resource Sharing (CORS) request with 'same-origin' credentials. Provided the CDN responds with an Access-Control-Allow-Origin header, the response is no longer opaque. This fixes the problem but could take some effort on larger sites.

Finally, you could consider using serverless edge functions such as Cloudflare Workers. When your site makes a request to yourdomain.com/image/one.png, a function rewrites the URL and returns data from yourcdn.com/one.png. The image appears to be on your primary domain and the service worker functions without issues.
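A minimal sketch of that rewrite, assuming the hostnames from the example and a hypothetical /image/ path prefix. Cloudflare Workers' module syntax exports an object with an async fetch(request) method; here the object is assigned to a constant instead, so the file also runs outside a Worker. Caching headers and error handling are omitted.

```javascript
// Hostname and prefix taken from the example above (hypothetical)
const CDN_ORIGIN = 'https://yourcdn.com';
const IMAGE_PREFIX = '/image/';

// Map yourdomain.com/image/one.png to yourcdn.com/one.png; null for other paths.
function rewriteToCdn(requestUrl) {
  const url = new URL(requestUrl);
  if (!url.pathname.startsWith(IMAGE_PREFIX)) return null;

  const cdnUrl = new URL(CDN_ORIGIN);
  // Setting pathname (rather than resolving a relative URL) keeps the CDN host fixed
  cdnUrl.pathname = '/' + url.pathname.slice(IMAGE_PREFIX.length);
  cdnUrl.search = url.search;
  return cdnUrl.toString();
}

// Shape of a Workers module handler; in a real Worker this would be the default export.
const worker = {
  async fetch(request) {
    const target = rewriteToCdn(request.url);
    if (target === null) {
      return fetch(request); // not an image path: pass through unchanged
    }
    // The browser only ever sees yourdomain.com, so the response is same-origin
    return fetch(target);
  },
};
```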

10. Is analytics harming site performance?

Site owners should measure page views, journeys, and feature usage to assess customer priorities. Systems such as Google Analytics offer professional traffic reporting tools at zero cost (if you're prepared to share usage data).

Unfortunately, these scripts often have a negative impact on performance. Google Analytics downloads and processes 73KB of compressed JavaScript. There are also questions regarding its accuracy now that it's blocked by Safari and Firefox.

Try removing your analytics scripts and reassess page performance -- the speed boost can be surprising. Other considerations:

- Use a single analytics system. More than one adversely affects performance and gives mismatching reports. Traffic analysis is based on a stack of assumptions and it can be impossible to compare results.

- Try alternative analytics options to determine which offers the best balance of performance, cost, and reporting. Server log analysers have no impact on speed, but the information they output can be more limited.

- If you want to continue with Google, consider minimalanalytics.com, which offers basic page tracking in a 1.5KB script.

- Ignore the provider's request to have their script called first in the page: try loading it last or after a short setTimeout delay.
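A sketch of the "load it last" approach: inject the analytics script only after the page's load event, plus a short setTimeout delay. The helper name, script path, and 3-second delay are hypothetical; the doc and win parameters default to the browser globals and exist only so the function can be exercised outside a browser.

```javascript
// Inject a third-party script once the page has loaded, after an extra delay,
// so it does not compete with critical resources.
function loadScriptAfterDelay(src, delayMs, doc = globalThis.document, win = globalThis) {
  const inject = () => {
    const script = doc.createElement('script');
    script.src = src;
    script.async = true;
    doc.head.appendChild(script);
  };
  const schedule = () => win.setTimeout(inject, delayMs);

  if (doc.readyState === 'complete') {
    schedule(); // the load event has already fired
  } else {
    win.addEventListener('load', schedule, { once: true });
  }
}

// Hypothetical script path and delay; only runs in a browser
if (typeof document !== 'undefined') {
  loadScriptAfterDelay('/js/analytics.js', 3000);
}
```

The trade-off is that visitors who leave within the first few seconds may not be recorded, so compare the reports before and after the change.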

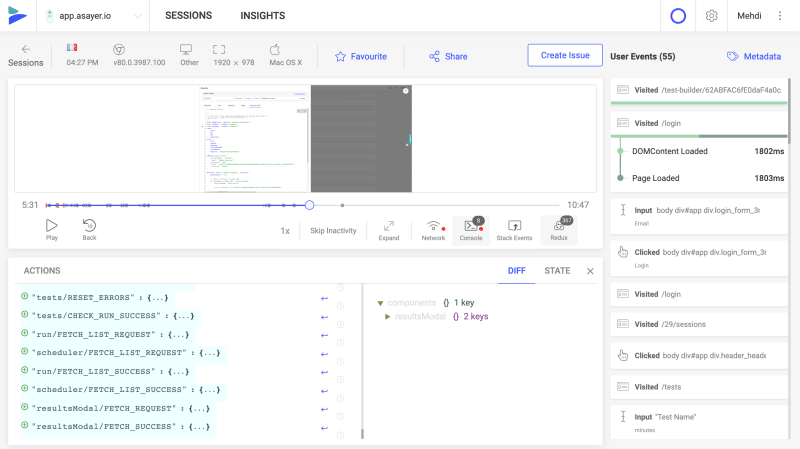

Frontend Monitoring

Debugging and measuring the performance of a web application in production may be challenging and time-consuming. [Asayer](http://asayer.io) is a frontend monitoring tool that replays everything your users do and shows how your app behaves for every issue. It’s like having your browser’s inspector open while looking over your user’s shoulder.

Asayer lets you reproduce issues, aggregate JS errors and monitor your app’s performance. Asayer offers plugins for capturing the state of your Redux or VueX store and for inspecting Fetch requests and GraphQL queries.

Happy debugging, for modern frontend teams - Start monitoring your web app for free.

Performance is paramount

In our rush to deliver features we rarely give equal priority to performance until it's too late. I hope you find some of these unusual tips useful, but you cannot go far wrong by transmitting fewer bytes and using fewer processes!
