Bash is a great scripting language for automating tasks on Linux and Unix, and it is one of my favourite languages for automation.

A few days ago I was searching for how to crawl a website page.

After finding a lot of material on the internet, I learnt about the 'Wget' tool on Linux.

Wget is a useful tool for downloading and crawling a website page.

So after this I started writing a bash script for website page crawling.

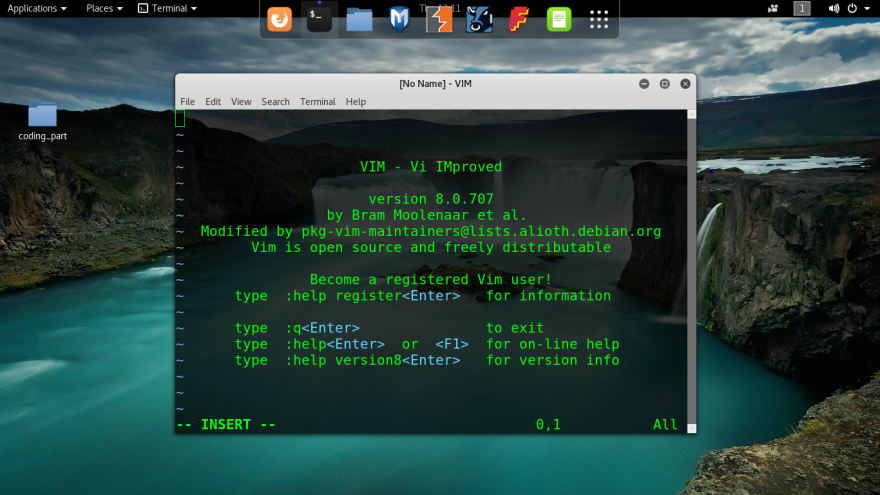

-> First, I opened my favourite editor, vim

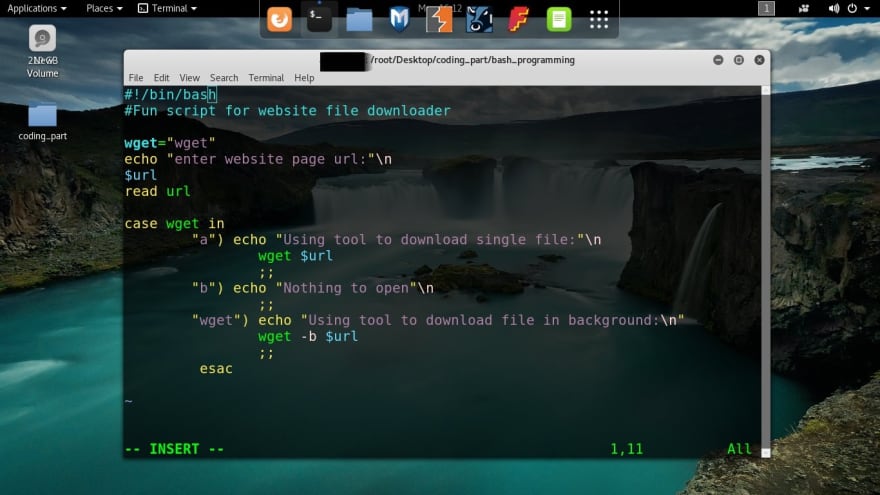

-> Then I started writing the script with a case statement

-> As you can see, I used case statements to automate the wget tool in a simple bash script, and it is working code.
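The script itself appears only as a screenshot, so here is a rough sketch of what a case-based wrapper around wget could look like. The function name `crawl_page` and the action names `page`, `full` and `headers` are made up for illustration and are not taken from the original script; only the wget options are real.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: crawl_page and its action names are illustrative,
# not the script from the screenshot.

crawl_page() {
    local action="$1" url="$2"

    if [ -z "$url" ]; then
        echo "usage: crawl_page {page|full|headers} URL" >&2
        return 2
    fi

    case "$action" in
        page)
            # Download just the one HTML document.
            wget --quiet "$url"
            ;;
        full)
            # Also fetch the images, CSS and scripts the page needs,
            # and rewrite links so the saved copy works offline.
            wget --quiet --page-requisites --convert-links "$url"
            ;;
        headers)
            # Do not save anything; check the URL and print the server headers.
            wget --spider --server-response "$url"
            ;;
        *)
            echo "unknown action: $action" >&2
            return 2
            ;;
    esac
}
```

Something like `crawl_page full "https://example.com/"` would then save the page together with its assets. Note that the `case` tests the variable `"$action"`, and the URL is always passed to wget in double quotes.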

For more details about bash and automation, visit my GitHub account.

Top comments (15)

Crawling means to grab a page and extract page data into a structured format.

Wget does the first part, downloading the page. For the second phase, you can use Scrapy or BeautifulSoup.

That's what I was wondering: wget will only download the page. Crawling means going through the content of the page.

I appreciate your response. I know it's not pure crawling, but if I only want to crawl one page then I will use wget.

Or, to download or crawl a whole site, I will surely use some Python tools.

I appreciate your response.

You are right: to crawl I can use some Python, as you explained.

Or also use some other tools.

You can also stay in bash using hxselect and other HTML and XML command-line tools.
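As a very small illustration of the "extract" half staying in bash, the sketch below pulls link targets out of HTML using only grep and sed. The function name `extract_links` is hypothetical, and a regular expression is not a real HTML parser: it only catches double-quoted `href` attributes, so a dedicated tool such as hxselect will be more robust on real pages.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads HTML on stdin and prints the value of every
# double-quoted href attribute, one per line.
extract_links() {
    grep -o 'href="[^"]*"' | sed -e 's/^href="//' -e 's/"$//'
}
```

It could be combined with wget as `wget -qO- "$url" | extract_links`, where `-O-` sends the downloaded page to standard output instead of a file.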

`wget "$url"`. What does `$url` do? `case wget in` or `case $wget in` or `case "$wget" in`? There are significant differences. `case wget in` is always the string "wget".

-> Wget $url will help me to download the page, and the whole script is working very well.
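The difference raised here is easy to check in a terminal. The sketch below uses three hypothetical helper functions (`match_literal`, `match_variable`, `count_args`) and an example URL, none of which come from the original script: the first two show what each form of `case` actually tests, and the last shows what double quotes change when a value contains a space.

```bash
#!/usr/bin/env bash
# Hypothetical helpers, for illustration only.

match_literal() {
    local wget="$1"
    # The word after `case` is the literal text "wget"; the variable is ignored.
    case wget in
        wget) echo "matched wget" ;;
        *)    echo "no match" ;;
    esac
}

match_variable() {
    local wget="$1"
    # Here the contents of the variable are tested.
    case "$wget" in
        wget) echo "matched wget" ;;
        *)    echo "no match" ;;
    esac
}

# Prints how many arguments it received.
count_args() { echo "$#"; }

url="http://example.com/a page.html"

match_literal curl    # prints "matched wget"
match_variable curl   # prints "no match"
count_args $url       # prints 2: the unquoted value is split at the space
count_args "$url"     # prints 1: the quoted value stays one argument
```

So `case wget in` always takes the `wget` branch no matter what the user typed, and an unquoted `$url` can reach wget as several separate arguments.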

-> Double quotes will make it a string

scrapy.org/

Good suggestion

Working harder

Thanks

Nice idea, but what is the benefit of using such crawl process?

It's just a start, sir. I enjoyed it a lot when I was doing this.

An unusable script with too many mistakes. Pure wget is better.

What kind of mistakes, sir? If you can explain, please do. I appreciate your comment.

And it's just a fun script.