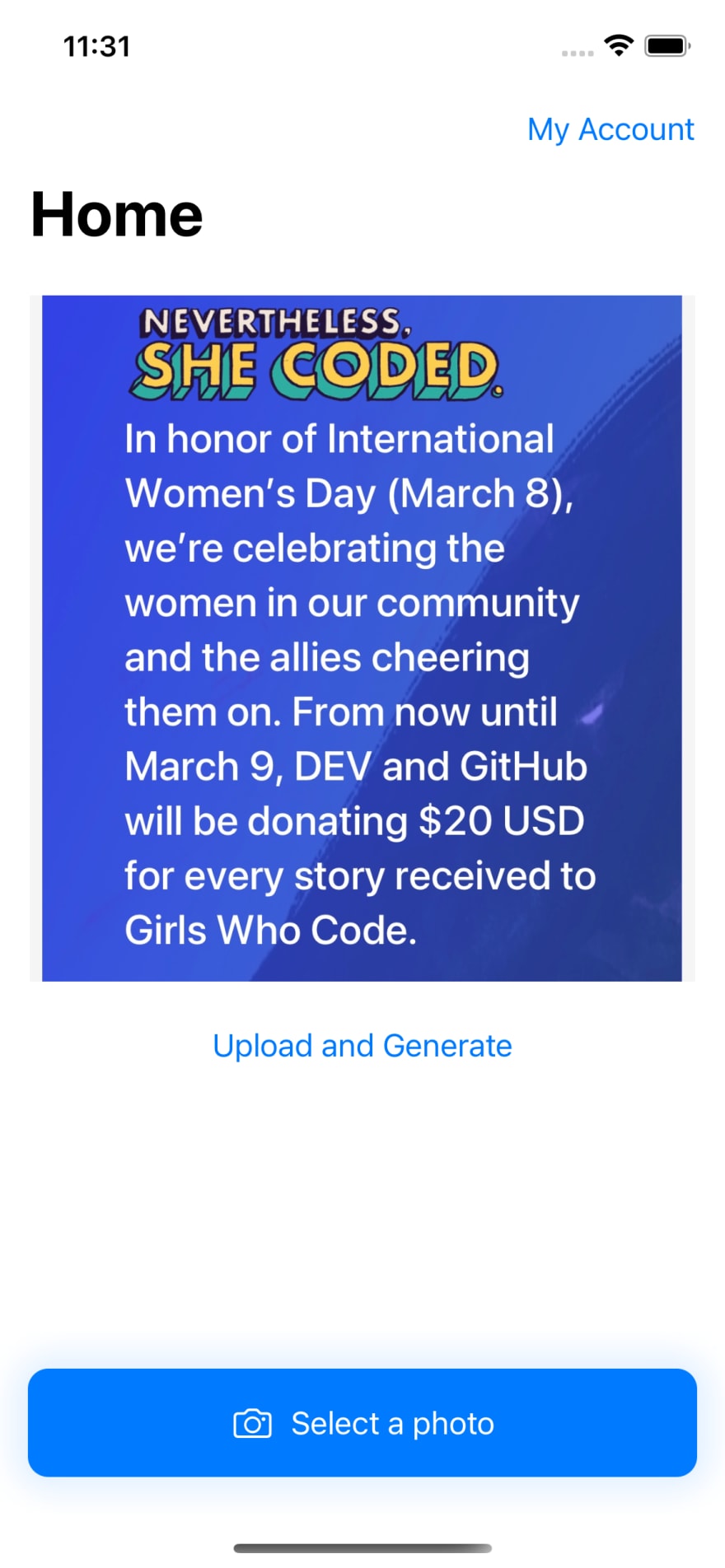

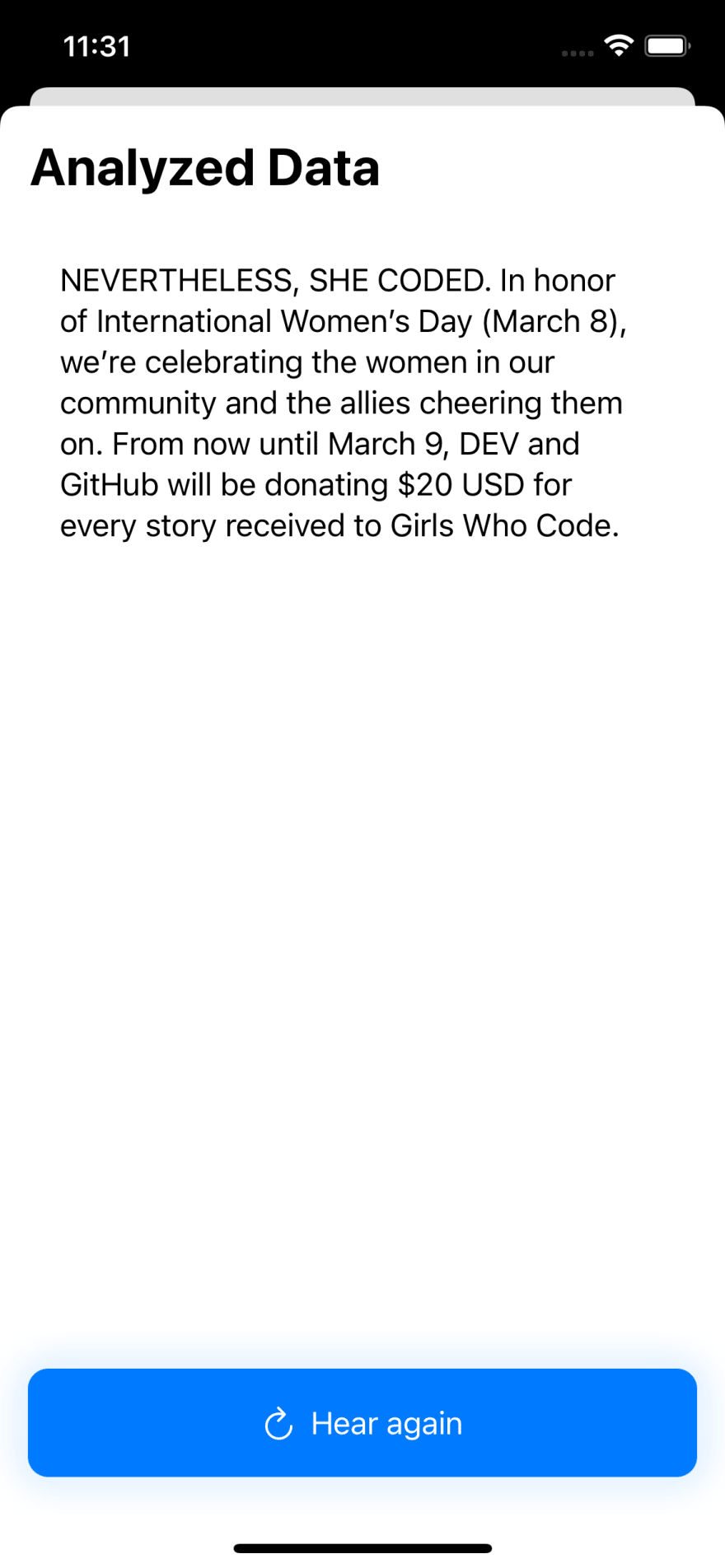

Overview of My Submission

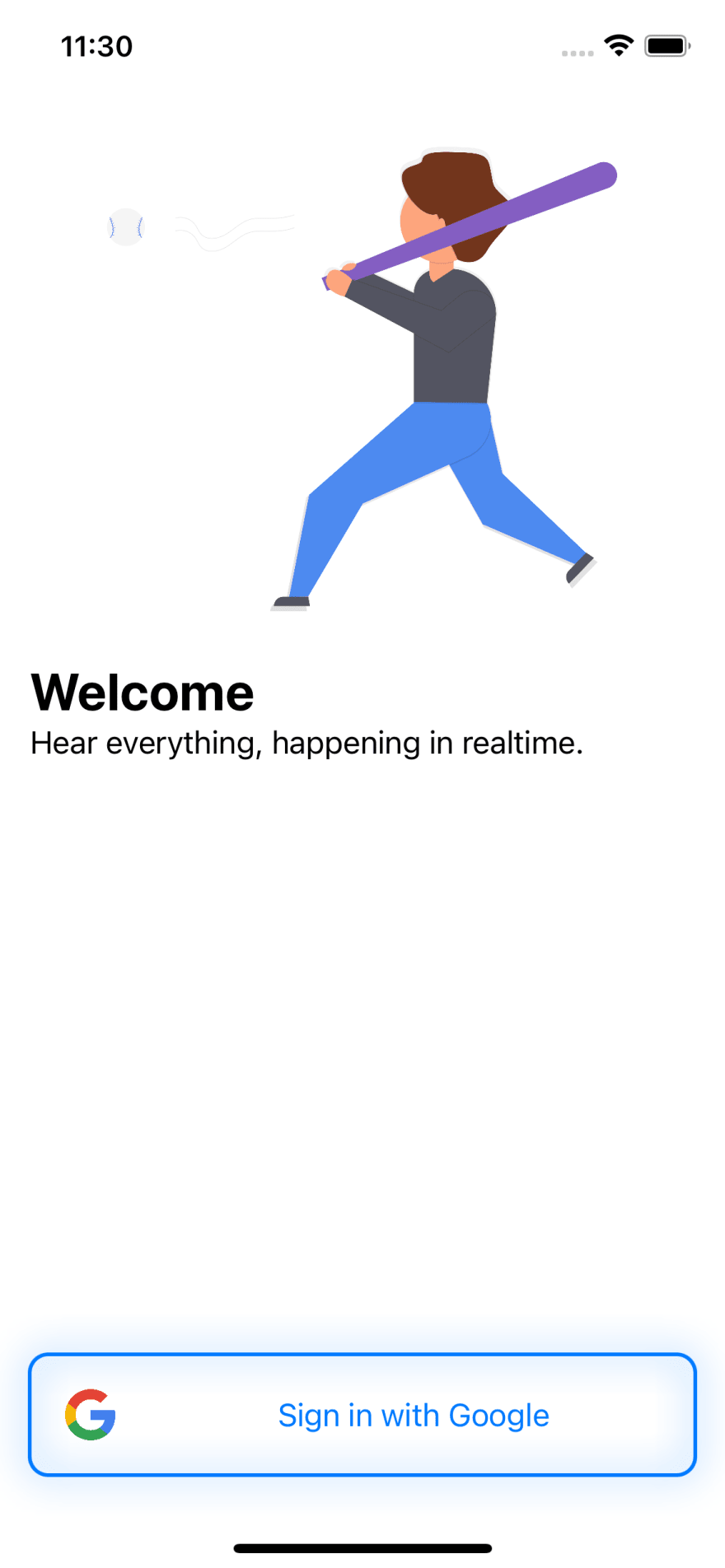

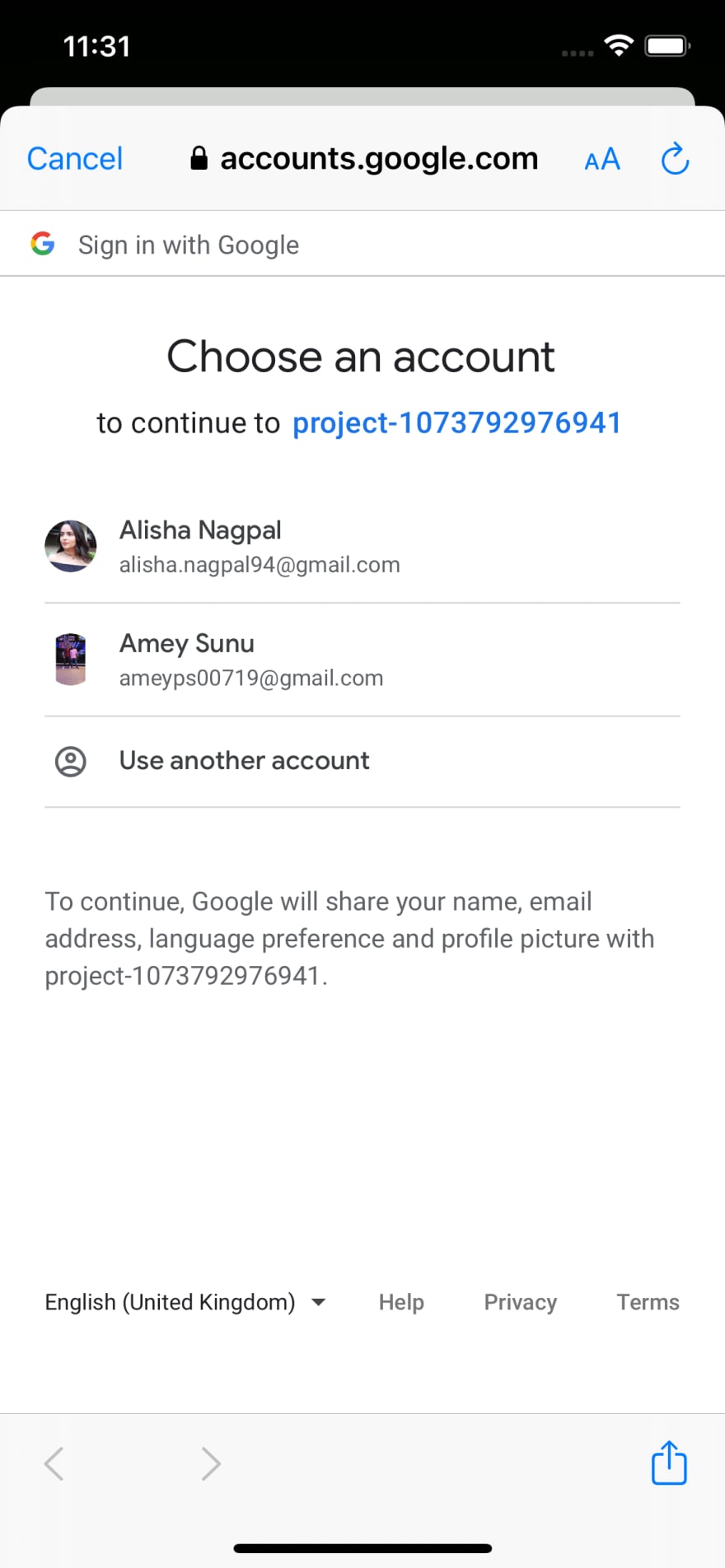

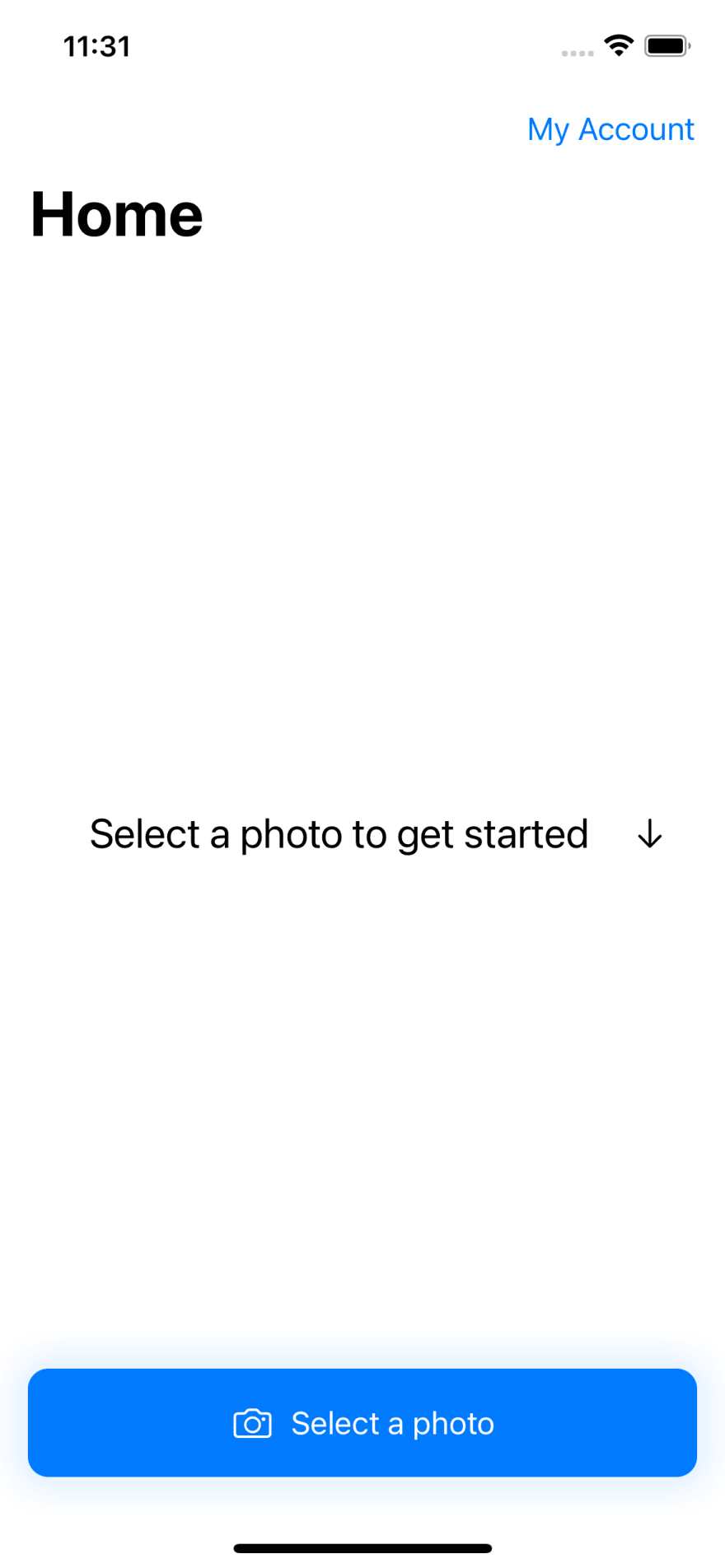

The application converts images to text and then to speech. Its main target audience is visually impaired users, who can use the Braille technology built into iOS to convert images to speech in real time.
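The image-to-text-to-speech flow described above can be modelled as two stages behind small protocols, so each stage can be swapped or tested on its own. The following is a minimal Swift sketch, not HearMe's actual code: `TextExtractor`, `SpeechSynthesizer`, `ImageToSpeechPipeline` and the stub types are hypothetical names, and the stubs stand in for real OCR and text-to-speech services so the example runs offline.

```swift
import Foundation

// Hypothetical protocol: anything that can pull text out of raw image bytes
// (for example, a wrapper around an OCR service).
protocol TextExtractor {
    func extractText(from imageData: Data) throws -> String
}

// Hypothetical protocol: anything that can turn text into audio bytes
// (for example, a wrapper around a text-to-speech service).
protocol SpeechSynthesizer {
    func synthesize(_ text: String) throws -> Data
}

enum PipelineError: Error, Equatable {
    case noTextFound
}

// Composes the two stages: image -> text -> speech.
struct ImageToSpeechPipeline {
    let extractor: any TextExtractor
    let synthesizer: any SpeechSynthesizer

    func run(imageData: Data) throws -> (text: String, audio: Data) {
        let text = try extractor.extractText(from: imageData)
            .trimmingCharacters(in: .whitespacesAndNewlines)
        // Nothing to read aloud, so fail early instead of synthesizing silence.
        guard !text.isEmpty else { throw PipelineError.noTextFound }
        let audio = try synthesizer.synthesize(text)
        return (text, audio)
    }
}

// Hypothetical stub: always "recognises" the same text.
struct FixedTextExtractor: TextExtractor {
    let text: String
    func extractText(from imageData: Data) throws -> String { text }
}

// Hypothetical stub: returns the UTF-8 bytes of the text in place of real audio.
struct UTF8EchoSynthesizer: SpeechSynthesizer {
    func synthesize(_ text: String) throws -> Data { Data(text.utf8) }
}

let pipeline = ImageToSpeechPipeline(
    extractor: FixedTextExtractor(text: "  Exit on the left \n"),
    synthesizer: UTF8EchoSynthesizer()
)
let result = try pipeline.run(imageData: Data())
print(result.text) // prints "Exit on the left"
```

Keeping the stages behind protocols means the stubs could later be replaced by Azure-backed implementations without changing the pipeline itself; the early `noTextFound` error is one possible way to avoid "reading out" an image that contains no text.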

Submission Category:

AI Aces

Link to Code on GitHub

HearMe

Speechify text from images using Microsoft Azure Cognitive Services.

Getting Started

The application uses Microsoft Azure Cognitive Services to extract text from images and then synthesise that text to speech. The Microsoft Azure Translation Service has also been added to the code base.
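For the two Azure calls mentioned above, the request building and response parsing might look roughly like the sketch below. It assumes the Computer Vision v3.2 `/ocr` endpoint and the Speech service's text-to-speech REST endpoint; the resource endpoint, region, keys and the voice name (`en-US-JennyNeural`) are placeholders, the sample JSON is a trimmed, made-up response, and none of this is taken from the HearMe repository. Actually sending the requests (for example with `URLSession`) is left out so the example runs offline.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

// Minimal Decodable model of a Computer Vision v3.2 `/ocr` response:
// regions -> lines -> words. Other fields (boundingBox, language, ...) are ignored.
struct OCRResponse: Decodable {
    struct Word: Decodable { let text: String }
    struct Line: Decodable { let words: [Word] }
    struct Region: Decodable { let lines: [Line] }
    let regions: [Region]

    // Joins words with spaces and lines with newlines, in the order returned.
    var plainText: String {
        regions
            .flatMap { $0.lines }
            .map { line in line.words.map { $0.text }.joined(separator: " ") }
            .joined(separator: "\n")
    }
}

// Builds the OCR request; `endpoint` and `key` are placeholders for your own resource.
func makeOCRRequest(imageData: Data, endpoint: String, key: String) -> URLRequest {
    var components = URLComponents(string: endpoint + "/vision/v3.2/ocr")!
    components.queryItems = [
        URLQueryItem(name: "language", value: "unk"), // "unk" = auto-detect
        URLQueryItem(name: "detectOrientation", value: "true"),
    ]
    var request = URLRequest(url: components.url!)
    request.httpMethod = "POST"
    request.setValue(key, forHTTPHeaderField: "Ocp-Apim-Subscription-Key")
    request.setValue("application/octet-stream", forHTTPHeaderField: "Content-Type")
    request.httpBody = imageData // raw image bytes, e.g. a JPEG from the camera
    return request
}

// Escapes &, < and > so recognised text can sit inside an SSML element.
func xmlEscaped(_ s: String) -> String {
    s.replacingOccurrences(of: "&", with: "&amp;")
        .replacingOccurrences(of: "<", with: "&lt;")
        .replacingOccurrences(of: ">", with: "&gt;")
}

// Builds a text-to-speech request; `region`, `key` and `voice` are placeholders.
func makeSpeechRequest(text: String, region: String, key: String,
                       voice: String = "en-US-JennyNeural") -> URLRequest {
    let url = URL(string: "https://\(region).tts.speech.microsoft.com/cognitiveservices/v1")!
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.setValue(key, forHTTPHeaderField: "Ocp-Apim-Subscription-Key")
    request.setValue("application/ssml+xml", forHTTPHeaderField: "Content-Type")
    request.setValue("audio-16khz-128kbitrate-mono-mp3", forHTTPHeaderField: "X-Microsoft-OutputFormat")
    let ssml = "<speak version='1.0' xml:lang='en-US'><voice name='\(voice)'>\(xmlEscaped(text))</voice></speak>"
    request.httpBody = Data(ssml.utf8)
    return request
}

// Trimmed, made-up sample in the shape the /ocr endpoint returns.
let sampleJSON = """
{"language":"en","orientation":"Up","regions":[{"boundingBox":"0,0,90,40","lines":[
 {"boundingBox":"0,0,90,20","words":[{"boundingBox":"0,0,40,20","text":"Mind"},{"boundingBox":"45,0,45,20","text":"the"}]},
 {"boundingBox":"0,20,40,20","words":[{"boundingBox":"0,20,40,20","text":"gap"}]}]}]}
"""
let ocr = try JSONDecoder().decode(OCRResponse.self, from: Data(sampleJSON.utf8))
print(ocr.plainText) // prints "Mind the", then "gap" on the next line
let speechRequest = makeSpeechRequest(text: ocr.plainText, region: "westeurope", key: "<speech-key>")
```

The synchronous `/ocr` endpoint keeps the sketch short; Azure's newer Read API (`/vision/v3.2/read/analyze`) is asynchronous and returns an operation URL to poll, so it would need a different response model.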

Screenshots

Permissive License

Additional Resources / Info

The application is built with SwiftUI, Azure Cognitive Services (Computer Vision), Firebase and Google Authentication. The app converts images to text, and then converts that text to audio. A translation feature, backed by Azure Cognitive Services, was also added to the code but was not integrated into the app due to time constraints.
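Since the translation step exists in code but is not wired into the app, here is one hedged sketch of how a call to the Azure Translator v3 REST API could be built and its response parsed. The endpoint, the `api-version=3.0` parameter and the header names follow Microsoft's public Translator documentation; the function and type names, the key and region values, and the sample response are illustrative assumptions rather than the project's code.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

// One element of the request body; Translator v3 expects [{"Text": "..."}].
struct TranslateInput: Encodable {
    let text: String
    enum CodingKeys: String, CodingKey { case text = "Text" }
}

// One element of the response array: the translations for one input text.
struct TranslateResult: Decodable {
    struct Translation: Decodable {
        let text: String
        let to: String
    }
    let translations: [Translation]
}

// Builds a Translator v3 request. `key` and `region` are placeholders for your own resource.
func makeTranslateRequest(texts: [String], to target: String,
                          key: String, region: String) throws -> URLRequest {
    var components = URLComponents(string: "https://api.cognitive.microsofttranslator.com/translate")!
    components.queryItems = [
        URLQueryItem(name: "api-version", value: "3.0"),
        URLQueryItem(name: "to", value: target), // e.g. "fr"
    ]
    var request = URLRequest(url: components.url!)
    request.httpMethod = "POST"
    request.setValue(key, forHTTPHeaderField: "Ocp-Apim-Subscription-Key")
    // Needed when the key belongs to a regional or multi-service resource.
    request.setValue(region, forHTTPHeaderField: "Ocp-Apim-Subscription-Region")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    request.httpBody = try JSONEncoder().encode(texts.map(TranslateInput.init(text:)))
    return request
}

// Collects the first translation of each result; results with no translations are skipped.
func firstTranslations(from data: Data) throws -> [String] {
    try JSONDecoder().decode([TranslateResult].self, from: data)
        .compactMap { $0.translations.first?.text }
}

// Made-up sample in the shape the /translate endpoint returns.
let sampleResponse = """
[{"detectedLanguage":{"language":"en","score":1.0},"translations":[{"text":"Bonjour","to":"fr"}]}]
"""
let translated = try firstTranslations(from: Data(sampleResponse.utf8))
print(translated) // prints ["Bonjour"]
```

Passing an array of texts mirrors the API, which accepts several texts per call; in an image-to-speech flow the recognised text would be translated first and the result handed to the speech step.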
