Hey guys,

Welcome to WebScraping Series, Hoping that you have already gone through part-1 in this series.

Article No Longer Available

In my previous tutorial/post, some of them asked what is the necessity to scrape the data?

The answer is very simple, To source data for ML, AI, or data science projects, you’ll often rely on databases, APIs, or ready-made CSV datasets. But what if you can’t find a dataset you want to use and analyze? That’s where a web scraper comes in.

And some websites restricts the bots accessing it's website by checking the user agent of the http message, in our previous post we didn't use any headers so that the respective server will know that we are accessing it using a python script.

So in this tutorial, we are faking ourselves as a Firefox user to prevent restrictions.

headers[

"User-Agent"

] = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36"

In our previous tutorial, we scraped only single page but in this tutorial, we gonna scrape multiple pages and grab all 1000 movie lists and will control the loop’s rate to avoid flooding the server with requests.

Let's dive in...

Analyzing the URL

https://www.imdb.com/search/title/?groups=top_1000

Now, let’s click on the next page and see what page 2’s URL looks like:

https://www.imdb.com/search/title/?groups=top_1000&start=51

And then page 3’s URL:

https://www.imdb.com/search/title/?groups=top_1000&start=101

We noticed that &start=51 is added into the URL when we go to page 2, and the number 51 turns to the number 101 on page 3.

This makes sense because there are 50 movies on each page. Page1 is 1-50, page 2 is 51-100, page 3 is 101-150, and so on.

Why is this important? This information will helps us to tell our loop how to go to the next page to scrape

So we create a function to iterate over 1 to 1000 movie lists, np.arrange(start,stop,step) is a function in the NumPy Python library, and it takes start, stop, and step arguments. step is the number that defines the spacing between each. So Start at 1, stop at 1001, and step by 50.

- pages is a function we created np.arrange(1,1001,50)

- page is variable which iterates over pages

pages = np.arange(1, 1001, 50)

for page in pages:

url = "https://www.imdb.com/search/title/?groups=top_1000&start=" + str(page)

results = requests.get(url, headers=headers)

Controlling the Crawl Rate

Controlling the crawl rate is beneficial for the scraper and for the website we’re scraping. If we avoid hammering the server with a lot of requests all at once, then we’re much less likely to get our IP address banned — and we also avoid disrupting the activity of the website we scrape by allowing the server to respond to other user requests as well.

We’ll be adding this code to our new for loop:

Breaking crawl rate down

The sleep() function will control the loop’s rate by pausing the execution of the loop for a specified amount of time

The randint(2,10) function will vary the amount of waiting time between requests for a number between 2-10 seconds. You can change these parameters to any that you like.

from time import sleep

from random import randint

Final Scraping Code:

import requests

from bs4 import BeautifulSoup

import pandas as pd

import csv

from time import sleep

from random import randint

import numpy as np

headers = dict()

headers[

"User-Agent"

] = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36"

titles = []

years = []

time = []

imdb_ratings = []

genre = []

votes = []

pages = np.arange(1, 1001, 50)

for page in pages:

url = "https://www.imdb.com/search/title/?groups=top_1000&start=" + str(page)

results = requests.get(url, headers=headers)

soup = BeautifulSoup(results.text, "html.parser")

movie_div = soup.find_all("div", class_="lister-item mode-advanced")

sleep(randint(2, 10))

print(page)

for movieSection in movie_div:

name = movieSection.h3.a.text

titles.append(name)

year = movieSection.h3.find("span", class_="lister-item-year").text

years.append(year)

ratings = movieSection.strong.text

imdb_ratings.append(ratings)

category = movieSection.find("span", class_="genre").text.strip()

genre.append(category)

runTime = movieSection.find("span", class_="runtime").text

time.append(runTime)

nv = movieSection.find_all("span", attrs={"name": "nv"})

vote = nv[0].text

votes.append(vote)

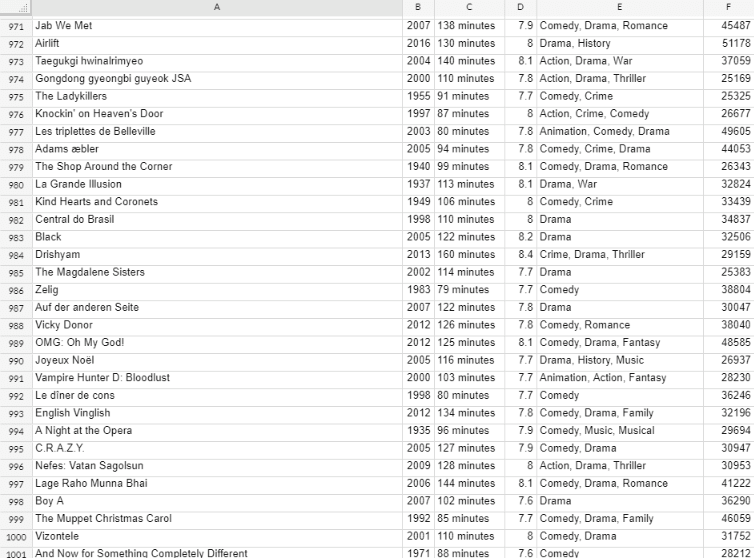

movies = pd.DataFrame(

{

"Movie": titles,

"Year": years,

"RunTime": time,

"imdb": imdb_ratings,

"Genre": genre,

"votes": votes,

}

)

# cleaning

movies["Year"] = movies["Year"].str.extract("(\\d+)").astype(int)

movies["RunTime"] = movies["RunTime"].str.replace("min", "minutes")

movies["votes"] = movies["votes"].str.replace(",", "").astype(int)

print(movies)

movies.to_csv(r"C:\Users\Aleti Sunil\Downloads\movies.csv", index=False, header=True)

As always, we can save the scraper data in to csv file

movies.to_csv(r"C:\Users\Aleti Sunil\Downloads\movies.csv", index=False, header=True)

Conclusion

There you have it! We’ve successfully extracted data of the top 1,000 best movies of all time on IMDb, which included multiple pages, and saved it into a CSV file.

let me know if any queries.

Hope it's useful

A ❤️ would be Awesome 😊

Top comments (0)