Kubernetes Cluster Over-Provisioning: Proactive App Scaling

Scalability is a desirable attribute of a system or process, and poor scalability can result in poor system performance. Kubernetes is flexible and dynamic, but when it came to scaling application pods we ran into a few challenges that ended up hurting application performance. In this blog, we walk through the steps to overprovision a Kubernetes cluster for fast scaling and failover.

Why cluster overprovisioning is needed

Let's say we have a Kubernetes cluster running X worker nodes. All nodes in the cluster are running at full capacity, meaning there is no room left on any node for incoming pods. So far everything works: applications running on the cluster respond in time (we scale applications based on memory/CPU usage). Then the load/traffic on an application suddenly increases. As the load rises, the application's memory/CPU consumption rises too, and once the metric used for scaling crosses its threshold, Kubernetes scales the application horizontally by adding new pods to the cluster. But all the newly created pods go into a Pending state, because every worker node is already running at full capacity.
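
A minimal Python sketch of this failure mode follows. `hpa_desired_replicas` applies the core formula from the Horizontal Pod Autoscaler documentation, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), but ignores the controller's tolerance, stabilization window and min/max bounds. `place_pods` is a hypothetical first-fit helper standing in for the scheduler, and all numbers are illustrative.

```python
import math
from typing import List, Tuple


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Core HPA formula: ceil(currentReplicas * currentMetric / targetMetric).

    Simplified: ignores the HPA's tolerance, stabilization window,
    min/max replica bounds and not-yet-ready pods.
    """
    return math.ceil(current_replicas * current_metric / target_metric)


def place_pods(new_pods: int, pod_cpu: float, free_cpu_per_node: List[float]) -> Tuple[int, int]:
    """Hypothetical first-fit placement of identical pods by free CPU.

    Returns (scheduled, pending). The real kube-scheduler filters and
    scores nodes on many more dimensions than CPU.
    """
    free = list(free_cpu_per_node)
    scheduled = 0
    for _ in range(new_pods):
        for i, cpu in enumerate(free):
            if cpu >= pod_cpu:
                free[i] -= pod_cpu
                scheduled += 1
                break
    return scheduled, new_pods - scheduled


# 4 replicas averaging 90% CPU against a 60% target -> ceil(4 * 90 / 60) = 6.
desired = hpa_desired_replicas(4, 90, 60)
# Every node is already full, so neither of the 2 extra pods can be placed.
scheduled, pending = place_pods(desired - 4, 0.5, [0.0, 0.0, 0.0])
print(f"desired={desired} scheduled={scheduled} pending={pending}")
```

With every node full, the two extra replicas the HPA asks for have nowhere to go and stay Pending.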

The Horizontal Pod Autoscaler creates additional pods when they are needed. But what happens when all the nodes in the cluster are at full capacity and can’t run any more pods?

The Cluster Autoscaler takes care of automatically provisioning additional nodes when it notices a pod that can’t be scheduled on existing nodes because those nodes lack resources. In other words, a new node is provisioned only after a new pod has been created and the Scheduler can’t place it on any existing node. The Cluster Autoscaler looks for such pods and signals the cloud provider (e.g. AWS) to spin up an additional node, and this is where the problem lies: the cloud provider may take some time (a minute or more) to provision the node before it appears in the Kubernetes cluster. That delay depends almost entirely on the cloud provider (we are using AWS) and its node provisioning speed, so it may take a while before the new pods can be scheduled.

Any pod in a Pending state has to wait until the new node is added to the cluster. This is far from ideal for applications that need to scale quickly as requests/load increase, since any delay in scaling can degrade application performance.

To overcome this problem, we considered the following solutions:

- Fix the number of extra nodes in the cluster

- Make use of pod-priority-preemption

Fix the number of extra nodes in the cluster

One idea was to add a fixed number of extra nodes to the cluster that would always be available, waiting, and ready to accept new pods. This way, our apps could always scale up without waiting for AWS to create a new EC2 instance and join it to the Kubernetes cluster, and their new pods would never get stuck in a Pending state. That was exactly what we wanted.

But this was only a temporary fix, and it was neither cost-effective nor efficient. The overprovisioning was also not dynamic: the number of extra nodes stayed fixed as the cluster grew or shrank, so we soon started facing the same issue again.
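
The arithmetic behind "not dynamic" is easy to sketch. Assuming a hypothetical fixed reserve of 2 spare nodes, the share of the cluster held in reserve shrinks as the workload grows, whereas keeping a proportional reserve (here a hypothetical 25% of workload nodes, i.e. 20% of the whole cluster) requires the spare count to grow with it.

```python
import math


def fixed_headroom_share(workload_nodes: int, spare_nodes: int = 2) -> float:
    """Fraction of all nodes held in reserve when the spare count never changes."""
    return spare_nodes / (workload_nodes + spare_nodes)


def proportional_spares(workload_nodes: int, ratio: float = 0.25) -> int:
    """Spare nodes needed to keep the reserve at `ratio` of the workload nodes
    (25% of workload nodes works out to 20% of the whole cluster)."""
    return math.ceil(workload_nodes * ratio)


for nodes in (8, 24, 48):
    print(f"{nodes} workload nodes: fixed spares = {fixed_headroom_share(nodes):.0%} "
          f"of the cluster, proportional spares needed = {proportional_spares(nodes)}")
```

With 8 workload nodes the two fixed spares are 20% of the cluster; with 48 they are only 4%, while a proportional reserve would need 12 spares.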

Make use of pod-priority-preemption

The second solution was hard to implement because it was difficult to decide the relative priority of different applications: we had multiple applications that were all sensitive to scaling delays, and a delay in scaling any one of them would degrade its performance.

While looking for a better approach, we came across a tool that works with the Cluster Autoscaler to overprovision the cluster.

Introduction to Horizontal Cluster Proportional Autoscaler

The Horizontal Cluster Proportional Autoscaler container watches the number of schedulable nodes and cores in the cluster and resizes the number of replicas of a target resource accordingly.

If we want dynamic overprovisioning of a cluster (e.g. reserving 20% of the cluster's resources), we need to use the Horizontal Cluster Proportional Autoscaler.

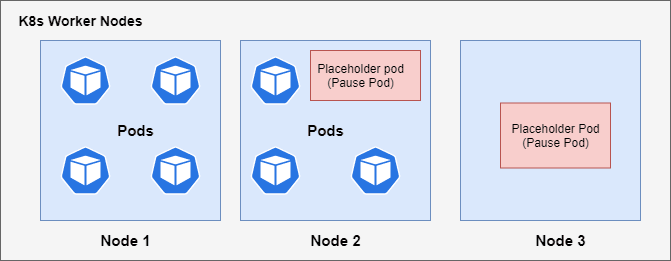

It provides a simple control loop that watches the cluster size and scales a target controller. To overprovision the cluster, we create pods that occupy space in the cluster and do nothing; we call them placeholder pods (we use a controller of pause pods as the target controller). Because these pods hold extra space in the cluster, spare resources are already provisioned and ready to be used at any time.

But how do we use this extra space? This is where Kubernetes pod priority and preemption helps. The placeholder pods (pause pods) are assigned a lower priority than the actual workload. When a higher-priority pod cannot be scheduled because resources are scarce, the scheduler preempts (evicts) lower-priority pods to make room for it. The evicted placeholder pods then go into a Pending state, and the Cluster Autoscaler reacts by adding new nodes to the cluster.
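
The following toy, single-node Python model of the preemption decision only illustrates why the placeholder pods are the ones evicted. Pod names and sizes are hypothetical. The real kube-scheduler considers every node, tries to minimize the number and priority of victims, and respects PodDisruptionBudgets on a best-effort basis; none of that is modeled here. Pods without a PriorityClass get priority 0 unless a global default class exists, which is why the application pods below use 0.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pod:
    name: str
    priority: int
    cpu: float


def select_victims(incoming: Pod, running: List[Pod], node_cpu: float) -> Optional[List[Pod]]:
    """Toy single-node preemption: evict lowest-priority pods until `incoming` fits.

    Returns [] if the pod fits without preemption, the list of victims if
    preemption frees enough CPU, or None if the pod cannot fit even after
    evicting every strictly lower-priority pod.
    """
    free = node_cpu - sum(p.cpu for p in running)
    if free >= incoming.cpu:
        return []
    victims: List[Pod] = []
    # Only pods with strictly lower priority are candidates; try the lowest first.
    candidates = sorted((p for p in running if p.priority < incoming.priority),
                        key=lambda p: p.priority)
    for pod in candidates:
        victims.append(pod)
        free += pod.cpu
        if free >= incoming.cpu:
            return victims
    return None


node = [
    Pod("app-a", priority=0, cpu=4.0),           # regular workload (default priority 0)
    Pod("placeholder-1", priority=-1, cpu=2.0),  # pause pod
    Pod("placeholder-2", priority=-1, cpu=2.0),  # pause pod
]
new_app_pod = Pod("app-a-replica", priority=0, cpu=2.0)
print([p.name for p in select_victims(new_app_pod, node, node_cpu=8.0)])
```

Only one placeholder has to go for the new application replica to fit; the evicted placeholder is what later drives the Cluster Autoscaler to add a node.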

The Cluster Proportional Autoscaler increases the number of placeholder pod replicas when the cluster grows and decreases it when the cluster shrinks.
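
A Python transcription of the linear control pattern documented in the cluster-proportional-autoscaler README is sketched below; it leaves out options such as preventSinglePointFailure and includeUnschedulableNodes, and the real controller is written in Go. The sizing is a hypothetical example of the "20% of resources" idea: each placeholder requests 2 cores, so one replica per 10 schedulable cores reserves roughly 20% of CPU. The 8-core nodes, the max of 1000 and the large nodesPerReplica (which effectively disables the node term) are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class LinearParams:
    cores_per_replica: float
    nodes_per_replica: float
    min_replicas: int = 1
    max_replicas: int = 1000  # hypothetical upper bound for this example


def linear_replicas(schedulable_cores: int, schedulable_nodes: int, p: LinearParams) -> int:
    """Linear control pattern as written in the cluster-proportional-autoscaler README:

    replicas = max(ceil(cores / coresPerReplica), ceil(nodes / nodesPerReplica))
    replicas = min(replicas, max)
    replicas = max(replicas, min)
    """
    replicas = max(math.ceil(schedulable_cores / p.cores_per_replica),
                   math.ceil(schedulable_nodes / p.nodes_per_replica))
    replicas = min(replicas, p.max_replicas)
    return max(replicas, p.min_replicas)


# Each placeholder requests 2 cores; one replica per 10 cores reserves about 20% of CPU.
params = LinearParams(cores_per_replica=10, nodes_per_replica=1000)
for nodes in (15, 30, 10):  # cluster grows, then shrinks; assume 8 cores per node
    replicas = linear_replicas(nodes * 8, nodes, params)
    print(f"{nodes} nodes -> {replicas} placeholders reserving {replicas * 2} of {nodes * 8} cores")
```

As the cluster grows from 15 to 30 nodes the placeholder count doubles, and it falls again when the cluster shrinks, so the reserve stays near 20% throughout.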

Scaling with over-provisioning: what happens under the hood

- Load hits the cluster.

- Kubernetes starts scaling the application horizontally by adding new pods.

- kube-scheduler tries to place the newly created application pods but finds insufficient resources.

- The placeholder pods (pause pods in our case) are preempted because they have a lower priority, freeing room for the application pods.

- The application pods are placed, and scaling happens immediately without waiting for a new node.

- The evicted placeholder pods go into a Pending state because there are no resources left to schedule them.

- The Cluster Autoscaler notices the pending pods and scales the cluster by adding new nodes.

- kube-scheduler waits for the new instance to be provisioned, boot, join the cluster, and become ready.

- kube-scheduler notices the new node with free capacity and schedules the placeholder pods on it.
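
The sequence above can be condensed into a deliberately simplified Python model. It assumes every pod, application or placeholder, occupies one identically sized slot, and the function and field names are hypothetical; it only shows who ends up waiting for the new node, not real scheduler or Cluster Autoscaler behavior.

```python
import math
from dataclasses import dataclass


@dataclass
class BurstOutcome:
    placed_immediately: int      # app pods scheduled without waiting
    waiting_for_new_node: int    # app pods stuck in Pending until a node joins
    placeholders_evicted: int    # pause pods preempted to make room
    nodes_requested: int         # nodes the Cluster Autoscaler would add


def simulate_burst(new_app_pods: int, free_slots: int, placeholder_slots: int,
                   slots_per_node: int) -> BurstOutcome:
    """Toy model assuming every pod uses exactly one equally sized slot.

    Free capacity is used first, then placeholder slots are taken over
    through preemption.
    """
    placed = min(new_app_pods, free_slots + placeholder_slots)
    waiting = new_app_pods - placed
    evicted = max(0, placed - free_slots)
    # Pending pods (unplaced app pods plus evicted placeholders) drive scale-up.
    pending = waiting + evicted
    return BurstOutcome(placed, waiting, evicted, math.ceil(pending / slots_per_node))


# A full cluster receives a burst of 3 app pods; each node holds 4 slots.
print(simulate_burst(3, free_slots=0, placeholder_slots=0, slots_per_node=4))
print(simulate_burst(3, free_slots=0, placeholder_slots=4, slots_per_node=4))
```

In both runs the Cluster Autoscaler ends up adding one node, but with four placeholder slots the pods that wait for it are pause pods rather than application pods.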

Implementation

Note: Change the pod priority cutoff in the Cluster Autoscaler to -10 so that the pause pods are taken into account during both scale-up and scale-down, by setting the flag **--expendable-pods-priority-cut-off=-10**.
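
The effect of the cutoff can be sketched in a few lines of Python. This encodes our reading of the flag's documented meaning (pods with priority below the cutoff are expendable: they can be removed during scale-down without consideration and do not trigger scale-up); the function names are hypothetical.

```python
CUTOFF = -10  # cutoff value recommended in the note


def is_expendable(pod_priority: int, cutoff: int = CUTOFF) -> bool:
    """Pods with priority strictly below the cutoff are expendable:
    removable during scale-down without consideration, and they never
    trigger a scale-up."""
    return pod_priority < cutoff


def can_trigger_scale_up(pod_priority: int, cutoff: int = CUTOFF) -> bool:
    return not is_expendable(pod_priority, cutoff)


for priority in (0, -1, -10, -11):
    print(priority, "triggers scale-up" if can_trigger_scale_up(priority) else "ignored (expendable)")
```

With a cutoff of 0, for example, `is_expendable(-1, cutoff=0)` is true, so placeholder pods with priority -1 would never cause a scale-up; lowering the cutoff to -10 keeps them above it.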

This Helm chart deploys the placeholder pods (which occupy extra space in the cluster) and the Cluster Proportional Autoscaler deployment (for dynamic overprovisioning).

The Helm chart creates the following Kubernetes resources:

- Placeholder pod (pause pod) Deployment

- Cluster Proportional Autoscaler Deployment

- ServiceAccount

- ClusterRole

- ClusterRoleBinding

- ConfigMap

- PriorityClass for the pause pods, with a priority value of -1
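
As a rough sketch of two of these resources, the Python below builds a PriorityClass and a placeholder Deployment as dictionaries and prints them as a JSON `List` that `kubectl apply -f` can consume. The object names, labels and the `registry.k8s.io/pause:3.9` image tag are assumptions rather than the chart's actual values; the requests mirror the 2 CPU / 2 Gi configuration used in this section, and the ServiceAccount, RBAC objects, ConfigMap and autoscaler Deployment are omitted.

```python
import json

# Hypothetical names; the chart's real names and values may differ.
PRIORITY_CLASS_NAME = "overprovisioning"

priority_class = {
    "apiVersion": "scheduling.k8s.io/v1",
    "kind": "PriorityClass",
    "metadata": {"name": PRIORITY_CLASS_NAME},
    "value": -1,  # lower than the default priority (0) of regular pods
    "globalDefault": False,
    "description": "Placeholder pods that reserve spare capacity.",
}

placeholder_deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "placeholder-pods"},
    "spec": {
        "replicas": 1,  # the Cluster Proportional Autoscaler resizes this
        "selector": {"matchLabels": {"app": "placeholder-pods"}},
        "template": {
            "metadata": {"labels": {"app": "placeholder-pods"}},
            "spec": {
                "priorityClassName": PRIORITY_CLASS_NAME,
                # Release the space immediately when preempted.
                "terminationGracePeriodSeconds": 0,
                "containers": [{
                    "name": "pause",
                    "image": "registry.k8s.io/pause:3.9",
                    "resources": {"requests": {"cpu": "2", "memory": "2Gi"}},
                }],
            },
        },
    },
}

# Wrap both objects in a v1 List so they can be applied from one JSON file.
manifests = {"apiVersion": "v1", "kind": "List", "items": [priority_class, placeholder_deployment]}
print(json.dumps(manifests, indent=2))
```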

The Helm chart uses the following configuration to overprovision the cluster:

One placeholder pod replica per node, each requesting 2 CPU cores and 2 Gi of memory. For example, if the Kubernetes cluster is running 15 nodes, there will be 15 placeholder pod replicas in the cluster.
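
The per-node configuration maps onto the autoscaler's linear mode with `nodesPerReplica` set to 1. The sketch below builds a hypothetical ConfigMap (its name is an assumption) holding that JSON under the `linear` key, following our reading of the cluster-proportional-autoscaler README, which allows either coresPerReplica or nodesPerReplica to be omitted, and then computes how much the placeholders reserve on a 15-node cluster.

```python
import json
import math

# Hypothetical ConfigMap for the Cluster Proportional Autoscaler; linear mode
# reads its parameters as a JSON string under the "linear" key.
linear_params = {"nodesPerReplica": 1, "min": 1}
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "overprovisioning-autoscaler"},
    "data": {"linear": json.dumps(linear_params)},
}

PLACEHOLDER_CPU_CORES = 2
PLACEHOLDER_MEMORY_GI = 2


def placeholder_replicas(nodes: int) -> int:
    # coresPerReplica is omitted, so only the node term of the linear formula applies.
    return max(math.ceil(nodes / linear_params["nodesPerReplica"]), linear_params["min"])


nodes = 15
replicas = placeholder_replicas(nodes)
print(config_map["data"]["linear"])
print(f"{replicas} placeholders reserve {replicas * PLACEHOLDER_CPU_CORES} cores "
      f"and {replicas * PLACEHOLDER_MEMORY_GI} Gi of memory")
```

On 15 nodes this reserves 30 cores and 30 Gi of memory in total, i.e. 2 cores and 2 Gi of headroom per node.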

This link describes the different control patterns and ConfigMap formats.

Conclusion

Cluster overprovisioning is needed when the cluster runs applications that are sensitive to scaling delays and cannot wait for new nodes to be created and join the cluster before they scale.

With cluster overprovisioning in place, scaling an application horizontally takes only a few seconds, so the application keeps performing well as usage, traffic, or load increases.

References

- https://github.com/kubernetes-sigs/cluster-proportional-autoscaler

- https://github.com/kubernetes-sigs/cluster-proportional-autoscaler#horizontal-cluster-proportional-autoscaler-container

- https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/FAQ.md#how-can-i-configure-overprovisioning-with-cluster-autoscaler

- https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/

- https://www.ianlewis.org/en/almighty-pause-container
