After finding a good enough solution for FastAPI and cooperative multi-threading issues, a part of me was still not happy with the results. There was a significant drop in the number of concurrent requests:

1643620388 309

1643620389 5

1643620390 3

1643620391 6

1643620392 5

1643620393 322

It's not this bad in practice or for any realistic response size. The numbers above were obtained by running the whole process under strace which made it slower but also revealed that threads were waiting on a mutex (futex(...) functions) and even timing out while trying to acquire it. The other frequent activity was memory allocation. So I started to follow the JSON encoder of the response model as that was taking the most time.

It starts by encoding the root Pydantic model as a dictionary and then calling the json.dumps function and waiting for resulting JSON string. The json library has a mixed Python and C implementation. If possible, it will try to use the C implementation for the speed unless you request a nice indented output.

So the entire JSON is encoded with a single C function call. Having written several Python modules in C, I know that the C code is called with the notorious Global Interpreter Lock held and no Python code can execute in the meanwhile. It is the responsibility of the C extension to release that lock if possible. And we are back to the topic of cooperative multi-threading but this time it's not coroutines and threads but Python threads and native threads.

CPython is like a single CPU computer that gives an impression of parallel processing by switching between all threads and letting each execute for a while. At any point in time, only a single thread of Python code is being executed. The interpreter switches between Python threads when a thread has executed a certain amount of instructions. However once Python calls a C extension the interpreter can no longer count instructions and interrupt the native thread. The C extension has to either finish or explicitly release the GIL before long-running system calls and acquire GIL once the system call returns. All other Python threads will starve while the C extension holds the GIL.

But if JSON is encoded by the C extension, why did I still observe some concurrent requests instead of zero? After going through the code once again I realized that the json library only knows how to encode the built-in Python types. The response I am trying to serialize is a tree of Pydantic models. How does this not fail with "Account is not JSON serializable" error?

For unrecognized types json.dumps uses the optional parameter named default. See the documentation for more details about it. The Pydantic library provides an implementation for this function that converts the current model to a dictionary. json.dumps then encodes the resulting dictionary and may call pydantic_encoder again for Pydantic models it finds.

So Python is jumping back and forth between the json module C extension and the Pydantic encoder written in Python. And while Python instructions are executed, a threshold is reached for switching to a different Python thread running concurrent requests. And this is where I got stuck thinking of a way how to improve the concurrency.

A) I could convert all Pydantic models to dictionaries ahead of time so the C code completes faster without jumping back and forth between C extension and Python code. But that means no other code will be executed concurrently and I will be back to where I started.

B) I could use a pure Python implementation of json.dumps for better multithreading. But that will be slower and lead to longer response times. I think it puts more pressure on the memory because it collects all parts of the JSON string in a list and builds a single string from that even when generators are used.

I did not like any of those choices so this is where I ended my quest for better concurrency and did not make any changes to my code.

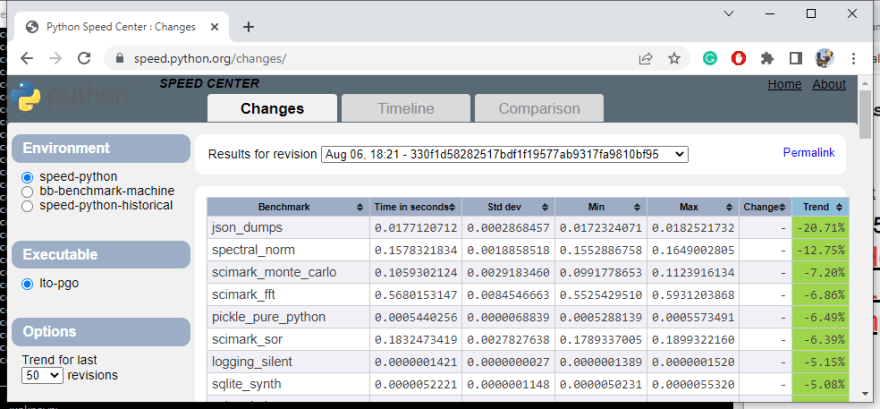

Spoiler alert! Following the boy scout principle and leaving the world better than I found it I did a couple of patches to CPython and improved the performance of json.dumps.

Oldest comments (0)