Terraform state migration

We have learned a lot about Terraform backends and their features, but one important topic is often skipped by new learners: state migration.

There are many reasons you may need to migrate your state: moving to the cloud, switching cloud providers, centralizing state management, and more.

Luckily, Terraform provides built-in tooling that makes this process manageable.

Code for this article can be found HERE

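We will start with a local backend and then migrate to an AWS S3 backend.

First, let's create a configuration in terraform.tf. It declares no backend block, so Terraform uses the default local backend and stores state in a terraform.tfstate file in the working directory: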

```hcl
# terraform.tf

# Local backend
terraform {
  # Provider-specific settings
  required_providers {
    aws = {
      version = ">= 2.7.0"
      source  = "hashicorp/aws"
    }
  }

  # Terraform version
  required_version = ">= 0.14.9"
}
```

Initialize the backend, then validate, plan, and apply:

```
$ terraform init
# output
Terraform has been successfully initialized!

$ terraform validate
# output
Success! The configuration is valid.

$ terraform plan
# output
Plan: 25 to add, 0 to change, 0 to destroy.

$ terraform apply
# output
Apply complete! Resources: 25 added, 0 changed, 0 destroyed.
```

Verify that the resources have been deployed with the terraform state list command:

```
$ terraform state list
# output
data.aws_ami.ubuntu
data.aws_availability_zones.available
data.aws_region.current
aws_eip.nat_gateway_eip
aws_internet_gateway.internet_gateway
aws_nat_gateway.nat_gateway
aws_route_table.private_route_table
aws_route_table.public_route_table
aws_route_table_association.private
aws_route_table_association.public
aws_security_group.private_sg
aws_security_group.public_sg
aws_security_group_rule.private_in
aws_security_group_rule.private_out
aws_security_group_rule.public_http_in
aws_security_group_rule.public_https_in
aws_security_group_rule.public_out
aws_security_group_rule.public_ssh_in
aws_subnet.private_subnet
aws_subnet.public_subnet
aws_vpc.vpc
module._server_from_local_module.data.aws_ami.ubuntu
module._server_from_local_module.aws_instance.web_server
module.another_server_from_a_module.data.aws_ami.ubuntu
module.another_server_from_a_module.aws_instance.web_server
module.autoscaling_from_github.data.aws_default_tags.current
module.autoscaling_from_github.data.aws_partition.current
module.autoscaling_from_github.aws_autoscaling_group.this[0]
module.autoscaling_from_github.aws_launch_template.this[0]
module.autoscaling_from_registry.data.aws_default_tags.current
module.autoscaling_from_registry.data.aws_partition.current
module.autoscaling_from_registry.aws_autoscaling_group.this[0]
module.autoscaling_from_registry.aws_launch_template.this[0]
module.my_server_module.data.aws_ami.ubuntu
module.my_server_module.aws_instance.web_server
```

Now that we know our local backend works, let's migrate our state to an S3 bucket on AWS.

Details on how to create an S3 bucket and a DynamoDB table (used for state locking) can be found in this article. You will need the following values:

- S3 bucket name
- DynamoDB table name
- Your region
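You can also create these resources with Terraform. Below is a minimal sketch of a separate bootstrap configuration that you would apply once before the migration. It assumes AWS provider v4 or later, because the standalone aws_s3_bucket_versioning resource only exists from v4 onward. It reuses the bucket name, table name, and region from the backend block used in this article. The file path bootstrap/main.tf and the resource labels are hypothetical. S3 bucket names are globally unique, so you will need to choose your own.

```hcl
# bootstrap/main.tf (hypothetical path): a separate configuration that
# creates the S3 bucket and DynamoDB table used by the S3 backend.
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # aws_s3_bucket_versioning requires v4+
    }
  }
}

provider "aws" {
  region = "ca-central-1"
}

# Bucket that will hold the state file (name must be globally unique).
resource "aws_s3_bucket" "terraform_state" {
  bucket = "terraform-state-migration"
}

# Versioning keeps previous copies of the state file, which helps
# if a migration or an apply goes wrong.
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# State can contain sensitive values, so block all public access.
resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket                  = aws_s3_bucket.terraform_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# The S3 backend expects a lock table whose partition (hash) key
# is a string attribute named LockID.
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "tf-state-mig"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

This bootstrap configuration keeps its own state locally. It only needs to exist long enough to create the bucket and the table.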

Backend settings live in the terraform.tf file, so we need to modify it to tell Terraform about the new backend. I commented out the previous local configuration for demonstration purposes:

```hcl
# terraform.tf

# Local backend
# terraform {
#   # Provider-specific settings
#   required_providers {
#     aws = {
#       version = ">= 2.7.0"
#       source  = "hashicorp/aws"
#     }
#   }
#
#   # Terraform version
#   required_version = ">= 0.14.9"
# }

terraform {
  backend "s3" {
    bucket         = "terraform-state-migration"
    key            = "dev/aws_infrastructure"
    region         = "ca-central-1"
    dynamodb_table = "tf-state-mig"
    encrypt        = true
  }

  # Provider-specific settings
  required_providers {
    aws = {
      version = ">= 2.7.0"
      source  = "hashicorp/aws"
    }
  }

  # Terraform version
  required_version = ">= 0.14.9"
}
```
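Hard-coding the backend values works well for a single environment. If you want to reuse the same code for several environments, Terraform also supports partial backend configuration. You leave the backend block empty and pass the settings at init time with the -backend-config option. Below is a sketch that assumes a file named backend.hcl (the name is arbitrary) holding the same values as above.

```hcl
# terraform.tf: only the backend type is declared here;
# required_providers and required_version stay as shown above.
terraform {
  backend "s3" {}
}

# backend.hcl: a separate file of plain key = value pairs, passed with
#   terraform init -backend-config=backend.hcl -migrate-state
bucket         = "terraform-state-migration"
key            = "dev/aws_infrastructure"
region         = "ca-central-1"
dynamodb_table = "tf-state-mig"
encrypt        = true
```

The two parts live in different files. They are shown together here only for comparison.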

All that is left is to migrate our state to S3 by reinitializing with the -migrate-state flag:

```
$ terraform init -migrate-state

# output
Initializing modules...

Initializing the backend...
Do you want to copy existing state to the new backend?
Pre-existing state was found while migrating the previous "local" backend to the
newly configured "s3" backend. No existing state was found in the newly
configured "s3" backend. Do you want to copy this state to the new "s3"
backend? Enter "yes" to copy and "no" to start with an empty state.

Enter a value: yes

Successfully configured the backend "s3"! Terraform will automatically
use this backend unless the backend configuration changes.

Initializing provider plugins...
- Reusing previous version of hashicorp/aws from the dependency lock file
- Using previously-installed hashicorp/aws v4.18.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Let's check that the resources are still tracked in the state:

```
$ terraform state list
# output
data.aws_ami.ubuntu
data.aws_availability_zones.available
data.aws_region.current
aws_eip.nat_gateway_eip
aws_internet_gateway.internet_gateway
aws_nat_gateway.nat_gateway
aws_route_table.private_route_table
aws_route_table.public_route_table
aws_route_table_association.private
aws_route_table_association.public
aws_security_group.private_sg
aws_security_group.public_sg
aws_security_group_rule.private_in
aws_security_group_rule.private_out
aws_security_group_rule.public_http_in
aws_security_group_rule.public_https_in
aws_security_group_rule.public_out
aws_security_group_rule.public_ssh_in
aws_subnet.private_subnet
aws_subnet.public_subnet
aws_vpc.vpc
module._server_from_local_module.data.aws_ami.ubuntu
module._server_from_local_module.aws_instance.web_server
module.another_server_from_a_module.data.aws_ami.ubuntu
module.another_server_from_a_module.aws_instance.web_server
module.autoscaling_from_github.data.aws_default_tags.current
module.autoscaling_from_github.data.aws_partition.current
module.autoscaling_from_github.aws_autoscaling_group.this[0]
module.autoscaling_from_github.aws_launch_template.this[0]
module.autoscaling_from_registry.data.aws_default_tags.current
module.autoscaling_from_registry.data.aws_partition.current
module.autoscaling_from_registry.aws_autoscaling_group.this[0]
module.autoscaling_from_registry.aws_launch_template.this[0]
module.my_server_module.data.aws_ami.ubuntu
module.my_server_module.aws_instance.web_server
```

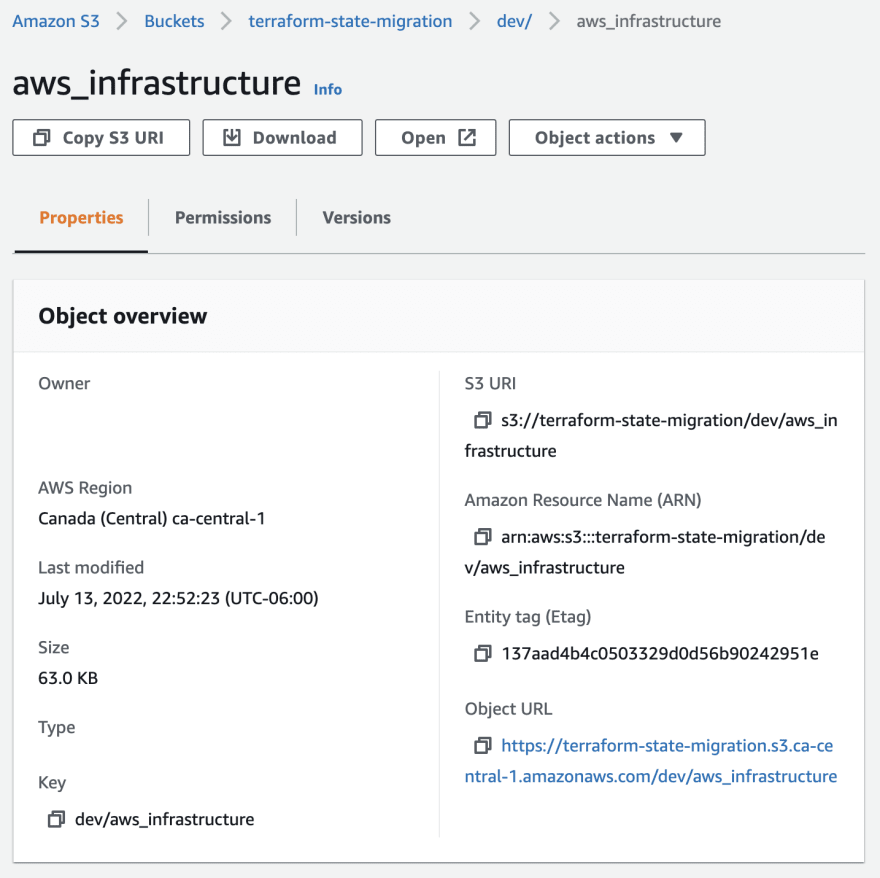

We can also check our bucket to see if the state file was created there:

We already covered the benefits of storing state remotely. It is often the best choice when many people work on the same infrastructure and need to collaborate.

Congratulations! You have just migrated your state from your local machine to a remote backend. This is a valuable skill: you will often start a new project locally and move it to a remote backend later, and now you know how.

Now let's migrate the state from the remote backend back to our local machine.

This is straightforward. We delete the backend block from the terraform settings block, and Terraform falls back to the default local backend.

This is the modified terraform.tf file:

```hcl
# terraform.tf

# Local backend
terraform {
  # Provider-specific settings
  required_providers {
    aws = {
      version = ">= 2.7.0"
      source  = "hashicorp/aws"
    }
  }

  # Terraform version
  required_version = ">= 0.14.9"
}
```
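Deleting the block is not the only option. You could instead replace the s3 block with an explicit local backend. Running terraform init -migrate-state should then offer to copy the state from S3 into the file named by path. In the sketch below, terraform.tfstate is the default path, so the result matches having no backend block at all. It simply states the choice explicitly.

```hcl
# terraform.tf (alternative): declare the local backend explicitly
terraform {
  backend "local" {
    # Default location, relative to the working directory
    path = "terraform.tfstate"
  }

  # Provider-specific settings
  required_providers {
    aws = {
      version = ">= 2.7.0"
      source  = "hashicorp/aws"
    }
  }

  # Terraform version
  required_version = ">= 0.14.9"
}
```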

We will go through the same steps as before: validate, initialize with the -migrate-state flag, and check the state list:

```
$ terraform validate
# output
Success! The configuration is valid.

$ terraform init -migrate-state
# output
Initializing modules...

Initializing the backend...
Terraform has detected you're unconfiguring your previously set "s3" backend.
Do you want to copy existing state to the new backend?
Pre-existing state was found while migrating the previous "s3" backend to the
newly configured "local" backend. No existing state was found in the newly
configured "local" backend. Do you want to copy this state to the new "local"
backend? Enter "yes" to copy and "no" to start with an empty state.

Enter a value: yes

Successfully unset the backend "s3". Terraform will now operate locally.

Initializing provider plugins...
- Reusing previous version of hashicorp/aws from the dependency lock file
- Using previously-installed hashicorp/aws v4.18.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Let's check the state list:

```
$ terraform state list
# output with list of resources...
```

Finally, let's destroy our resources:

```
$ terraform destroy
# output
Destroy complete! Resources: 25 destroyed.
```

That's it. We migrated our state from the local machine to an S3 bucket and back. Give yourself a round of applause!

Terraform makes it easy to migrate your state file wherever you need it, and I absolutely love this feature.

Terraform state refresh

When you run terraform refresh, Terraform reads the current settings of all existing resources tracked in the state and updates the state to match them. The command modifies only the state. It changes neither your configuration nor the remote resources. A refresh also runs automatically as part of the plan and apply commands. Note that since Terraform v0.15.4, terraform refresh is deprecated in favor of terraform apply -refresh-only, which shows the detected changes and asks for confirmation before updating the state.

This command helps with what is known as configuration drift, where the settings of real resources differ from what Terraform has recorded. Sometimes a resource gets modified outside Terraform, for example manually in the AWS console. With terraform refresh, we can record these changes in the state so that Terraform knows about them.

To demonstrate how this command works, we will intentionally introduce configuration drift by manually modifying a tag on an EC2 instance. Then we will run the refresh command to bring the Terraform state back in sync with the real configuration of our resources.

If your infrastructure is not deployed yet, run the apply command:

```
$ terraform apply
```

Let's see the state list and select an instance to modify:

```
$ terraform state list
# output
data.aws_ami.ubuntu
data.aws_availability_zones.available
data.aws_region.current
aws_eip.nat_gateway_eip
aws_internet_gateway.internet_gateway
aws_nat_gateway.nat_gateway
aws_route_table.private_route_table
aws_route_table.public_route_table
aws_route_table_association.private
aws_route_table_association.public
aws_security_group.private_sg
aws_security_group.public_sg
aws_security_group_rule.private_in
aws_security_group_rule.private_out
aws_security_group_rule.public_http_in
aws_security_group_rule.public_https_in
aws_security_group_rule.public_out
aws_security_group_rule.public_ssh_in
aws_subnet.private_subnet
aws_subnet.public_subnet
aws_vpc.vpc
module._server_from_local_module.data.aws_ami.ubuntu
module._server_from_local_module.aws_instance.web_server
module.another_server_from_a_module.data.aws_ami.ubuntu
module.another_server_from_a_module.aws_instance.web_server
module.autoscaling_from_github.data.aws_default_tags.current
module.autoscaling_from_github.data.aws_partition.current
module.autoscaling_from_github.aws_autoscaling_group.this[0]
module.autoscaling_from_github.aws_launch_template.this[0]
module.autoscaling_from_registry.data.aws_default_tags.current
module.autoscaling_from_registry.data.aws_partition.current
module.autoscaling_from_registry.aws_autoscaling_group.this[0]
module.autoscaling_from_registry.aws_launch_template.this[0]
module.my_server_module.data.aws_ami.ubuntu
module.my_server_module.aws_instance.web_server
```

I chose module.my_server_module.aws_instance.web_server. Let's see what data the Terraform state holds for this instance:

```
$ terraform state show module.my_server_module.aws_instance.web_server

# output
# module.my_server_module.aws_instance.web_server:
resource "aws_instance" "web_server" {
    ami = "ami-04a579d2f00bb4001"
    arn = "arn:aws:ec2:ca-central-1:490750479946:instance/i-0d09c23db0e4dbb51"
    associate_public_ip_address = true
    availability_zone = "ca-central-1a"
    cpu_core_count = 1
    cpu_threads_per_core = 1
    disable_api_termination = false
    ebs_optimized = false
    get_password_data = false
    hibernation = false
    id = "i-0d09c23db0e4dbb51"
    instance_initiated_shutdown_behavior = "stop"
    instance_state = "running"
    instance_type = "t2.micro"
    ipv6_address_count = 0
    ipv6_addresses = []
    monitoring = false
    primary_network_interface_id = "eni-01fef74a6aff22cac"
    private_dns = "ip-10-0-0-224.ca-central-1.compute.internal"
    private_ip = "10.0.0.224"
    public_ip = "3.97.16.128"
    secondary_private_ips = []
    security_groups = []
    source_dest_check = true
    subnet_id = "subnet-0f1f35c97902550da"
    tags = {
        "Name" = "Web Server from module"
        "Terraform" = "true"
    }
    tags_all = {
        "Name" = "Web Server from module"
        "Terraform" = "true"
    }
    tenancy = "default"
    user_data_replace_on_change = false
    vpc_security_group_ids = [
        "sg-08fc17f9b71be12fd",
    ]

    capacity_reservation_specification {
        capacity_reservation_preference = "open"
    }

    credit_specification {
        cpu_credits = "standard"
    }

    enclave_options {
        enabled = false
    }

    maintenance_options {
        auto_recovery = "default"
    }

    metadata_options {
        http_endpoint = "enabled"
        http_put_response_hop_limit = 1
        http_tokens = "optional"
        instance_metadata_tags = "disabled"
    }

    root_block_device {
        delete_on_termination = true
        device_name = "/dev/sda1"
        encrypted = false
        iops = 100
        tags = {}
        throughput = 0
        volume_id = "vol-04f7e86e0d3cdcd7a"
        volume_size = 8
        volume_type = "gp2"
    }
}
```

I will introduce drift by manually changing this instance's Name tag from "Web Server from module" to refresh-demo, outside Terraform:

Now we run the refresh command:

```
$ terraform refresh
```

If we check the state for this instance again, we can see that the Name tag (look at tags and tags_all) has been updated to refresh-demo:

```
$ terraform state show module.my_server_module.aws_instance.web_server

# output
# module.my_server_module.aws_instance.web_server:
resource "aws_instance" "web_server" {
    ami = "ami-04a579d2f00bb4001"
    arn = "arn:aws:ec2:ca-central-1:490750479946:instance/i-0d09c23db0e4dbb51"
    associate_public_ip_address = true
    availability_zone = "ca-central-1a"
    cpu_core_count = 1
    cpu_threads_per_core = 1
    disable_api_termination = false
    ebs_optimized = false
    get_password_data = false
    hibernation = false
    id = "i-0d09c23db0e4dbb51"
    instance_initiated_shutdown_behavior = "stop"
    instance_state = "running"
    instance_type = "t2.micro"
    ipv6_address_count = 0
    ipv6_addresses = []
    monitoring = false
    primary_network_interface_id = "eni-01fef74a6aff22cac"
    private_dns = "ip-10-0-0-224.ca-central-1.compute.internal"
    private_ip = "10.0.0.224"
    public_ip = "3.97.16.128"
    secondary_private_ips = []
    security_groups = []
    source_dest_check = true
    subnet_id = "subnet-0f1f35c97902550da"
    tags = {
        "Name" = "refresh-demo"
        "Terraform" = "true"
    }
    tags_all = {
        "Name" = "refresh-demo"
        "Terraform" = "true"
    }
    tenancy = "default"
    user_data_replace_on_change = false
    vpc_security_group_ids = [
        "sg-08fc17f9b71be12fd",
    ]

    capacity_reservation_specification {
        capacity_reservation_preference = "open"
    }

    credit_specification {
        cpu_credits = "standard"
    }

    enclave_options {
        enabled = false
    }

    maintenance_options {
        auto_recovery = "default"
    }

    metadata_options {
        http_endpoint = "enabled"
        http_put_response_hop_limit = 1
        http_tokens = "optional"
        instance_metadata_tags = "disabled"
    }

    root_block_device {
        delete_on_termination = true
        device_name = "/dev/sda1"
        encrypted = false
        iops = 100
        tags = {}
        throughput = 0
        volume_id = "vol-04f7e86e0d3cdcd7a"
        volume_size = 8
        volume_type = "gp2"
    }
}
```

Lastly, I will destroy the infrastructure because I have no use for it anymore:

```
$ terraform destroy -auto-approve
```

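Please note that terraform refresh only updates the state to match manual changes to the resources. It does not touch your configuration. The configuration still sets the Name tag to "Web Server from module", so the next terraform apply would change the tag back. If you want a change to be permanent, modify the configuration and apply it.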
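The module's source code is not shown in this article, so the excerpt below is hypothetical. It assumes the module sets the tags directly on the instance. If the module instead takes the name as an input variable, you would change the value passed to the module. Only the tag values come from the state output above.

```hcl
# Hypothetical excerpt from the module that defines web_server.
# Other arguments (ami, instance_type, subnet_id, ...) are omitted.
resource "aws_instance" "web_server" {
  # ... other arguments unchanged ...

  tags = {
    Name      = "refresh-demo" # previously "Web Server from module"
    Terraform = "true"
  }
}
```

With the configuration updated, terraform plan should report no changes for this tag, because the configuration, the state, and the real instance all agree.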

Thank you for reading. We got pretty far into Terraform and I hope that people find these articles helpful. See you in the next article!
