The mission

This was a fun one. The request came from u/HamsterFlex on Reddit: he wanted to enter a zip code and get back the foreclosure auctions coming up for that zip code.

The website was

https://miamidade.realforeclose.com/index.cfm?zaction=USER&zmethod=CALENDAR which is specific to Miami-Dade in Florida. The cool thing is that the site covers quite a few other regions, so this code could easily be adapted to them.

I opted for a slightly different approach so it would cater to a broader audience. The scrape now just gets all auctions on dates after today in the current month and the following month. For each auction it tracks the status: either that the auction was canceled or the date of the auction.

The website in general was a bit tricky to work with. A lot of the HTML structure didn’t have unique CSS selectors for things like paging right and left, so I was forced to get more creative. Mission accomplished, though!

How-to for the less technical

If you are a non-technical person, the first thing to do is download and install Node.js. I tested this on Node 12, but it should work fine with Node 10 and most other versions of Node.

The next step is to download the repository. You’ll then need to open a command prompt and navigate to wherever the repository was downloaded. Run the following commands from that directory: npm i will install everything needed to run the script, and then npm start will run the script. It will then find the upcoming auctions for Miami-Dade and put them into a CSV file.

I realize that was a very quick explanation and if more is needed, please feel free to reach out to me.

The code

There are three main parts to this bit of code. The first is where I navigate to the base calendar page and check for any auctions for days greater than today and then navigate to the following month to get all auctions for that month.

const url = `https://www.${regionalDomain}.realforeclose.com/index.cfm?zaction=USER&zmethod=CALENDAR`;

const browser = await puppeteer.launch();
const page = await browser.newPage();
await page.goto(url);

const auctions: any[] = [];
const nextMonthDate = getDateWithFollowingMonth();

await handleMonth(page, auctions);

await page.goto(`https://www.${regionalDomain}.realforeclose.com/index.cfm?zaction=user&zmethod=calendar&selCalDate=${nextMonthDate}`);

// Let's do it for the following month as well
await handleMonth(page, auctions);

console.log('Total auctions', auctions.length);

await browser.close();

const csv = json2csv.parse(auctions);

fs.writeFile('auctions.csv', csv, async (err) => {
    if (err) {
        console.log('err while saving file', err);
    }
});

regionalDomain is a global variable at the top of the script that can be changed to whatever the location is. There were a lot of other locations besides “miamidade” that included places like Denver and counties in Arizona.
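The getDateWithFollowingMonth helper isn’t shown in this post. A minimal sketch of what such a helper could look like is below. The function name firstOfFollowingMonth is made up for illustration, and the MM/DD/YYYY format for selCalDate is an assumption; it is worth checking against the URL the calendar’s own next-month link produces.

```typescript
// Hypothetical stand-in for getDateWithFollowingMonth; the author's version is not shown.
// ASSUMPTION: the selCalDate query parameter accepts an MM/DD/YYYY string.
function firstOfFollowingMonth(from: Date = new Date()): string {
  // Asking for month + 1 lets Date roll December over into January of the next year.
  const next = new Date(from.getFullYear(), from.getMonth() + 1, 1);
  const pad = (n: number): string => (n < 10 ? `0${n}` : String(n));
  return `${pad(next.getMonth() + 1)}/${pad(next.getDate())}/${next.getFullYear()}`;
}

console.log(firstOfFollowingMonth(new Date(2020, 0, 15)));  // 02/01/2020
console.log(firstOfFollowingMonth(new Date(2019, 11, 31))); // 01/01/2020
```

Building the date from year, month + 1 and day 1 avoids the end-of-month problem where adding a month to January 31 lands in March.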

So I handle the rest in a few steps. The first part handles the calendar for the month. It finds all of the auction days so I can get the list of auctions for each day. There aren’t any links associated with these days, so I just grab the dayid from each one, loop through them, and navigate directly to those pages.

async function handleMonth(page: Page, auctions: any[]) {
    await page.waitForSelector('.CALDAYBOX');

    const dayids = await page.$$eval('.CALSELF', elements => elements.map(element => element.getAttribute('dayid')));

    const baseDayPage = `https://www.${regionalDomain}.realforeclose.com/index.cfm?zaction=AUCTION&Zmethod=PREVIEW&AUCTIONDATE=`;

    for (let dayid of dayids) {
        // check if dayid is greater than today
        if (new Date(dayid) > new Date()) {
            await Promise.all([page.goto(`${baseDayPage}${dayid}`), page.waitForNavigation({ waitUntil: 'networkidle2' })]);

            await handleAuction(page, auctions);

            console.log('Finished checking day:', dayid, 'Total auctions now:', auctions.length);
        }
    }
}
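The date check inside the loop can also be pulled out into a small function, which makes it easier to try against sample values. This is only a sketch: upcomingDayIds is a made-up name, and it assumes dayid is a date string the Date constructor can parse (something like 01/20/2020). Since getAttribute returns null when the attribute is missing, the sketch skips those entries.

```typescript
// Hypothetical helper: keep only the dayid values that parse to a date later than `now`.
// ASSUMPTION: dayid is a date string that the Date constructor can parse.
function upcomingDayIds(dayids: Array<string | null>, now: Date = new Date()): string[] {
  const upcoming: string[] = [];
  for (const dayid of dayids) {
    // getAttribute returns null when the element has no dayid attribute.
    if (dayid === null) continue;
    const time = new Date(dayid).getTime();
    // An unparseable string gives NaN, and any comparison with NaN is false, so it is skipped.
    if (time > now.getTime()) upcoming.push(dayid);
  }
  return upcoming;
}

const sample = ['01/10/2020', null, 'not a date', '01/20/2020'];
console.log(upcomingDayIds(sample, new Date(2020, 0, 15))); // [ '01/20/2020' ]
```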

A neat little trick I learned this time for handling navigation and waiting for the page to load (since there is so much JavaScript/AJAX on this site) is from here. This way I make sure that everything is loaded before I continue with my code. I use the trick here: await Promise.all([page.goto(`${baseDayPage}${dayid}`), page.waitForNavigation({ waitUntil: 'networkidle2' })]);
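For what it’s worth, page.goto takes a waitUntil option of its own, so a possible alternative is to pass networkidle2 straight to goto. The sketch below is not what the scrape uses and I haven’t compared the two on this site. The Navigable interface and the gotoAndSettle name are made up so the example stands alone without Puppeteer installed; a real Puppeteer Page should satisfy the interface.

```typescript
// Structural subset of Puppeteer's Page, declared here so the sketch compiles on its own.
type LifecycleEvent = 'load' | 'domcontentloaded' | 'networkidle0' | 'networkidle2';

interface Navigable {
  goto(url: string, options?: { waitUntil?: LifecycleEvent }): Promise<unknown>;
}

// Hypothetical helper: navigate and resolve once the network has gone quiet.
// 'networkidle2' means no more than 2 network connections for at least 500 ms.
async function gotoAndSettle(page: Navigable, url: string): Promise<void> {
  await page.goto(url, { waitUntil: 'networkidle2' });
}
```

Inside handleMonth this would be called as await gotoAndSettle(page, `${baseDayPage}${dayid}`).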

Handling the page with all the auctions was probably the trickiest part of this whole scrape. I first attempted to just grab the total number of pages with const maxPagesForClosedAuctions = parseInt(await getPropertyBySelector(page, '#maxCA', 'innerHTML'));. From there I would just loop through the number of pages and click the “Next” button each time. The problem came from the HTML structure. There is a paginator at the top and bottom, and the two are EXACTLY the same as far as selectors go.

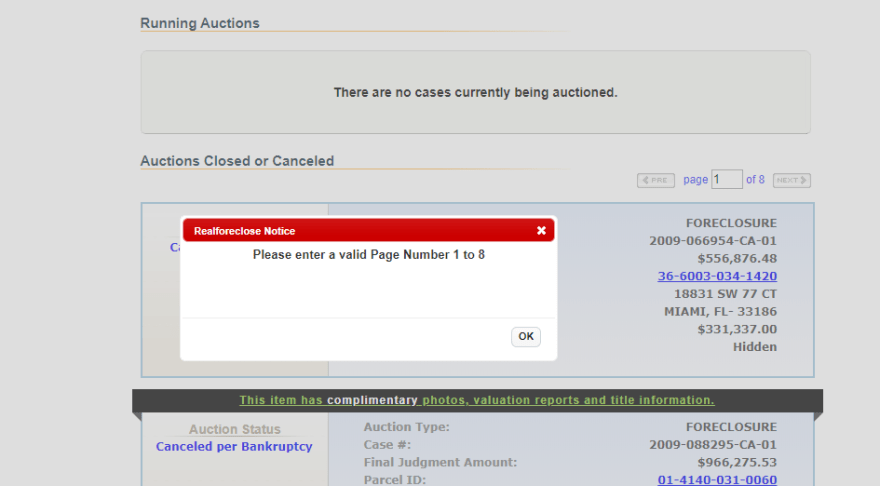

I tried to be clever and just type the page number I wanted and hit enter. The problem I ran into there is that, as far as I know, Puppeteer can’t enter a value as a number. It goes in as a string, this field only accepts numbers, and you get this error.

So I just had to get better with my selectors and do things that didn’t make a ton of sense to me, like selecting with const pageRight = await page.$('.Head_C .PageRight:nth-of-type(3)'); and const pageRight = await page.$('.Head_W .PageRight:nth-of-type(3)'); depending on what the auction types are. Here’s the whole chunk of code:

async function handleAuction(page: Page, auctions: any[]) {
    // Handle closed auctions
    const maxPagesForClosedAuctions = parseInt(await getPropertyBySelector(page, '#maxCA', 'innerHTML'));

    for (let i = 1; i < maxPagesForClosedAuctions; i++) {
        await handleAuctions(page, auctions);

        const pageRight = await page.$('.Head_C .PageRight:nth-of-type(3)');

        await Promise.all([pageRight.click(), await page.waitFor(750)]);
    }

    // Handle waiting auctions
    const maxPagesForWaitingAuctions = parseInt(await getPropertyBySelector(page, '#maxWA', 'innerHTML'));

    for (let i = 1; i < maxPagesForWaitingAuctions; i++) {
        await handleAuctions(page, auctions);

        const pageRight = await page.$('.Head_W .PageRight:nth-of-type(3)');

        await Promise.all([pageRight.click(), await page.waitFor(750)]);
    }
}
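One thing to watch with the loops above: they start at 1 and stop before the max, so handleAuctions runs one time fewer than the number of pages, and not at all when a list has a single page. If that ends up dropping auctions, one way to structure the paging is sketched below. forEachPage and its two callbacks are hypothetical names, not part of the scrape; the idea is to scrape every page and only click “Next” between pages.

```typescript
// Hypothetical generic paginator: scrape pages 1 through maxPages,
// moving forward between pages but not after the last one.
async function forEachPage(
  maxPages: number,
  scrapePage: (pageNumber: number) => Promise<void>,
  goToNextPage: () => Promise<void>
): Promise<void> {
  // If maxPages is NaN (for example, parseInt failed), the condition is false and nothing runs.
  for (let pageNumber = 1; pageNumber <= maxPages; pageNumber++) {
    await scrapePage(pageNumber);
    if (pageNumber < maxPages) {
      await goToNextPage();
    }
  }
}
```

In the closed-auctions case, scrapePage would call handleAuctions(page, auctions) and goToNextPage would do the '.Head_C .PageRight:nth-of-type(3)' click followed by the short wait. Whether handleAuctions then picks up items from both lists on the same page is something to check against the site before relying on this.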

Finally, I end with getting the labels from the individual auction. I had to handle the parcel ids differently since I wanted to pluck the href out of the anchor tag but it was overall pretty simple.

async function handleAuctions(page: Page, auctions: any[]) {
    const auctionsHandle = await page.$$('.AUCTION_ITEM');

    for (let auctionHandle of auctionsHandle) {
        let status;
        try {
            status = await getPropertyBySelector(auctionHandle, '.ASTAT_MSGB.Astat_DATA', 'innerHTML');
        }
        catch (e) {
            console.log('error getting status', e);
        }

        const auction: any = {
            status: status
        };

        const auctionRows = await auctionHandle.$$('table tr');

        for (let row of auctionRows) {
            let label = await getPropertyBySelector(row, 'th', 'innerHTML');
            label = label.trim().replace(' ', '');

            if (label === 'ParcelID:') {
                auction[label] = await getPropertyBySelector(row, 'td a', 'innerHTML');
                auction['ParcelLink'] = await getPropertyBySelector(row, 'a', 'href');
            }
            else if (label !== '') {
                label = label.trim().replace(' ', '');
                auction[label] = await getPropertyBySelector(row, 'td', 'innerHTML');
            }
            else {
                auction['address2'] = await getPropertyBySelector(row, 'td', 'innerHTML');
            }
        }

        auctions.push(auction);
    }
}
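A note on the label cleanup: replace with a plain string as the pattern only removes the first space it finds, which is why the function above calls it a second time for most labels. A label with three or more words would still keep a space. A small sketch of an alternative, with normalizeLabel as a made-up name, uses a global regular expression to remove all whitespace in one pass:

```typescript
// Hypothetical helper: trim a label and remove every whitespace character inside it.
// The trailing colon is kept so that checks like label === 'ParcelID:' still work.
function normalizeLabel(raw: string): string {
  return raw.trim().replace(/\s+/g, '');
}

// A string pattern only replaces the first match:
console.log('Final Judgment Amount:'.replace(' ', '')); // FinalJudgment Amount:
// The global regular expression removes them all:
console.log(normalizeLabel('Final Judgment Amount:'));  // FinalJudgmentAmount:
console.log(normalizeLabel('  Parcel ID: '));           // ParcelID:
```

The sample labels here are only for illustration; the column names that end up in the CSV depend on what the site actually puts in each th.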

Done! Once all the auctions get combined, it’ll put them into a csv in the root of the project and everyone is happy.
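The snippets in this post lean on a getPropertyBySelector helper that isn’t shown. Below is a rough sketch of how such a helper could be written, not necessarily how the one in the repository works. The Queryable, QueryableElement and PropertyHandle interfaces are made up so the example compiles without Puppeteer; they mirror the $, getProperty and jsonValue methods that Puppeteer’s Page, ElementHandle and JSHandle expose. Throwing when nothing matches is an assumption, chosen because the status lookup above is wrapped in a try/catch.

```typescript
// Structural subsets of Puppeteer's handle types, declared so the sketch stands alone.
interface PropertyHandle {
  jsonValue(): Promise<unknown>;
}
interface Queryable {
  $(selector: string): Promise<QueryableElement | null>;
}
interface QueryableElement extends Queryable {
  getProperty(name: string): Promise<PropertyHandle>;
}

// Hypothetical sketch: find the first match under `parent` and read one DOM property from it.
// ASSUMPTION: a missing element is reported by throwing.
async function getPropertyBySelector(parent: Queryable, selector: string, property: string): Promise<string> {
  const element = await parent.$(selector);
  if (element === null) {
    throw new Error(`No element matches selector: ${selector}`);
  }
  const handle = await element.getProperty(property);
  return String(await handle.jsonValue());
}
```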

The post Jordan Scrapes Real Foreclose appeared first on JavaScript Web Scraping Guy.
